NVIDIA Run:ai and Amazon Web Services have introduced an integration that lets developers scale and manage complex AI training workloads. Combining Amazon SageMaker HyperPod with NVIDIA Run:ai’s AI workload and GPU orchestration platform improves efficiency and flexibility.

Amazon SageMaker HyperPod provides a fully resilient, persistent cluster that’s purpose-built for large-scale distributed training and inference. It removes the undifferentiated heavy lifting involved in managing ML infrastructure and optimizes resource utilization across multiple GPUs, significantly reducing model training times. HyperPod supports any model architecture, allowing teams to scale their training jobs efficiently.

Amazon SageMaker HyperPod enhances resiliency by automatically detecting and handling infrastructure failures, so that training jobs can recover without significant downtime. Overall, it enhances productivity and accelerates the ML lifecycle.

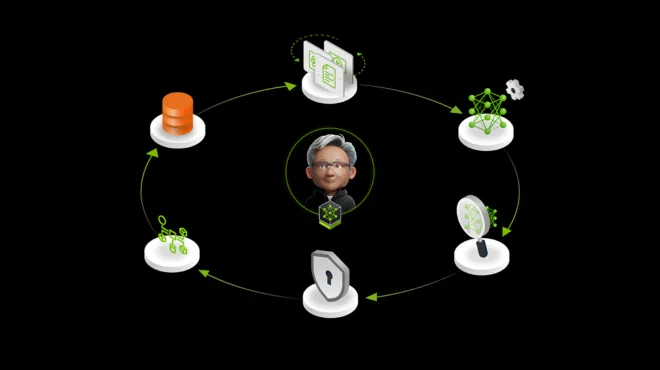

The NVIDIA Run:ai platform streamlines AI workload and GPU orchestration across hybrid environments (on premises and in public or private clouds), all from a single interface. This centralized approach benefits IT administrators who oversee GPU resources across different geographic locations and teams. It enables efficient use of on-premises, AWS Cloud, and hybrid GPU resources, and allows cloud bursts when demand increases.

Both AWS and NVIDIA Run:ai technical teams have successfully tested and validated the integration between Amazon SageMaker HyperPod and NVIDIA Run:ai. This integration allows users to leverage the flexibility of Amazon SageMaker HyperPod’s capabilities while benefiting from NVIDIA Run:ai’s GPU optimization, orchestration, and resource-management features.

With the integration of NVIDIA Run:ai and Amazon SageMaker HyperPod, organizations can extend their AI infrastructure across both on-premises and public or private cloud environments. Advantages include:

Unified GPU resource management across hybrid environments

NVIDIA Run:ai provides a single control plane that lets enterprises efficiently manage GPU resources across enterprise infrastructure and Amazon SageMaker HyperPod. Scientists can submit jobs to either on-premises or HyperPod nodes through the GUI or CLI. This centralized approach streamlines the orchestration of workloads, enabling admins to allocate GPU resources based on demand while keeping utilization high across both environments. Whether on premises or in the cloud, workloads can be prioritized, queued, and monitored from a single interface.

Enhanced scalability and flexibility

With NVIDIA Run:ai, organizations can easily scale their AI workloads by bursting to SageMaker HyperPod when additional GPU resources are needed. This hybrid cloud strategy allows businesses to scale dynamically without over-provisioning hardware, reducing costs while maintaining high performance. SageMaker HyperPod’s flexible infrastructure further supports large-scale model training and inference. That makes it ideal for enterprises looking to train or fine-tune foundation models such as Llama or Stable Diffusion.
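The bursting idea described above can be illustrated with a small toy model. This is only a sketch under stated assumptions, not NVIDIA Run:ai’s scheduler: the `Job` class, the `place_with_burst` function, and the pool names `on-prem` and `cloud` are hypothetical, and the policy (highest priority first, on-premises capacity first, overflow to the cloud pool) is a simplification chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    """Hypothetical job description used only for this illustration."""
    name: str
    gpus: int       # whole GPUs requested
    priority: int   # higher values are scheduled first


def place_with_burst(jobs, on_prem_gpus, cloud_gpus):
    """Place jobs on-premises first and burst to the cloud pool on overflow.

    Returns (placement, queued): a dict mapping job name to pool name,
    and a list of job names that fit in neither pool.
    """
    free = {"on-prem": on_prem_gpus, "cloud": cloud_gpus}
    placement = {}
    queued = []
    # Highest priority first; ties are broken by name for determinism.
    for job in sorted(jobs, key=lambda j: (-j.priority, j.name)):
        for pool in ("on-prem", "cloud"):  # prefer owned hardware
            if job.gpus <= free[pool]:
                free[pool] -= job.gpus
                placement[job.name] = pool
                break
        else:
            # No pool has enough free GPUs; the job waits in the queue.
            queued.append(job.name)
    return placement, queued


if __name__ == "__main__":
    jobs = [
        Job("train-llm", gpus=8, priority=2),
        Job("finetune", gpus=4, priority=1),
        Job("notebook", gpus=1, priority=3),
    ]
    print(place_with_burst(jobs, on_prem_gpus=8, cloud_gpus=8))
```

In this toy run the notebook takes one on-premises GPU, so the 8-GPU training job no longer fits locally and bursts to the cloud pool, while the smaller fine-tuning job still fits on premises.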

Resilient distributed training

NVIDIA Run:ai’s integration with Amazon SageMaker HyperPod enables efficient management of distributed training jobs across clusters. Amazon SageMaker HyperPod continuously monitors the health of GPU, CPU, and network resources. It automatically replaces faulty nodes to maintain system integrity. In parallel, NVIDIA Run:ai minimizes downtime by automatically resuming interrupted jobs from the last saved checkpoint, reducing the need for manual intervention and minimizing engineering overhead. This combination helps keep enterprise AI initiatives on track, even in the face of hardware or network issues.
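Resuming from the last saved checkpoint depends on the training job writing checkpoints it can later find and reload. The following is a minimal, framework-free sketch of that pattern, not the code NVIDIA Run:ai or HyperPod runs: the function names, the JSON file layout, and the `fail_at` parameter (which simulates a node failure) are all assumptions made for illustration. A real job would save model and optimizer state with its framework’s checkpoint API.

```python
import json
import os
import tempfile
from pathlib import Path


def save_checkpoint(ckpt_dir: Path, step: int, state: dict) -> Path:
    """Write a checkpoint atomically so a crash never leaves a partial file."""
    ckpt_dir.mkdir(parents=True, exist_ok=True)
    final_path = ckpt_dir / f"step_{step:08d}.json"
    fd, tmp_name = tempfile.mkstemp(dir=ckpt_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp_name, final_path)  # atomic rename within one directory
    return final_path


def load_latest_checkpoint(ckpt_dir: Path):
    """Return (step, state) from the newest checkpoint, or (0, {}) if none."""
    if not ckpt_dir.is_dir():
        return 0, {}
    # Zero-padded step numbers make lexicographic order match numeric order.
    candidates = sorted(ckpt_dir.glob("step_*.json"))
    if not candidates:
        return 0, {}
    with candidates[-1].open() as f:
        payload = json.load(f)
    return payload["step"], payload["state"]


def train(ckpt_dir: Path, total_steps: int, save_every: int, fail_at=None) -> int:
    """Toy training loop that resumes from the latest checkpoint on start."""
    step, state = load_latest_checkpoint(ckpt_dir)
    total = state.get("total", 0)
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated node failure")
        total += step  # stand-in for one real training step
        step += 1
        if step % save_every == 0:
            save_checkpoint(ckpt_dir, step, {"total": total})
    return total
```

Because the loop always starts by loading the newest checkpoint, restarting the same command after a failure repeats only the steps since the last save and produces the same final result as an uninterrupted run.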

Optimized resource utilization

NVIDIA Run:ai’s AI workload and GPU orchestration capabilities help ensure that AI infrastructure is used efficiently. Whether workloads run on Amazon SageMaker HyperPod clusters or on-premises GPUs, NVIDIA Run:ai’s advanced scheduling and GPU fractioning capabilities help optimize resource allocation, so organizations can run more workloads on fewer GPUs. This flexibility is especially valuable for enterprises managing fluctuating demand, such as compute needs that vary by time of day or season. NVIDIA Run:ai adapts to these shifts, prioritizing resources for inference during peak demand while balancing training requirements. That ultimately reduces idle time and maximizes GPU return on investment.
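To see why fractional allocation lets more workloads share fewer GPUs, consider a simple bin-packing sketch. It uses a first-fit-decreasing heuristic, which is a textbook technique swapped in here for illustration and is not a description of NVIDIA Run:ai’s actual scheduling algorithm. The function name and the workload names are hypothetical, and each request is expressed as an integer percentage of one GPU.

```python
def pack_fractional_requests(requests, gpu_count):
    """Pack fractional GPU requests with a first-fit-decreasing heuristic.

    requests: dict mapping workload name -> percent of one GPU (1..100).
    Returns (placement, pending): workload name -> GPU index, and the list
    of workloads that did not fit on any GPU.
    """
    free = [100] * gpu_count  # remaining percent on each GPU
    placement = {}
    pending = []
    # Largest requests first; ties are broken by name for determinism.
    for name, pct in sorted(requests.items(), key=lambda kv: (-kv[1], kv[0])):
        if not 1 <= pct <= 100:
            raise ValueError(f"{name}: request must be 1..100 percent, got {pct}")
        for gpu, remaining in enumerate(free):
            if pct <= remaining:
                free[gpu] -= pct
                placement[name] = gpu
                break
        else:
            pending.append(name)
    return placement, pending


if __name__ == "__main__":
    requests = {
        "finetune": 100,
        "inference": 50,
        "eval": 50,
        "notebook-a": 25,
        "notebook-b": 25,
    }
    print(pack_fractional_requests(requests, gpu_count=3))
```

With whole-GPU allocation these five workloads would need five GPUs. In this sketch they fit on three, with half of the third GPU still free.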

As part of the validation process, NVIDIA Run:ai tested several key capabilities: hybrid and multi-cluster management, automatic job resumption after hardware failures, FSDP elastic PyTorch preemption, inference serving, Jupyter integration, and resiliency. For more details on how to deploy this integration in your environment, including configuration steps, infrastructure setup, and architecture, visit NVIDIA Run:ai on SageMaker HyperPod.

NVIDIA Run:ai is partnering with AWS to make it easier to manage and scale AI workloads across hybrid environments using Amazon SageMaker HyperPod. To learn how NVIDIA Run:ai and AWS can accelerate your AI initiatives, contact NVIDIA Run:ai today.