Database Operations in HIVE Using CLOUDERA - VMWARE Work Station

Last Updated :

17 Jan, 2021

We are going to create a database and create a table in our database. And will cover Database operations in HIVE Using CLOUDERA - VMWARE Work Station. Let's discuss one by one.

Introduction:

- Hive is an ETL tool that provides an SQL-like interface between the user and the Hadoop distributed file system which integrates Hadoop.

- It is built on top of Hadoop.

- It facilitates reading, writing, and handling wide datasets that stored in distributed storage and queried by Structure Query Language (SQL) syntax.

Requirements:

Cloudera:

Cloudera enables you to deploy and manage Apache Hadoop, manipulate and analyze your data, and keep that data secure and protected.

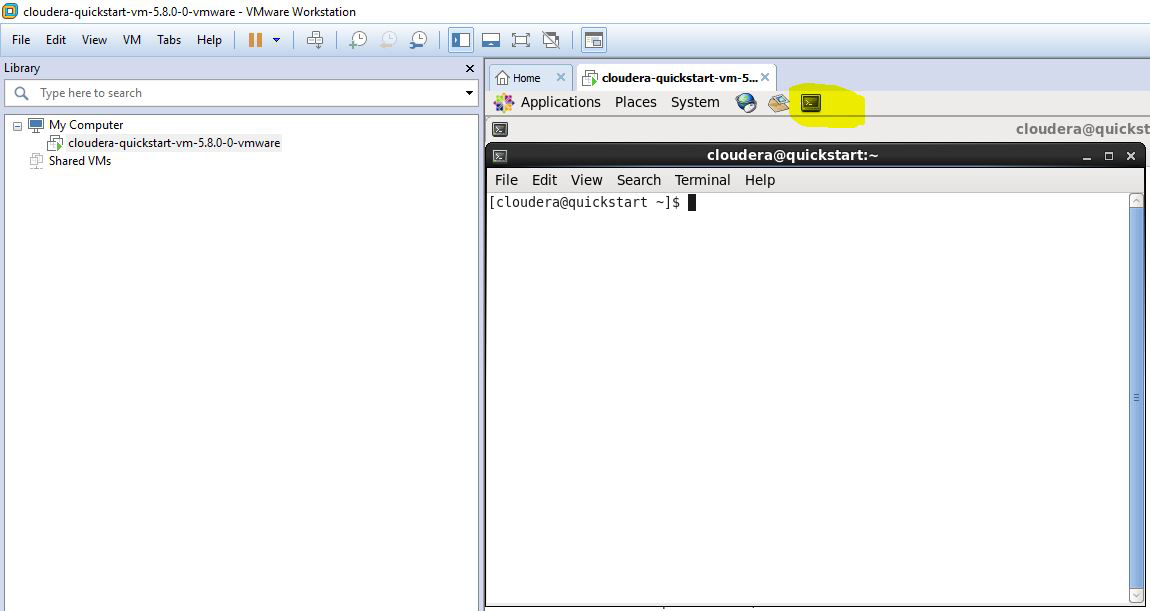

Steps to Open Cloudera after Installation

Step 1: On your desktop VMware workstation is available. Open that.

Step 2: Now you will get an interface. Click on open a virtual device.

Step 3: Select path - In this step, you have to select the path and file where you have downloaded the file.

Step 4: Now your virtual environment is creating.

Step 5: You can view your virtual machine details in this path.

Step 6: Now open the terminal to get started with hive commands.

Step 7: Now type hive in the terminal. It will give output as follows.

[cloudera@quickstart ~]$ hive

2020-12-09 20:59:24,314 WARN [main] mapreduce.TableMapReduceUtil:

The hbase-prefix-tree module jar containing PrefixTreeCodec is not present. Continuing without it.

Logging initialized using configuration in file:/etc/hive/conf.dist/hive-log4j.properties

WARNING: Hive CLI is deprecated and migration to Beeline is recommended.

hive>

Step 8: Now, you are all set and ready to start typing your hive commands.

Database Operations in HIVE

1. Create a database

Syntax:

create database database_name;

Example:

create database geeksportal;

Output:

2. Creating a table

Syntax:

create database.tablename(columns);

Example:

create table geeksportal.geekdata(id int,name string);

Here id and string are the two columns.

Output :

3. Display Database

Syntax:

show databases;

Output: Display the databases created.

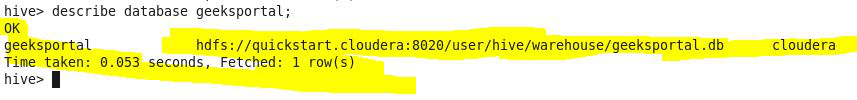

4. Describe Database

Syntax:

describe database database_name;

Example:

describe database geeksportal;

Output: Display the HDFS path of a particular database.

Similar Reads

Apache HIVE - Database Options Apache hive is a data-warehousing tool built on top of Hadoop. The structured data can be handled with the Hive query language. In this article, we are going to see options that are available with databases in the Hive. The database is used for storing information. The hive will create a directory f

4 min read

Inserting data using a CSV file in Cassandra In this article, we will discuss how you can insert data into the table using a CSV file. And we will also cover the implementation with the help of examples. Let's discuss it one by one. Pre-requisite - Introduction to Cassandra Introduction :If you want to store data in bulk then inserting data fr

2 min read

Apache Hive - Static Partitioning With Examples Partitioning in Apache Hive is very much needed to improve performance while scanning the Hive tables. It allows a user working on the hive to query a small or desired portion of the Hive tables. Suppose we have a table student that contains 5000 records, and we want to only process data of students

5 min read

Table Partitioning in Cassandra In this article, we are going to cover how we can our data access on the basis of partitioning and how we can store our data uniquely in a cluster. Let's discuss one by one. Pre-requisite — Data Distribution Table Partitioning : In table partitioning, data can be distributed on the basis of the part

2 min read

Apache Hive - Getting Started With HQL Database Creation And Drop Database Pre-requisite: Hive 3.1.2 Installation, Hadoop 3.1.2 Installation HiveQL or HQL is a Hive query language that we used to process or query structured data on Hive. HQL syntaxes are very much similar to MySQL but have some significant differences. We will use the hive command, which is a bash shell sc

3 min read

Import and Export Data using SQOOP SQOOP is basically used to transfer data from relational databases such as MySQL, Oracle to data warehouses such as Hadoop HDFS(Hadoop File System). Thus, when data is transferred from a relational database to HDFS, we say we are importing data. Otherwise, when we transfer data from HDFS to relation

3 min read