Implement Value Iteration in Python

Last Updated: 08 Jul, 2025

Value iteration is a fundamental reinforcement learning (RL) algorithm for finding the optimal policy of a Markov Decision Process (MDP). It repeatedly updates the value of each state by considering every available action and every possible next state, refining its estimates until they converge to the optimal value function. From that value function, an agent can read off the strategy that maximizes its expected cumulative reward over time. In this article, we implement value iteration in Python and break the algorithm down step by step.

Understanding Markov Decision Processes (MDPs)

Before moving to the value iteration algorithm, it's important to understand the basics of a Markov Decision Process, which is defined by:

- States (S): A set of all possible situations in the environment.

- Actions (A): A set of actions that an agent can take.

- Transition Model (P): The probability P(s′∣s, a) of transitioning from state s to state s′ after taking action a.

- Reward Function (R): The immediate reward received after transitioning from state s to state s′ due to action a.

- Discount Factor (γ): A factor between 0 and 1 that discounts future rewards.

The goal in an MDP is to find an optimal policy π, a mapping from states to actions, that maximizes the agent's expected cumulative discounted reward over time.
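To make the role of the discount factor concrete, here is a minimal sketch of the discounted return for a finite reward sequence. The function name `discounted_return` and the reward values are assumptions chosen only for illustration.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**t * r_t over a finite sequence of rewards."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))

# Hypothetical reward sequence, used only to illustrate discounting
rewards = [10, 7, 10]

far_sighted = discounted_return(rewards, gamma=0.9)  # 10 + 0.9*7 + 0.81*10, about 24.4
myopic = discounted_return(rewards, gamma=0.0)       # only the first reward counts

print(far_sighted, myopic)
```

A γ close to 1 makes the agent value later rewards almost as much as immediate ones, while γ = 0 makes it care only about the next reward.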

Key Steps of the Value Iteration Algorithm

1. Initialization

Start by initializing the value function V(s) for all states. Typically, this value is set to zero for all states at the beginning.

2. Value Update

Iteratively update the value function using the Bellman equation:

V_{k+1}(s) = \max_{a \in A} \sum_{s'} P(s'|s,a) \left[ R(s,a,s') + \gamma V_k(s') \right]

For each action a, the sum is the expected cumulative reward of taking a in state s, transitioning to state s′ and then acting according to the current value estimate thereafter. The max keeps the value of the best action.
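A single application of this update to one state can be sketched as follows. The two-state MDP, the state and action names, and the helper `bellman_backup` are hypothetical and exist only to show how the expectation over next states and the max over actions combine.

```python
def bellman_backup(s, V, actions, P, R, gamma):
    """Return max over a of sum over s' of P(s'|s,a) * (R(s,a,s') + gamma * V(s'))."""
    return max(
        sum(p * (R[(s, a, s_next)] + gamma * V[s_next])
            for s_next, p in P[(s, a)].items())
        for a in actions
    )

# Hypothetical MDP fragment: transitions and rewards out of state "A" only
P = {
    ("A", "stay"): {"A": 1.0},
    ("A", "move"): {"A": 0.2, "B": 0.8},
}
R = {
    ("A", "stay", "A"): 1.0,
    ("A", "move", "A"): 0.0,
    ("A", "move", "B"): 2.0,
}
V = {"A": 0.0, "B": 5.0}  # current value estimates V_k

# stay: 1.0 * (1 + 0.9*0)                     = 1.0
# move: 0.2 * (0 + 0.9*0) + 0.8 * (2 + 0.9*5) = 5.2
new_value = bellman_backup("A", V, ["stay", "move"], P, R, gamma=0.9)
print(new_value)  # about 5.2, the value of "move"
```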

3. Convergence Check

Continue iterating until the value function converges, i.e., until the largest change in V(s) across all states between two successive iterations is smaller than a predefined threshold ϵ.
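The stopping rule can be illustrated on the smallest possible case. The sketch below assumes a hypothetical one-state MDP whose only action loops back with reward 1, so the exact value is 1 / (1 − γ). It also compares the remaining error with the standard bound ϵγ / (1 − γ), which is stated here as a known result rather than derived.

```python
gamma, epsilon = 0.9, 0.01

V, sweeps = 0.0, 0
while True:
    V_new = 1.0 + gamma * V   # Bellman update for the single state
    delta = abs(V_new - V)    # change between successive iterations
    V, sweeps = V_new, sweeps + 1
    if delta < epsilon:
        break

V_star = 1.0 / (1.0 - gamma)             # exact fixed point for this toy problem
error = abs(V_star - V)                  # distance to the true value at stopping time
bound = epsilon * gamma / (1.0 - gamma)  # standard bound on the remaining error

print(sweeps, V, error, bound)
```

In this example the remaining error is larger than ϵ itself, so a small ϵ does not mean the values are within ϵ of optimal. When γ is close to 1, ϵ should be chosen with the factor γ / (1 − γ) in mind.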

Once the value function has converged, the optimal policy π(s) can be derived by selecting the action that maximizes the expected cumulative reward for each state:

\pi^*(s) = \arg\max_{a \in A} \sum_{s'} P(s'|s,a) \left[ R(s,a,s') + \gamma V^*(s') \right]
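If the bracketed sum for each state-action pair is stored in a table Q(s, a), extracting the policy reduces to an argmax per state. The sketch below assumes a hypothetical nested dictionary `Q` with made-up values. The helper name `greedy_policy` is also an assumption.

```python
def greedy_policy(Q):
    """For each state, pick the action with the largest Q-value."""
    return {s: max(q_s, key=q_s.get) for s, q_s in Q.items()}

# Hypothetical Q-values, for illustration only
Q = {
    "s1": {"a1": 4.0, "a2": 6.5},
    "s2": {"a1": 3.0, "a2": 3.0},  # tie between the two actions
}

policy = greedy_policy(Q)
print(policy)  # {'s1': 'a2', 's2': 'a1'}
```

Python's `max` returns the first maximal element it encounters, so ties are broken in favor of the action listed first. Any tied action is equally optimal.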

Example: Simple MDP Setup

Let’s implement the Value Iteration algorithm using a simple MDP with three states, S = \{s_1, s_2, s_3\}, and two actions, A = \{a_1, a_2\}.

1. Transition Model P(s′∣s,a)

- P(s_2|s_1, a_1) = 1

- P(s_3|s_1, a_2) = 1

- P(s_1|s_2, a_1) = 1

- P(s_3|s_2, a_2) = 1

- P(s_1|s_3, a_1) = 1

- P(s_2|s_3, a_2) = 1

2. Reward Function

- R(s_1, a_1, s_2) = 10

- R(s_1, a_2, s_3) = 5

- R(s_2, a_1, s_1) = 7

- R(s_2, a_2, s_3) = 3

- R(s_3, a_1, s_1) = 4

- R(s_3, a_2, s_2) = 8

Using the value iteration algorithm, we can find the optimal policy and value function for this MDP.
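Before writing the full algorithm, it can help to encode the MDP above as a lookup table and carry out the first update by hand. The dictionary layout and variable names below are assumptions made for this sketch. Starting from V_0(s) = 0, the first sweep simply picks the largest immediate reward in each state, whatever the value of γ.

```python
# (state, action) -> (next state, reward), copied from the two lists above
mdp = {
    ("s1", "a1"): ("s2", 10), ("s1", "a2"): ("s3", 5),
    ("s2", "a1"): ("s1", 7),  ("s2", "a2"): ("s3", 3),
    ("s3", "a1"): ("s1", 4),  ("s3", "a2"): ("s2", 8),
}
states = ["s1", "s2", "s3"]
actions = ["a1", "a2"]
gamma = 0.9  # assumed here; it does not affect the first sweep

# Every (state, action) pair should have exactly one successor
assert all((s, a) in mdp for s in states for a in actions)

V0 = {s: 0.0 for s in states}
V1 = {
    s: max(mdp[(s, a)][1] + gamma * V0[mdp[(s, a)][0]] for a in actions)
    for s in states
}
print(V1)  # {'s1': 10.0, 's2': 7.0, 's3': 8.0}
```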

Implementation of the Value Iteration Algorithm

Now, let’s implement the Value Iteration algorithm in Python.

Step 1: Define the MDP Components

In this step, we import NumPy and define the states, actions, transition model and reward function that govern the system. States s_1, s_2, s_3 are indexed 0, 1, 2 and actions a_1, a_2 are indexed 0, 1.

Python

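import numpy as np  # not strictly needed for this small example

states = [0, 1, 2]
actions = [0, 1]

# Deterministic dynamics of the example MDP: (state, action) -> next state
next_state = {(0, 0): 1, (0, 1): 2,
              (1, 0): 0, (1, 1): 2,
              (2, 0): 0, (2, 1): 1}

# Reward for each (state, action) pair of the example MDP
rewards = {(0, 0): 10, (0, 1): 5,
           (1, 0): 7, (1, 1): 3,
           (2, 0): 4, (2, 1): 8}

def transition_model(s, a, s_next):
    # P(s_next | s, a): 1 for the single reachable successor, 0 otherwise
    return 1 if next_state[(s, a)] == s_next else 0

def reward_function(s, a, s_next):
    # R(s, a, s_next): transitions that cannot occur yield 0
    return rewards[(s, a)] if next_state[(s, a)] == s_next else 0

gamma = 0.9     # discount factor
epsilon = 0.01  # convergence threshold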

Step 2: Value Iteration Process

Here we implement the Value Iteration process that iteratively updates the value of each state until convergence.

Python

def value_iteration(states, actions, transition_model, reward_function, gamma, epsilon):
    V = {s: 0 for s in states}  # initial value estimate for every state

    def expected_return(s, a):
        # Expected one-step reward plus discounted value of the next state
        return sum(transition_model(s, a, s_next) *
                   (reward_function(s, a, s_next) + gamma * V[s_next])
                   for s_next in states)

    while True:
        delta = 0
        for s in states:
            v = V[s]
            V[s] = max(expected_return(s, a) for a in actions)  # in-place Bellman update
            delta = max(delta, abs(v - V[s]))
        if delta < epsilon:  # largest change in this sweep is below the threshold
            break

    # Greedy policy with respect to the converged values
    policy = {s: max(actions, key=lambda a: expected_return(s, a)) for s in states}
    return policy, V
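The nested sums in value_iteration can also be written as array operations. The following is a minimal NumPy sketch, assuming the example MDP is stored in two arrays `P[s, a, s']` and `R[s, a, s']`. The names `dynamics` and `value_iteration_np` are assumptions of this sketch. Unlike the function above, which overwrites V[s] during a sweep, this version computes every new value from the previous sweep's values, so intermediate iterates can differ slightly even though both approach the same fixed point.

```python
import numpy as np

n_states, n_actions = 3, 2

# (state, action) -> (next state, reward) for the three-state example MDP
dynamics = {(0, 0): (1, 10), (0, 1): (2, 5),
            (1, 0): (0, 7),  (1, 1): (2, 3),
            (2, 0): (0, 4),  (2, 1): (1, 8)}

P = np.zeros((n_states, n_actions, n_states))  # P[s, a, s']
R = np.zeros((n_states, n_actions, n_states))  # R[s, a, s']
for (s, a), (s_next, r) in dynamics.items():
    P[s, a, s_next] = 1.0
    R[s, a, s_next] = r

def value_iteration_np(P, R, gamma=0.9, epsilon=0.01):
    V = np.zeros(P.shape[0])
    while True:
        # Q[s, a] = sum over s' of P[s, a, s'] * (R[s, a, s'] + gamma * V[s'])
        Q = np.einsum("ijk,ijk->ij", P, R + gamma * V)
        V_new = Q.max(axis=1)
        if np.max(np.abs(V_new - V)) < epsilon:
            # Greedy action per state, taken from the last Q table
            return Q.argmax(axis=1), V_new
        V = V_new

policy, V = value_iteration_np(P, R)
print("Policy (action index per state):", policy)
print("Values:", V)
```

For this MDP the greedy policy alternates between states 0 and 1 (action index 0 in both), and state 2 takes action index 1 to reach state 1.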

Step 3: Running the Algorithm

Now we call the value_iteration function with the defined parameters and display the results (optimal policy and value function).

Python

policy, value_function = value_iteration(states, actions, transition_model, reward_function, gamma, epsilon)

print("Optimal Policy:", policy)

print("Value Function:", value_function)

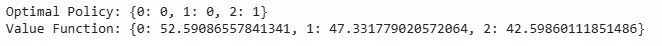

Output:

Result

ResultApplications of Value Iteration

Value iteration is used in various applications like:

- Robotics: For path planning and decision-making in uncertain environments.

- Game Development: For creating intelligent agents that can make optimal decisions.

- Finance: For optimizing investment strategies and managing portfolios.

- Operations Research: For solving complex decision-making problems in logistics and supply chain management.

- Healthcare: For optimizing treatment plans and balancing short-term costs with long-term health outcomes.

By mastering Value Iteration, we can solve complex decision-making problems in dynamic, uncertain environments and apply it to real-world challenges across various domains.
