(MSc Thesis) Sparse Coral Classification Using Deep Convolutional Neural Networks

- 1. Sparse Coral Classification Using Deep Convolutional Neural Networks. Mohamed Elawady, Neil Robertson, David Lane. Heriot-Watt University, VIBOT 7.

- 2. Outline (18 June 2014): Introduction • Problem Definition • Related Work • Methodology • Results • Conclusion and Future Work


- 4. Introduction. Fast facts about coral: It consists of tiny animals (not plants). It takes a long time to grow (0.5 to 2 cm per year). It exists in more than 200 countries. It generates 29.8 billion dollars per year through different ecosystem services. 10% of the world's coral reefs are dead, and more than 60% are at risk due to human-related activities. By 2050, all coral reefs will be in danger.

- 5. Introduction. Coral transplantation: Coral gardening involves SCUBA divers in coral reef reassembly and transplantation. Examples: the Reefscapers Project (2001) in the Maldives and Save Coral Reefs (2012) in Thailand. Limitations: time and depth per dive session. Robot-based strategy for deep-sea coral restoration: intelligent autonomous underwater vehicles (AUVs) grasp cold-water coral samples and replant them in damaged reef areas.


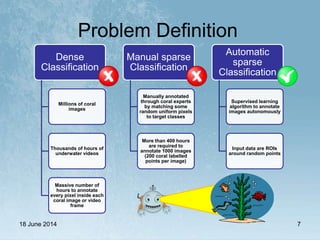

- 7. Problem Definition. Dense classification: There are millions of coral images and thousands of hours of underwater video, so annotating every pixel of each image or video frame would take a massive number of hours. Manual sparse classification: Coral experts annotate images by matching uniformly random pixels to target classes; more than 400 hours are required to annotate 1000 images (200 labelled points per image). Automatic sparse classification: A supervised learning algorithm annotates images autonomously; the input data are ROIs around the random points.
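As a rough check of the manual annotation cost quoted on this slide, the short Python sketch below converts the stated figures (400 hours, 1000 images, 200 points per image) into a lower bound on seconds per labelled point. The function name `seconds_per_point` is hypothetical and used only for illustration.

```python
def seconds_per_point(total_hours, images, points_per_image):
    """Average annotation time per labelled point, in seconds."""
    total_points = images * points_per_image
    return total_hours * 3600 / total_points


# Figures quoted on the slide: more than 400 hours for 1000 images
# with 200 labelled points each, so this is a lower bound.
cost = seconds_per_point(400, 1000, 200)
print(f"at least {cost:.1f} s per labelled point")
```

Since the slide says "more than 400 hours", the real per-point cost is somewhat higher than this figure.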

- 8. Problem Definition: MLC Dataset. Moorea Labeled Corals (MLC), University of California, San Diego (UCSD). Location: island of Moorea in French Polynesia. About 2000 images (2008, 2009, 2010). 200 labeled points per image.

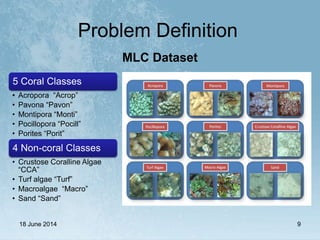

- 9. Problem Definition: MLC Dataset. 5 coral classes: Acropora “Acrop” • Pavona “Pavon” • Montipora “Monti” • Pocillopora “Pocill” • Porites “Porit”. 4 non-coral classes: Crustose Coralline Algae “CCA” • Turf algae “Turf” • Macroalgae “Macro” • Sand “Sand”.

- 10. Problem Definition: ADS Dataset. Atlantic Deep Sea (ADS), Heriot-Watt University (HWU). Location: North Atlantic, west of Scotland and Ireland. About 50 images (2012). 200 labeled points per image.

- 11. Problem Definition: ADS Dataset. 5 coral classes: DEAD “Dead Coral” • ENCW “Encrusting White Sponge” • LEIO “Leiopathes Species” • LOPH “Lophelia” • RUB “Rubble Coral”. 4 non-coral classes: BLD “Boulder” • DRK “Darkness” • GRAV “Gravel” • Sand “Sand”.


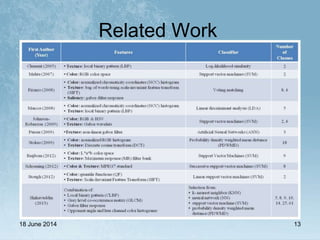

- 13. Related Work

- 14. Related Work

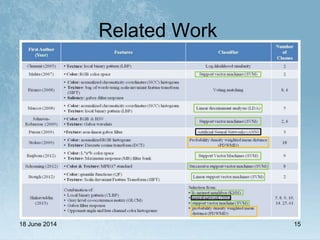

- 15. Related Work

- 16. Related Work: Sparse (Point-Based) Classification


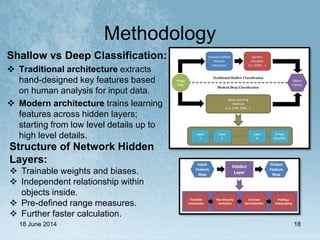

- 18. Methodology. Shallow vs deep classification: A traditional (shallow) architecture extracts hand-designed key features chosen through human analysis of the input data. A modern (deep) architecture learns its features across the hidden layers, starting from low-level details and building up to high-level details. Structure of network hidden layers: Trainable weights and biases. Independent relationship within objects inside. Pre-defined range measures. Faster calculation.

- 19. Methodology. “LeNet-5” by LeCun (1998): the first back-propagation convolutional neural network (CNN) for handwritten digit recognition.

- 20. Methodology. Recent CNN applications. Object classification: Buyssens (2012): cancer cell image classification. Krizhevsky (2013): Large Scale Visual Recognition Challenge 2012. Object recognition: Girshick (2013): PASCAL Visual Object Classes Challenge 2012. Syafeeza (2014): face recognition system. Pinheiro (2014): scene labelling. Figure captions: object detection system overview (Girshick); more than 10% better than the top contest performer.

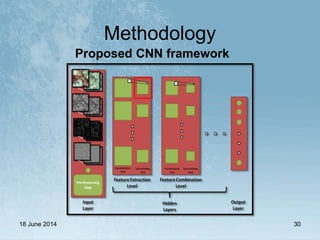

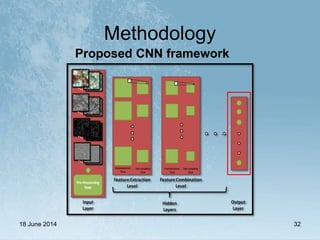

- 21. Methodology: Proposed CNN framework

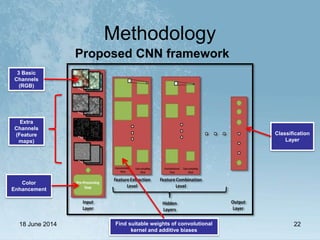

- 22. Methodology: Proposed CNN framework. Figure labels: 3 basic channels (RGB) • Extra channels (feature maps) • Color enhancement • Find suitable weights of convolutional kernel and additive biases • Classification layer

- 23. Methodology: Proposed CNN framework

- 24. Methodology. Hybrid patching: Three patches of different sizes (61x61, 121x121, 181x181) are extracted around each annotated point. Scaling the patches up to the size of the largest patch (181x181) blurs the inter-shape coral details while keeping the coral's edges and corners. Scaling the patches down to the size of the smallest patch (61x61) gives fast classification computation.
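The hybrid patching step can be illustrated with a minimal pure-Python sketch of the down-scaling variant (all three patches resized to 61x61). The slide does not say which interpolation or border handling was used, so nearest-neighbour resampling and border clamping are assumptions here, and the helper names `crop_patch`, `resize_nearest` and `hybrid_patches` are hypothetical.

```python
def crop_patch(image, row, col, size):
    """Crop a size x size patch centred on (row, col).

    Indices outside the image are clamped to the nearest border pixel
    (an assumption; the slides do not describe border handling).
    """
    half = size // 2
    height, width = len(image), len(image[0])
    patch = []
    for r in range(row - half, row + half + 1):
        rr = min(max(r, 0), height - 1)
        patch.append([image[rr][min(max(c, 0), width - 1)]
                      for c in range(col - half, col + half + 1)])
    return patch


def resize_nearest(patch, out_size):
    """Resize a square patch with centre-aligned nearest-neighbour sampling."""
    in_size = len(patch)
    idx = [((2 * i + 1) * in_size) // (2 * out_size) for i in range(out_size)]
    return [[patch[r][c] for c in idx] for r in idx]


def hybrid_patches(image, row, col, sizes=(61, 121, 181), target=61):
    """Return one target x target patch per requested patch size."""
    return [resize_nearest(crop_patch(image, row, col, s), target)
            for s in sizes]


# Synthetic single-channel image whose pixel value encodes its position.
image = [[r * 1000 + c for c in range(300)] for r in range(300)]
patches = hybrid_patches(image, 150, 150)
print([(len(p), len(p[0])) for p in patches])
```

Each returned patch covers a different field of view around the same annotated point but has the same 61x61 shape, which is what lets a single network consume all three.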

- 25. Methodology. Feature maps: Zero-phase Component Analysis (ZCA) whitening makes the data less redundant by removing correlations between adjacent pixels. The Weber Local Descriptor (WLD) gives a robust edge representation of high-texture images under noisy changes in the illumination of the image environment. Phase Congruency (PC) uses the Fourier transform to represent image features in a form that is high in information and low in redundancy.
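To make one of these feature maps concrete, the sketch below computes the differential-excitation component of WLD on a gray-scale image, using the commonly cited form arctan(sum of (neighbour - centre) / centre) over the 3x3 neighbourhood. The slides do not state which WLD variant or parameters were used, so this is only an illustration; the function name `differential_excitation` and the `eps` guard against division by zero are assumptions.

```python
import math


def differential_excitation(gray, eps=1e-6):
    """WLD differential excitation over the 8-neighbourhood of each pixel.

    Border pixels are left at 0.0 because they lack a full neighbourhood.
    """
    height, width = len(gray), len(gray[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            centre = gray[r][c]
            diff = sum(gray[r + dr][c + dc] - centre
                       for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                       if (dr, dc) != (0, 0))
            out[r][c] = math.atan(diff / (centre + eps))
    return out


# A bright pixel on a darker background gives a negative response.
spot = [[10, 10, 10],
        [10, 20, 10],
        [10, 10, 10]]
wld_map = differential_excitation(spot)
print(wld_map[1][1])
```

A map like this would be stacked next to the RGB channels as one extra input channel.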

- 26. Methodology. Color enhancement: Bazeille'06 addresses the difficulty of capturing good-quality underwater images under non-uniform lighting and underwater perturbation. Iqbal'07 corrects underwater lighting problems caused by light absorption, vertical polarization, and sea structure. Beijbom'12 compensates for color differences caused by underwater turbidity and illumination.

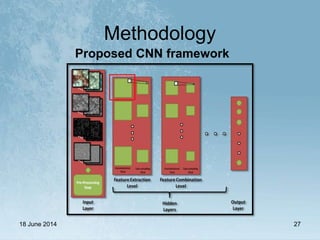

- 27. Methodology: Proposed CNN framework

- 28. Methodology. Kernel weights and bias initialization: The network initializes the biases to zero and draws the kernel weights from a uniform random distribution whose range depends on $N_{in}$, $N_{out}$ and $k$, where $N_{in}$ and $N_{out}$ are the numbers of input and output maps of each hidden layer (e.g. the number of input maps for layer 1 is 1 for a gray-scale image or 3 for a color image), and $k$ is the size of the convolution kernel of each hidden layer.
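The range formula itself is not preserved in this transcript. One widely used bound that depends on exactly these three quantities is ±sqrt(6 / ((N_in + N_out) · k²)); the sketch below uses it purely as an assumption, and the thesis may have used a different expression. The function name `init_layer` and the example sizes (1 input map, 6 output maps, 5x5 kernels) are hypothetical.

```python
import math
import random


def init_layer(n_in, n_out, k, rng=None):
    """Zero biases and uniformly distributed kernel weights.

    The bound sqrt(6 / ((n_in + n_out) * k * k)) is an assumed choice,
    not a formula taken from the slides.
    """
    rng = rng or random.Random(0)
    limit = math.sqrt(6.0 / ((n_in + n_out) * k * k))
    # kernels[i][j] is the k x k kernel from input map i to output map j.
    kernels = [[[[rng.uniform(-limit, limit) for _ in range(k)]
                 for _ in range(k)]
                for _ in range(n_out)]
               for _ in range(n_in)]
    biases = [0.0] * n_out
    return kernels, biases, limit


kernels, biases, limit = init_layer(n_in=1, n_out=6, k=5)
print(f"weights drawn from [-{limit:.4f}, +{limit:.4f}]")
```

Scaling the range by the fan-in and fan-out keeps early activations away from the flat parts of the sigmoid, which is the usual motivation for this kind of bound.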

- 29. Methodology. Convolution layer: The convolution layer constructs output maps by convolving trainable kernels over the input maps, extracting and combining features for better network behaviour: $x_j^l = f\left(\sum_i x_i^{l-1} * k_{ij}^l + b_j^l\right)$, where $x_i^{l-1}$ and $x_j^l$ are the output maps of the previous layer ($l-1$) and the current layer ($l$), $i$ and $j$ index the input and output maps, $k_{ij}^l$ is the convolution kernel weight between them, $f(\cdot)$ is the sigmoid activation function applied to the maps after summation, and $b_j^l$ is the additive bias of the current layer $l$ for output map $j$.
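The convolution layer described above can be sketched in plain Python as follows: each output map is the sigmoid of the summed convolutions of all input maps plus a bias. "Valid" borders (no padding) are an assumption, since the slide does not mention border handling, and the function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def conv2d_valid(x, kernel):
    """2-D convolution without padding; the kernel is flipped."""
    k = len(kernel)
    height, width = len(x), len(x[0])
    return [[sum(x[r + a][c + b] * kernel[k - 1 - a][k - 1 - b]
                 for a in range(k) for b in range(k))
             for c in range(width - k + 1)]
            for r in range(height - k + 1)]


def conv_layer(inputs, kernels, biases):
    """inputs: list of input maps; kernels[i][j]: kernel from map i to map j."""
    outputs = []
    for j, bias in enumerate(biases):
        acc = None
        for i, x in enumerate(inputs):
            y = conv2d_valid(x, kernels[i][j])
            acc = y if acc is None else [
                [p + q for p, q in zip(row_a, row_y)]
                for row_a, row_y in zip(acc, y)]
        outputs.append([[sigmoid(v + bias) for v in row] for row in acc])
    return outputs


# One 4x4 input map of ones, one 3x3 all-zero kernel, zero bias.
ones = [[1.0] * 4 for _ in range(4)]
zero_kernel = [[0.0] * 3 for _ in range(3)]
maps = conv_layer([ones], [[zero_kernel]], [0.0])
print(len(maps), len(maps[0]), len(maps[0][0]))
```

With a k x k kernel and no padding, each spatial dimension shrinks by k - 1, which is why the 4x4 input yields a 2x2 output map here.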

- 30. Methodology: Proposed CNN framework

- 31. Methodology. Down-sampling layer: The down-sampling layer reduces the dimensions of the feature maps through the network's layers, from the input image down to a sufficiently small feature representation, which speeds up the network's matrix calculations. It applies $h_n$, a non-overlapping averaging function of size $n \times n$ with neighbourhood weights $w$, to the convolved map $x$ of kernel number $j$ at layer $l$ to obtain the lower-dimensional output map $y$ of kernel number $j$ at layer $l$ (e.g. a 64x64 input map is reduced with $n=2$ to a 32x32 output map).
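A minimal sketch of the down-sampling step, assuming equal neighbourhood weights w = 1/n² (the slide names the weights but does not give their values); the function name `mean_pool` is hypothetical.

```python
def mean_pool(x, n=2):
    """Non-overlapping n x n averaging with equal weights 1 / n**2."""
    out_h, out_w = len(x) // n, len(x[0]) // n
    return [[sum(x[r * n + a][c * n + b]
                 for a in range(n) for b in range(n)) / (n * n)
             for c in range(out_w)]
            for r in range(out_h)]


small = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
print(mean_pool(small))

# The slide's own example: a 64x64 map pooled with n=2 becomes 32x32.
big = [[0.0] * 64 for _ in range(64)]
pooled = mean_pool(big, n=2)
print(len(pooled), len(pooled[0]))
```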

- 32. Methodology: Proposed CNN framework

- 33. Methodology. Learning rate: An adaptive learning rate is used instead of a constant one; it is updated according to the network's status and performance. In the update rule, $\alpha_n$ and $\alpha_{n-1}$ are the learning rates of the current and previous iterations (for the first iteration, the previous learning rate is the initial learning rate given as a network input), $n$ and $N$ are the current iteration number and the total number of iterations, $e_n$ is the back-propagated error of the current iteration, and $g(\cdot)$ is a linear limiting function that keeps the learning rate in the range (0,1].

- 34. Methodology. Error back-propagation: The network is trained by back-propagating a squared-error loss computed over all training samples and output classes, where $N$ and $C$ are the numbers of training samples and output classes, and $t$ and $y$ are the target and actual outputs.
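The loss formula is not preserved in this transcript. A common form of squared-error loss over N samples and C classes is E = ½ Σₙ Σ_c (t − y)²; the sketch below uses that form, and both the ½ factor and the absence of averaging over N are assumptions. The function names are hypothetical.

```python
def squared_error_loss(targets, outputs):
    """0.5 * sum over samples and classes of (target - output) ** 2."""
    return 0.5 * sum((t - y) ** 2
                     for t_row, y_row in zip(targets, outputs)
                     for t, y in zip(t_row, y_row))


def output_error(targets, outputs):
    """Gradient of the loss with respect to each output, i.e. y - t."""
    return [[y - t for t, y in zip(t_row, y_row)]
            for t_row, y_row in zip(targets, outputs)]


# Two samples, three classes, one-hot targets.
targets = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
outputs = [[0.5, 0.5, 0.0], [0.0, 1.0, 0.0]]
loss = squared_error_loss(targets, outputs)
print(loss)
```

With the ½ factor, the derivative of the loss with respect to each output is simply y − t, which is the quantity propagated back through the layers.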


- 36. Results: Parameters for Experimental Results

| Parameter | Value(s) |
| --- | --- |
| Ratio of training/test sets | 2:1 |
| Size of hybrid input image | (61 x 61), (121 x 121), (181 x 181) |
| Number of input channels | 3 (RGB); 4 (RGB + one of WLD, PC, ZCA); 6 (RGB + WLD + PC + ZCA) |
| Number of samples per class | 300 |
| Enhancement for RGB input | Bazeille'06, Iqbal'07, Beijbom'12, NoEnhance |
| Normalization method | min-max [-1,+1] |
| Initial learning rate | 1 |
| Network batch size | 3 |
| Number of network epochs | 10 |
| Number of hidden output maps | (6-12), (12-24), (24-48) |
| Size of last hidden output maps | 4 x 4 |
| Number of output classes | 9 |
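The min-max normalization to [-1,+1] listed among the parameters can be sketched as below. Whether the minimum and maximum were taken per patch, per channel, or over the whole dataset is not stated, so this per-list version is an assumption, and the function name `min_max_normalize` is hypothetical.

```python
def min_max_normalize(values, low=-1.0, high=1.0):
    """Linearly rescale values so min maps to low and max maps to high."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        # Constant input: map everything to the middle of the range.
        return [(low + high) / 2.0 for _ in values]
    scale = (high - low) / (vmax - vmin)
    return [low + (v - vmin) * scale for v in values]


pixels = [0, 64, 128, 255]
normalized = min_max_normalize(pixels)
print(normalized)
```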

- 37. Results: Experimental results on hybrid patching (MLC, ADS). Multi-size image patches rescaled to a unified size have lower error rates than single-size image patches. Up-scaling the multi-size patches gives the best results across the different measurements. Hybrid down-scaling (61) is finally selected for fast computation.


- 39. Results: Experimental results on feature maps (MLC, ADS). Combining the three feature-based maps gives slightly better classification results than the basic color channels without any supplementary channels. In conclusion, feature-based channels added to the basic color channels can be useful for coral discrimination in both datasets (MLC, ADS).

- 40. Results: Experimental results on color enhancement (MLC, ADS). Among the color enhancement algorithms, Bazeille'06 performs better than Iqbal'07 and Beijbom'12. Overall, raw image data without any enhancement is the best pre-processing choice for network classification.

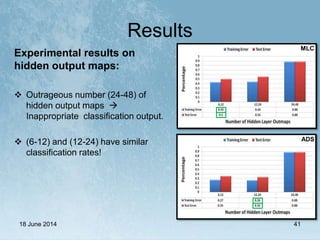

- 41. Results: Experimental results on hidden output maps (MLC, ADS). An excessive number (24-48) of hidden output maps leads to inappropriate classification output. (6-12) and (12-24) have similar classification rates.

- 42. Results: Summary for Experimental Results

| Parameter | Value(s) |
| --- | --- |
| Size of hybrid input image | (61 x 61), (121 x 121), (181 x 181) |
| Number of input channels | 3 (RGB); 4 (RGB + one of WLD, PC, ZCA); 6 (RGB + WLD + PC + ZCA) |
| Enhancement for RGB input | Bazeille'06, Iqbal'07, Beijbom'12, NoEnhance |
| Number of hidden output maps | (6-12), (12-24), (24-48) |

Updated Parameters for Final Results

| Parameter | Value |
| --- | --- |
| Number of network epochs | 50 |

- 43. Results: Final results (MLC, ADS). In the MLC dataset, the testing phase gives almost the same results across configurations, while the training phase gives better results with (12-24) hidden output maps and additional feature-based maps as supplementary channels. In the ADS dataset, the testing phase gives its best accuracy results with the same selected configuration.

- 44. Results: Final results, continued (MLC, ADS). In the MLC dataset, the best-classified classes are Acrop (coral) and Sand (non-coral), and the worst-classified are Pavon (coral) and Turf (non-coral). Pavon is misclassified as Monti or Macro, and Turf as Macro, CCA or Sand, because of similarities in their shape properties or growth environment. In the ADS dataset, DRK (non-coral) is classified perfectly because of its distinct nature (an almost plain dark-blue image), and LEIO (coral) is classified excellently because of its distinctive color (orange). Figure labels: 56 %, 81 %.


- 46. Conclusion and Future Work. Conclusion: • First application of deep learning techniques in underwater image processing. • Introduction of a new coral-labeled dataset, “Atlantic Deep Sea”, representing cold-water coral reefs. • Investigation of convolutional neural networks for handling noisy large-sized images and manipulating point-based multi-channel input data. • Production of two pending publications in ICPR-CVAUI 2014 and ACCV 2014. Future Work: • Composition of multiple deep convolutional models for N-dimensional data. • Development of a real-time image/video application for coral recognition and detection. • Code optimization and improvement to develop GPU computation for processing huge image datasets and edge enhancement for feature-based maps. • Intensive nature analysis of different coral classes in various aquatic environments.

- 47. References
  - A. S. M. Shihavuddin, N. Gracias, R. Garcia, A. Gleason, and B. Gintert, “Image-Based Coral Reef Classification and Thematic Mapping,” Remote Sensing, vol. 5, pp. 1809–1841, 2013.
  - O. Beijbom, P. J. Edmunds, D. I. Kline, B. G. Mitchell, and D. Kriegman, “Automated annotation of coral reef survey images,” 2012 IEEE CVPR, pp. 1170–1177, 2012.
  - Y. A. LeCun, L. Bottou, G. B. Orr, and K.-R. Müller, “Efficient backprop,” in Neural Networks: Tricks of the Trade, pp. 9–48, Springer, 2012.
  - R. Palm, “Prediction as a candidate for learning deep hierarchical models of data,” Technical University of Denmark, 2012.
  - Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, pp. 2278–2324, 1998.

- 48. Thank You!

- 49. Questions?!
