Conditional neural processes

- 1. DEEP LEARNING JP [DL Papers] http://deeplearning.jp/ Conditional Neural Processes (ICML 2018), Neural Processes (ICML 2018 Workshop). Kazuki Fujikawa, DeNA

- 2. • – Conditional Neural Processes (CNPs) • ICML 2018 • Marta Garnelo, Dan Rosenbaum, Christopher J. Maddison, Tiago Ramalho, David Saxton, Murray Shanahan, Yee Whye Teh, Danilo J. Rezende, S. M. Ali Eslami – Neural Processes (NPs) • ICML 2018 Workshop • Marta Garnelo, Jonathan Schwarz, Dan Rosenbaum, Fabio Viola, Danilo J. Rezende, S. M. Ali Eslami, Yee Whye Teh

- 5. • Published as a conference paper at ICLR 2017

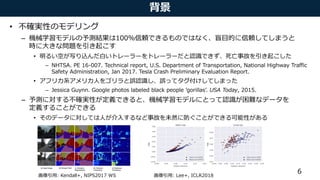

- 6. • – NHTSA. Technical report, Department of Transportation, National Highway Traffic Safety Administration. Tesla Crash Preliminary Evaluation Report • – J. Guynn. Google Photos labeled black people 'gorillas' • [Kendall+, NIPS] [Lee+, ICLR]

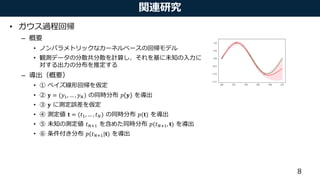

- 8. • $\mathbf{y} = (y_1, \dots, y_N)$: $p(\mathbf{y})$ • $\mathbf{t} = (t_1, \dots, t_N)$: $p(\mathbf{t})$ • $t_{N+1}$: $p(t_{N+1}, \mathbf{t})$ • $p(t_{N+1} \mid \mathbf{t})$

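- 9. • – $y_n$, $x_n$, $\boldsymbol{\phi}$, $\mathbf{w}$ » $y_n = \mathbf{w}^\top \boldsymbol{\phi}(x_n)$ » $\mathbf{w}$: $p(\mathbf{w}) = \mathcal{N}(\mathbf{w} \mid \mathbf{0}, \alpha^{-1} I)$ – $\mathbf{y} = (y_1, \dots, y_N)^\top$ » $\mathbf{y} = \Phi \mathbf{w}$, with $\Phi = \begin{pmatrix} \phi_1(x_1) & \cdots & \phi_M(x_1) \\ \vdots & \ddots & \vdots \\ \phi_1(x_N) & \cdots & \phi_M(x_N) \end{pmatrix}$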

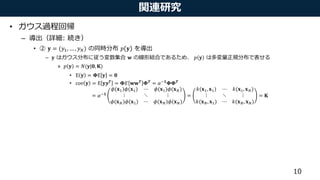

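- 10. • – • $\mathbf{y} = (y_1, \dots, y_N)^\top$: $p(\mathbf{y})$ – » $p(\mathbf{y}) = \mathcal{N}(\mathbf{y} \mid \mathbf{0}, K)$ • $\mathbb{E}[\mathbf{y}] = \Phi\, \mathbb{E}[\mathbf{w}] = \mathbf{0}$ • $\operatorname{cov}[\mathbf{y}] = \mathbb{E}[\mathbf{y}\mathbf{y}^\top] = \Phi\, \mathbb{E}[\mathbf{w}\mathbf{w}^\top]\, \Phi^\top = \alpha^{-1} \Phi \Phi^\top = K$, with $K_{nm} = \alpha^{-1} \boldsymbol{\phi}(x_n)^\top \boldsymbol{\phi}(x_m) = k(x_n, x_m)$, i.e. $K = \begin{pmatrix} k(x_1, x_1) & \cdots & k(x_1, x_N) \\ \vdots & \ddots & \vdots \\ k(x_N, x_1) & \cdots & k(x_N, x_N) \end{pmatrix}$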

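- 11. • – $t_n$, $\varepsilon_n$, $y_n$ » $t_n = y_n + \varepsilon_n$ • $\mathbf{t} = (t_1, \dots, t_N)^\top$: $p(\mathbf{t})$ – $p(\mathbf{t} \mid \mathbf{y})$ » $p(\mathbf{t} \mid \mathbf{y}) = \mathcal{N}(\mathbf{t} \mid \mathbf{y}, \beta^{-1} I)$ » $p(\mathbf{t}) = \int p(\mathbf{t} \mid \mathbf{y})\, p(\mathbf{y})\, d\mathbf{y} = \mathcal{N}(\mathbf{t} \mid \mathbf{0}, C_N)$, with $C_N = \begin{pmatrix} k(x_1, x_1) + \beta^{-1} & \cdots & k(x_1, x_N) \\ \vdots & \ddots & \vdots \\ k(x_N, x_1) & \cdots & k(x_N, x_N) + \beta^{-1} \end{pmatrix}$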

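- 12. • – • $t_{N+1}$: $p(t_{N+1}, \mathbf{t})$ – » $p(t_{N+1}, \mathbf{t}) = \mathcal{N}(t_{N+1}, \mathbf{t} \mid \mathbf{0}, C_{N+1})$, with $C_{N+1} = \begin{pmatrix} k(x_1, x_1) + \beta^{-1} & \cdots & k(x_1, x_{N+1}) \\ \vdots & \ddots & \vdots \\ k(x_{N+1}, x_1) & \cdots & k(x_{N+1}, x_{N+1}) + \beta^{-1} \end{pmatrix} = \begin{pmatrix} C_N & \mathbf{k} \\ \mathbf{k}^\top & c \end{pmatrix}$ • $p(t_{N+1} \mid \mathbf{t})$ – » $p(t_{N+1} \mid \mathbf{t}) = \dfrac{p(t_{N+1}, \mathbf{t})}{p(\mathbf{t})} = \dfrac{\mathcal{N}(t_{N+1}, \mathbf{t} \mid \mathbf{0}, C_{N+1})}{\mathcal{N}(\mathbf{t} \mid \mathbf{0}, C_N)} = \dfrac{|C_N|^{1/2}}{(2\pi)^{1/2} |C_{N+1}|^{1/2}} \exp\left\{ -\tfrac{1}{2} (\mathbf{t}^\top, t_{N+1})\, C_{N+1}^{-1}\, (\mathbf{t}^\top, t_{N+1})^\top + \tfrac{1}{2} \mathbf{t}^\top C_N^{-1} \mathbf{t} \right\}$ – » $p(t_{N+1} \mid \mathbf{t}) = \mathcal{N}\left(t_{N+1} \mid \mathbf{k}^\top C_N^{-1} \mathbf{t},\; c - \mathbf{k}^\top C_N^{-1} \mathbf{k}\right)$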
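
The predictive distribution on this slide, $\mathcal{N}(t_{N+1} \mid \mathbf{k}^\top C_N^{-1} \mathbf{t},\, c - \mathbf{k}^\top C_N^{-1} \mathbf{k})$, can be sketched in a few lines of NumPy. This is only an illustration: the squared-exponential kernel, the 1-D inputs, and the names `rbf_kernel` and `gp_predict` are assumptions, not something the slides specify.

```python
import numpy as np


def rbf_kernel(xa, xb, length_scale=1.0):
    """Squared-exponential kernel k(x, x') for 1-D inputs (illustrative choice)."""
    diff = xa[:, None] - xb[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)


def gp_predict(x_train, t_train, x_new, beta=100.0, length_scale=1.0):
    """Predictive mean and variance of t_{N+1} at each point of x_new."""
    n = len(x_train)
    # C_N = K + beta^{-1} I
    c_n = rbf_kernel(x_train, x_train, length_scale) + np.eye(n) / beta
    # Column j holds the vector k for the j-th new input.
    k = rbf_kernel(x_train, x_new, length_scale)
    # c = k(x_new, x_new) + beta^{-1}; the kernel above has k(x, x) = 1.
    c = 1.0 + 1.0 / beta
    chol = np.linalg.cholesky(c_n)
    # alpha = C_N^{-1} t, computed with two triangular solves.
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, t_train))
    v = np.linalg.solve(chol, k)  # L^{-1} k, so that |v|^2 = k^T C_N^{-1} k
    mean = k.T @ alpha
    var = c - np.sum(v * v, axis=0)
    return mean, var
```

The Cholesky factorisation costs $\mathcal{O}(N^3)$ in the number of observations, which is the scaling issue that motivates CNPs later in the deck.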

- 13. • $n$, $m$: $\mathcal{O}((n + m)^3)$

- 14. • Deep Kernel Learning

  … encodes the inductive bias that function values closer together in the input space are more correlated. The complexity of the functions in the input space is determined by the interpretable length-scale hyperparameter $\ell$. Shorter length-scales correspond to functions which vary more rapidly with the inputs $x$. The structure of our data is discovered through learning interpretable kernel hyperparameters. The marginal likelihood of the targets $\mathbf{y}$, the probability of the data conditioned only on kernel hyperparameters $\gamma$, provides a principled probabilistic framework for kernel learning:

  $$\log p(\mathbf{y} \mid \gamma, X) \propto -\left[ \mathbf{y}^\top (K_\gamma + \sigma^2 I)^{-1} \mathbf{y} + \log |K_\gamma + \sigma^2 I| \right], \tag{4}$$

  where we have used $K_\gamma$ as shorthand for $K_{X,X}$ given $\gamma$. Note that the expression for the log marginal likelihood in Eq. (4) pleasingly separates into automatically calibrated model fit and complexity terms (Rasmussen and Ghahramani, 2001). Kernel learning is performed by optimizing Eq. (4) with respect to $\gamma$. The computational bottleneck for inference is solving the linear system $(K_{X,X} + \sigma^2 I)^{-1} \mathbf{y}$, and for kernel learning is computing the log determinant $\log |K_{X,X} + \sigma^2 I|$. The standard approach is to compute the Cholesky decomposition of the $n \times n$ matrix $K_{X,X}$, …

  In this section we show how we can construct kernels which encapsulate the expressive power of deep architectures, and how to learn the properties of these kernels as part of a scalable probabilistic GP framework. Specifically, starting from a base kernel $k(x_i, x_j \mid \theta)$ with hyperparameters $\theta$, we transform the inputs (predictors) $x$ as

  $$k(x_i, x_j \mid \theta) \to k(g(x_i, w), g(x_j, w) \mid \theta, w), \tag{5}$$

  where $g(x, w)$ is a non-linear mapping given by a deep architecture, such as a deep convolutional network, parametrized by weights $w$. The popular RBF kernel (Eq. (3)) is a sensible choice of base kernel $k(x_i, x_j \mid \theta)$. For added flexibility, we also propose to use spectral mixture base kernels (Wilson and Adams, 2013):

  $$k_{\mathrm{SM}}(x, x' \mid \theta) = \sum_{q=1}^{Q} a_q \frac{|\Sigma_q|^{1/2}}{(2\pi)^{D/2}} \exp\left( -\tfrac{1}{2} \left\| \Sigma_q^{1/2} (x - x') \right\|^2 \right) \cos \langle x - x', 2\pi \mu_q \rangle. \tag{6}$$

  For kernel learning, we use the chain rule to compute derivatives of the log marginal likelihood $\mathcal{L}$ with respect to the deep kernel hyperparameters:

  $$\frac{\partial \mathcal{L}}{\partial \theta} = \frac{\partial \mathcal{L}}{\partial K_\gamma} \frac{\partial K_\gamma}{\partial \theta}, \qquad \frac{\partial \mathcal{L}}{\partial w} = \frac{\partial \mathcal{L}}{\partial K_\gamma} \frac{\partial K_\gamma}{\partial g(x, w)} \frac{\partial g(x, w)}{\partial w}.$$

  The implicit derivative of the log marginal likelihood with respect to our $n \times n$ data covariance matrix $K_\gamma$ is given by

  $$\frac{\partial \mathcal{L}}{\partial K_\gamma} = \frac{1}{2} \left( K_\gamma^{-1} \mathbf{y} \mathbf{y}^\top K_\gamma^{-1} - K_\gamma^{-1} \right), \tag{7}$$

  where we have absorbed the noise covariance $\sigma^2 I$ into our covariance matrix, and treat it as part of the base kernel hyperparameters $\theta$. $\partial K_\gamma / \partial \theta$ are the derivatives of the deep kernel with respect to the base kernel hyperparameters (such as length-scale), conditioned on the fixed transformation of the inputs $g(x, w)$. Similarly, …

  [Figure: Deep Kernel Learning. A Gaussian process with a deep kernel, parametrized by $\gamma = \{w, \theta\}$; all parameters are learned jointly through the marginal likelihood of the Gaussian process.]
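
The deep-kernel construction of Eq. (5) and the Cholesky-based evaluation of the log marginal likelihood in Eq. (4) can be sketched as follows. Everything specific here is an assumption made for illustration: `feature_map` is a hypothetical one-layer tanh stand-in for $g(x, w)$, the base kernel is an RBF, and the function returns the full Gaussian log density (including the factor $\tfrac{1}{2}$ and the $2\pi$ constant that Eq. (4) drops under the proportionality sign).

```python
import numpy as np


def feature_map(x, w):
    """Hypothetical stand-in for g(x, w): a single tanh layer."""
    return np.tanh(x @ w)


def rbf(a, b, length_scale):
    """RBF base kernel between the rows of a and the rows of b."""
    sq = np.sum(a ** 2, axis=1)[:, None] + np.sum(b ** 2, axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-0.5 * np.maximum(sq, 0.0) / length_scale ** 2)


def log_marginal_likelihood(x, y, w, length_scale, noise_var):
    """log p(y | gamma, X) for a zero-mean GP with kernel k(g(x, w), g(x', w))."""
    n = len(y)
    z = feature_map(x, w)                       # inputs transformed as in Eq. (5)
    k = rbf(z, z, length_scale) + noise_var * np.eye(n)
    chol = np.linalg.cholesky(k)                # the O(n^3) bottleneck noted in the text
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y))   # (K + sigma^2 I)^{-1} y
    log_det = 2.0 * np.sum(np.log(np.diag(chol)))               # log |K + sigma^2 I|
    return -0.5 * (y @ alpha + log_det + n * np.log(2.0 * np.pi))
```

In an actual deep kernel learning setup the gradients of this quantity with respect to `w`, `length_scale` and `noise_var` would be obtained by automatic differentiation rather than by hand.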

- 15. • … denote its output. The $i$th component of the activations in the $l$th layer, post-nonlinearity and post-affine transformation, are denoted $x_i^l$ and $z_i^l$ respectively. We will refer to these as the post- and pre-activations. (We let $x_i^0 \equiv x_i$ for the input, dropping the Arabic numeral superscript, and instead use a Greek superscript $x^\alpha$ to denote a particular input $\alpha$.) Weight and bias parameters for the $l$th layer have components $W_{ij}^l$, $b_i^l$, which are independent and randomly drawn, and we take them all to have zero mean and variances $\sigma_w^2 / N_l$ and $\sigma_b^2$, respectively. $\mathcal{GP}(\mu, K)$ denotes a Gaussian process with mean and covariance functions $\mu(\cdot)$, $K(\cdot, \cdot)$, respectively.

  2.2 REVIEW OF GAUSSIAN PROCESSES AND SINGLE-LAYER NEURAL NETWORKS

  We briefly review the correspondence between single-hidden layer neural networks and GPs (Neal (1994a;b); Williams (1997)). The $i$th component of the network output, $z_i^1$, is computed as

  $$z_i^1(x) = b_i^1 + \sum_{j=1}^{N_1} W_{ij}^1 x_j^1(x), \qquad x_j^1(x) = \phi\left( b_j^0 + \sum_{k=1}^{d_{in}} W_{jk}^0 x_k \right), \tag{1}$$

  where we have emphasized the dependence on input $x$. Because the weight and bias parameters are taken to be i.i.d., the post-activations $x_j^1$, $x_{j'}^1$ are independent for $j \neq j'$. Moreover, since $z_i^1(x)$ is a sum of i.i.d. terms, it follows from the Central Limit Theorem that in the limit of infinite width $N_1 \to \infty$, $z_i^1(x)$ will be Gaussian distributed. Likewise, from the multidimensional Central Limit Theorem, any finite collection of $\{z_i^1(x^{\alpha=1}), \dots, z_i^1(x^{\alpha=k})\}$ will have a joint multivariate Gaussian distribution, which is exactly the definition of a Gaussian process. Therefore we conclude that $z_i^1 \sim \mathcal{GP}(\mu^1, K^1)$, a GP with mean $\mu^1$ and covariance $K^1$, which are themselves independent of $i$. Because the parameters have zero mean, we have that $\mu^1(x) = \mathbb{E}[z_i^1(x)] = 0$ and

  $$K^1(x, x') \equiv \mathbb{E}\left[ z_i^1(x) z_i^1(x') \right] = \sigma_b^2 + \sigma_w^2\, \mathbb{E}\left[ x_i^1(x) x_i^1(x') \right] \equiv \sigma_b^2 + \sigma_w^2 C(x, x'), \tag{2}$$

  where we have introduced $C(x, x')$ as in Neal (1994a); it is obtained by integrating against the distribution of $W^0$, $b^0$. Note that, as any two $z_i^1$, $z_j^1$ for $i \neq j$ are joint Gaussian and have zero covariance, they are guaranteed to be independent despite utilizing the same features produced by the hidden layer.

  2.3 GAUSSIAN PROCESSES AND DEEP NEURAL NETWORKS

  The arguments of the previous section can be extended to deeper layers by induction. We proceed by taking the hidden layer widths to be infinite in succession ($N_1 \to \infty$, $N_2 \to \infty$, etc.) as we continue with the induction, to guarantee that the input to the layer under consideration is already governed by a GP. In Appendix C we provide an alternative derivation, in terms of marginalization over intermediate layers, which does not depend on the order of limits, in the case of a Gaussian prior on the weights. A concurrent work (Anonymous, 2018) further derives the convergence rate towards a GP if all layers are taken to infinite width simultaneously.

  Suppose that $z_j^{l-1}$ is a GP, identical and independent for every $j$ (and hence $x_j^l(x)$ are independent and identically distributed). After $l - 1$ steps, the network computes

  $$z_i^l(x) = b_i^l + \sum_{j=1}^{N_l} W_{ij}^l x_j^l(x), \qquad x_j^l(x) = \phi\left( z_j^{l-1}(x) \right). \tag{3}$$

  As before, $z_i^l(x)$ is a sum of i.i.d. random terms so that, as $N_l \to \infty$, any finite collection $\{z_i^1(x^{\alpha=1}), \dots, z_i^1(x^{\alpha=k})\}$ will have joint multivariate Gaussian distribution and $z_i^l \sim \mathcal{GP}(0, K^l)$. The covariance is

  $$K^l(x, x') \equiv \mathbb{E}\left[ z_i^l(x) z_i^l(x') \right] = \sigma_b^2 + \sigma_w^2\, \mathbb{E}_{z_i^{l-1} \sim \mathcal{GP}(0, K^{l-1})}\left[ \phi\left(z_i^{l-1}(x)\right) \phi\left(z_i^{l-1}(x')\right) \right]. \tag{4}$$

  By induction, the expectation in Equation (4) is over the GP governing $z_i^{l-1}$, but this is equivalent to integrating against the joint distribution of only $z_i^{l-1}(x)$ and $z_i^{l-1}(x')$. The latter is described by a zero mean, two-dimensional Gaussian whose covariance matrix has distinct entries $K^{l-1}(x, x')$, $K^{l-1}(x, x)$, and $K^{l-1}(x', x')$. As such, these are the only three quantities that appear in the result. We introduce the shorthand

  $$K^l(x, x') = \sigma_b^2 + \sigma_w^2\, F_\phi\left( K^{l-1}(x, x'),\, K^{l-1}(x, x),\, K^{l-1}(x', x') \right) \tag{5}$$

  to emphasize the recursive relationship between $K^l$ and $K^{l-1}$ via a deterministic function $F_\phi$ whose form depends only on the nonlinearity $\phi$. This gives an iterative series of computations which can …
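
The recursion in Equation (5) can be made concrete for one particular nonlinearity. The sketch below assumes $\phi = \mathrm{ReLU}$, for which the Gaussian expectation has a known closed form (the degree-1 arc-cosine kernel), and assumes the base case $K^0(x, x') = \sigma_b^2 + \sigma_w^2\, x \cdot x' / d_{in}$. The function names and the default variances are hypothetical values chosen for illustration.

```python
import numpy as np


def relu_expectation(kxy, kxx, kyy):
    """E[relu(u) relu(v)] for zero-mean Gaussian (u, v) with var kxx, kyy and cov kxy."""
    norm = np.sqrt(kxx * kyy)
    cos_theta = np.clip(kxy / norm, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    return norm * (np.sin(theta) + (np.pi - theta) * cos_theta) / (2.0 * np.pi)


def nngp_kernel(x1, x2, depth, sigma_w2=1.6, sigma_b2=0.1):
    """K^depth(x1, x2) for an infinitely wide ReLU network with `depth` hidden layers."""
    d_in = x1.shape[0]
    # Assumed base case: covariance of the first affine layer's outputs.
    k12 = sigma_b2 + sigma_w2 * (x1 @ x2) / d_in
    k11 = sigma_b2 + sigma_w2 * (x1 @ x1) / d_in
    k22 = sigma_b2 + sigma_w2 * (x2 @ x2) / d_in
    for _ in range(depth):
        # Equation (5): only K(x, x'), K(x, x) and K(x', x') enter the update.
        new12 = sigma_b2 + sigma_w2 * relu_expectation(k12, k11, k22)
        new11 = sigma_b2 + sigma_w2 * relu_expectation(k11, k11, k11)
        new22 = sigma_b2 + sigma_w2 * relu_expectation(k22, k22, k22)
        k12, k11, k22 = new12, new11, new22
    return k12
```

For nonlinearities without a closed form, `relu_expectation` would be replaced by a numerical estimate of the same two-dimensional Gaussian integral.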

- 16. • Learning to Learn (figure label: Losses)

- 17. • Trends: Learning to Learn / Meta Learning – Model Based: Santoro et al. '16; Duan et al. '17; Wang et al. '17; Munkhdalai & Yu '17; Mishra et al. '17 – Optimization Based: Schmidhuber '87, '92; Bengio et al. '90, '92; Hochreiter et al. '01; Li & Malik '16; Andrychowicz et al. '16; Ravi & Larochelle '17; Finn et al. '17 – Metric Based: Koch '15; Vinyals et al. '16; Snell et al. '17; Shyam et al. '17

- 18. • Model Based Meta Learning (Oriol Vinyals, NIPS 17)

- 19. • Metric Based Meta Learning: Matching Networks, Vinyals et al., NIPS 2016 (Oriol Vinyals, NIPS 17) • $f_\theta(\hat{x}, S)$, $g_\theta(x, S)$

- 20. • Optimization Based Meta Learning: Model-Agnostic Meta-Learning (MAML). Examples of Optimization Based Meta Learning: Finn et al. '17, Ravi et al. '17 (Oriol Vinyals, NIPS 17)

  Algorithm 1: Model-Agnostic Meta-Learning

  Require: $p(\mathcal{T})$: distribution over tasks

  Require: $\alpha$, $\beta$: step size hyperparameters

  1. randomly initialize $\theta$
  2. while not done do
  3. Sample batch of tasks $\mathcal{T}_i \sim p(\mathcal{T})$
  4. for all $\mathcal{T}_i$ do
  5. Evaluate $\nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta)$ with respect to $K$ examples
  6. Compute adapted parameters with gradient descent: $\theta_i' = \theta - \alpha \nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta)$
  7. end for
  8. Update $\theta \leftarrow \theta - \beta \nabla_\theta \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}(f_{\theta_i'})$
  9. end while

  Figure 1. Diagram of our model-agnostic meta-learning algorithm (MAML), which optimizes for a representation $\theta$ that can quickly adapt to new tasks.
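
Steps 5 to 8 of Algorithm 1 can be written out exactly for a model simple enough that the second-order term is available in closed form. The sketch below assumes a linear model $f_\theta(x) = x^\top \theta$ with mean squared error, and it assumes each task is split into a support set (used for the inner step) and a query set (used for the meta-update); that split and all function names are illustrative choices, not taken from the slide.

```python
import numpy as np


def mse_loss(theta, x, y):
    """Mean squared error of the linear model x @ theta."""
    return float(np.mean((x @ theta - y) ** 2))


def mse_grad(theta, x, y):
    """Gradient of mse_loss with respect to theta."""
    return 2.0 * x.T @ (x @ theta - y) / len(y)


def meta_objective(theta, tasks, alpha):
    """Sum over tasks of the query loss evaluated at the adapted parameters."""
    total = 0.0
    for xs, ys, xq, yq in tasks:
        theta_i = theta - alpha * mse_grad(theta, xs, ys)
        total += mse_loss(theta_i, xq, yq)
    return total


def maml_meta_gradient(theta, tasks, alpha):
    """Exact gradient of meta_objective, including the second-order term."""
    meta_grad = np.zeros_like(theta)
    for xs, ys, xq, yq in tasks:
        theta_i = theta - alpha * mse_grad(theta, xs, ys)   # step 6: adapted parameters
        hessian = 2.0 * xs.T @ xs / len(ys)                 # Hessian of the support loss
        jac = np.eye(len(theta)) - alpha * hessian          # d theta_i / d theta
        meta_grad += jac.T @ mse_grad(theta_i, xq, yq)      # chain rule through step 6
    return meta_grad


def maml_step(theta, tasks, alpha, beta):
    """Step 8: one meta-update of the shared initialisation theta."""
    return theta - beta * maml_meta_gradient(theta, tasks, alpha)
```

For neural networks the Jacobian `jac` is not formed explicitly; an automatic differentiation library differentiates through the inner update instead.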

- 22. • Conditional Neural Processes. Marta Garnelo, Dan Rosenbaum, Chris J. Maddison, Tiago Ramalho, David Saxton, Murray Shanahan, Yee Whye Teh, Danilo J. Rezende, S. M. Ali Eslami. arXiv:1807.01613v1 [cs.LG] 4 Jul 2018 • $n$, $m$: $\mathcal{O}(n + m)$

  Abstract: Deep neural networks excel at function approximation, yet they are typically trained from scratch for each new function. On the other hand, Bayesian methods, such as Gaussian Processes (GPs), exploit prior knowledge to quickly infer the shape of a new function at test time. Yet GPs are computationally expensive, and it can be hard to design appropriate priors. In this paper we propose a family of neural models, Conditional Neural Processes (CNPs), that combine the benefits of both. CNPs are inspired by the flexibility of stochastic processes such as GPs, but are structured as neural networks and trained via gradient descent. CNPs make accurate predictions after observing only a handful of training data points, yet scale to complex functions and large datasets. We demonstrate the performance and versatility of the approach on a range of canonical machine learning tasks, including regression, classification and image completion.

  1. Introduction: Deep neural networks have enjoyed remarkable success in recent years, but they require large datasets for effective training (Lake et al., 2017; Garnelo et al., 2016). One … of these can be framed as function approximation given a …

  Figure 1. Conditional Neural Process. a) Data description b) Training regime of conventional supervised deep learning models c) Our model. (Panel labels: Observations, Targets; Train, Predict; Observe, Aggregate, Predict.)

- 23. • $h$ – $O = \{(x_i, y_i)\}_{i=0}^{n-1}$ → $r_i$ • $a$ – $r_i$ → $r$ • $g$ – $T = \{x_i\}_{i=n}^{n+m-1}$, $r$ → $\phi_i$ » regression: $\mu$, $\sigma^2$ » classification: $p_c$

  … CNPs parametrize distributions over $f(T)$ given a distributed representation of $O$ of fixed dimensionality. By doing so we give up the mathematical guarantees associated with stochastic processes, trading this off for functional flexibility and scalability.

  Specifically, given a set of observations $O$, a CNP is a conditional stochastic process $Q_\theta$ that defines distributions over $f(x)$ for inputs $x \in T$. $\theta$ is the real vector of all parameters defining $Q$. Inheriting from the properties of stochastic processes, we assume that $Q_\theta$ is invariant to permutations of $O$ and $T$. If $O'$, $T'$ are permutations of $O$ and $T$, respectively, then $Q_\theta(f(T) \mid O, T) = Q_\theta(f(T') \mid O, T') = Q_\theta(f(T) \mid O', T)$. In this work, we generally enforce permutation invariance with respect to $T$ by assuming a factored structure. Specifically, we consider $Q_\theta$s that factor $Q_\theta(f(T) \mid O, T) = \prod_{x \in T} Q_\theta(f(x) \mid O, x)$. In the absence of assumptions on output space $Y$, this is the easiest way to ensure a valid stochastic process. Still, this framework can be extended to non-factored distributions; we consider such a model in the experimental section.

  The defining characteristic of a CNP is that it conditions on $O$ via an embedding of fixed dimensionality. In more detail, we use the following architecture,

  $$r_i = h_\theta(x_i, y_i) \qquad \forall (x_i, y_i) \in O \tag{1}$$

  $$r = r_1 \oplus r_2 \oplus \dots \oplus r_{n-1} \oplus r_n \tag{2}$$

  $$\phi_i = g_\theta(x_i, r) \qquad \forall (x_i) \in T \tag{3}$$

  where $h_\theta : X \times Y \to \mathbb{R}^d$ and $g_\theta : X \times \mathbb{R}^d \to \mathbb{R}^e$ are neural …
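
Equations (1) to (3) map directly onto a short forward pass. The sketch below is a minimal NumPy illustration with randomly initialised weights, not the authors' implementation: taking $\oplus$ to be the mean, the tanh MLPs, the softplus with a 0.1 floor for $\sigma$, and the names `mlp`, `init_mlp` and `cnp_forward` are all assumptions made here.

```python
import numpy as np


def init_mlp(rng, sizes):
    """Random (W, b) pairs for an MLP with the given layer sizes."""
    return [
        (rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
        for m, n in zip(sizes[:-1], sizes[1:])
    ]


def mlp(x, weights):
    """MLP with tanh hidden activations and a linear output layer."""
    for w, b in weights[:-1]:
        x = np.tanh(x @ w + b)
    w, b = weights[-1]
    return x @ w + b


def cnp_forward(params, x_ctx, y_ctx, x_tgt):
    """Return predictive mean and standard deviation at each target input."""
    h_params, g_params = params
    # Eq. (1): encode every observation (x_i, y_i) into r_i.
    r_i = mlp(np.concatenate([x_ctx, y_ctx], axis=1), h_params)
    # Eq. (2): aggregate with a commutative operation (assumed here: the mean).
    r = r_i.mean(axis=0)
    # Eq. (3): decode each target input together with the shared representation r.
    r_rep = np.tile(r, (len(x_tgt), 1))
    phi = mlp(np.concatenate([x_tgt, r_rep], axis=1), g_params)
    mu, raw_sigma = phi[:, :1], phi[:, 1:]
    # Softplus keeps sigma positive; the 0.1 floor is an illustrative choice.
    sigma = 0.1 + 0.9 * np.logaddexp(0.0, raw_sigma)
    return mu, sigma
```

Because the aggregation is a mean over the rows of `r_i`, reordering the context points leaves the prediction unchanged, and the cost is linear in the number of context and target points.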

- 24. • – $\{(x_i, y_i)\}_{i=0}^{n-1}$ – $O_N = \{(x_i, y_i)\}_{i=0}^{N}$ – $O_N$, $\{x_i\}_{i=0}^{n-1}$ → $\{y_i\}_{i=0}^{n-1}$

  … $O_N = \{(x_i, y_i)\}_{i=0}^{N} \subset O$, the first $N$ elements of $O$. We minimize the negative conditional log probability

  $$\mathcal{L}(\theta) = -\mathbb{E}_{f \sim P}\left[ \mathbb{E}_N\left[ \log Q_\theta\left( \{y_i\}_{i=0}^{n-1} \mid O_N, \{x_i\}_{i=0}^{n-1} \right) \right] \right] \tag{4}$$

  Thus, the targets it scores $Q_\theta$ on include both the observed and unobserved values. In practice, we take Monte Carlo estimates of the gradient of this loss by sampling $f$ and $N$.

  This approach shifts the burden of imposing prior knowledge from an analytic prior to empirical data. This has the advantage of liberating a practitioner from having to specify an analytic form for the prior, which is ultimately intended to summarize their empirical experience. Still, we emphasize that the $Q_\theta$ are not necessarily a consistent set of conditionals for all observation sets, and the training routine does not guarantee that. In summary,

  1. A CNP is a conditional distribution over functions trained to model the empirical conditional distributions of functions $f \sim P$.
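
A single Monte Carlo sample of the loss in Eq. (4) can be sketched as follows for the regression case with a factored Gaussian $Q_\theta$. The `predict` callable is a hypothetical stand-in for a model that maps a context set and all inputs to per-point `(mu, sigma)` pairs, and drawing $N$ uniformly from $1, \dots, n-1$ is an assumption of this sketch.

```python
import math
import random


def gaussian_nll(y, mu, sigma):
    """Negative log density of y under N(mu, sigma^2)."""
    return 0.5 * math.log(2.0 * math.pi * sigma ** 2) + (y - mu) ** 2 / (2.0 * sigma ** 2)


def cnp_loss_sample(xs, ys, predict, rng):
    """One-sample estimate of Eq. (4) for the points (xs, ys) of one function f."""
    n = len(xs)
    num_ctx = rng.randrange(1, n)                 # sample N (assumed uniform on 1..n-1)
    context = list(zip(xs[:num_ctx], ys[:num_ctx]))   # O_N: the first N observations
    preds = predict(context, xs)                  # predictions for ALL n inputs
    # Score observed and unobserved targets alike, as the text describes.
    return sum(gaussian_nll(y, mu, sigma) for y, (mu, sigma) in zip(ys, preds))
```

Averaging this quantity over many sampled functions and context sizes, and differentiating it with respect to the model parameters, gives the gradient estimate mentioned in the excerpt.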

- 25. • 3.2. Meta-Learning

  Deep learning models are generally more scalable and are very successful at learning features and prior knowledge from the data directly. However they tend to be less flexible with regards to input size and order. Additionally, in general they only approximate one function as opposed to distributions over functions. Meta-learning approaches address the latter and share our core motivations. Recently meta-learning has been applied to a wide range of tasks like RL (Wang et al., 2016; Finn et al., 2017) or program induction (Devlin et al., 2017).

  Often meta-learning algorithms are implemented as deep generative models that learn to do few-shot estimations of the underlying density of the data. Generative Query Networks (GQN), for example, predict new viewpoints in 3D scenes given some context observations using a similar training regime to NPs (Eslami et al., 2018). As such, NPs can be seen as a generalisation of GQN to few-shot prediction tasks beyond scene understanding, such as regression and classification. Another way of carrying out few-shot density estimation is by updating existing models like PixelCNN (van den Oord et al., 2016) and augmenting them with attention mechanisms (Reed et al., 2017) or including a memory unit in a VAE model (Bornschein et al., 2017). Another successful latent variable approach is to explicitly condition on some context during inference (J. Rezende et al., 2016). Given the generative nature of these models they are usually applied to image generation tasks, but models that include a conditioning class-variable can be used for classification as well.

  Classification itself is another common task in meta-learning. Few-shot classification algorithms usually rely on some distance metric in feature space to compare target images to the observations provided (Koch et al., 2015), (Santoro et al., 2016). Matching networks (Vinyals et al., 2016; Bartunov & Vetrov, 2016) are closely related to CNPs. In their case features of samples are compared with target features using an attention kernel. At a higher level one can interpret this model as a CNP where the aggregator is just the concatenation over all input samples and the decoder $g$ contains an explicitly defined distance kernel. In this sense matching networks are closer to GPs than to CNPs, since they require the specification of a distance kernel that CNPs learn from the data instead. In addition, as MNs carry out all-to-all comparisons they scale with $\mathcal{O}(n \times m)$, although they …

  … other generative models the neural statistician learns to estimate the density of the observed data but does not allow for targeted sampling at what we have been referring to as input positions $x_i$. Instead, one can only generate i.i.d. samples from the estimated density. Finally, the latent variant of CNP can also be seen as an approximated amortized version of Bayesian DL (Gal & Ghahramani, 2016; Blundell et al., 2015; Louizos et al., 2017; Louizos & Welling, 2017).

  4. Experimental Results

  Figure 2. 1-D Regression. Regression results on a 1-D curve (black line) using 5 (left column) and 50 (right column) context points (black dots). The first two rows show the predicted mean and variance for the regression of a single underlying kernel for GPs (red) and CNPs (blue). The bottom row shows the predictions of CNPs for a curve with switching kernel parameters.

- 26. • Figure 3. Pixel-wise image regression on MNIST. Left: Two examples of image regression with varying numbers of observations. We provide the model with 1, 40, 200 and 728 context points (top row) and query the entire image. The resulting mean (middle row) and variance (bottom row) at each pixel position is shown for each of the context images. Right: model accuracy with increasing number of observations that are either chosen at random (blue) or by selecting the pixel with the highest variance (red).

  4.1. Function Regression

  As a first experiment we test CNP on the classical 1D regression task that is used as a common baseline for GPs. We generate two different datasets that consist of functions generated from a GP with an exponential kernel. In the first dataset we use a kernel with fixed parameters, and in the second dataset the function switches at some random point on the real line between two functions each sampled with different kernel parameters.

  At every training step we sample a curve from the GP, select a subset of $n$ points $(x_i, y_i)$ as observations, and a subset of points $(x_t, y_t)$ as target points. Using the model described … that increases in accuracy as the number of context points increases.

  4.2. Image Completion

  We consider image completion … functions in either $f : [0, 1]^2 \to [0, 1]$ … or $f : [0, 1]^2 \to [0, 1]^3$ … 2D pixel coordinates …
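
The data pipeline described in Section 4.1 (sample a curve from a GP, then pick observation and target points) can be sketched as below. The excerpt only says "exponential kernel"; the squared-exponential form, the input range, the hyperparameter values and the function names used here are assumptions for illustration.

```python
import numpy as np


def sample_gp_curve(rng, num_points=50, length_scale=0.4, sigma_f=1.0, jitter=1e-4):
    """Draw one function sample from a zero-mean GP at random 1-D inputs."""
    x = np.sort(rng.uniform(-2.0, 2.0, num_points))
    diff = x[:, None] - x[None, :]
    # Assumed kernel: squared exponential, plus jitter for numerical stability.
    k = sigma_f ** 2 * np.exp(-0.5 * (diff / length_scale) ** 2) + jitter * np.eye(num_points)
    y = np.linalg.cholesky(k) @ rng.standard_normal(num_points)
    return x, y


def split_context_target(rng, x, y, num_context):
    """Pick a random subset as observations; use all points as targets."""
    idx = rng.permutation(len(x))
    ctx = idx[:num_context]
    return (x[ctx], y[ctx]), (x, y)
```

Using all points as targets mirrors the training objective, which scores both observed and unobserved values; a fresh curve and a fresh split would be drawn at every training step.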

- 27. • 4.2.1. MNIST

  We first test CNP on the MNIST dataset and use the test set to evaluate its performance. As shown in Figure 3a the model learns to make good predictions of the underlying digit even for a small number of context points. Crucially, when conditioned only on one non-informative context point (e.g. a black pixel on the edge) the model's prediction corresponds to the average over all MNIST digits. As the number of context points increases the predictions become more similar to the underlying ground truth. This demonstrates the model's capacity to extract dataset specific prior knowledge. It is worth mentioning that even with a complete set of observations the model does not achieve pixel-perfect reconstruction, as we have a bottleneck at the representation level.

  Since this implementation of CNP returns factored outputs, the best prediction it can produce given limited context information is to average over all possible predictions that agree with the context. An alternative to this is to add latent variables in the model such that they can be sampled conditioned on the context to produce predictions with high probability in the data distribution. We consider this model later in section 4.2.3.

  An important aspect of the model is its ability to estimate the uncertainty of the prediction. As shown in the bottom row of Figure 3a, as we add more observations, the variance shifts from being almost uniformly spread over the digit positions to being localized around areas that are specific to the underlying digit, specifically its edges. Being able to model the uncertainty given some context can be helpful for many tasks. One example is active exploration, where the …

  Figure 4. Pixel-wise image completion on CelebA. Two examples of CelebA image regression with varying numbers of observations. We provide the model with 1, 10, 100 and 1000 context points (top row) and query the entire image. The resulting mean (middle row) and variance (bottom row) at each pixel position is shown for each of the context images.

  An important aspect of CNPs demonstrated in Figure 5 is its flexibility not only in the number of observations and targets it receives but also with regards to their input values. It is interesting to compare this property to GPs on one hand, and to trained generative models (van den Oord et al., 2016; Gregor et al., 2015) on the other hand.

  The first type of flexibility can be seen when conditioning on subsets that the model has not encountered during training. Consider conditioning the model on one half of the image, for example. This forces the model to not only predict pixel …

  Figure 5. Flexible image completion. In contrast to standard conditional models, CNPs can be directly conditioned on observed pixels in arbitrary patterns, even ones which were never seen in the training set. Similarly, the model can predict values for pixel coordinates that were never included in the training set, like subpixel values in different resolutions. The dotted white lines were added for clarity after generation.

  … and prediction task, and has the capacity to extract domain knowledge from a training set.

  We compare CNPs quantitatively to two related models: kNNs and GPs. As shown in Table 1, CNPs outperform the latter when the number of context points is small (empirically when half of the image or less is provided as context). When the majority of the image is given as context, exact methods like GPs and kNN will perform better. From the table we can also see that the order in which the context points are provided is less important for CNPs, since providing the context points in order from top to bottom still results in good performance. Both insights point to the fact that CNPs learn a data-specific 'prior' that will generate good samples even when the number of context points is very small.

  4.2.3. LATENT VARIABLE MODEL

  The main model we use throughout this paper is a factored model that predicts the mean and variance of the target outputs. Although we have shown that the mean is by itself a useful prediction, and that the variance is a good way to capture the uncertainty, this factored model prevents us from …

  Figure 6. Image completion with a latent variable model. The latent variables capture the global uncertainty, allowing the sampling of different coherent images which conform to the observations. As the number of observations increases, uncertainty is reduced and the samples converge to a single estimate.

  … observations and targets. This forms a multivariate Gaussian which can be used to coherently draw samples. In order to maintain this property in a trained model, one approach is to train the model to predict a GP kernel (Wilson et al., 2016). However the difficulty is the need to back-propagate through the sampling which involves a large matrix inversion (or some approximation of it).

  | | Random Context | | | Ordered Context | | |
  |---|---|---|---|---|---|---|
  | # context points | 10 | 100 | 1000 | 10 | 100 | 1000 |
  | kNN | 0.215 | 0.052 | 0.007 | 0.370 | 0.273 | 0.007 |
  | GP | 0.247 | 0.137 | 0.001 | 0.257 | 0.220 | 0.002 |
  | CNP | 0.039 | 0.016 | 0.009 | 0.057 | 0.047 | 0.021 |

  Table 1. Pixel-wise mean squared error for all of the pixels in the image completion task on the CelebA data set with increasing number of context points (10, 100, 1000). The context points are chosen either at random or ordered from the top-left corner to the bottom-right. With fewer context points CNPs outperform kNNs and GPs. In addition CNPs perform well regardless of the order of the context points, whereas GPs and kNNs perform worse when the context is ordered.

  In contrast, the approach we use is to simply add latent variables $z$ to our decoder $g$, allowing our model to capture …

- 28. Figure 5. Flexible image completion. In contrast to standard conditional models, CNPs can be directly conditioned on observed pixels in arbitrary patterns, even ones which were never seen in the training set. Similarly, the model can predict values for pixel coordinates that were never included in the training set, like subpixel values in different resolutions. The dotted white lines were added for clarity after generation.

- 29. … a conditional Gaussian prior p(z|O) that is conditioned on the observations, and a Gaussian posterior p(z|O, T) that is also conditioned on the target points.

  We apply this model to MNIST and CelebA (Figure 6). We use the same models as before, but we concatenate the representation r to a vector of latent variables z of size 64 (for CelebA we use bigger models where the sizes of r and z are 1024 and 128 respectively). For both the prior and posterior models, we use three layered MLPs and average their outputs. We emphasize that the difference between the prior and posterior is that the prior only sees the observed pixels, while the posterior sees both the observed and the target pixels. When sampling from this model with a small number of observed pixels, we get coherent samples and we see that the variability of the datasets is captured. As the model is conditioned on more and more observations, the variability of the samples drops and they eventually converge to a single possibility.

  4.3. Classification

  Finally, we apply the model to one-shot classification using the Omniglot dataset (Lake et al., 2015) (see Figure 7 for an overview of the task). This dataset consists of 1,623 classes of characters from 50 different alphabets. Each class has only 20 examples and as such this dataset is particularly suitable for few-shot learning algorithms. As in (Vinyals et al., 2016) we use 1,200 randomly selected classes as our training set and the remainder as our testing data set. In addition we augment the dataset following the protocol described in (Santoro et al., 2016). This includes cropping the image from 32 × 32 to 28 × 28, applying small random translations and rotations to the inputs, and also increasing the number of classes by rotating every character by 90 degrees and defining that to be a new class. We generate …

  Figure 7. One-shot Omniglot classification. At test time the model is presented with a labelled example for each class, and outputs the classification probabilities of a new unlabelled example. The model uncertainty grows when the new example comes from an un-observed class.

  | Model | 5-way acc., 1-shot | 5-way acc., 5-shot | 20-way acc., 1-shot | 20-way acc., 5-shot | Runtime |
  |---|---|---|---|---|---|
  | MANN | 82.8% | 94.9% | - | - | O(nm) |
  | MN | 98.1% | 98.9% | 93.8% | 98.5% | O(nm) |
  | CNP | 95.3% | 98.5% | 89.9% | 96.8% | O(n + m) |

  Table 2. Classification results on Omniglot. Results on the same task for MANN (Santoro et al., 2016), matching networks (MN) (Vinyals et al., 2016) and CNP.

  … using a significantly simpler architecture (three convolutional layers for the encoder and a three-layer MLP for the decoder) and with a lower runtime of O(n + m) at test time as opposed to O(nm).

- 30. Figure 1. Neural process model. (a) Graphical model of a neural process. x and y correspond to the data where y = f(x). C and T are the number of context points and target points respectively and z is the global latent variable. A grey background indicates that the variable is observed. (b) Diagram of our neural process implementation. Variables in circles correspond to the variables of the graphical …

  Specifically, given a set of observations O, a CNP is a conditional stochastic process $Q_\theta$ that defines distributions over f(x) for inputs $x \in T$. $\theta$ is the real vector of all parameters defining Q. Inheriting from the properties of stochastic processes, we assume that $Q_\theta$ is invariant to permutations of O and T. If $O'$, $T'$ are permutations of O and T, respectively, then $Q_\theta(f(T) \mid O, T) = Q_\theta(f(T') \mid O, T') = Q_\theta(f(T) \mid O', T)$. In this work, we generally enforce permutation invariance with respect to T by assuming a factored structure. Specifically, we consider $Q_\theta$s that factor $Q_\theta(f(T) \mid O, T) = \prod_{x \in T} Q_\theta(f(x) \mid O, x)$. In the absence of assumptions on output space Y, this is the easiest way to ensure a valid stochastic process. Still, this framework can be extended to non-factored distributions; we consider such a model in the experimental section.

  The defining characteristic of a CNP is that it conditions on O via an embedding of fixed dimensionality. In more detail, we use the following architecture,

  $$r_i = h_\theta(x_i, y_i) \quad \forall (x_i, y_i) \in O \tag{1}$$

  $$r = r_1 \oplus r_2 \oplus \dots \oplus r_{n-1} \oplus r_n \tag{2}$$

  $$\phi_i = g_\theta(x_i, r) \quad \forall (x_i) \in T \tag{3}$$

  where $h_\theta : X \times Y \to \mathbb{R}^d$ and $g_\theta : X \times \mathbb{R}^d \to \mathbb{R}^e$ are neural networks, $\oplus$ is a commutative operation that takes elements in $\mathbb{R}^d$ and maps them into a single element of $\mathbb{R}^d$, and $\phi_i$ are parameters for $Q_\theta(f(x_i) \mid O, x_i) = Q(f(x_i) \mid \phi_i)$. Depending on the task the model learns to parametrize a different output distribution. This architecture ensures permutation invariance and O(n + m) scaling for conditional prediction. We note that, since $r_1 \oplus \dots \oplus r_n$ can be computed in O(1) from $r_1 \oplus \dots \oplus r_{n-1}$, this architecture supports streaming observations with minimal overhead.

  For regression tasks we use $\phi_i$ to parametrize the mean and variance $\phi_i = (\mu_i, \sigma_i^2)$ of a Gaussian distribution $\mathcal{N}(\mu_i, \sigma_i^2)$ for every $x_i \in T$. For classification tasks $\phi_i$ parametrizes …

  … Thus, the targets it scores $Q_\theta$ on include … and unobserved values. In practice, we take Monte Carlo estimates of the gradient of this loss by sampling f and N.

  This approach shifts the burden of imposing prior knowledge from an analytic prior to empirical data. This has the advantage of liberating a practitioner from having to specify an analytic form for the prior, which is ultimately intended to summarize their empirical experience. Still, we emphasize that the $Q_\theta$ are not necessarily a consistent set of conditionals for all observation sets, and the training routine does not guarantee that. In summary,

  1. A CNP is a conditional distribution over functions trained to model the empirical conditional distributions of functions $f \sim P$.
  2. A CNP is permutation invariant in O and T.
  3. A CNP is scalable, achieving a running time complexity of O(n + m) for making m predictions with n observations.

  Within this specification of the model there are still some aspects that can be modified to suit specific requirements. The exact implementation of h, for example, can be adapted to the data type. For low dimensional data the encoder can be implemented as an MLP, whereas for inputs with larger dimensions and spatial correlations it can also include convolutions. Finally, in the setup described the model is not able to produce any coherent samples, as it learns to model only a factored prediction of the mean and the variances, disregarding the covariance between target points. This is a result of this particular implementation of the model. One way we can obtain coherent samples is by introducing a latent variable that we can sample from. We carry out some proof-of-concept experiments on such a model in section 4.2.3.

  … the prior on the context. As such this prior is equivalent to a less informed posterior of the underlying function. This formulation makes it clear that the posterior given a subset of the context points will serve as the prior when additional context points are included. By using this setup, and training with different sizes of context, we encourage the learned model to be flexible with regards to the number and position of the context points.

  2.4. The Neural process model

  In our implementation of NPs we accommodate for two additional desiderata: invariance to the order of context points and computational efficiency. The resulting model can be boiled down to three core components (see Figure 1b):

  - An encoder h from input space into representation space that takes in pairs of $(x, y)_i$ context values and produces a representation $r_i = h((x, y)_i)$ for each of the pairs. We parameterise h as a neural network.
  - An aggregator a that summarises the encoded inputs. We are interested in obtaining a single order-invariant global representation r that parameterises the latent distribution $z \sim \mathcal{N}(\mu(r), I\sigma(r))$. The simplest operation that ensures order-invariance and works well in practice is the mean function $r = a(r_i) = \frac{1}{n}\sum_{i=1}^{n} r_i$. Crucially, the aggregator reduces the runtime to O(n + m) where n and m are the number of context and target points respectively.
  - A conditional decoder g that takes as input the sampled global latent variable z as well as the new target locations $x_T$ and outputs the predictions $\hat{y}_T$ for the corresponding values of $f(x_T) = y_T$.

  3. Related work

  3.1. Conditional neural processes

  Neural Processes (NPs) are a generalisation of Conditional … a large part of the motivation behind n… lack a latent variable that allows for global sampling … Figure 2c for a diagram of the model). As a result … are unable to produce different function samples … same context data, which can be important if model… uncertainty is desirable. It is worth mentioning that … original CNP formulation did include experiments with … variable in addition to the deterministic connection. However, given the deterministic connections to the p… variables, the role of the global latent variable is n… In contrast, NPs constitute a more clear-cut generalisation of the original deterministic CNP with stronger parallels to other latent variable models and approximate Bayesian methods. These parallels allow us to compare our … to a wide range of related research areas in the following sections.

  Finally, NPs and CNPs themselves can be seen as generalizations of recently published generative query networks (GQN) which apply a similar training procedure to … new viewpoints in 3D scenes given some context observations (Eslami et al., 2018). Consistent GQN (CGQN) is an extension of GQN that focuses on generating co… samples and is thus also closely related to NPs (Kum… 2018).

  3.2. Gaussian processes

  We start by considering models that, like NPs, li… spectrum between neural networks (NNs) and Gaussian processes (GPs). Algorithms on the NN end of the spectrum fit a single function that they learn from a very large … of data directly. GPs on the other hand can rep… distribution over a family of functions, which is con… by an assumption on the functional form of the covariance between two points.

  Scattered across this spectrum, we can place recent … that has combined ideas from Bayesian non-parametrics with neural networks. Methods like (Calandra et al., …; Huang et al., 2015) remain fairly close to the GPs, b…

  Figure 2 (cropped). Panels: Neural statistician; (c) Conditional neural process; (d) Neural process. … related models (a-c) and of the neural process (d). Gray shading indicates the variable is observed. … T for target variables, i.e. the variables to predict given C.
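The encoder, aggregator and decoder of equations (1) to (3) can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the layer sizes, the random (untrained) weights, the softplus used to keep the standard deviation positive, and the helper names `mlp_params`, `encode`, `decode` and `update` are all made up for the example. The mean is used as the commutative operation.

```python
import numpy as np

rng = np.random.default_rng(0)

def mlp_params(sizes):
    """Random weights for a small MLP; they stand in for trained parameters."""
    return [(rng.normal(0.0, 0.5, (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(params, x):
    for W, b in params[:-1]:
        x = np.tanh(x @ W + b)
    W, b = params[-1]
    return x @ W + b

d = 8                                   # representation size (assumed)
h_params = mlp_params([2, 16, d])       # encoder h: (x, y) -> r_i
g_params = mlp_params([1 + d, 16, 2])   # decoder g: (x, r) -> (mu, raw sigma)

def encode(xc, yc):
    r_i = mlp(h_params, np.stack([xc, yc], axis=-1))   # Eq. (1), shape (n, d)
    return r_i.mean(axis=0)                            # Eq. (2) with the mean

def decode(r, xt):
    inp = np.concatenate([xt[:, None], np.tile(r, (len(xt), 1))], axis=-1)
    out = mlp(g_params, inp)                           # Eq. (3)
    mu = out[:, 0]
    sigma = 1e-3 + np.log1p(np.exp(out[:, 1]))         # softplus (assumption)
    return mu, sigma

def update(r, n, x_new, y_new):
    """Streaming update of the mean representation in O(1) per observation."""
    r_new = mlp(h_params, np.array([x_new, y_new]))
    return r + (r_new - r) / (n + 1), n + 1

xc = np.array([-1.0, 0.0, 0.5, 2.0])
yc = np.sin(xc)
xt = np.linspace(-2.0, 2.0, 5)
mu, sigma = decode(encode(xc, yc), xt)

# Shuffling the context leaves the prediction unchanged (up to rounding).
perm = rng.permutation(len(xc))
mu_perm, sigma_perm = decode(encode(xc[perm], yc[perm]), xt)
```

Encoding touches each of the n context points once and decoding touches each of the m targets once, which is where the O(n + m) cost comes from.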

- 31. Figure 4. Pixel-wise regression on MNIST and CelebA. The diagram on the left visualises how pixel-wise image completion can be framed as a 2-D regression task where f(pixel coordinates) = pixel brightness. The figures to the right of the diagram show the results on image completion for MNIST and CelebA. The images on the top correspond to the context points provided to the model. For better clarity the unobserved pixels have been coloured blue for the MNIST images and white for CelebA. Each of the rows corresponds to a … (Plot labels: number of context points 10, 100, 300, 784 and 15, 30, 90, 1024; rows Context, Sample 1, Sample 2, Sample 3.)
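As a rough illustration of the framing f(pixel coordinates) = pixel brightness, the sketch below turns an image into regression pairs and picks context points either at random or in raster order. The function names, the normalisation of coordinates to [0, 1] and the random stand-in image are assumptions made for the example, not details taken from the papers.

```python
import numpy as np

def image_to_regression(img):
    """Flatten an (H, W) image into inputs x in [0, 1]^2 and outputs y."""
    H, W = img.shape
    rows, cols = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    x = np.stack([rows.ravel() / (H - 1), cols.ravel() / (W - 1)], axis=-1)
    y = img.ravel()
    return x, y

def choose_context(n_pixels, n_context, ordered, rng):
    """Indices of the context pixels: raster order or uniformly at random."""
    if ordered:
        return np.arange(n_context)      # top-left towards bottom-right
    return rng.choice(n_pixels, size=n_context, replace=False)

rng = np.random.default_rng(0)
img = rng.random((28, 28))               # stand-in for a 28 x 28 image
x, y = image_to_regression(img)

idx = choose_context(len(y), 100, ordered=False, rng=rng)
x_context, y_context = x[idx], y[idx]    # what the model observes
x_target, y_target = x, y                # query the entire image
```

Because the inputs are continuous coordinates rather than grid indices, the same pairs can be queried at coordinates that never appeared in training, such as sub-pixel locations.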

- 32. Figure 5. Thompson sampling with neural processes on a 1-D objective function. The plots show the optimisation process over five iterations. Each prediction function (blue) is drawn by sampling a latent variable conditioned on an increasing number of context points (black circles). The underlying ground truth function is depicted as a black dotted line. The red triangle indicates the next evaluation point which corresponds to the minimum value of the sampled NP curve. The red circle in the following iteration corresponds to this evaluation point with its underlying ground truth value that serves as a new context point to the NP.

  4.3. Black-box optimisation with Thompson sampling

  To showcase the utility of sampling entire consistent trajectories we apply neural processes to Bayesian optimisation on a 1-D function using Thompson sampling (Thompson, 1933). Thompson sampling (also known as randomised probability matching) is an approach to tackle the exploration-exploitation dilemma by maintaining a posterior distribution over model parameters. A decision is taken by drawing a sample of model parameters and acting greedily under the resulting policy. The posterior distribution is then updated and the process is repeated. Despite its simplicity, Thompson sampling has been shown to be highly effective both empirically and in theory. It is commonly applied to black box optimisation and multi-armed bandit problems (e.g. Agrawal & Goyal, 2012; Shahriari et al., 2016).

  … function evaluations increases.

  | Neural process | Gaussian process | Random search |
  |---|---|---|
  | 0.26 | 0.14 | 1.00 |

  Table 1. Bayesian Optimisation using Thompson sampling. Average number of optimisation steps needed to reach the global minimum of a 1-D function generated by a Gaussian process. The values are normalised by the number of steps taken using random search. The performance of the Gaussian process with the correct kernel constitutes an upper bound on performance.

  4.4. Contextual bandits
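The loop described above (sample a function from the posterior, evaluate the objective at the sample's minimum, add the result to the context, repeat) can be sketched as follows. As a stand-in for a trained neural process, this sketch samples from a GP posterior with an RBF kernel on a fixed grid; the kernel, length-scale, noise level, grid and toy objective are all assumptions chosen for illustration.

```python
import numpy as np

def rbf(a, b, ls=0.5):
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / ls ** 2)

def posterior_sample(xc, yc, grid, rng, noise=1e-4):
    """Draw one function sample on the grid, conditioned on the context."""
    if len(xc) == 0:
        mean, cov = np.zeros(len(grid)), rbf(grid, grid)
    else:
        K = rbf(xc, xc) + noise * np.eye(len(xc))
        Ks = rbf(grid, xc)
        mean = Ks @ np.linalg.solve(K, yc)
        cov = rbf(grid, grid) - Ks @ np.linalg.solve(K, Ks.T)
    cov = 0.5 * (cov + cov.T)
    # Sample through an eigendecomposition, clipping tiny negative eigenvalues.
    vals, vecs = np.linalg.eigh(cov)
    scale = np.sqrt(np.clip(vals, 0.0, None))
    return mean + vecs @ (scale * rng.standard_normal(len(grid)))

def thompson_minimise(f, grid, steps, rng):
    xc, yc = np.array([]), np.array([])
    for _ in range(steps):
        sample = posterior_sample(xc, yc, grid, rng)
        x_next = grid[np.argmin(sample)]       # act greedily on the sample
        xc = np.append(xc, x_next)             # new context point
        yc = np.append(yc, f(x_next))
    return xc, yc

rng = np.random.default_rng(1)
grid = np.linspace(-1.0, 1.0, 41)
xs, ys = thompson_minimise(lambda x: (x - 0.3) ** 2, grid, 8, rng)
```

With a neural process, `posterior_sample` would instead draw a latent variable conditioned on the context and decode it over the grid; the outer loop stays the same.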

- 33.

- 34. – Garnelo, Marta et al. Conditional Neural Processes. In ICML 2018. – Garnelo, Marta et al. Neural Processes. In ICML 2018 workshop track. • Meta Learning – Ravi, Sachin and Hugo Larochelle. Optimization as a model for few-shot learning. In ICLR 2017. – Vinyals, Oriol et al. Matching networks for one shot learning. In NIPS 2016. – Finn, Chelsea, Pieter Abbeel, and Sergey Levine. Model-agnostic meta-learning for fast adaptation of deep networks. In ICML 2017. – Santoro, Adam et al. Meta-learning with memory-augmented neural networks. In ICML 2016. – Reed, Scott, Nando de Freitas, Oriol Vinyals. Deep Learning: Practice and Trends. In NIPS 2017 tutorial. – Vinyals, Oriol. Model vs Optimization Meta Learning. In NIPS 2017 Meta Learning Symposium. • Gaussian Processes – Wilson, Andrew Gordon et al. Deep kernel learning. Artificial Intelligence and Statistics. 2016. – Kendall, Alex and Yarin Gal. What uncertainties do we need in Bayesian deep learning for computer vision? In NIPS 2017. – Lee, Jaehoon et al. Deep neural networks as Gaussian processes. In ICLR 2018.

![1

DEEP LEARNING JP

[DL Papers]

http://deeplearning.jp/

Conditional Neural Processes (ICML2018)

Neural Processes (ICML2018Workshop)

Kazuki Fujikawa, DeNA](https://image.slidesharecdn.com/conditionalneuralprocesses-180726141539/85/Conditional-neural-processes-1-320.jpg)

![• ] W

– 006 0 40 0 4241 ,2 4

• e D:! " !, $ N

–

» "(!, $) ++ o snpe ++

» ' [ [

• $, ' C D i r lK

–

• $, ' e a gL

–

(2)

y µX) ,

1

KX,X⇤

.

n matrix of

X⇤ and X.

X and X⇤,

valuated at

s implicitly

dels which

space, and

ese models

or support

of any con-

Indeed the

induced by

ernel func-

In this section we show how we can construct kernels which encapsulate the expressive power of deep architectures, and how to learn the properties of these kernels as part of a scalable probabilistic GP framework. Specifically, starting from a base kernel $k(x_i, x_j \mid \theta)$ with hyperparameters $\theta$, we transform the inputs (predictors) $x$ as

$$k(x_i, x_j \mid \theta) \to k(g(x_i, w), g(x_j, w) \mid \theta, w), \tag{5}$$

where $g(x, w)$ is a non-linear mapping given by a deep architecture, such as a deep convolutional network, parametrized by weights $w$. The popular RBF kernel (Eq. (3)) is a sensible choice of base kernel $k(x_i, x_j \mid \theta)$. For added flexibility, we also propose to use spectral mixture base kernels (Wilson and Adams, 2013):

$$k_{\mathrm{SM}}(x, x' \mid \theta) = \sum_{q=1}^{Q} a_q \frac{|\Sigma_q|^{1/2}}{(2\pi)^{D/2}} \exp\left(-\frac{1}{2}\left\|\Sigma_q^{1/2}(x - x')\right\|^2\right) \cos\langle x - x', 2\pi\mu_q \rangle. \tag{6}$$


encodes the inductive bias that function values closer together in the input space are more correlated. The complexity of the functions in the input space is determined by the interpretable length-scale hyperparameter $\ell$. Shorter length-scales correspond to functions which vary more rapidly with the inputs $x$.

The structure of our data is discovered through learning interpretable kernel hyperparameters. The marginal likelihood of the targets $y$, the probability of the data conditioned only on kernel hyperparameters $\gamma$, provides a principled probabilistic framework for kernel learning:

$$\log p(y \mid \gamma, X) \propto -\left[y^\top (K_\gamma + \sigma^2 I)^{-1} y + \log |K_\gamma + \sigma^2 I|\right], \tag{4}$$

where we have used $K_\gamma$ as shorthand for $K_{X,X}$ given $\gamma$. Note that the expression for the log marginal likelihood in Eq. (4) pleasingly separates into automatically calibrated model fit and complexity terms (Rasmussen and Ghahramani, 2001). Kernel learning is performed by optimizing Eq. (4) with respect to $\gamma$.

The computational bottleneck for inference is solving the linear system $(K_{X,X} + \sigma^2 I)^{-1} y$, and for kernel learning is computing the log determinant $\log |K_{X,X} + \sigma^2 I|$. The standard approach is to compute the Cholesky decomposition of the $n \times n$ matrix $K_{X,X}$,

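Figure (Deep Kernel Learning): network diagram with hidden layers up to $h^{(L)}$, a final layer of basis functions $h(\theta)$, and an output layer $y_1, \dots, y_P$. Caption, partially recoverable: a Gaussian process with a deep kernel maps the inputs $x$ through $L$ parametric hidden layers, followed by a hidden layer with an infinite number of basis functions, with base kernel hyperparameters $\theta$. The deep kernel is parametrized by $\gamma = \{w, \theta\}$, and these parameters are learned jointly through the marginal likelihood of the Gaussian process.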

For kernel learning, we use the chain rule to compute derivatives of the log marginal likelihood with respect to the deep kernel hyperparameters:

$$\frac{\partial \mathcal{L}}{\partial \theta} = \frac{\partial \mathcal{L}}{\partial K_\gamma} \frac{\partial K_\gamma}{\partial \theta}, \qquad \frac{\partial \mathcal{L}}{\partial w} = \frac{\partial \mathcal{L}}{\partial K_\gamma} \frac{\partial K_\gamma}{\partial g(x, w)} \frac{\partial g(x, w)}{\partial w}.$$

The implicit derivative of the log marginal likelihood with respect to our $n \times n$ data covariance matrix $K_\gamma$ is given by

$$\frac{\partial \mathcal{L}}{\partial K_\gamma} = \frac{1}{2}\left(K_\gamma^{-1} y y^\top K_\gamma^{-1} - K_\gamma^{-1}\right), \tag{7}$$

where we have absorbed the noise covariance $\sigma^2 I$ into our covariance matrix, and treat it as part of the base kernel hyperparameters $\theta$. $\frac{\partial K_\gamma}{\partial \theta}$ are the derivatives of the deep kernel with respect to the base kernel hyperparameters (such as length-scale), conditioned on the fixed transformation of the inputs $g(x, w)$. Similarly,](https://image.slidesharecdn.com/conditionalneuralprocesses-180726141539/85/Conditional-neural-processes-14-320.jpg)
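
The deep kernel construction of Eq. (5) and the Cholesky-based evaluation of the log marginal likelihood quoted on the slide above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: `feature_map` is a hypothetical stand-in for $g(x, w)$ (a tiny two-layer tanh network with random weights), the base kernel is a plain RBF, and the data are synthetic. Unlike the proportional form in Eq. (4), the function below keeps the factor $-\tfrac{1}{2}$ and the constant $n \log 2\pi$.

```python
import numpy as np

def feature_map(x, w1, w2):
    """Hypothetical stand-in for g(x, w): a tiny two-layer tanh network."""
    return np.tanh(np.tanh(x @ w1) @ w2)

def rbf_kernel(a, b, lengthscale):
    """Base RBF kernel k(a, b | theta), with theta = lengthscale."""
    sq_dist = (np.sum(a**2, axis=1)[:, None]
               + np.sum(b**2, axis=1)[None, :]
               - 2.0 * a @ b.T)
    # Clip tiny negative values caused by floating-point rounding.
    return np.exp(-0.5 * np.maximum(sq_dist, 0.0) / lengthscale**2)

def deep_kernel(xa, xb, w1, w2, lengthscale):
    """k(g(xa, w), g(xb, w) | theta, w): base kernel applied to network features."""
    return rbf_kernel(feature_map(xa, w1, w2), feature_map(xb, w1, w2), lengthscale)

def log_marginal_likelihood(K, y, noise_var):
    """log p(y | X) for a zero-mean GP, computed via a Cholesky factorization."""
    n = y.shape[0]
    L = np.linalg.cholesky(K + noise_var * np.eye(n))
    # Solve (K + noise_var * I) alpha = y using the two triangular factors.
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    log_det = 2.0 * np.sum(np.log(np.diag(L)))
    return -0.5 * (y @ alpha + log_det + n * np.log(2.0 * np.pi))

# Synthetic data and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
y = np.sin(X[:, 0])
w1 = rng.normal(size=(3, 8))
w2 = rng.normal(size=(8, 2))

K = deep_kernel(X, X, w1, w2, lengthscale=1.0)
lml = log_marginal_likelihood(K, y, noise_var=0.1)
```

In deep kernel learning, `w1`, `w2`, and `lengthscale` would all be optimized against `lml`; here they are fixed so that the sketch stays short.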

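As a sanity check on the derivative in Eq. (7) of the Deep Kernel Learning excerpt, the sketch below compares the chain-rule gradient with respect to an RBF length-scale against a central finite difference. The setup is an assumption made for illustration: a plain RBF kernel with the noise term absorbed into $K$, synthetic data, and a hand-derived $\partial K / \partial \ell$. None of these specifics come from the slides.

```python
import numpy as np

def rbf_with_noise(X, lengthscale, noise_var):
    """Return K = K_rbf + noise_var * I, plus K_rbf and the squared distances."""
    d2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    K_rbf = np.exp(-0.5 * d2 / lengthscale**2)
    return K_rbf + noise_var * np.eye(X.shape[0]), K_rbf, d2

def log_marginal_likelihood(K, y):
    """Zero-mean GP log marginal likelihood with noise already absorbed into K."""
    n = y.shape[0]
    _, log_det = np.linalg.slogdet(K)
    return -0.5 * (y @ np.linalg.solve(K, y) + log_det + n * np.log(2.0 * np.pi))

def dL_dK(K, y):
    """Eq. (7): 0.5 * (K^-1 y y^T K^-1 - K^-1)."""
    K_inv = np.linalg.inv(K)
    alpha = K_inv @ y
    return 0.5 * (np.outer(alpha, alpha) - K_inv)

rng = np.random.default_rng(1)
X = rng.normal(size=(15, 2))
y = rng.normal(size=15)
ell, noise_var = 0.8, 0.1

# Chain rule: dL/d(ell) = sum_ij (dL/dK)_ij * (dK/d(ell))_ij.
K, K_rbf, d2 = rbf_with_noise(X, ell, noise_var)
dK_dell = K_rbf * d2 / ell**3  # derivative of exp(-d2 / (2 ell^2)) w.r.t. ell
grad_analytic = np.sum(dL_dK(K, y) * dK_dell)

# Central finite difference on the same objective.
eps = 1e-5
L_plus = log_marginal_likelihood(rbf_with_noise(X, ell + eps, noise_var)[0], y)
L_minus = log_marginal_likelihood(rbf_with_noise(X, ell - eps, noise_var)[0], y)
grad_fd = (L_plus - L_minus) / (2.0 * eps)
```

The two gradients should agree up to finite-difference error. The same pattern extends to the network weights $w$ by multiplying through $\partial K / \partial g$ and $\partial g / \partial w$.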
![• D c a

– +77 7 5: 7 C 9 5 ,5 85 0 7 7 77 1 4

• ,0G] wl nr puRu RL N tW I

75: 4 28::85 4

– ko

»

– ] nr

»

• ] nr s m k P

– i[e ko

»

– ] nr

»

»

denote its output. The $i$th component of the activations in the $l$th layer, post-nonlinearity and post-affine transformation, are denoted $x_i^l$ and $z_i^l$ respectively. We will refer to these as the post- and pre-activations. (We let $x_i^0 \equiv x_i$ for the input, dropping the Arabic numeral superscript, and instead use a Greek superscript $x^\alpha$ to denote a particular input $\alpha$). Weight and bias parameters for the $l$th layer have components $W_{ij}^l$, $b_i^l$, which are independent and randomly drawn, and we take them all to have zero mean and variances $\sigma_w^2 / N_l$ and $\sigma_b^2$, respectively. $\mathcal{GP}(\mu, K)$ denotes a Gaussian process with mean and covariance functions $\mu(\cdot)$, $K(\cdot, \cdot)$, respectively.

2.2 REVIEW OF GAUSSIAN PROCESSES AND SINGLE-LAYER NEURAL NETWORKS

We briefly review the correspondence between single-hidden layer neural networks and GPs (Neal (1994a;b); Williams (1997)). The $i$th component of the network output, $z_i^1$, is computed as,

$$z_i^1(x) = b_i^1 + \sum_{j=1}^{N_1} W_{ij}^1 x_j^1(x), \qquad x_j^1(x) = \phi\left(b_j^0 + \sum_{k=1}^{d_{in}} W_{jk}^0 x_k\right), \tag{1}$$

where we have emphasized the dependence on input $x$. Because the weight and bias parameters are taken to be i.i.d., the post-activations $x_j^1$, $x_{j'}^1$ are independent for $j \neq j'$. Moreover, since $z_i^1(x)$ is a sum of i.i.d. terms, it follows from the Central Limit Theorem that in the limit of infinite width $N_1 \rightarrow \infty$, $z_i^1(x)$ will be Gaussian distributed. Likewise, from the multidimensional Central Limit Theorem, any finite collection of $\{z_i^1(x^{\alpha=1}), \dots, z_i^1(x^{\alpha=k})\}$ will have a joint multivariate Gaussian distribution, which is exactly the definition of a Gaussian process. Therefore we conclude that $z_i^1 \sim \mathcal{GP}(\mu^1, K^1)$, a GP with mean $\mu^1$ and covariance $K^1$, which are themselves independent of $i$. Because the parameters have zero mean, we have that $\mu^1(x) = \mathbb{E}\left[z_i^1(x)\right] = 0$ and,

$$K^1(x, x') \equiv \mathbb{E}\left[z_i^1(x) z_i^1(x')\right] = \sigma_b^2 + \sigma_w^2 \, \mathbb{E}\left[x_i^1(x) x_i^1(x')\right] \equiv \sigma_b^2 + \sigma_w^2 C(x, x'), \tag{2}$$

where we have introduced $C(x, x')$ as in Neal (1994a); it is obtained by integrating against the distribution of $W^0$, $b^0$. Note that, as any two $z_i^1$, $z_j^1$ for $i \neq j$ are joint Gaussian and have zero covariance, they are guaranteed to be independent despite utilizing the same features produced by the hidden layer.

2.3 GAUSSIAN PROCESSES AND DEEP NEURAL NETWORKS

The arguments of the previous section can be extended to deeper layers by induction. We proceed by taking the hidden layer widths to be infinite in succession ($N_1 \rightarrow \infty$, $N_2 \rightarrow \infty$, etc.) as we continue with the induction, to guarantee that the input to the layer under consideration is already governed by a GP. In Appendix C we provide an alternative derivation, in terms of marginalization over intermediate layers, which does not depend on the order of limits, in the case of a Gaussian prior on the weights. A concurrent work (Anonymous, 2018) further derives the convergence rate towards a GP if all layers are taken to infinite width simultaneously.

Suppose that $z_j^{l-1}$ is a GP, identical and independent for every $j$ (and hence $x_j^l(x)$ are independent and identically distributed). After $l - 1$ steps, the network computes

$$z_i^l(x) = b_i^l + \sum_{j=1}^{N_l} W_{ij}^l x_j^l(x), \qquad x_j^l(x) = \phi\left(z_j^{l-1}(x)\right). \tag{3}$$

As before, $z_i^l(x)$ is a sum of i.i.d. random terms so that, as $N_l \rightarrow \infty$, any finite collection $\{z_i^l(x^{\alpha=1}), \dots, z_i^l(x^{\alpha=k})\}$ will have joint multivariate Gaussian distribution and $z_i^l \sim \mathcal{GP}(0, K^l)$. The covariance is

$$K^l(x, x') \equiv \mathbb{E}\left[z_i^l(x) z_i^l(x')\right] = \sigma_b^2 + \sigma_w^2 \, \mathbb{E}_{z_i^{l-1} \sim \mathcal{GP}(0, K^{l-1})}\left[\phi\left(z_i^{l-1}(x)\right) \phi\left(z_i^{l-1}(x')\right)\right]. \tag{4}$$

By induction, the expectation in Equation (4) is over the GP governing $z_i^{l-1}$, but this is equivalent to integrating against the joint distribution of only $z_i^{l-1}(x)$ and $z_i^{l-1}(x')$. The latter is described by a zero mean, two-dimensional Gaussian whose covariance matrix has distinct entries $K^{l-1}(x, x')$,

$K^{l-1}(x, x)$, and $K^{l-1}(x', x')$. As such, these are the only three quantities that appear in the result. We introduce the shorthand

$$K^l(x, x') = \sigma_b^2 + \sigma_w^2 \, F\left(K^{l-1}(x, x'), K^{l-1}(x, x), K^{l-1}(x', x')\right) \tag{5}$$

to emphasize the recursive relationship between $K^l$ and $K^{l-1}$ via a deterministic function $F$ whose form depends only on the nonlinearity $\phi$. This gives an iterative series of computations which can
