Neural Networks: Rosenblatt's Perceptron

- 1. Chapter 01: Rosenblatt’s Perceptron. CSC445: Neural Networks. Prof. Dr. Mostafa Gadal-Haqq M. Mostafa, Computer Science Department, Faculty of Computer & Information Sciences, Ain Shams University. (Most of the figures in this presentation are copyrighted to Pearson Education, Inc.)

- 2. ASU-CSC445: Neural Networks, Prof. Dr. Mostafa Gadal-Haqq. Rosenblatt’s Perceptron, outline: Introduction; The Perceptron; The Perceptron Convergence Theorem; Computer Experiment; The Batch Perceptron Algorithm.

- 3. Introduction. The perceptron is the simplest form of a neural network. It consists of a single neuron with adjustable synaptic weights and bias. It can be used to classify linearly separable patterns, that is, patterns that lie on opposite sides of a hyperplane. It is limited to pattern classification with only two classes.

- 4. The Perceptron. Linearly and nonlinearly separable classes. Figure 1.4 (a) A pair of linearly separable patterns. (b) A pair of non-linearly separable patterns.

- 5. The Perceptron. A nonlinear neuron that consists of a linear combiner followed by a hard limiter (e.g., the signum activation function). The linear combiner computes $v = \sum_{i=1}^{m} w_i x_i + b = \sum_{i=0}^{m} w_i x_i$ (with $x_0 = +1$ and $w_0 = b$), and the hard limiter outputs $y = \varphi(v)$, where $\varphi(v) = +1$ if $v > 0$ and $\varphi(v) = -1$ if $v \le 0$. Weights are adapted using an error-correction rule. Figure 1.3 Signal-flow graph of the perceptron.
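
The linear combiner and hard limiter on this slide can be sketched in a few lines of Python. This is a minimal illustration, not code from the lecture: the function names, the weights, and the inputs are hypothetical, and mapping the tie $v = 0$ to $-1$ is an assumption.

```python
from typing import Sequence


def hard_limiter(v: float) -> int:
    """Signum-style hard limiter: +1 for v > 0, otherwise -1 (tie handling is an assumption)."""
    return 1 if v > 0 else -1


def perceptron_output(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Linear combiner followed by the hard limiter."""
    if len(weights) != len(x):
        raise ValueError("weights and x must have the same length")
    # Induced local field: v = sum_i w_i * x_i + b
    v = sum(w_i * x_i for w_i, x_i in zip(weights, x)) + bias
    return hard_limiter(v)


# Hypothetical weights, bias, and inputs, chosen only for illustration.
w = [2.0, -1.0]
b = -0.5
print(perceptron_output(w, b, [1.0, 0.5]))  # v = 2.0 - 0.5 - 0.5 = 1.0
print(perceptron_output(w, b, [0.0, 1.0]))  # v = -1.0 - 0.5 = -1.5
```

The sign of $v$ alone decides the class, which is why the perceptron can only separate patterns with a hyperplane.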

- 6. The Perceptron. The decision boundary is a hyperplane defined by $\sum_{i=1}^{m} w_i x_i + b = 0$. For the perceptron to function properly, the two classes $C_1$ and $C_2$ must be linearly separable. Figure 1.2 Illustration of the hyperplane (in this example, a straight line) as decision boundary for a two-dimensional, two-class pattern-classification problem.
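
As a worked instance of the boundary equation for $m = 2$, take the hypothetical values $w_1 = 1$, $w_2 = 2$, and $b = -4$ (chosen only for illustration). Solving for $x_2$ gives the straight line:

$$
w_1 x_1 + w_2 x_2 + b = 0
\quad\Longrightarrow\quad
x_2 = -\frac{w_1}{w_2}\,x_1 - \frac{b}{w_2} = -\tfrac{1}{2}\,x_1 + 2 .
$$

The point $(0, 3)$ gives $1 \cdot 0 + 2 \cdot 3 - 4 = 2 > 0$ and the point $(0, 0)$ gives $-4 < 0$, so the two points lie on opposite sides of the line. Under the convention that a positive value corresponds to class $C_1$, the first point would be assigned to $C_1$ and the second to $C_2$.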

- 7. The Perceptron Convergence Algorithm. The fixed-increment convergence theorem for the perceptron (Rosenblatt, 1962): Let the subsets of training vectors $X_1$ and $X_2$ be linearly separable. Let the inputs presented to the perceptron originate from these two subsets. The perceptron converges after some $n_0$ iterations, in the sense that $\mathbf{w}(n_0) = \mathbf{w}(n_0 + 1) = \mathbf{w}(n_0 + 2) = \cdots$ is a solution vector for $n_0 \le n_{\max}$. The proof is assigned reading: pp. 82-83 of ch01, Haykin.

- 8. The Perceptron Convergence Algorithm. We derive the error-correction learning algorithm as follows. We write the input signal, the weights, and the bias as $\mathbf{x}(n) = [+1, x_1(n), x_2(n), \ldots, x_m(n)]^T$ and $\mathbf{w}(n) = [w_0(n), w_1(n), w_2(n), \ldots, w_m(n)]^T$, where $w_0(n)$ is the bias. Then $v(n) = \sum_{i=0}^{m} w_i(n)\,x_i(n) = \mathbf{w}^T(n)\,\mathbf{x}(n)$. The learning algorithm finds a weight vector $\mathbf{w}$ such that: $\mathbf{w}^T\mathbf{x} > 0$ for every input vector $\mathbf{x} \in C_1$, and $\mathbf{w}^T\mathbf{x} \le 0$ for every input vector $\mathbf{x} \in C_2$.

- 9. The Perceptron Convergence Algorithm. The learning algorithm finds a weight vector $\mathbf{w}$ such that: $\mathbf{w}^T\mathbf{x} > 0$ for every input vector $\mathbf{x} \in C_1$, and $\mathbf{w}^T\mathbf{x} \le 0$ for every input vector $\mathbf{x} \in C_2$.

- 10. The Perceptron Convergence Algorithm. Given the subsets of training vectors $X_1$ and $X_2$, the training problem is to find a weight vector $\mathbf{w}$ such that the previous two inequalities are satisfied. This is achieved by updating the weights as follows: $\mathbf{w}(n+1) = \mathbf{w}(n) - \eta(n)\,\mathbf{x}(n)$ if $\mathbf{w}^T(n)\,\mathbf{x}(n) > 0$ and $\mathbf{x}(n) \in C_2$; $\mathbf{w}(n+1) = \mathbf{w}(n) + \eta(n)\,\mathbf{x}(n)$ if $\mathbf{w}^T(n)\,\mathbf{x}(n) \le 0$ and $\mathbf{x}(n) \in C_1$. The learning-rate parameter $\eta(n)$ is a positive number, which may vary with $n$. For fixed $\eta$, we have the fixed-increment learning rule. The algorithm converges if $\eta(n)$ is a positive value.
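
The two update cases on this slide can be sketched as a short training loop. This is a minimal sketch under stated assumptions, not the lecture's code: the function name `train_perceptron`, the label encoding (+1 for $C_1$, -1 for $C_2$), the zero initial weights, the stopping test, and the toy data are all hypothetical choices made for illustration.

```python
from typing import List, Sequence, Tuple


def train_perceptron(
    samples: Sequence[Tuple[Sequence[float], int]],
    eta: float = 1.0,
    max_epochs: int = 100,
) -> Tuple[List[float], int]:
    """Fixed-increment perceptron rule on augmented inputs [1, x_1, ..., x_m].

    Labels: +1 for class C1, -1 for class C2 (an assumed encoding).
    Returns the weights [w_0, w_1, ..., w_m] and the number of epochs used.
    """
    m = len(samples[0][0])
    w = [0.0] * (m + 1)  # w[0] plays the role of the bias
    for epoch in range(1, max_epochs + 1):
        updates = 0
        for x, label in samples:
            xa = [1.0, *x]  # augmented input vector
            v = sum(wi * xi for wi, xi in zip(w, xa))
            if label == 1 and v <= 0:
                # x in C1 but w^T x <= 0: add eta * x
                w = [wi + eta * xi for wi, xi in zip(w, xa)]
                updates += 1
            elif label == -1 and v > 0:
                # x in C2 but w^T x > 0: subtract eta * x
                w = [wi - eta * xi for wi, xi in zip(w, xa)]
                updates += 1
        if updates == 0:
            # A full pass without corrections: w satisfies both inequalities.
            return w, epoch
    return w, max_epochs


# Hypothetical, linearly separable toy data (logical AND with +/-1 labels).
data = [([0.0, 0.0], -1), ([0.0, 1.0], -1), ([1.0, 0.0], -1), ([1.0, 1.0], 1)]
weights, epochs = train_perceptron(data)
print(weights, epochs)
```

Correctly classified samples leave the weights untouched, so once a full pass produces no update the weight vector stops changing, which matches the sense of convergence stated in the theorem.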

- 11. The Perceptron Convergence Algorithm

- 12. The Perceptron Convergence Algorithm

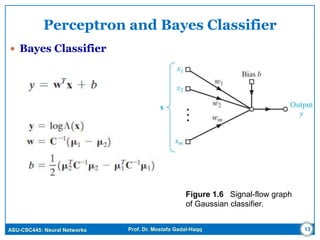

- 13. Perceptron and Bayes Classifier. Bayes classifier. Figure 1.6 Signal-flow graph of Gaussian classifier.

- 14. Perceptron and Bayes Classifier. Bayes classifier. Figure 1.7 Two overlapping, one-dimensional Gaussian distributions.

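- 15. The Batch Perceptron Algorithm. We define the perceptron cost function as $J(\mathbf{w}) = \sum_{\mathbf{x}(n) \in H} \left(-\mathbf{w}^T \mathbf{x}(n)\, d(n)\right)$, where $H$ is the set of samples $\mathbf{x}$ misclassified by a perceptron using $\mathbf{w}$ as its weight vector, and $d(n)$ is the desired response ($+1$ for $C_1$, $-1$ for $C_2$), following Haykin's formulation. The cost function $J(\mathbf{w})$ is differentiable with respect to the weight vector $\mathbf{w}$. Thus, differentiating $J(\mathbf{w})$ with respect to $\mathbf{w}$ yields the gradient vector $\nabla J(\mathbf{w}) = \sum_{\mathbf{x}(n) \in H} \left(-\mathbf{x}(n)\, d(n)\right)$. In the method of steepest descent, the adjustment to the weight vector $\mathbf{w}$ at each time step of the algorithm is applied in a direction opposite to the gradient vector $\nabla J(\mathbf{w})$.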

- 16. The Batch Perceptron Algorithm. Accordingly, the algorithm takes the form $\mathbf{w}(n+1) = \mathbf{w}(n) - \eta(n)\,\nabla J(\mathbf{w}) = \mathbf{w}(n) + \eta(n) \sum_{\mathbf{x}(n) \in H} \mathbf{x}(n)\, d(n)$, with $d(n) \in \{+1, -1\}$ the desired response for $\mathbf{x}(n)$, which embodies the batch perceptron algorithm for computing the weight vector $\mathbf{w}$. The algorithm is said to be of the “batch” kind because at each time step of the algorithm, a batch of misclassified samples is used to compute the adjustment.
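
The batch adjustment can be sketched as follows. This is a minimal sketch, not the lecture's code: it assumes labels $d = +1$ for $C_1$ and $d = -1$ for $C_2$, treats samples lying exactly on the boundary as misclassified, and uses a hypothetical function name, learning rate, and toy data.

```python
from typing import List, Sequence, Tuple


def batch_perceptron(
    samples: Sequence[Tuple[Sequence[float], int]],
    eta: float = 1.0,
    max_steps: int = 1000,
) -> Tuple[List[float], int]:
    """Batch perceptron: each step adds eta * (sum of d * x over misclassified samples)."""
    m = len(samples[0][0])
    w = [0.0] * (m + 1)  # w[0] is the bias weight
    augmented = [([1.0, *x], d) for x, d in samples]
    for step in range(max_steps):
        # H: samples with d * w^T x <= 0 (boundary counted as an error, an assumption)
        H = [(xa, d) for xa, d in augmented
             if d * sum(wi * xi for wi, xi in zip(w, xa)) <= 0]
        if not H:
            return w, step  # no misclassified samples remain
        # Step opposite to the gradient: the negative gradient is the sum of d * x over H.
        for j in range(m + 1):
            w[j] += eta * sum(d * xa[j] for xa, d in H)
    return w, max_steps


# Hypothetical, linearly separable toy data (logical AND with +/-1 labels).
data = [([0.0, 0.0], -1), ([0.0, 1.0], -1), ([1.0, 0.0], -1), ([1.0, 1.0], 1)]
weights, steps = batch_perceptron(data)
print(weights, steps)
```

Unlike the sample-by-sample rule, the weights here change only once per pass, using every misclassified sample at the same time.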

- 17. Batch Learning. Presentation of all $N$ examples in the training sample constitutes one epoch. The cost function of the learning is defined by the average error energy $\mathcal{E}_{av}$. The weights are updated epoch by epoch. Advantages: accurate estimation of the gradient vector; parallelization of the learning process.

- 18. Computer Experiment: Pattern Classification. Figure 1.8 The double-moon classification problem.

- 19. Computer Experiment: Pattern Classification. Figure 1.9 Perceptron with the double-moon set at distance d = 1.

- 20. Computer Experiment: Pattern Classification. Figure 1.10 Perceptron with the double-moon set at distance d = -4.
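
A rough way to reproduce the flavor of this experiment is sketched below. The slides give only the distance $d$, so everything else is an assumption: the moon radius `r = 10`, the moon `width = 6`, the placement of the lower moon, the sample count, the random seed, and the helper names are hypothetical choices for illustration.

```python
import math
import random
from typing import List, Tuple

Sample = Tuple[Tuple[float, float], int]


def double_moon(n_per_class: int, d: float, r: float = 10.0,
                width: float = 6.0, seed: int = 0) -> List[Sample]:
    """Sample a double-moon set: upper moon labeled +1, lower moon labeled -1."""
    rng = random.Random(seed)
    data: List[Sample] = []
    for _ in range(n_per_class):
        rho = rng.uniform(r - width / 2, r + width / 2)
        theta = rng.uniform(0.0, math.pi)
        # Upper moon: half-ring above the x-axis, centered at the origin.
        data.append(((rho * math.cos(theta), rho * math.sin(theta)), 1))
        rho = rng.uniform(r - width / 2, r + width / 2)
        theta = rng.uniform(0.0, math.pi)
        # Lower moon: mirrored half-ring, shifted right by r and down by d.
        data.append(((r + rho * math.cos(theta), -d - rho * math.sin(theta)), -1))
    return data


def train(data: List[Sample], eta: float = 1.0, max_epochs: int = 5000) -> List[float]:
    """Fixed-increment perceptron on augmented inputs [1, x1, x2]."""
    w = [0.0, 0.0, 0.0]
    for _ in range(max_epochs):
        changed = False
        for (x1, x2), label in data:
            v = w[0] + w[1] * x1 + w[2] * x2
            if (label == 1 and v <= 0) or (label == -1 and v > 0):
                # Move the weights toward (label = +1) or away from (label = -1) the sample.
                w = [w[0] + eta * label, w[1] + eta * label * x1, w[2] + eta * label * x2]
                changed = True
        if not changed:
            break  # a full pass without corrections
    return w


def count_errors(w: List[float], data: List[Sample]) -> int:
    """Number of samples whose predicted sign disagrees with the label."""
    errors = 0
    for (x1, x2), label in data:
        predicted = 1 if w[0] + w[1] * x1 + w[2] * x2 > 0 else -1
        errors += predicted != label
    return errors


separable = double_moon(100, d=1.0)
w_sep = train(separable)
print("d = 1:", count_errors(w_sep, separable), "training errors")

overlapping = double_moon(100, d=-4.0)
w_ovl = train(overlapping, max_epochs=50)
print("d = -4:", count_errors(w_ovl, overlapping), "training errors")
```

With $d = 1$ the two moons are separated by a horizontal gap, so the perceptron can reach zero training errors. With $d = -4$ the moons overlap vertically, and the epoch cap is what stops training.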

- 21. Homework 1. Problems: 1.1, 1.4, and 1.5. Computer Experiment: 1.6.

- 22. Next Time: Model Building Through Regression.