Standalone Spark Deployment for Stability and Performance

- 1. Standalone Spark Deployment For Stability and Performance

- 2. Totango
  - Leading Customer Success Platform
  - Helps companies retain and grow their customer base
  - Advanced actionable analytics for subscription and recurring revenue
  - Founded in 2010
  - Infrastructure on AWS cloud
  - Spark for batch processing
  - ElasticSearch for serving layer

- 3. About Me
  - Romi Kuntsman
  - Senior Big Data Engineer @ Totango
  - Working with Apache Spark since v1.0
  - Working with AWS Cloud since 2008

- 4. Spark on AWS - first attempts
  - We tried Amazon EMR (Elastic MapReduce) to install Spark on YARN
    - Performance hit per application (starts a Spark instance for each one)
    - Performance hit per server (runs services we don't use, like HDFS)
    - Slow and unstable cluster resizing (often gets stuck, and the cluster has to be recreated)
  - We tried the spark-ec2 script to install Spark Standalone on AWS EC2 machines
    - Serial (not parallel) initialization of multiple servers - slow!
    - Scripts unmaintained since Spark became available on EMR (see above)
    - Doesn't integrate with our existing systems

- 5. Spark on AWS - road to success
  - We decided to write our own scripts to integrate and control everything
  - Understood all Spark components and configuration settings
  - Deployment based on Chef, like all our servers
  - Integrated monitoring and logging, like all our systems
  - Full server utilization - running exactly what we need and nothing more
  - No more cluster hangs or crashes
  - Seamless cluster resizing without hurting any running jobs
  - Able to upgrade to any Spark version (not dependent on a third party)

- 6. What we'll discuss
  - Data, with Romi
    - Separation of Spark Components
    - Centralized Managed Logging
    - Monitoring Cluster Utilization
  - Ops, with Alon
    - Auto Scaling Groups
    - Termination Protection
    - Upstart Mechanism
    - NewRelic Integration
    - Chef-based Instantiation

- 7. Separation of Components
  - Spark Master Server (single)
    - Master Process - accepts requests to start applications
    - History Process - serves history data of completed applications
  - Spark Slave Server (multiple)
    - Worker Process - manages the applications' workload on the server
    - External Shuffle Service - handles shuffle data exchange between executors
    - Executor Processes (one per core - for running apps) - run the actual code

- 8. Configuration - Deploy Spread Out
  - `spark.deploy.spreadOut` (set via SPARK_MASTER_OPTS)
    - true = spread each application's cores across all workers
    - false = fill up all cores of one worker before using the next one
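
A minimal sketch of setting this on the master, assuming it is launched through Spark's own scripts (`sbin/start-master.sh` or `bin/spark-class`), which source `conf/spark-env.sh`. Note that `true` is already Spark's default, so this line mainly documents the choice; which value Totango used is not stated on the slide.

```shell
# conf/spark-env.sh on the Spark master (sketch).
# true (the Spark default): spread each application's cores over as many
# workers as possible. false: pack cores onto as few workers as possible.
export SPARK_MASTER_OPTS="-Dspark.deploy.spreadOut=true"
```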

- 9. Configuration - Cleanup
  - `spark.worker.cleanup.*` (set via SPARK_WORKER_OPTS)
    - .enabled = true (turn on the mechanism that cleans up application folders)
    - .interval = 1800 (run every 1800 seconds, i.e. every 30 minutes)
    - .appDataTtl = 1800 (remove folders of finished applications after 30 minutes)
  - We have hundreds of applications per day, each with its own jars and logs
  - Rapid cleanup is essential to avoid filling up the disk
  - We collect the logs before cleanup - details in the following slides ;-)
  - Only files of completed applications are cleaned up
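
The same three values as a worker-side `conf/spark-env.sh` fragment. This is a sketch that assumes the worker is launched through Spark's own scripts, so that `SPARK_WORKER_OPTS` reaches the worker JVM; both durations are in seconds.

```shell
# conf/spark-env.sh on each Spark worker (sketch, values from the slide above).
# Only directories of stopped applications are removed by this mechanism.
export SPARK_WORKER_OPTS="-Dspark.worker.cleanup.enabled=true \
  -Dspark.worker.cleanup.interval=1800 \
  -Dspark.worker.cleanup.appDataTtl=1800"
```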

- 10. External Shuffle Service
  - Preserves shuffle files written by executors
  - Serves shuffle files to other executors that want to fetch them
  - If (when) one executor crashes (OOM etc.), others can still access its shuffle files
  - We run the shuffle service itself in a separate process from the executor
  - To enable: `spark.shuffle.service.enabled=true`
  - Config: `spark.shuffle.io.*` (see documentation)
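
On the application side, executors only use the external service when `spark.shuffle.service.enabled` is set for that application. The sketch below wraps `spark-submit` so the flag is always passed; the wrapper name and the `SPARK_SUBMIT` override (handy for a dry run with `echo`) are hypothetical. The service itself must already be running on each slave: a standalone worker started with this flag runs an embedded one, while Spark's `sbin/start-shuffle-service.sh` runs it as a separate daemon, as described above.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: submit an application that uses the external shuffle service.
# SPARK_SUBMIT defaults to the real launcher; set it to "echo" for a dry run.
submit_with_shuffle_service() {
  local master_url="$1"; shift
  "${SPARK_SUBMIT:-$SPARK_HOME/bin/spark-submit}" \
    --master "$master_url" \
    --conf spark.shuffle.service.enabled=true \
    "$@"
}
```

Usage, with a hypothetical master host, class and jar: `submit_with_shuffle_service spark://spark-master:7077 --class com.example.Job job.jar`.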

- 11. Logging - components
  - Master Log (`/logs/spark-runner-org.apache.spark.deploy.master.Master-*`)
    - Application registration, worker coordination
  - History Log (`/logs/spark-runner-org.apache.spark.deploy.history.HistoryServer-*`)
    - Access to history, read errors (e.g. I/O errors from S3, files not found)
  - Worker Log (`/logs/spark-runner-org.apache.spark.deploy.worker.Worker-*`)
    - Executor management (launch, kill, ACLs)
  - Shuffle Log (`/logs/org.apache.spark.deploy.ExternalShuffleService-*`)
    - External executor registrations
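
Because each component writes to a predictable file name, a small helper makes it easy to look at one component's log. This is a sketch: it assumes the log directory is `/logs` (Spark's daemon scripts take it from `SPARK_LOG_DIR`) and copies the name patterns from the slide above; the helper name `log_glob` is hypothetical.

```shell
#!/usr/bin/env bash
# Map a Spark component name to the glob of its log files (patterns from the slide).
LOG_DIR="${SPARK_LOG_DIR:-/logs}"

log_glob() {
  case "$1" in
    master)  echo "$LOG_DIR/spark-runner-org.apache.spark.deploy.master.Master-*" ;;
    history) echo "$LOG_DIR/spark-runner-org.apache.spark.deploy.history.HistoryServer-*" ;;
    worker)  echo "$LOG_DIR/spark-runner-org.apache.spark.deploy.worker.Worker-*" ;;
    shuffle) echo "$LOG_DIR/org.apache.spark.deploy.ExternalShuffleService-*" ;;
    *)       echo "unknown component: $1" >&2; return 1 ;;
  esac
}
```

Usage: `grep -h ERROR $(log_glob worker)`; the substitution is left unquoted on purpose so the shell expands the glob.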

- 12. Logging - applications
  - Application logs (`/mnt/spark-work/app-12345/execid/stderr`)
    - All output from the executor process, including your own code
  - Using Logstash to gather logs from all applications together:

```
input {
  file {
    # stdout/stderr of every executor of every application
    path => "/mnt/spark-work/app-*/*/std*"
    start_position => beginning
  }
}
filter {
  grok {
    # Extract the application ID and the log type (stdout/stderr) from the file path
    match => [ "path", "/mnt/spark-work/%{NOTSPACE:application}/.+/%{NOTSPACE:logtype}" ]
  }
}
output {
  file {
    path => "/logs/applications.log"
    message_format => "%{application} %{logtype} %{message}"
  }
}
```

- 13. Monitoring Cluster Utilization
  - Spark reports metrics (Codahale) through Graphite
    - Master metrics - running applications and their status
    - Worker metrics - used cores, free cores
    - JVM metrics - memory allocation, GC
  - We use Anodot to view and track metric trends and anomalies
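
Spark's metrics system is configured through `conf/metrics.properties`. Below is a sketch of a provisioning helper that writes a Graphite sink for all instances plus JVM sources for the master and worker daemons. The Graphite host, the default port 2003, the 10-second period and the `spark` prefix are assumptions, not values from the talk.

```shell
#!/usr/bin/env bash
# Sketch: write metrics.properties so Spark reports its metrics to Graphite.
write_metrics_conf() {
  local conf_dir="$1" graphite_host="$2" graphite_port="${3:-2003}"
  mkdir -p "$conf_dir" || return 1
  cat > "$conf_dir/metrics.properties" <<EOF
# Every instance (master, worker, applications) reports to Graphite every 10 seconds
*.sink.graphite.class=org.apache.spark.metrics.sink.GraphiteSink
*.sink.graphite.host=$graphite_host
*.sink.graphite.port=$graphite_port
*.sink.graphite.period=10
*.sink.graphite.unit=seconds
*.sink.graphite.prefix=spark
# JVM metrics (memory, GC) for the standalone daemons
master.source.jvm.class=org.apache.spark.metrics.source.JvmSource
worker.source.jvm.class=org.apache.spark.metrics.source.JvmSource
EOF
}
```

Usage, with a hypothetical Graphite host: `write_metrics_conf "$SPARK_HOME/conf" graphite.internal`.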

- 14. And now, to the Ops side...
  - Alon Torres
  - DevOps Engineer @ Totango

- 15. Auto Scaling Group Components
  - Auto Scaling Group
    - Scale your group up or down flexibly
    - Supports health checks and load balancing
  - Launch Configuration
    - Template used by the ASG to launch instances
    - User Data script for post-launch configuration
  - User Data
    - Install prerequisites and fetch instance info
    - Install and start the Chef client
    - Sanity checks throughout
  - (Diagram: Launch Configuration with User Data → Auto Scaling Group → multiple EC2 instances)

- 16. Auto Scaling Group resizing in AWS
  - Scheduled
    - Set the desired size according to a specified schedule
    - Good for scenarios with predictable, cyclic workloads
  - Alert-Based
    - Set specific alerts that trigger a cluster action
    - Alerts can monitor instance health properties (resource usage)
  - Remote-triggered
    - Using the AWS API/CLI, resize the cluster however you want

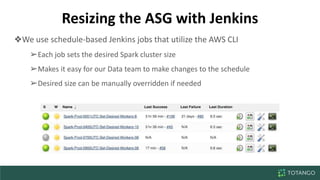
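
The scheduled and remote-triggered styles both map to AWS CLI calls. In this sketch, the group name, action name, schedule and sizes are hypothetical, and `AWS_CMD` can be set to `echo` for a dry run.

```shell
#!/usr/bin/env bash
# Sketch of scheduled and remote-triggered ASG resizing via the AWS CLI.
AWS_CMD="${AWS_CMD:-aws}"

# Scheduled: a recurring action; the recurrence uses cron syntax (UTC unless a time zone is given).
schedule_resize() {
  local asg="$1" action="$2" cron="$3" size="$4"
  "$AWS_CMD" autoscaling put-scheduled-update-group-action \
    --auto-scaling-group-name "$asg" \
    --scheduled-action-name "$action" \
    --recurrence "$cron" \
    --desired-capacity "$size"
}

# Remote-triggered: set the desired size right now.
resize_now() {
  local asg="$1" size="$2"
  "$AWS_CMD" autoscaling set-desired-capacity \
    --auto-scaling-group-name "$asg" \
    --desired-capacity "$size"
}
```

Usage: `schedule_resize spark-slaves nightly-batch "0 2 * * *" 20` grows the group every night at 02:00 UTC, and `resize_now spark-slaves 4` shrinks it immediately.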

- 17. Resizing the ASG with Jenkins
  - We use schedule-based Jenkins jobs that use the AWS CLI
    - Each job sets the desired Spark cluster size
    - Makes it easy for our Data team to change the schedule
    - The desired size can be manually overridden if needed
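
Here is a sketch of the shell step one such Jenkins job might run. `DESIRED_SIZE` (fixed per scheduled job), `OVERRIDE_SIZE` (an optional build parameter for manual overrides) and the `spark-slaves` group name are hypothetical; Jenkins exposes build parameters to shell steps as environment variables.

```shell
#!/usr/bin/env bash
# Sketch of a Jenkins "Execute shell" step for one scheduled resize job.
AWS_CMD="${AWS_CMD:-aws}"
ASG_NAME="${ASG_NAME:-spark-slaves}"

jenkins_resize() {
  # A non-empty manual override wins over the job's scheduled size.
  local size="${OVERRIDE_SIZE:-${DESIRED_SIZE:-}}"
  case "$size" in
    ''|*[!0-9]*) echo "invalid or missing size: '$size'" >&2; return 1 ;;
  esac
  "$AWS_CMD" autoscaling set-desired-capacity \
    --auto-scaling-group-name "$ASG_NAME" \
    --desired-capacity "$size"
}
```

Treating an empty `OVERRIDE_SIZE` as "not set" matters in practice, because an untouched string parameter in Jenkins arrives as an empty string rather than being absent.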

- 18. Termination Protection
  - When scaling down, the ASG treats all nodes as equal termination candidates
  - We want to avoid killing instances with currently running jobs
  - To achieve this, we used a built-in ASG feature - termination protection (instance scale-in protection)
  - Any instance in the ASG can be set as protected, which prevents its termination when the cluster scales down

```shell
# Protect this instance while any Spark executor process is running on it
if [ $(ps -ef | grep executor | grep spark | wc -l) -ne 0 ]; then
  aws autoscaling set-instance-protection --protected-from-scale-in …
fi
```
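
Below is a fuller sketch of such a cron script, under a few assumptions. Executors are detected by their main class, `org.apache.spark.executor.CoarseGrainedExecutorBackend`. The instance ID and group name are passed in as arguments. Unlike the slide, the script also removes protection once the instance is idle; otherwise a protected instance would never become eligible for scale-in. `AWS_CMD` can be set to `echo` for a dry run.

```shell
#!/usr/bin/env bash
# Sketch: toggle ASG scale-in protection depending on running Spark executors.
AWS_CMD="${AWS_CMD:-aws}"

set_protection() {
  local instance_id="$1" asg_name="$2" flag
  # The [C] keeps grep from matching its own command line.
  if [ "$(ps -ef | grep -c '[C]oarseGrainedExecutorBackend')" -ne 0 ]; then
    flag="--protected-from-scale-in"     # executors running: keep this node
  else
    flag="--no-protected-from-scale-in"  # idle: allow scale-in again
  fi
  "$AWS_CMD" autoscaling set-instance-protection \
    --instance-ids "$instance_id" \
    --auto-scaling-group-name "$asg_name" \
    "$flag"
}
```

On an instance, the arguments could come from the instance metadata service and the AWS CLI, for example `ID=$(curl -s http://169.254.169.254/latest/meta-data/instance-id)` and `aws autoscaling describe-auto-scaling-instances --instance-ids "$ID" --query 'AutoScalingInstances[0].AutoScalingGroupName' --output text` (IMDSv1 shown; IMDSv2 additionally needs a session token). The instance's IAM role needs the `autoscaling:SetInstanceProtection` permission.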

- 19. Upstart Jobs for Spark
  - Every Spark component has an Upstart job that does the following:
    - Sets Spark niceness (process priority in CPU resource distribution)
    - Starts the required Spark component and ensures it stays running
      - The default Spark daemon script runs the component in the background
      - For Upstart, we modified the script to run it in the foreground

```shell
# Default spark-daemon.sh launch (background):
nohup nice -n "$SPARK_NICENESS"…&
# Modified for Upstart (foreground):
nice -n "$SPARK_NICENESS" ...
```
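
To show what such a job file could look like, here is a provisioning helper that writes one. The install path `/opt/spark`, the `runner` user, niceness 0 and the job names are assumptions. The parts that match the slide are `respawn`, which keeps the component running, and an `exec` line that starts it in the foreground through `bin/spark-class`, so Upstart can track the process itself.

```shell
#!/usr/bin/env bash
# Sketch: write an Upstart job that keeps one Spark component running in the foreground.
write_upstart_job() {
  local name="$1" class="$2" args="${3:-}" dest="${4:-/etc/init}"
  cat > "$dest/$name.conf" <<EOF
description "Spark $name"
start on runlevel [2345]
stop on runlevel [!2345]
# Restart the component if it exits or crashes
respawn
setuid runner
env SPARK_HOME=/opt/spark
env SPARK_NICENESS=0
# Foreground launch (no nohup, no &) so Upstart supervises the process
exec nice -n "\$SPARK_NICENESS" "\$SPARK_HOME/bin/spark-class" $class $args
EOF
}
```

Usage, with a hypothetical master host: `write_upstart_job spark-worker org.apache.spark.deploy.worker.Worker spark://spark-master:7077`, then `sudo start spark-worker`.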

- 20. NewRelic Monitoring
  - Cloud-based application and server monitoring
  - Supports multiple alert policies for different needs
    - Who to alert, and what triggers the alerts
  - Newly created instances are auto-assigned the default alert policy

- 21. Policy Assignment using AWS Lambda
  - Spark instances have their own policy in NewRelic
  - Each instance has to ask NewRelic to be reassigned to the new policy
    - Parallel reassignment requests may collide and override each other
  - Solution - during provisioning and shutdown, each instance does the following:
    - Puts a record in an AWS Kinesis stream containing its hostname and its desired NewRelic policy ID
    - The record triggers an AWS Lambda script that uses the NewRelic API to assign the given hostname to the given policy ID
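
Here is a sketch of the instance-side step, which publishes "move me to policy X" to Kinesis. The stream name and the JSON field names are hypothetical; the Lambda on the other end would have to read the same fields. It assumes AWS CLI v1 semantics for `--data`; with CLI v2, also pass `--cli-binary-format raw-in-base64-out`. Hostnames are assumed to contain no characters that need JSON escaping.

```shell
#!/usr/bin/env bash
# Sketch: request a NewRelic policy reassignment by writing a record to Kinesis.
AWS_CMD="${AWS_CMD:-aws}"
POLICY_STREAM="${POLICY_STREAM:-newrelic-policy-assignments}"

request_policy() {
  local host="$1" policy_id="$2"
  # Records sharing a partition key go to the same shard, so one host's
  # provisioning and shutdown requests stay in order.
  "$AWS_CMD" kinesis put-record \
    --stream-name "$POLICY_STREAM" \
    --partition-key "$host" \
    --data "{\"hostname\":\"$host\",\"policy_id\":\"$policy_id\"}"
}
```

Usage: `request_policy "$(hostname)" "$SPARK_POLICY_ID"` during provisioning, and the same call with the default policy's ID from a shutdown script (`SPARK_POLICY_ID` is hypothetical).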

- 22. Chef
  - Configuration management tool that can provision and configure instances
    - Describe an instance's state as code, and let Chef handle the rest
    - Typically works in server/client mode - the client updates every 30 minutes
    - Besides provisioning, also prevents configuration drift
  - A vast number of plugins and cookbooks - the sky's the limit!
  - Configures all the instances in our DC

- 23. Spark Instance Provisioning
  - Set up Spark
    - Set up prerequisites - users, directories, symlinks and jars
    - Download and extract the Spark package from S3
  - Configure the termination protection cron script
  - Configure Upstart conf files
  - Place Spark config files
  - Assign NewRelic policy
  - Add shutdown scripts
    - Delete the instance from the Chef database
    - Remove it from the NewRelic monitoring policy
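
Chef performs this in a recipe. As a plain-shell sketch of the "download and extract the Spark package from S3" step, assume a release-style tarball whose entries sit under one top-level directory. The S3 URL and install root are hypothetical, and `AWS_CMD` can be swapped for a stub in tests.

```shell
#!/usr/bin/env bash
# Sketch: fetch a Spark tarball from S3, unpack it and point a stable symlink at it.
AWS_CMD="${AWS_CMD:-aws}"

install_spark() {
  local s3_url="$1" install_root="$2"
  local tarball="$install_root/$(basename "$s3_url")"
  local dir
  mkdir -p "$install_root" || return 1
  "$AWS_CMD" s3 cp "$s3_url" "$tarball" || return 1
  # Release tarballs unpack into one versioned directory, e.g. spark-<version>-bin-<hadoop>/
  dir="$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)"
  [ -n "$dir" ] || return 1
  tar -xzf "$tarball" -C "$install_root" || return 1
  # Stable path for configs and Upstart jobs; -n replaces an existing symlink
  ln -sfn "$install_root/$dir" "$install_root/spark" || return 1
  rm -f "$tarball"
}
```

Usage, with a hypothetical bucket: `install_spark s3://my-bucket/spark/spark-1.6.1-bin-hadoop2.6.tgz /opt`. Upgrading then amounts to installing a new tarball and letting the symlink move, which fits the "upgrade to any version of Spark" goal from the earlier slide.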

- 24. Questions?
  - Alon Torres, DevOps: https://il.linkedin.com/in/alontorres
  - Romi Kuntsman, Senior Big Data Engineer: https://il.linkedin.com/in/romik
  - Stay in touch! Totango Engineering Technical Blog: http://labs.totango.com/

