ICS21 Workshop: Decoupling Compute from Memory, Storage & IO with OMI

- 1. Allan Cantle, 6/14/2021: Decoupling Compute from Memory, Storage & IO with OMI, An Open Source Hardware Initiative • Nomenclature: Read “Processor” as CPU and/or Accelerator

- 2. Overview • Why Decouple Compute from Memory, Storage & IO? • Top Down Systems Perspective and Introduction of OCP HPC Concepts • Introduction to the Open Memory Interface, OMI • Decoupling Compute with OCP OAM - HPC Module & OMI

- 3. Why Decouple Compute from Memory, Storage & IO? Rethinking Computing Architecture... • Because the Data is at the heart of Computing Architecture Today • Compute is rapidly becoming a Commodity • Intel i386 = 276K Transistors = $0.01 retail! • Power Efficiency and Cost are Today’s Primary Drivers • Distribute the Compute efficiently: It’s not the Center of Attention anymore • Compute therefore needs 1 simple interface for easy re-use everywhere

- 4. So, Let’s Redefine Computing Architecture: Back to First Principles with a Primary Focus on Power & Latency • In Computing: • Latency ≈ Time taken to Move Data • More Clock Cycles = More Latency = More Power • More Distance = More Latency = More Power • Hence Power can be seen as a proxy for Latency & Vice Versa • A Focus on Power & Latency will beget Performance • Heterogeneous Processors effectively do this for Specific algorithm types • For HPC, we now need to Focus our energy at the System Architecture Level

- 5. Cache Memory’s Bipolar Relationship with Power: Its Implementation Needs Rethinking • Cache is Very Good for Power Efficiency • Minimizes data movement on repetitively used data • Cache is Very Bad for Power Efficiency • Unnecessary Cache thrashing for data that’s touched once or not at all • Many layers of Cache = multiple copies burning more power • Conclusion • Hardware Caching must be efficiently managed at the application level
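
To make the thrashing argument concrete, here is a minimal Python sketch. It assumes a single fully associative LRU cache of four lines and counts only hits and misses, with no energy model. The `allocate` flag and `bypass` set are hypothetical stand-ins for an application-level "don't cache this" hint. They are not any specific processor's API.

```python
from collections import OrderedDict


class LRUCache:
    """Fully associative LRU cache that only counts hits and misses."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lines = OrderedDict()
        self.hits = 0
        self.misses = 0

    def access(self, addr: int, allocate: bool = True) -> None:
        if addr in self.lines:
            self.hits += 1
            self.lines.move_to_end(addr)  # mark as most recently used
            return
        self.misses += 1
        if allocate:  # allocate=False models a hypothetical "don't cache this" hint
            self.lines[addr] = None
            if len(self.lines) > self.capacity:
                self.lines.popitem(last=False)  # evict the least recently used line


def run(pattern, capacity=4, bypass=frozenset()):
    """Replay an address trace; addresses in `bypass` never allocate a line."""
    cache = LRUCache(capacity)
    for addr in pattern:
        cache.access(addr, allocate=addr not in bypass)
    return cache.hits, cache.misses


reused = [a for _ in range(10) for a in range(4)]  # small working set, touched 10x
streaming = list(range(100, 140))                  # 40 addresses, each touched once
mixed = [x for pair in zip(reused, streaming) for x in pair]

print(run(reused))                              # (36, 4)
print(run(streaming))                           # (0, 40)
print(run(mixed))                               # (0, 80): touched-once data evicts the reused set
print(run(mixed, bypass=frozenset(streaming)))  # (36, 44): not caching it restores the reuse
```

Interleaving touched-once data with a reused working set drives the hit count to zero, because every fill evicts something useful. Telling the cache not to allocate the streaming data, i.e. managing it at the application level, recovers all the reuse.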

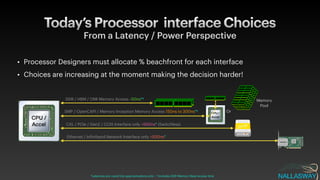

- 6. Today’s Processor Interface Choices: From a Latency / Power Perspective • Processor Designers must allocate a % of beachfront to each interface • Choices are increasing at the moment, making the decision harder! • DDR / HBM / OMI: Memory Access ~50ns*† • SMP / OpenCAPI / Memory Inception: Memory Access 150ns to 300ns*† • CXL / PCIe / GenZ / CCIX: Interface only, <500ns* (Switchless) • Ethernet / InfiniBand: Network Interface only, >500ns* • *Latencies are round trip approximations only; † Includes DDR Memory Read access time

- 7. Simplify to a Shared Memory Interface? Successfully Decouple Processors from Memory, Storage & IO • One Standardized, Low Latency, Low Power, Processor Interface • Graceful increase in latency and power beyond local memory • Processor Companies can focus on their core expertise and Support ALL Domain Specific Use Cases • [Figure: CPU / Accel devices with ~50ns*† shared memory links; 150ns - 300ns*†, <500ns* and >500ns* paths beyond local memory; CPU / Accel or Memory Pool] • *Latencies are round trip approximations only; † Includes Memory Read access time
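
A rough way to see the "graceful increase" is to take a weighted average over access tiers. This sketch assumes one representative latency per tier, taken from the approximations on slides 6 and 7 (the upper ends of the ranges). The access mixes are hypothetical. Queuing and contention are ignored.

```python
# Round-trip latency per access tier, in ns. The slides give approximations
# (~50ns, 150-300ns, <500ns); collapsing them to one number per tier is an assumption.
TIER_LATENCY_NS = {
    "local": 50,    # DDR / HBM / OMI memory access
    "remote": 300,  # SMP / OpenCAPI / memory inception (upper end of 150-300ns)
    "io": 500,      # CXL / PCIe / GenZ / CCIX, switchless (upper bound)
}


def blended_latency_ns(mix: dict) -> float:
    """Average round-trip latency for a given fraction of accesses per tier."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("access fractions must sum to 1")
    return sum(TIER_LATENCY_NS[tier] * fraction for tier, fraction in mix.items())


print(blended_latency_ns({"local": 1.0}))                              # 50.0
print(blended_latency_ns({"local": 0.9, "remote": 0.1}))               # ~75 ns
print(blended_latency_ns({"local": 0.9, "remote": 0.05, "io": 0.05}))  # ~85 ns
```

With most accesses served locally at ~50ns, moving a small fraction further out raises the average gradually rather than abruptly.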


- 9. Disaggregated Racks to Hyper-converged Chiplets: Classic Server Being Torn in Opposite Directions! • Disaggregated Racks: Software Composable; Power Ignored, Rack Interconnect >20pJ/bit; Rack Volume >53K Cubic Inches; Poor Latency • Classic Server Node: Baseline Physical Composability; Power Baseline, Node Interconnect 5 - 10pJ/bit; Node Volume >800 Cubic Inches; Baseline Latency • Hyper-converged Chiplets: Expensive Physical Composability; Power Optimized, Chiplet Interconnect <1pJ/bit; SIP Volume <1 Cubic Inch; Optimal Latency

- 10. An OCP OAM & EDSFF Inspired Solution? Bringing the Benefits of Disaggregation and Chiplets Together • Disaggregated Racks, Classic Server Node and Hyper-converged Chiplets compared as on slide 9 • OCP OAM-HPC Module Populated with E3.S, NIC 3.0 & Cable IO: Software & Physical Composability; Power Optimized, Flexible Chiplet Interconnect 1 - 2pJ/bit; Module Volume <150 Cubic Inches; Optimal Latency
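
The pJ/bit figures on slides 9 and 10 translate directly into energy per byte moved (energy = bits x energy per bit). This is a minimal sketch. Where the slides give a bound or a range, it assumes one representative value.

```python
# Energy per bit quoted for each interconnect tier (pJ/bit). Where the slides give
# a bound or range, a single representative value is assumed here.
TIER_PJ_PER_BIT = {
    "rack interconnect (>20)": 20.0,        # lower bound used
    "node interconnect (5-10)": 7.5,        # midpoint used
    "OAM-HPC flexible chiplet (1-2)": 1.5,  # midpoint used
    "chiplet interconnect (<1)": 1.0,       # upper bound used
}


def transfer_energy_joules(num_bytes: float, pj_per_bit: float) -> float:
    """Energy to move num_bytes at a given interconnect efficiency."""
    return num_bytes * 8 * pj_per_bit * 1e-12


ONE_GB = 1e9
for tier, pj in TIER_PJ_PER_BIT.items():
    print(f"{tier:32s} {transfer_energy_joules(ONE_GB, pj) * 1e3:6.1f} mJ per GB moved")
```

At these assumed values, moving 1 GB costs roughly 160 mJ across a rack, compared with roughly 12 mJ over the 1 - 2pJ/bit module-level interconnect.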

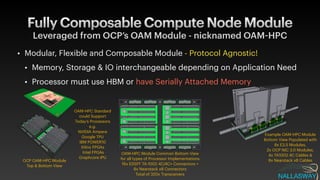

- 11. Fully Composable Compute Node Module: Leveraged from OCP’s OAM Module, nicknamed OAM-HPC • Modular, Flexible and Composable Module: Protocol Agnostic! • Memory, Storage & IO interchangeable depending on Application Need • Processor must use HBM or have Serially Attached Memory • [Figure: OCP OAM-HPC Module Top & Bottom View] • Common Bottom View for all types of Processor Implementations: 16x EDSFF TA-1002 4C/4C+ Connectors + 8x Nearstack x8 Connectors, a Total of 320x Transceivers • The OAM-HPC Standard could Support Today’s Processors, e.g. NVIDIA Ampere, Google TPU, IBM POWER10, Xilinx FPGAs, Intel FPGAs, Graphcore IPU • Example OAM-HPC Module Bottom View Populated with 8x E3.S Modules, 2x OCP NIC 3.0 Modules, 4x TA-1002 4C Cables & 8x Nearstack x8 Cables
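
The 320-transceiver total follows from the connector counts. This sketch assumes that each TA-1002 4C/4C+ connector carries a x16 link and each Nearstack x8 connector a x8 link, with one transceiver per lane.

```python
# Assumption: each EDSFF TA-1002 4C/4C+ connector carries a x16 link and each
# Nearstack x8 connector a x8 link, with one "transceiver" per lane.
CONNECTORS = {
    "EDSFF TA-1002 4C/4C+": {"count": 16, "lanes": 16},
    "Nearstack x8": {"count": 8, "lanes": 8},
}


def total_transceivers(connectors: dict) -> int:
    """Sum of connector count x lanes per connector."""
    return sum(c["count"] * c["lanes"] for c in connectors.values())


print(total_transceivers(CONNECTORS))  # 16*16 + 8*8 = 320
```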

- 12. OMI in E3.S: OMI Memory IO is finally going Serial! • Bringing Memory into the composable world of Storage and IO with E3.S • [Figure: DDR DIMM; OMI in DDIMM Format; CXL.mem in E3.S; GenZ in E3.S. Labels: Introduced in August 2019; Introduced in May 2021; Proposed in 2020; Introduced in 2020; Dual OMI x8 DDR4/5 Channel; CXL x16 DDR5 Channel; GenZ x16 DDR4 Channel]

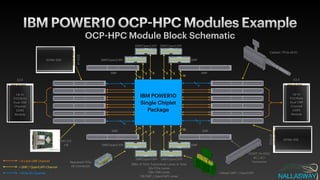

- 13. IBM POWER10 OCP-HPC Modules Example: OCP-HPC Module Block Schematic • 288x of 320x Transceiver Lanes in Total: 32x PCIe Lanes, 128x OMI Lanes, 128x SMP / OpenCAPI Lanes • IBM POWER10 Single Chiplet Package on EDSFF TA-1002 4C / 4C+ Connectors and Nearstack PCIe x8 Connectors • Populated with E3.S Up to 512GByte Dual OMI Channel DDR5 Modules, E3.S x4 NVMe SSDs, NIC 3.0 x16, Cabled PCIe x8 IO and Cabled SMP / OpenCAPI • Legend: 8 Lane OMI Channel; SMP / OpenCAPI Channel; PCIe-G5 Channel
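
The POWER10 lane budget can be checked the same way. This sketch takes the lane counts from the schematic and assumes the 8-lane OMI channel from its legend. It also uses the 64 GBytes/s per-channel peak derived on slide 18. The variable names are illustrative only.

```python
TOTAL_TRANSCEIVERS = 320   # OAM-HPC module total, as on this slide
POWER10_LANES = {"PCIe Gen5": 32, "OMI": 128, "SMP/OpenCAPI": 128}
OMI_LANES_PER_CHANNEL = 8  # "8 Lane OMI Channel" in the schematic legend
OMI_CHANNEL_GBYTES_S = 64  # 32Gb/s x 8 lanes x 2 (Tx + Rx) / 8 bits per byte

used = sum(POWER10_LANES.values())
spare = TOTAL_TRANSCEIVERS - used
omi_channels = POWER10_LANES["OMI"] // OMI_LANES_PER_CHANNEL
omi_peak_gbytes_s = omi_channels * OMI_CHANNEL_GBYTES_S

print(used, spare)                      # 288 32
print(omi_channels, omi_peak_gbytes_s)  # 16 1024
```

The 16 OMI channels match the 16-channel PHY area quoted for the POWER10 die on slide 18. At the slide 18 per-channel peak, they give about 1 TByte/s of memory bandwidth.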

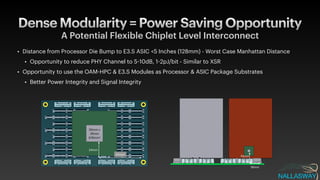

- 14. Dense Modularity = Power Saving Opportunity: A Potential Flexible Chiplet Level Interconnect • Distance from Processor Die Bump to E3.S ASIC <5 Inches (128mm), Worst Case Manhattan Distance • Opportunity to reduce the PHY Channel to 5 - 10dB, 1 - 2pJ/bit, similar to XSR • Opportunity to use the OAM-HPC & E3.S Modules as Processor & ASIC Package Substrates • Better Power Integrity and Signal Integrity • [Figure dimensions: 24mm, 67mm, 19mm, 18mm; 26mm x 26mm = 676mm²]
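
Link power is bandwidth times energy per bit. At 5 pJ/bit, this sketch reproduces the ~2.5W per 64 GBytes/s channel end quoted for today's 30dB OMI channel on slide 18. It also shows what the 1 - 2pJ/bit target would mean per channel and across the 80-channel theoretical maximum from slide 19. It is an idealized estimate that assumes PHY energy scales linearly with bits moved.

```python
def link_power_watts(bandwidth_gbytes_s: float, pj_per_bit: float) -> float:
    """Link power = bits per second x energy per bit."""
    return bandwidth_gbytes_s * 8e9 * pj_per_bit * 1e-12


OMI_CHANNEL_GBYTES_S = 64  # 32Gb/s x 8 lanes x 2 (Tx + Rx)
CHANNELS = 80              # theoretical maximum for a max-reticle die (slide 19)

for pj in (5.0, 2.0, 1.0):
    per_channel = link_power_watts(OMI_CHANNEL_GBYTES_S, pj)
    print(f"{pj:.0f} pJ/bit: {per_channel:.2f} W per channel end, "
          f"{per_channel * CHANNELS:.1f} W for {CHANNELS} channels")
```

Under these assumptions, dropping from 5 to 1 - 2pJ/bit cuts the 80-channel PHY budget from about 205W to roughly 41 - 82W.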


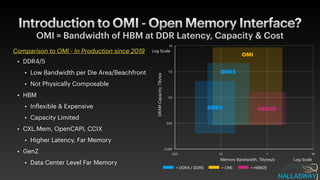

- 16. Introduction to OMI, the Open Memory Interface: OMI = Bandwidth of HBM at DDR Latency, Capacity & Cost • DDR4/5: Low Bandwidth per Die Area/Beachfront; Not Physically Composable • HBM: Inflexible & Expensive; Capacity Limited • CXL.Mem, OpenCAPI, CCIX: Higher Latency, Far Memory • GenZ: Data Center Level Far Memory • [Chart: DRAM Capacity (TBytes, log scale) vs Memory Bandwidth (TBytes/s, log scale), comparing DDR4, DDR5 and HBM2E to OMI, in production since 2019]

- 17. Memory Interface Comparison: OMI, the Ideal Processor Shared Memory Interface!

| Specification | LRDIMM DDR4 | DDR5 | HBM2E (8-High) | OMI |
|---|---|---|---|---|
| Protocol | Parallel | Parallel | Parallel | Serial |
| Signalling | Single-Ended | Single-Ended | Single-Ended | Differential |
| I/O Type | Duplex | Duplex | Simplex | Simplex |
| Lanes/Channel (Read/Write) | 64 | 32 | 512R/512W | 8R/8W |
| Lane Speed | 3,200MT/s | 6,400MT/s | 3,200MT/s | 32,000MT/s |
| Channel Bandwidth (R+W) | 25.6GBytes/s | 25.6GBytes/s | 400GBytes/s | 64GBytes/s |
| Latency | 41.5ns | ? | 60.4ns | 45.5ns |
| Driver Area/Channel | 7.8mm² | 3.9mm² | 11.4mm² | 2.2mm² |
| Bandwidth/mm² | 3.3GBytes/s/mm² | 6.6GBytes/s/mm² | 35GBytes/s/mm² | 33.9GBytes/s/mm² |
| Max Capacity/Channel | 64GB | 256GB | 16GB | 256GB |
| Connection | Multi Drop | Multi Drop | Point-to-Point | Point-to-Point |
| Data Resilience | Parity | Parity | Parity | CRC |

Similar Bandwidth/mm² provides an opportunity for an HBM Memory with an OMI Interface on its logic layer, bringing Flexibility and Capacity options to Processors with HBM Interfaces!
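
The bandwidth rows follow from lanes x lane speed. This minimal sketch recomputes them from the table's own lane counts, lane speeds and driver areas. HBM2E works out to 409.6 GBytes/s, which the table rounds to 400. OMI at 2.2mm² gives ~29.1 GBytes/s/mm². The table's 33.9 GBytes/s/mm² therefore corresponds to the 1.89mm² per-channel PHY area measured on the POWER10 die (slide 18).

```python
# Per-channel values from the comparison table:
# (data lanes per channel, lane speed in MT/s, driver area per channel in mm^2)
INTERFACES = {
    "LRDIMM DDR4": (64, 3_200, 7.8),
    "DDR5": (32, 6_400, 3.9),
    "HBM2E (8-High)": (1_024, 3_200, 11.4),  # 512 read + 512 write lanes
    "OMI": (16, 32_000, 2.2),                # 8 read + 8 write lanes
}


def channel_bandwidth_gbytes_s(lanes: int, lane_mts: int) -> float:
    """Peak bandwidth: lanes x transfers/s x 1 bit per transfer / 8 bits per byte."""
    return lanes * lane_mts * 1e6 / 8 / 1e9


for name, (lanes, mts, area_mm2) in INTERFACES.items():
    bw = channel_bandwidth_gbytes_s(lanes, mts)
    print(f"{name:15s} {bw:6.1f} GB/s  {bw / area_mm2:5.1f} GB/s/mm^2")
```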

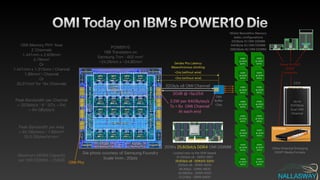

- 18. OMI Today on IBM’s POWER10 Die • POWER10: 18B Transistors on Samsung 7nm, 602mm² (~24.26mm x ~24.82mm); Die photo courtesy of Samsung Foundry • OMI Memory PHY Area: 2 Channels = 1.441mm x 2.626mm = 3.78mm², or 1.441mm x 1.313mm = 1.89mm² per Channel, or 30.27mm² for 16x Channels • Peak Bandwidth per Channel = 32Gbit/s x 8 x 2 (Tx + Rx) = 64 GBytes/s • Peak Bandwidth per Area = 64 GBytes/s / 1.89mm² = 33.9 GBytes/s/mm² • Maximum DRAM Capacity per OMI DDIMM = 256GB • 32Gb/s x8 OMI Channel: 30dB @ <5pJ/bit, 2.5W per 64GBytes/s Tx + Rx OMI Channel at each end; SerDes PHY Latency <2ns (without wire) at each end; Mesochronous clocking • OMI Buffer Chip driving DDR5 @ 4000 MTPS, 16Gbit Monolithic Memory • JEDEC configurations: 32GByte 1U OMI DDIMM, 64GByte 2U OMI DDIMM, 256GByte 4U OMI DDIMM, all on the Same TA-1002 EDSFF Connector • 2019’s 25.6Gbit/s DDR4 OMI DDIMM • OMI Lane Speed has a Locked Ratio to the DDR Speed: 21.33Gb/s x8 = DDR4-2667; 25.6Gb/s x8 = DDR4/5-3200; 32Gb/s x8 = DDR5-4000; 38.4Gb/s = DDR5-4800; 42.66Gb/s = DDR5-5333; 51.2Gb/s = DDR5-6400 • Other Potential Emerging EDSFF Media Formats: E3.S Up to 512GByte Dual OMI Channel
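
The locked ratio in the lane-speed list is 8:1: each OMI lane runs at 8 x the DDR transfer rate. A x8 link therefore carries 64 x the DDR rate per direction, the same data rate as a 64-bit DDR bus at that speed. This small sketch shows that relationship and reproduces the per-channel and per-area figures above, assuming the 1.89mm² POWER10 PHY area. The printed lane rates match the slide to within rounding (e.g. 21.34 vs 21.33Gb/s for DDR4-2667).

```python
OMI_LANES = 8         # x8 OMI channel
DDR_TO_OMI_RATIO = 8  # lane rate in Gb/s = DDR MT/s x 8 / 1000 (locked ratio on the slide)
PHY_AREA_MM2 = 1.89   # POWER10 OMI PHY area per channel


def omi_lane_rate_gbps(ddr_mts: float) -> float:
    return ddr_mts * DDR_TO_OMI_RATIO / 1000


def omi_channel_gbytes_s(lane_gbps: float) -> float:
    # x8 lanes per direction, Tx + Rx counted together, 8 bits per byte
    return lane_gbps * OMI_LANES * 2 / 8


for ddr in (2667, 3200, 4000, 4800, 5333, 6400):
    lane = omi_lane_rate_gbps(ddr)
    print(f"DDR-{ddr}: {lane:.2f} Gb/s per lane, "
          f"{omi_channel_gbytes_s(lane):.1f} GB/s per channel")

print(f"{omi_channel_gbytes_s(32) / PHY_AREA_MM2:.1f} GB/s/mm^2")  # 33.9
```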

- 19. OMI Bandwidth vs SP FLOPS: OMI Helping to Address Memory Bound Applications • Tailoring OPS : Bytes/s : Bytes Capacity to Application Needs • NVIDIA Ampere Maximum Reticle Size Die @ 7nm: 826mm² (~32.18mm x ~25.66mm), ~30 SP TFLOPS; Theoretical Maximum of 80 OMI Channels, OMI Bandwidth = 5.1 TBytes/s • Smaller Die: 117mm² (10.8mm x 10.8mm), 2 SP TFLOPS; 28 OMI Channels = 1.8 TBytes/s • Die Size shrink = 7x, OMI Bandwidth reduction = 2.8x, SP FLOPS reduction = 15x • (Dies drawn to scale)
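
The slide's OPS : Bytes/s balance can be expressed as peak OMI bytes per single-precision FLOP. This sketch assumes 64 GBytes/s per OMI channel (slide 18) and the TFLOPS figures quoted for the two dies.

```python
OMI_CHANNEL_GBYTES_S = 64  # per x8 OMI channel, Tx + Rx


def omi_bandwidth_tbytes_s(channels: int) -> float:
    return channels * OMI_CHANNEL_GBYTES_S / 1000


def bytes_per_flop(channels: int, sp_tflops: float) -> float:
    """Peak OMI bytes/s available per single-precision FLOP/s."""
    return omi_bandwidth_tbytes_s(channels) / sp_tflops


large = bytes_per_flop(80, 30)  # 826 mm^2 max-reticle die: 80 channels, ~30 SP TFLOPS
small = bytes_per_flop(28, 2)   # 117 mm^2 die: 28 channels, 2 SP TFLOPS
print(f"{omi_bandwidth_tbytes_s(80):.2f} TB/s, {large:.2f} B/FLOP")  # 5.12 TB/s, 0.17 B/FLOP
print(f"{omi_bandwidth_tbytes_s(28):.2f} TB/s, {small:.2f} B/FLOP")  # 1.79 TB/s, 0.90 B/FLOP
```

Shrinking the die cuts FLOPS 15x but OMI bandwidth only 2.8x, so the smaller die offers roughly 5x more bytes per FLOP. That is the kind of balance a memory-bound application would favor.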


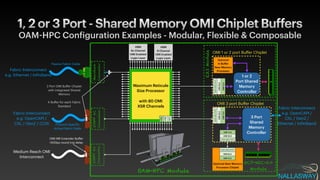

- 21. OAM-HPC Configuration Examples: Modular, Flexible & Composable 1, 2 or 3 Port Shared Memory OMI Chiplet Buffers • OAM-HPC Module: Maximum Reticle Size Processor with 80 OMI XSR Channels • HBM with 8 Channel OMI Enabled Logic Layer • EDSFF 4C Connector: Medium Reach OMI Interconnect through an OMI MR Extender Buffer (<500ps round trip delay) • E3.S Module: OMI 1 or 2 Port Buffer Chiplet (XSR-NRZ PHY, OMI DLX, OMI TLX) with a 1 or 2 Port Shared Memory Controller and Optional In-Buffer Near Memory Processor • Nearstack Connector: Fabric Interconnect, e.g. Ethernet / InfiniBand, over a Passive Fabric Cable • EDSFF 4C Connector: Fabric Interconnect, e.g. OpenCAPI / CXL / GenZ / CCIX, over a Protocol Specific Active Fabric Cable with a 2 Port OMI Buffer Chiplet with Integrated Shared Memory (A Buffer for each Fabric Standard: XSR, DLX, TLX) • OCP-NIC-3.0 Module: OMI 3 Port Buffer Chiplet with a 3 Port Shared Memory Controller and Optional Near Memory Processor Chiplet; Fabric Interconnect, e.g. OpenCAPI / CXL / GenZ / Ethernet / InfiniBand

- 22. OCP Accelerator Infrastructure (OAI) Chassis

- 23. 8x Cold Plate Mounted OCP OAM-HPC Modules Pluggable into OCP OAI Chassis • Summary • Redefine Computing Architecture • With a Focus on Power and Latency • Shared Memory Centric Architecture • Leverage the Open Memory Interface, OMI • Dense OCP Modular Platform Approach

- 24. Interested? How to Get Involved: From Silicon Startups to Open Hardware Enthusiasts Alike • Step 1 - Join the OpenCAPI Consortium & OCP HPC Sub-Project • Step 2 - Replace DDR with Standard OMI interfaces on your next processor design • Step 3 - Add low power OMI PHYs to spare beachfront on your Processors • Step 4 - Build OAM-HPC Modules around your large Processor Devices • Step 5 - Help the community build Specific 2 & 3 port OMI chiplet buffers • Step 6 - Help the community build OMI Buffer enabled E3.S & NIC 3.0 modules, etc.

- 25. Questions? • Contact me at [email protected] • Join the OpenCAPI Consortium at https://opencapi.org • Join the OCP HPC Sub-Project Workgroup at https://www.opencompute.org/wiki/HPC