01 introduction fundamentals_of_parallelism_and_code_optimization-www.astek.ir

- 1. [email protected] مقدمه Fundamentals of Parallelism & Code Optimization (C/C++,Fortran) for Data Science with MPI در کدها سازی بهینه و سازی موازی مبانی زبانهای C/C++,Fortranدر حجیم هاي داده برايMPI Amin Nezarat (Ph.D.) Assistant Professor at Payame Noor University [email protected] www.astek.ir - www.hpclab.ir

- 2. عناوین دوره 1.های پردازنده معماری با آشنایی اینتل 2.Vectorizationمعماری در اینتل کامپایلرهای 3.نویسی برنامه با کار و آشنایی درOpenMP 4.با داده تبادل قواعد و اصول حافظه(Memory Traffic)

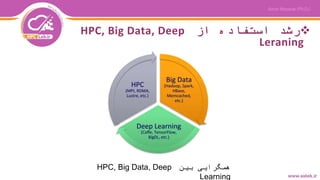

- 4. از استفاده رشدHPC, Big Data, Deep Leraning بین همگراییHPC, Big Data, Deep Learning

- 5. MapReduce, MPI, Spark, etc. Distributed Data Processing is Central to Addressing the Big Data Challenge Source: blog.mayflower.de

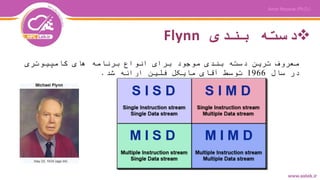

- 7. بندی دستهFlynn کامپیوتری های برنامه انواع برای موجود بندی دسته ترین معروف سال در1966شد ارائه فلین مایکل آقای توسط.

- 8. Single Instruction, Single Data (SISD) •A serial (non-parallel) computer •Single Instruction: Only one instruction stream is being acted on by the CPU during any one clock cycle •Single Data: Only one data stream is being used as input during any one clock cycle •Deterministic execution •This is the oldest type of computer •Examples: older generation mainframes, minicomputers, workstations and single processor/core PCs.

- 9. Single Instruction, Multiple Data (SIMD) • A type of parallel computer • Single Instruction: All processing units execute the same instruction at any given clock cycle • Multiple Data: Each processing unit can operate on a different data element • Two varieties: Processor Arrays and Vector Pipelines • Examples: • Processor Arrays: Thinking Machines CM-2, MasPar MP-1 & MP-2, ILLIAC IV • Vector Pipelines: IBM 9000, Cray X-MP, Y-MP & C90, Fujitsu VP, NEC SX-2, Hitachi S820, ETA10 • Most modern computers, particularly those with graphics processor units (GPUs) employ SIMD instructions and execution units.

- 10. Multiple Instruction, Single Data (MISD) •A type of parallel computer •Multiple Instruction: Each processing unit operates on the data independently via separate instruction streams. •Single Data: A single data stream is fed into multiple processing units. •Few (if any) actual examples of this class of parallel computer have ever existed. •Some conceivable uses might be: •multiple frequency filters operating on a single signal stream •multiple cryptography algorithms attempting to crack a single coded message.

- 11. Multiple Instruction, Multiple Data (MIMD) • A type of parallel computer • Multiple Instruction: Every processor may be executing a different instruction stream • Multiple Data: Every processor may be working with a different data stream • Execution can be synchronous or asynchronous, deterministic or non-deterministic • Currently, the most common type of parallel computer - most modern supercomputers fall into this category. • Examples: most current supercomputers, networked parallel computer clusters and "grids", multi-processor SMP computers, multi-core PCs. • Note: many MIMD architectures also include SIMD execution sub-components

- 12. زمان گذر در پردازش

- 18. Please do not ask me to explain the equations. Thanks. Pictures courtesy of NASA and Wikipedia. Some have models but they want data

- 19. Please do not ask me to explain the equations. Thanks. Pictures courtesy of NASA and Wikipedia. Others have data but they want models

- 20. The Landscape of Parallel Computing Research: A View from Berkeley Krste Asanović et al EECS Department University of California, Berkeley Technical Report No. UCB/EECS-2006-183 December 18, 2006 February 2015 Compute intensive (HPC Dwarfs) Dense and Sparse Linear Algebrae, Spectral Methods, N-Body Methods, Structured and Unstructured Grids, MonteCarlo Data intensive (BigData Ogres) PageRank, Collaborative Filtering, Linear Classifers, Outlier Detection, Clustering, Latent Dirichlet Allocation, Probabilistic Latent Semantic Indexing, Singular Value Decomposition, Multidimentional Scaling, Graphs Algorithms, Neural Networks, Global Optimisation, Agents, Geographical Information Systems Fox, G et al Towards a comprehensive set of big data benchmarks. In: BigData and High Performance Computing, vol 26, p. 47, I did not invent that. Pictures courtesy of Disney and DreamWorks.

- 21. Compute intensive (HPC) Clusters This is caricatural a little inaccurate but it saves me tons of explanation. Pics (c) Disney and Dreamworks Data intensive (BigData) Cloud

- 22. Compute intensive (HPC) Clusters Close to the metal High-end/Dedicated hardware Exclusive access to resources This is caricatural a little inaccurate but it saves me tons of explanation. Pics (c) Disney and Dreamworks Data intensive (BigData) Cloud Instant availability Self-service or Ready-made Elasticity, fault tolerance

- 23. The word ‘cloudster’ does not exist. I made it up. Not related to shoes. Pics (c) Disney and Dreamworks Cloudster(?) Compute intensive (HPC) Data intensive (BigData)

- 24. Bring HPC, Big Data processing, and Deep Learning into a “convergent trajectory”! What are themajor bottlenecks in current Big Data processing and Deep Learning middleware (e.g.Hadoop, Spark)? Can the bottlenecks be alleviated with new designs by taking advantage of HPC technologies? Can RDMA-enabled high-performance interconnects benefit BigData processing and Deep Learning? Can HPC Clusters with high-performance storage systems (e.g. SSD, parallel file systems) benefit Big Data and DeepLearning applications? How much performance benefits can be achieved through enhanced designs? How to design benchmarks for evaluating the performance of BigData and Deep Learning middleware on HPC clusters? 10 How Can HPC Clusters with High- Performance Interconnect and Storage Architectures Benefit Big Data and Deep Learning Applications?

- 25. Can We Run Big Data and Deep Learning Jobs on Existing HPC Infrastructure?

- 26. Can We Run Big Data and Deep Learning Jobs on Existing HPC Infrastructure?

- 27. Can We Run Big Data and Deep Learning Jobs on Existing HPC Infrastructure?

- 28. Can We Run Big Data and Deep Learning Jobs on Existing HPC Infrastructure?

- 29. Designing Communication and I/O Libraries for BigData Systems: Challenges Big Data Middleware Networking Technologies (InfiniBand, 1/10/40/100 GigE and Intelligent NICs) StorageTechnologies (HDD, SSD, NVM, and NVMe- SSD) Programming Models (Sockets) Applications Commodity Computing System Architectures (Multi- and Many-core architectures and accelerators) RDMA? Communication and I/O Library Point-to-Point Communication QoS & FaultTolerance Threaded Models and Synchronization Performance TuningI/O and File Systems Virtualization (SR-IOV) Benchmarks Upper level ( MapReduce, Spark,Intel DAAL, gRPC/TensorFlow, and Memcached)Changes?

- 30. نویسی برنامه های الیه موازی

- 31. سازی موازی مختلف روشهای حافظه بر مبتنی •اشتراکی حافظهShared Memory های پردازنده(Core or Processor)یک از کامپیوتر یک مختلف کنند می استفاده داده نوشتن و خواندن برای مشترک حافظه. مثال:OpenMP •شده توزیع حافظهDistributed Memory برای و دارند را خود اختصاصی حافظه مختلف سیستمهای ارسال از سیستمها پردازنده بین داده تبادل/پیام دریافت شود می استفاده. مثال:MPI •ترکیبی حافظهHybrid Memory گیرد می قرار استفاده مورد باال روش دو ترکیب مثال:PGAS

- 32. اشتراکی حافظهShared Memory •است سازی موازی در روش ترین مرسوم •از اما هستند مستقل مختلف های پردازنده می داده تبادل هم با مشترک حافظه یک طریق کنند •یک توسط حافظه در داده یک تغییرات پردازنده سایر برای مشاهده قابل پردازنده باشد می ها •سطح و نوع نظر از مشترک حافظه ماشینهادی دسته دو به دسترسیUMAوNUMAمی تقسیم شوند: •های حافظهUMAبا عمدتاSMP(Symmetric Multiprocessor)شوند می شناخته •ماشینهای درNUMAبا ها پردازنده تمامی سایر حافظه به توانند می فیزیکی اتصاالت

- 33. شده توزیع حافظهDistributed Memory •سیستمهایباحافظهمشترکرابههم متصلمیکند •نیازبهاتصاالتشبکهایبهگرههای پردازشیوجوددارد •مثال:Ethernet, Infiniband, Omni-Path, Myrinet •هرپردازندهازحافظهاختصاصیخود استفادهمیکندودرصورتنیازبه تبادلدادهباپردازنده،دیگراز طریقشبکهتبادلصورتمیگیرد •ازنظرپردازندهوحافظهمقیاسپذیر استومیتوانبهسرعتتعدادگرههای محاسباتیوحافظهمتعلقبههریکرا افزایش/کاهشداد

- 34. ترکیبیHybrid •و اشتراکی حافظه روش دو مدل این در شوند می ترکیب هم با شده توزیع •مدل هم آنها ترین رایجData Parallelاست و شده توزیع صورت به ها داده که در یکپارچه دهی آدرس با اشتراکیRAM گیرند می قرار محاسباتی های گره کلیه •مثال:PGAS(Partitioned Global Address Space) •Coarray Fortran, Unified Parallel C(UPC) •Global Array, X10, Chapel •است یکپارچه حافظه به دهی آدرس •و مبداء های پردازنده که صورتی در باشند سیستم یک در مقصد اشتراکی حافظه از(OpenMP)در و

- 35. نرم توان می چطور سریعتر را افزارها کرد؟

- 37. مسئله ها پردازنده سرعت PowerWall Overclocking? Free cooling? سرعت با های پردازنده برای مرسوم کنندگی خنک حلهای راه هستند گران یا و نبوده عملی باال

- 38. سازی موازی روشهای انواع مرسوم Bit Level Parallelism Instruction Level Parallelism(ILP) .1(Explicitly Parallel Instruction Computing (EPIC .2Out of Order Execution/Register Renaming .3Speculative Execution .4Vectorization Threading/Multi-threading

- 40. روشInstruction Level Parallelism(ILP) دراینروشکامپایلرسعیمیکنددستوراتیراکهمیتوانندبایکدیگراجرا شوندووابستگیدادهایبهیکدیگرندارنددریکCycleیاClockاجرانماید. •Explicitly Parallel Instruction Computing (EPIC) دریک در روش اینCycleتا دو بین16چه هر که است اجرا قابل دسترالعمل سازی موازی از باالتری درصد باشد کمتر هم به غیروابسته دستورات تعداد کند می ایجاد را. •Out of Order Execution/Register Renaming یک در بیشتری دستورات اجرای امکان روش این درCycleبا که دارد وجود یافت دست حالت این به توان می ها ثبات نام تغییر. •Speculative Execution فرا آن اجرای زمان اینکه از نظر صرف دستورات از بخشی یا تمام اجرای خیر یا است رسیده. •Vectorization مدل از خاص حاالت از یکی این(SIMD (Simple Instruction Multiple DataاقایFlynn کار همزمان صورت به داده چندین روی تواند می دستور یک آن در که است

- 41. و الین پایپ روشهای در تعادلILPدر سازی موازی تداخل ،باید می افزایش ها الین پایپ تعداد که زمانی شود می بیشتر دستورالعملها بین

- 42. اجرای در تعادلSuperscalarوILPدر سازی موازی مستقل دستورالعملهای برای خودکار جستجوی است بیشتری منابع نیازمند

- 43. روش در حافظه مسئلهOut-of- Order کاربردی های برنامه در ها داده به دسترسی الگوی بوسیله است شده محدود

- 44. سازی موازی:و ها هسته بردارها سازی برداری و زیاد هسته: خودکار صورت به نه اما محدودیت بدون رشد فرصت کند می فراهم را

- 45. به رو مسیر در سازی موازی جلوست انرژی مصرف مسئله Power wall دستورات سطح در سازی موازی مسئله ILP wall مسئله حافظه Memory wall کند پیدا ادامه باید نمایش!! •سازی موازی طریق از افزارها سخت هستند تکامل حال در •بماند عقب باید افزار نرم!

- 46. اینتل معماری

- 47. مبتنی محاسباتی های پلترفم اینتل معماری بر

- 48. Intel Xeon Processors ▷ 1-, 2-, 4-way ▷ General-purpose ▷ Highly parallel (44 cores*) ▷ Resource-rich ▷ Forgiving performance ▷ Theor. ∼ 1.0 TFLOP/s in DP* ▷ Meas. ∼ 154 GB/s bandwidth* * 2-way Intel Xeon processor, Broadwell architec- ture (2016), top-of-the-line (e.g., E5-2699 V4)

- 49. Intel Xeon Phi Processors (1st Gen) ▷ PCIe add-in card ▷ Specialized for computing ▷ Highly-parallel (61 cores*) ▷ Balanced for compute ▷ Less forgiving ▷ Theor. ∼ 1.2 TFLOP/s in DP* ▷ Meas. ∼ 176 GB/s bandwidth* * Intel Xeon Phi coprocessor, Knighs Corner ar- chitecture (2012), top-of-the-line (e.g., 7120P)

- 50. Intel Xeon Phi Processors (2nd Gen) ▷ Bootable or PCIe add-in card ▷ Specialized for computing ▷ Highly-parallel (72 cores*) ▷ Balanced for compute ▷ Less forgiving than Xeon ▷ Theor. ∼ 3.0 TFLOP/s in DP* ▷ Meas. ∼ 490 GB/s bandwidth* * Intel Xeon Phi processor, Knighs Landing ar- chitecture (2016), top-of-the-line (e.g., 7290P)

- 51. مدرن کدهای

- 52. همه برای کد یک ها پلتفرم

- 53. و علم در محاسبات و رایانش مهندسی

- 54. به کسانی چهHPCنیاز دارند؟ عنوان با موازی رایانش تاریخی شکل به"the high end of computing" شود می شناختهدر سخت مسائل سازی مدل و حل برای آن از و شود می استفاده علم و مهندسی های حوزه از بسیاری. • Atmosphere, Earth, Environment • Physics - applied, nuclear, particle, condensed matter, high pressure, fusion, photonics • Bioscience, Biotechnology, Genetics • Chemistry, Molecular Sciences • Geology, Seismology • Mechanical Engineering – from prosthetics to spacecraft • Electrical Engineering, Circuit Design, Microelectronics • Computer Science, Mathematics • Defense, Weapons

- 55. به کسانی چهHPCدارند؟ نیاز -ادامه تحلیلهای و تجزیه از بسیاری برای نیز تجاری های حوزه امروزه اند شده محتاج بستر این به خود.باعث ها داده حجم زیاد رشد شود حس بیشتر پردازش به نیاز که است شده.حوزه این جمله از کرد اشاره زیر موارد به توان می ها:• "Big Data", databases, data mining • Oil exploration • Web search engines, web based business services • Medical imaging and diagnosis • Pharmaceutical design • Financial and economic modeling • Management of national and multi-national corporations • Advanced graphics and virtual reality, particularly in the entertainment industry • Networked video and multi-media technologies • Collaborative work environments

- 57. Motivation of Iterative MapReduce Input Output map Map-Only Input map reduce MapReduce Input map reduce iterations Iterative MapReduce Pij MPI and Point-to- Point Sequential Input Output map MapReduce Classic Parallel Runtimes (MPI) Data Centered, QoS Efficient and Proven techniques Expand the Applicability of MapReduce to more classes of Applications

- 58. Parallelism Model Architecture Shuffle M M M M Collective Communication M M M M R R MapCollective ModelMapReduce Model YARN MapReduce V2 Harp MapReduce Applications MapCollective ApplicationsApplication Framework Resource Manager Harp is an open-source project developed at Indiana University, it has: • MPI-like collective communication operations that are highly optimized for big data problems. • Harp has efficient and innovative computation models for different machine learning problems. [3] J. Ekanayake et. al, “Twister: A Runtime for Iterative MapReduce”, in Proceedings of the 1st International Workshop on MapReduce and its Applications of ACM HPDC 2010 conference. [4] T. Gunarathne et. al, “Portable Parallel Programming on Cloud and HPC: Scientific Applications of Twister4Azure”, in Proceedings of 4th IEEE International Conference on Utility and Cloud Computing (UCC 2011). [5] B. Zhang et. al, “Harp: Collective Communication on Hadoop,” in Proceedings of IEEE International Conference on Cloud Engineering (IC2E 2015).

- 59. Intel® DAAL is an open-source project that provides: • Algorithms Kernels to Users • Batch Mode (Single Node) • Distributed Mode (multi nodes) • Streaming Mode (single node) • Data Management & APIs to Developers • Data structure, e.g., Table, Map, etc. • HPC Kernels and Tools: MKL, TBB, etc. • Hardware Support: Compiler • DAAL used inside the container Data management Algorithms Services Data sources Data dictionaries Data model Numeric tables and matrices Compression Analysis Training Prediction Memory allocation Error handling Collections Shared pointers

- 60. •High Level Usability: Python Interface, well documented and packaged modules •Middle Level Data-Centric Abstractions: Computation Model and optimized communication patterns •Low Level optimized for Performance: HPC kernels Intel® DAAL and advanced hardware platforms such as Xeon and Xeon Phi Harp-DAAL Big Model Paramet ers Big Model Paramet ers HPC-ABDS is Cloud-HPC interoperable software with the performance of HPC (High Performance Computing) and the rich functionality of the commodity Apache Big Data Stack. This concept is illustrated by Harp-DAAL. • High Level Usability: Python Interface, well documented and packaged modules • Middle Level Data-Centric Abstractions: Computation Model and optimized communication patterns • Low Level optimized for Performance: HPC kernels Intel® DAAL and advanced hardware platforms such as Xeon and Xeon Phi

- 62. • Datasets: 5 million points, 10 thousand centroids, 10 feature dimensions • 10 to 20 nodes of Intel KNL7250 processors • Harp-DAAL has 15x speedups over Spark MLlib • Datasets: 500K or 1 million data points of feature dimension 300 • Running on single KNL 7250 (Harp- DAAL) vs. single K80 GPU (PyTorch) • Harp-DAAL achieves 3x to 6x speedups • Datasets: Twitter with 44 million vertices, 2 billion edges, subgraph templates of 10 to 12 vertices • 25 nodes of Intel Xeon E5 2670 • Harp-DAAL has 2x to 5x speedups over state-of-the-art MPI-Fascia solution

- 63. Source codes became available on Github in February, 2017. • Harp-DAAL follows the same standard of DAAL’s original codes • Twelve Applications Harp-DAAL Kmeans Harp-DAAL MF-SGD Harp-DAAL MF-ALS Harp-DAAL SVD Harp-DAAL PCA Harp-DAAL Neural Networks Harp-DAAL Naïve Bayes Harp-DAAL Linear Regression Harp-DAAL Ridge Regression Harp-DAAL QR Decomposition Harp-DAAL Low Order Moments Harp-DAAL Covariance Harp-DAAL: Prototype and Production Code Available at https://dsc-spidal.github.io/harp

- 64. Algorithm Category Applications Features Computation Model Collective Communication K-means Clustering Most scientific domain Vectors AllReduce allreduce, regroup+allgather, broadcast+reduce, push+pull Rotation rotate Multi-class Logistic Regression Classification Most scientific domain Vectors, words Rotation regroup, rotate, allgather Random Forests Classification Most scientific domain Vectors AllReduce allreduce Support Vector Machine Classification, Regression Most scientific domain Vectors AllReduce allgather Neural Networks Classification Image processing, voice recognition Vectors AllReduce allreduce Latent Dirichlet Allocation Structure learning (Latent topic model) Text mining, Bioinformatics, Image Processing Sparse vectors; Bag of words Rotation rotate, allreduce Matrix Factorization Structure learning (Matrix completion) Recommender system Irregular sparse Matrix; Dense model vectors Rotation rotate Multi-Dimensional Scaling Dimension reduction Visualization and nonlinear identification of principal components Vectors AllReduce allgarther, allreduce Social network analysis, data mining, Scalable Algorithms implemented using Harp

- 65. Taxonomy for Machine Learning Algorithms Optimization and related issues • Task level only can't capture the traits of computation • Model is the key for iterative algorithms. The structure (e.g. vectors, matrix, tree, matrices) and size are critical for performance • Solver has specific computation and communication pattern

- 66. Computation Models B. Zhang, B. Peng, and J. Qiu, “Model-centric computation abstractions in machine learning applications,” in Proceedings of the 3rd ACM SIGMOD Workshop on Algorithms and Systems for MapReduce and Beyond, BeyondMR@SIGMOD 2016 Data and Model are typically both parallelized over same processes. Computation involves iterative interaction between data and current model to produce new model. Data Immutable Model changes

- 67. (A) Locking • Once a process trains a data item, it locks the related model parameters and prevents other processes from accessing them. When the related model parameters are updated, the process unlocks the parameters. Thus the model parameters used in local computation is always the latest. (C) AllReduce • Each process first fetches all the model parameters required by local computation. When the local computation is completed, modifications of the local model from all processes are gathered to update the model. Harp Computing Models Inter-node (Container) (B) Rotation • Each process first takes a part of the shared model and performs training. Afterwards, the model is shifted between processes. Through model rotation, each model parameters are updated by one process at a time so that the model is consistent. (D) Asynchronous • Each process independently fetches related model parameters, performs local computation, and returns model modifications. Unlike A, workers are allowed to fetch or update the same model parameters in parallel. In contrast to B and C, there is no synchronization barrier.

- 68. Machine Learning Application Machine Learning Algorithm Computation Model Programming Interface Implementation Parallelization of Machine Learning Applications

- 69. براییادگیری می طی را مسیری چه کنید؟

- 70. درس2:معماری در سازی برداری پایه اینتل های بردارهامعماری درSIMD(Simple Instruction Multiple Data)

- 71. درس3:چندنخی نویسی برنامه درOpenMP های هستهمعماری بر مبتنیMIMD(Multiple Instruction Multiple Data

- 72. درس4:اصول و مبانی حافظه ترافیک Cacheهابخشیده تسهیل را دادها از مجدد استفاده اند RAMهاهای داده برایStreamاند شده بهینه ی

- 73. حافظه به دسترسی سرعت تفاوت نوع نظر از •عملیات کلی طور بهI/Oنویسی برنامه در کارایی کننده مهار یک عنوان به شود می تلقی موازی •خواهد کارایی بیشتر کاهش باعث شود انجام شبکه طریق از عملیات این اگر شود می تبدیل گلوگاه یک به خود و شد •اند شده پیشنهاد زیر راهکارهای مسئله این حل برای: (aمثل بیشتر سرعت با های حافظه از بیشتر استفادهRAMعملیات اجرای و آنها در محاسباتی (bموازی نوشتن و خواندن امکان که موازی های سیستم فایل از استفاده از/سازند می میسر را فایلها در: oGPFS: General Parallel File System (IBM) oLustre: for Linux clusters (Intel) oHDFS: Hadoop Distributed File System (Apache) oPanFS: Panasas ActiveScale File System for Linux clusters (Panasas, Inc.) oAnd more - see http://en.wikipedia.org/wiki/List_of_file_system s#Distributed_parallel_file_systems

- 74. نظر از حافظه به دسترسی سرعت تفاوت نوع-ادامه •اند شده پیشنهاد زیر راهکارهای مسئله این حل برای(ادامه:) (cو خواندن عملیات تعداد است پذیر امکان که آنجا تا دهید کاهش را نوشتن (dبر دارد ارجحیت کمتر دفعات در داده بزرگ قطعات نوشتن زیاد دفعات در کوچک قطعات نوشتن (eدفعات تعداد ،برنامه سلایر بخشهای برایI/Oمحدود را کنید (fهای عملیات تمامیI/Oکرده تجمیع را برنامه سرتاسر در

- 75. درس5:محاسباتی کالسترهای وMPI کالسترهاییحافظه سیستمهای از-بوسیله که شده توزیع اند شده متصل هم به شبکه

- 76. دربارهMPI M P I = Message Passing Interface ارائه از اصلی هدفMPIواحد استاندارد یک به دستیابی می شده توزیع حافظه نوع از موازی نویسی برنامه برای یابد دست زیر هدف چهار به کند می سعی که باشد: •Practical •Portable •Efficient •Flexible که است شده ارائه مختلفی های ورژن استاندارد این از آنها آخرینMPI-3.xاست نویسی برنامه زبانهایCوFortran (Fortran90, 2003,2008) شود می پشتیبانی

- 77. دربارهMPI-ادامه از استفاده برای دالیلیMPI: .1Standardization:است موجود استاندارد تنها .2Portability:تغییر به نیاز ها پلتفرم سایر خودبه کد انتقال برای ندارد .3Performance Opportunities:بهینه که دارند را امکان این نویسان برنامه این و دهند انجام مختلف افزارهای سخت با متناسب را خود کد سازی توسط موضوعMPIشود نمی نقض .4Functionality:از بیش430در روتینMPI-3.xکه است شده سازی پیاده آنها از کمی بسیار تعداد به ساده موازی برنامه یک نوشتن برای(کمتر تا ده از)است نیاز

Editor's Notes

- #67: For large scale problems: X distributed; not only the input data, but also the intermediate data during the process of computation not pleasingly parallel, because of dependency determined by the iterative or recursive nature

![Parallelism Model Architecture

Shuffle

M M M M

Collective Communication

M M M M

R R

MapCollective ModelMapReduce Model

YARN

MapReduce V2

Harp

MapReduce

Applications

MapCollective

ApplicationsApplication

Framework

Resource

Manager

Harp is an open-source project developed at Indiana University, it has:

• MPI-like collective communication operations that are highly optimized for big data problems.

• Harp has efficient and innovative computation models for different machine learning problems.

[3] J. Ekanayake et. al, “Twister: A Runtime for Iterative MapReduce”, in Proceedings of the 1st International Workshop on MapReduce and its

Applications of ACM HPDC 2010 conference.

[4] T. Gunarathne et. al, “Portable Parallel Programming on Cloud and HPC: Scientific Applications of Twister4Azure”, in Proceedings of 4th IEEE

International Conference on Utility and Cloud Computing (UCC 2011).

[5] B. Zhang et. al, “Harp: Collective Communication on Hadoop,” in Proceedings of IEEE International Conference on Cloud Engineering (IC2E 2015).](https://image.slidesharecdn.com/01introductionfundamentalsofparallelismandcodeoptimization-www-200426100753/85/01-introduction-fundamentals_of_parallelism_and_code_optimization-www-astek-ir-58-320.jpg)