Classification Continued

- 1. Data Mining Classification: Alternative Techniques

- 2. Rule-Based Classifier
  - Classify records by using a collection of “if…then…” rules
  - Rule: (Condition) → y, where
    - Condition is a conjunction of attributes
    - y is the class label
  - LHS: rule antecedent or condition
  - RHS: rule consequent

- 3. Characteristics of Rule-Based Classifier
  - Mutually exclusive rules
    - Classifier contains mutually exclusive rules if the rules are independent of each other
    - Every record is covered by at most one rule
  - Exhaustive rules
    - Classifier has exhaustive coverage if it accounts for every possible combination of attribute values
    - Each record is covered by at least one rule
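
The two properties above can be checked mechanically by counting how many rules cover each possible record. The sketch below is only an illustration: the rule set, the attribute names (`outlook`, `windy`) and their domains are hypothetical and do not come from the slides.

```python
from itertools import product

# Hypothetical rule set: each rule is (conditions, class_label), where
# conditions maps an attribute name to the value it must take.
RULES = [
    ({"outlook": "sunny"}, "no"),
    ({"outlook": "rainy", "windy": True}, "no"),
    ({"outlook": "rainy", "windy": False}, "yes"),
]

# Hypothetical attribute domains, used to enumerate every possible record.
DOMAINS = {"outlook": ["sunny", "rainy", "overcast"], "windy": [True, False]}


def covers(conditions, record):
    """A rule covers a record when every condition in its antecedent holds."""
    return all(record[attr] == value for attr, value in conditions.items())


def coverage_counts(rules, domains):
    """Map each possible record (tuple of values) to the number of rules covering it."""
    names = list(domains)
    counts = {}
    for values in product(*(domains[n] for n in names)):
        record = dict(zip(names, values))
        counts[values] = sum(covers(cond, record) for cond, _ in rules)
    return counts


counts = coverage_counts(RULES, DOMAINS)
mutually_exclusive = all(n <= 1 for n in counts.values())  # at most one rule per record
exhaustive = all(n >= 1 for n in counts.values())          # at least one rule per record
print("mutually exclusive:", mutually_exclusive)
print("exhaustive:", exhaustive)
```

In this toy rule set no record triggers two rules, but records with `outlook = "overcast"` are not covered by any rule, so the set is mutually exclusive without being exhaustive.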

- 4. Building Classification Rules
  - Direct Method: extract rules directly from data, e.g., RIPPER, CN2, Holte’s 1R
  - Indirect Method: extract rules from other classification models (e.g., decision trees, neural networks), e.g., C4.5rules

- 5. Direct Method: Sequential Covering
  1. Start from an empty rule
  2. Grow a rule using the Learn-One-Rule function
  3. Remove training records covered by the rule
  4. Repeat Steps (2) and (3) until stopping criterion is met

- 6. Aspects of Sequential Covering
  - Rule Growing
  - Instance Elimination
  - Rule Evaluation
  - Stopping Criterion
  - Rule Pruning

- 7. Contd…
  - Grow a single rule
  - Remove instances covered by the rule
  - Prune the rule (if necessary)
  - Add rule to current rule set
  - Repeat
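
The sequential covering loop can be sketched as follows. This is a minimal illustration under stated assumptions, not RIPPER or CN2: rule quality is measured by plain accuracy on the covered records, pruning is omitted, and the record layout (dicts with a `label` key) and the toy data are hypothetical.

```python
def covers(rule, record):
    """A rule is a dict of attribute -> required value; the empty rule covers everything."""
    return all(record[a] == v for a, v in rule.items())


def accuracy(rule, records, target):
    """Fraction of covered records that belong to the target class (0 if none covered)."""
    covered = [r for r in records if covers(rule, r)]
    if not covered:
        return 0.0
    return sum(r["label"] == target for r in covered) / len(covered)


def learn_one_rule(records, attributes, target):
    """Start from the empty rule and greedily add the conjunct that most improves accuracy."""
    rule = {}
    while accuracy(rule, records, target) < 1.0:
        candidates = [
            {**rule, a: v}
            for a in attributes if a not in rule
            for v in sorted({r[a] for r in records}, key=str)
        ]
        if not candidates:
            break
        best = max(candidates, key=lambda c: accuracy(c, records, target))
        if accuracy(best, records, target) <= accuracy(rule, records, target):
            break  # no conjunct improves the rule
        rule = best
    return rule


def sequential_covering(records, attributes, target):
    """Learn rules for one class until no record of that class remains uncovered."""
    remaining = list(records)
    rules = []
    while any(r["label"] == target for r in remaining):
        rule = learn_one_rule(remaining, attributes, target)
        covered = [r for r in remaining if covers(rule, r)]
        if not any(r["label"] == target for r in covered):
            break  # stopping criterion: the new rule covers no target records
        rules.append(rule)
        # Instance elimination: drop everything the new rule covers.
        remaining = [r for r in remaining if not covers(rule, r)]
    return rules


# Hypothetical toy data.
DATA = [
    {"outlook": "sunny", "windy": False, "label": "yes"},
    {"outlook": "sunny", "windy": True, "label": "yes"},
    {"outlook": "rainy", "windy": False, "label": "yes"},
    {"outlook": "rainy", "windy": True, "label": "no"},
    {"outlook": "overcast", "windy": True, "label": "no"},
]
rules = sequential_covering(DATA, ["outlook", "windy"], "yes")
print(rules)
```

On this data the sketch first learns `outlook = sunny`, removes the two records it covers, and then learns `windy = False` for the remaining positive record.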

- 8. Indirect Method: C4.5rules
  - Extract rules from an unpruned decision tree
  - For each rule, r: A → y, consider an alternative rule r’: A’ → y, where A’ is obtained by removing one of the conjuncts in A
  - Compare the pessimistic error rate for r against all r’s
  - Prune if one of the r’s has lower pessimistic error rate
  - Repeat until we can no longer improve generalization error

- 9. Indirect Method: C4.5rules
  - Instead of ordering the rules, order subsets of rules (class ordering)
  - Each subset is a collection of rules with the same rule consequent (class)
  - Compute description length of each subset
    - Description length = L(error) + g L(model)
    - g is a parameter that takes into account the presence of redundant attributes in a rule set (default value = 0.5)

- 10. Advantages of Rule-Based Classifiers
  - As highly expressive as decision trees
  - Easy to interpret
  - Easy to generate
  - Can classify new instances rapidly
  - Performance comparable to decision trees

- 11. Nearest Neighbor Classifiers: requires three things

- 12. The set of stored records

- 13. Distance Metric to compute distance between records

- 14. The value of k, the number of nearest neighbors to retrieve

- 15. To classify an unknown record:

- 16. Compute distance to other training records

- 17. Identify k nearest neighbors

- 18. Use class labels of nearest neighbors to determine the class label of unknown record (e.g., by taking majority vote)
  - Definition of Nearest Neighbor: the k-nearest neighbors of a record x are the data points that have the k smallest distances to x
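
The classification steps above (compute distances, take the k nearest, vote) can be sketched in a few lines. Euclidean distance and the toy training records are assumptions made for illustration; the slides do not fix a particular metric.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(stored, query, k, distance=euclidean):
    """stored is a list of (feature_vector, label) pairs; returns the majority label."""
    # Steps 1 and 2: compute distances and keep the k closest stored records.
    neighbors = sorted(stored, key=lambda rec: distance(rec[0], query))[:k]
    # Step 3: majority vote over the neighbors' class labels.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical stored records.
TRAIN = [
    ((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((0.9, 1.1), "A"),
    ((4.0, 4.2), "B"), ((4.1, 3.9), "B"),
]
prediction = knn_classify(TRAIN, (1.1, 1.0), k=3)
print(prediction)
```

Sorting all stored records for every query keeps the sketch short, and it also shows why classification is the expensive step for this kind of classifier.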

- 19. Nearest Neighbor Classification…
  - Choosing the value of k:
    - If k is too small, the classifier is sensitive to noise points
    - If k is too large, the neighborhood may include points from other classes
  - Scaling issues
    - Attributes may have to be scaled to prevent distance measures from being dominated by one of the attributes
    - Example: height of a person may vary from 1.5m to 1.8m, while weight of a person may vary from 90lb to 300lb
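
One common way to handle the scaling issue is min-max scaling, which maps every attribute to the range [0, 1] before distances are computed. The slides do not name a specific scaling method, so this choice and the sample rows are assumptions.

```python
def min_max_scale(rows):
    """Rescale each column of a list of numeric tuples to [0, 1]."""
    columns = list(zip(*rows))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    return [
        tuple(
            (v - lo) / (hi - lo) if hi > lo else 0.0  # constant columns map to 0
            for v, lo, hi in zip(row, lows, highs)
        )
        for row in rows
    ]


# Hypothetical (height in m, weight in lb) records spanning the ranges in the example.
PEOPLE = [(1.5, 90.0), (1.8, 300.0), (1.65, 195.0)]
scaled = min_max_scale(PEOPLE)
print(scaled)
```

Before scaling, a 0.3 m difference in height is negligible next to a 210 lb difference in weight; after scaling, both attributes span the same range and contribute comparably to the distance.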

- 20. Nearest Neighbor Classification…
  - k-NN classifiers are lazy learners
    - They do not build models explicitly
    - Unlike eager learners such as decision tree induction and rule-based systems
  - Classifying unknown records is relatively expensive

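- 21. Bayes Classifier
  - A probabilistic framework for solving classification problems
  - Conditional probability: $P(C \mid A) = \frac{P(A, C)}{P(A)}$ and $P(A \mid C) = \frac{P(A, C)}{P(C)}$
  - Bayes theorem: $P(C \mid A) = \frac{P(A \mid C)\,P(C)}{P(A)}$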

- 22. Example of Bayes Theorem
  - Given:
    - A doctor knows that meningitis causes stiff neck 50% of the time
    - Prior probability of any patient having meningitis is 1/50,000
    - Prior probability of any patient having stiff neck is 1/20
  - If a patient has stiff neck, what’s the probability he/she has meningitis?
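
The question can be answered by applying Bayes theorem, P(M | S) = P(S | M) P(M) / P(S), to the three numbers given. The variable names below are only illustrative; exact fractions are used to avoid rounding.

```python
from fractions import Fraction

p_s_given_m = Fraction(1, 2)   # P(S | M): meningitis causes stiff neck 50% of the time
p_m = Fraction(1, 50000)       # P(M): prior probability of meningitis
p_s = Fraction(1, 20)          # P(S): prior probability of stiff neck

# Bayes theorem: P(M | S) = P(S | M) * P(M) / P(S)
p_m_given_s = p_s_given_m * p_m / p_s
print(p_m_given_s, float(p_m_given_s))  # 1/5000 0.0002
```

Even with a stiff neck, the probability of meningitis is only 0.0002, because the prior probability of the disease is so small.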

- 23. Naïve Bayes Classifier
  - Assume independence among attributes $A_i$ when class is given: $P(A_1, A_2, \ldots, A_n \mid C_j) = P(A_1 \mid C_j)\,P(A_2 \mid C_j) \cdots P(A_n \mid C_j)$
  - Can estimate $P(A_i \mid C_j)$ for all $A_i$ and $C_j$
  - New point is classified to $C_j$ if $P(C_j) \prod_i P(A_i \mid C_j)$ is maximal
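
A minimal sketch of this decision rule for categorical attributes follows. Probabilities are estimated as simple count fractions, and the attribute names (`refund`, `status`) and the five training records are hypothetical.

```python
from collections import Counter, defaultdict


def train_naive_bayes(records, labels):
    """Count class frequencies and, per (class, attribute), attribute-value frequencies."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)
    for record, label in zip(records, labels):
        for attr, value in record.items():
            value_counts[(label, attr)][value] += 1
    return class_counts, value_counts


def predict(model, record):
    """Return the class maximizing P(Cj) * prod_i P(Ai | Cj), plus all class scores."""
    class_counts, value_counts = model
    total = sum(class_counts.values())
    scores = {}
    for label, n_c in class_counts.items():
        score = n_c / total  # prior P(Cj)
        for attr, value in record.items():
            score *= value_counts[(label, attr)][value] / n_c  # P(Ai | Cj)
        scores[label] = score
    return max(scores, key=scores.get), scores


# Hypothetical training data.
RECORDS = [
    {"refund": "yes", "status": "single"},
    {"refund": "no", "status": "married"},
    {"refund": "no", "status": "single"},
    {"refund": "no", "status": "single"},
    {"refund": "yes", "status": "married"},
]
LABELS = ["no", "no", "yes", "yes", "no"]

model = train_naive_bayes(RECORDS, LABELS)
label, scores = predict(model, {"refund": "no", "status": "single"})
print(label, scores)
```

Note that an attribute value never seen with a class gives a factor of zero, which wipes out that class's whole score.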

- 24. Naïve Bayes Classifier
  - If one of the conditional probabilities is zero, then the entire expression becomes zero
  - Probability estimation:
    - c: number of classes
    - p: prior probability
    - m: parameter

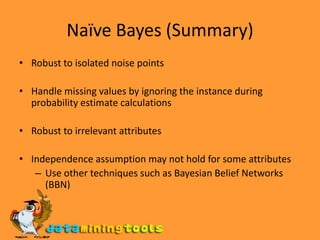
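
The zero-probability problem is usually avoided by smoothing the count-based estimate. The sketch below shows the m-estimate, which uses the `p` and `m` listed above; the formula itself is not preserved in the text, so treating it as (n_ic + m·p) / (n_c + m) is an assumption, and the count names `n_ic` and `n_c` are introduced here for illustration.

```python
def m_estimate(n_ic, n_c, p, m):
    """Smoothed estimate of P(Ai | C).

    n_ic: number of class-C records with the attribute value (hypothetical name)
    n_c:  number of class-C records (hypothetical name)
    p:    prior probability for the attribute value
    m:    parameter controlling how strongly the prior is weighted
    """
    return (n_ic + m * p) / (n_c + m)


# A value never seen with the class no longer gets probability zero.
unseen = m_estimate(n_ic=0, n_c=3, p=1 / 3, m=3)
print(unseen)

# With m = 0 the estimate falls back to the raw fraction n_ic / n_c.
raw = m_estimate(n_ic=2, n_c=4, p=0.5, m=0)
print(raw)
```

Setting m = c and p = 1/c turns the same expression into (n_ic + 1) / (n_c + c), a Laplace-style estimate, which is presumably where the symbol c is used.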

- 25. Naïve Bayes (Summary)
  - Robust to isolated noise points
  - Handle missing values by ignoring the instance during probability estimate calculations
  - Robust to irrelevant attributes
  - Independence assumption may not hold for some attributes
    - Use other techniques such as Bayesian Belief Networks (BBN)

- 26. Artificial Neural Networks (ANN)
  - Model is an assembly of inter-connected nodes and weighted links
  - Output node sums up each of its input values according to the weights of its links
  - Compare output node against some threshold t
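
A single output node of this kind can be sketched as a weighted sum followed by a threshold test. The 0/1 output coding, the weights and the threshold value below are assumptions chosen for illustration.

```python
def node_output(inputs, weights, t):
    """Return 1 if the weighted sum of the inputs exceeds threshold t, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > t else 0


# Hypothetical weights and threshold: the node fires when at least two inputs are 1.
WEIGHTS = (0.3, 0.3, 0.3)
T = 0.4

for x in [(0, 0, 0), (1, 0, 0), (1, 1, 0), (1, 1, 1)]:
    print(x, node_output(x, WEIGHTS, T))
```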

- 27. General Structure of ANN
  - Training ANN means learning the weights of the neurons

- 28. Algorithm for learning ANN
  - Initialize the weights (w0, w1, …, wk)
  - Adjust the weights in such a way that the output of ANN is consistent with class labels of training examples
  - Objective function:
  - Find the weights wi’s that minimize the objective function, e.g., with the backpropagation algorithm
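
The weight-adjustment idea can be illustrated with plain gradient descent on a single linear unit. This is a sketch under assumptions, not backpropagation itself: the objective is taken to be the sum of squared errors between targets and outputs (the formula is not preserved in the text), there is no hidden layer, and the learning rate, epoch count and toy data are hypothetical.

```python
def predict(weights, x):
    """Linear unit: w0 + w1*x1 + ... + wk*xk."""
    return weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))


def squared_error(weights, data):
    """Assumed objective: sum over examples of (y - output)^2."""
    return sum((y - predict(weights, x)) ** 2 for x, y in data)


def train(data, n_inputs, rate=0.05, epochs=2000):
    """Batch gradient descent on the squared-error objective."""
    weights = [0.0] * (n_inputs + 1)  # initialize (w0, w1, ..., wk)
    for _ in range(epochs):
        grads = [0.0] * len(weights)
        for x, y in data:
            err = y - predict(weights, x)
            grads[0] += -2 * err               # derivative w.r.t. the bias w0
            for i, xi in enumerate(x, start=1):
                grads[i] += -2 * err * xi      # derivative w.r.t. wi
        # Move each weight a small step against its gradient.
        weights = [w - rate * g for w, g in zip(weights, grads)]
    return weights


# Hypothetical data generated by y = 1 + 2x.
DATA = [((0.0,), 1.0), ((1.0,), 3.0), ((2.0,), 5.0)]
weights = train(DATA, n_inputs=1)
print(weights, squared_error(weights, DATA))
```

Backpropagation applies the same gradient step to multi-layer networks by propagating the error derivatives from the output layer back through the hidden layers.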

- 29. Ensemble Methods
  - Construct a set of classifiers from the training data
  - Predict class label of previously unseen records by aggregating predictions made by multiple classifiers

- 30. General Idea

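- 31. Why does it work?
  - Suppose there are 25 base classifiers
  - Each classifier has error rate ε = 0.35
  - Assume classifiers are independent
  - Probability that the ensemble classifier makes a wrong prediction (a majority vote errs when at least 13 of the 25 are wrong): $\sum_{i=13}^{25} \binom{25}{i} \varepsilon^{i} (1 - \varepsilon)^{25 - i}$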
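
Assuming the ensemble predicts by majority vote, it is wrong only when more than half of the independent base classifiers are wrong, which is a binomial tail probability. The function name below is illustrative.

```python
from math import comb


def ensemble_error(n, eps):
    """Probability that a majority of n independent classifiers, each with error eps, is wrong."""
    majority = n // 2 + 1
    return sum(
        comb(n, i) * eps**i * (1 - eps) ** (n - i)
        for i in range(majority, n + 1)
    )


p_wrong = ensemble_error(25, 0.35)
print(round(p_wrong, 3))
```

The result is roughly 0.06, far below the 0.35 error rate of each individual classifier. The gain depends on the independence assumption and on each base classifier being better than random guessing.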

- 32. Examples of Ensemble Methods
  - How to generate an ensemble of classifiers?
    - Bagging
    - Boosting

- 33. Bagging
  - Sampling with replacement
  - Build classifier on each bootstrap sample
  - Each record has probability 1 - (1 - 1/n)^n of being selected in a bootstrap sample of size n (about 0.632 for large n)
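
When n records are drawn with replacement from n records, (1 - 1/n)^n is the chance that a given record is never drawn, so it appears in the bootstrap sample with probability 1 - (1 - 1/n)^n. The sketch below compares that value with one simulated sample; the seed and the data size are arbitrary choices.

```python
import random


def bootstrap_sample(records, rng):
    """Draw len(records) items uniformly at random, with replacement."""
    n = len(records)
    return [records[rng.randrange(n)] for _ in range(n)]


def inclusion_probability(n):
    """Probability that a given record appears at least once in a bootstrap sample of size n."""
    return 1 - (1 - 1 / n) ** n


rng = random.Random(0)  # fixed seed so the run is repeatable
records = list(range(1000))
sample = bootstrap_sample(records, rng)
observed = len(set(sample)) / len(records)  # fraction of distinct records drawn

print(round(inclusion_probability(1000), 3), round(observed, 3))
```

In bagging, one classifier would be trained on each such sample and their predictions combined, for example by voting.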

- 34. Boosting
  - An iterative procedure to adaptively change distribution of training data by focusing more on previously misclassified records
  - Initially, all N records are assigned equal weights
  - Unlike bagging, weights may change at the end of each boosting round
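
The slide does not name a specific boosting algorithm. As one possible illustration, the sketch below applies an AdaBoost-style weight update for a single round; the update formula and the choice of which record is misclassified are assumptions.

```python
import math


def update_weights(weights, misclassified):
    """One AdaBoost-style round: raise weights of misclassified records, lower the rest.

    Assumes the weighted error is strictly between 0 and 1.
    """
    error = sum(w for w, wrong in zip(weights, misclassified) if wrong)
    alpha = 0.5 * math.log((1 - error) / error)  # importance of this round's classifier
    raw = [
        w * math.exp(alpha if wrong else -alpha)
        for w, wrong in zip(weights, misclassified)
    ]
    total = sum(raw)
    return [w / total for w in raw]  # renormalize so the weights sum to 1


N = 5
weights = [1 / N] * N  # initially, all N records have equal weights
new_weights = update_weights(weights, [False, False, True, False, False])
print(new_weights)
```

After the update the single misclassified record carries half of the total weight, so the next round's classifier is pushed to get it right.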
