Scallable Distributed Deep Learning on OpenPOWER systems

- 1. Scalable Distributed Deep Learning on Modern HPC Systems Dhabaleswar K. (DK) Panda The Ohio State University E-mail: [email protected] http://www.cse.ohio-state.edu/~panda OpenPOWER Workshop, CINECA, Italy (July ’20) by Follow us on https://twitter.com/mvapich

- 2. OpenPOWER-CINECA (July ‘20) 2Network Based Computing Laboratory AI, Deep Learning & Machine Learning Courtesy: https://hackernoon.com/difference-between-artificial-intelligence-machine-learning- and-deep-learning-1pcv3zeg, https://blog.dataiku.com/ai-vs.-machine-learning-vs.-deep-learning • Deep Learning (DL) – A subset of Machine Learning that uses Deep Neural Networks (DNNs) – Perhaps, the most revolutionary subset! • Based on learning data representation • Examples Convolutional Neural Networks, Recurrent Neural Networks, Hybrid Networks • Data Scientist or Developer Perspective 1. Identify DL as solution to a problem 2. Determine Data Set 3. Select Deep Learning Algorithm to Use 4. Use a large data set to train an algorithm

- 3. OpenPOWER-CINECA (July ‘20) 3Network Based Computing Laboratory • Deep Learning has two major tasks 1. Training of the Deep Neural Network 2. Inference (or deployment) that uses a trained DNN • DNN Training – Training is a compute/communication intensive process – can take days to weeks – Faster training is necessary! • Faster training can be achieved by – Using Newer and Faster Hardware – But, there is a limit! – Can we use more GPUs or nodes? • The need for Parallel and Distributed Training Key Phases of Deep Learning

- 4. OpenPOWER-CINECA (July ‘20) 4Network Based Computing Laboratory • Scale-up: Intra-node Communication – Many improvements like: • NVIDIA cuDNN, cuBLAS, NCCL, etc. • CUDA Co-operative Groups • Scale-out: Inter-node Communication – DL Frameworks – most are optimized for single-node only – Distributed (Parallel) Training is an emerging trend • PyTorch – MPI/NCCL2 • TensorFlow – gRPC-based/MPI/NCCL2 • OSU-Caffe – MPI-based Scale-up and Scale-out Scale-upPerformance Scale-out Performance cuDNN gRPC Hadoop MPI MKL-DNN Desired NCCL2

- 5. OpenPOWER-CINECA (July ‘20) 5Network Based Computing Laboratory How to efficiently scale-out a Deep Learning (DL) framework and take advantage of heterogeneous High Performance Computing (HPC) resources? Broad Challenge: Exploiting HPC for Deep Learning

- 6. OpenPOWER-CINECA (July ‘20) 6Network Based Computing Laboratory • gRPC – Officially available and supported – Open-source – can be enhanced by others – Accelerated gRPC (add RDMA to gRPC) • gRPC+X – Use gRPC for bootstrap and rendezvous – Actual communication is in “X” – X MPI, Verbs, GPUDirect RDMA (GDR), etc. • No-gRPC – Baidu – the first one to use MPI Collectives for TF – Horovod – Use NCCL, or MPI, or any other future library (e.g. IBM DDL support recently added) High-Performance Distributed Data Parallel Training with TensorFlow A. A. Awan, J. Bedorf, C-H Chu, H. Subramoni, and DK Panda., “Scalable Distributed DNN Training using TensorFlow and CUDA-Aware MPI: Characterization, Designs, and Performance Evaluation”, CCGrid’19

- 7. OpenPOWER-CINECA (July ‘20) 7Network Based Computing Laboratory Overview of the MVAPICH2 Project • High Performance open-source MPI Library • Support for multiple interconnects – InfiniBand,Omni-Path, Ethernet/iWARP,RDMA over Converged Ethernet(RoCE),and AWS EFA • Support for multiple platforms – x86, OpenPOWER, ARM, Xeon-Phi,GPGPUs (NVIDIA and AMD (upcoming)) • Started in 2001, first open-source version demonstrated at SC ‘02 • Supports the latest MPI-3.1 standard • http://mvapich.cse.ohio-state.edu • Additional optimized versions for different systems/environments: – MVAPICH2-X (Advanced MPI + PGAS), since 2011 – MVAPICH2-GDR with support for NVIDIA GPGPUs, since 2014 – MVAPICH2-MIC with support for Intel Xeon-Phi, since 2014 – MVAPICH2-Virt with virtualization support, since 2015 – MVAPICH2-EA with support for Energy-Awareness, since 2015 – MVAPICH2-Azure for Azure HPC IB instances, since 2019 – MVAPICH2-X-AWS for AWS HPC+EFA instances, since 2019 • Tools: – OSU MPI Micro-Benchmarks(OMB), since 2003 – OSU InfiniBand Network Analysis and Monitoring (INAM),since 2015 • Used by more than 3,100 organizations in 89 countries • More than 789,000 (> 0.7 million) downloads from the OSU site directly • Empowering many TOP500 clusters (June ‘20 ranking) – 4th , 10,649,600-core (Sunway TaihuLight)at NSC, Wuxi, China – 8th, 448, 448 cores (Frontera) at TACC – 12th, 391,680 cores (ABCI)in Japan – 18th, 570,020 cores (Nurion)in South Korea and many others • Available with software stacks of many vendors and Linux Distros (RedHat, SuSE, OpenHPC, and Spack) • Partner in the 8th ranked TACC Frontera system • Empowering Top500 systems for more than 15 years

- 8. OpenPOWER-CINECA (July ‘20) 8Network Based Computing Laboratory MVAPICH2 (MPI)-driven Infrastructure for ML/DL Training MVAPICH2-X for CPU-Based Training MVAPICH2-GDR for GPU-Based Training Horovod TensorFlow PyTorch MXNet ML/DL Applications More details available from: http://hidl.cse.ohio-state.edu

- 9. OpenPOWER-CINECA (July ‘20) 9Network Based Computing Laboratory • MPI-driven Deep Learning – CPU-based Deep Learning – GPU-based Deep Learning • Out-of-core DNN training • Exploiting Hybrid (Data and Model) Parallelism • Use-Case: AI-Driven Digital Pathology • Commercial Support and Products Multiple Approaches taken up by OSU

- 10. OpenPOWER-CINECA (July ‘20) 10Network Based Computing Laboratory MVAPICH2 Software Family (CPU-Based Deep Learning) High-Performance Parallel Programming Libraries MVAPICH2 Support for InfiniBand, Omni-Path, Ethernet/iWARP, and RoCE MVAPICH2-X Advanced MPI features, OSU INAM, PGAS (OpenSHMEM, UPC, UPC++, and CAF), and MPI+PGAS programming models with unified communication runtime MVAPICH2-GDR Optimized MPI for clusters with NVIDIA GPUs and for GPU-enabled Deep Learning Applications MVAPICH2-Virt High-performance and scalable MPI for hypervisor and container based HPC cloud MVAPICH2-EA Energy aware and High-performance MPI MVAPICH2-MIC Optimized MPI for clusters with Intel KNC Microbenchmarks OMB Microbenchmarks suite to evaluate MPI and PGAS (OpenSHMEM, UPC, and UPC++) libraries for CPUs and GPUs Tools OSU INAM Network monitoring, profiling, and analysis for clusters with MPI and scheduler integration OEMT Utility to measure the energy consumption of MPI applications

- 11. OpenPOWER-CINECA (July ‘20) 11Network Based Computing Laboratory 0 0.2 0.4 0.6 0.8 4 8 16 32 64 128 256 512 1K 2K Latency(us) MVAPICH2-X 2.3rc3 SpectrumMPI-10.3.0.01 0.23us Intra-node Point-to-Point Performance on OpenPOWER Platform: Two nodes of OpenPOWER (Power9-ppc64le) CPU using Mellanox EDR (MT4121) HCA Intra-Socket Small Message Latency Intra-Socket Large Message Latency Intra-Socket Bi-directional BandwidthIntra-Socket Bandwidth 0 20 40 60 80 100 4K 8K 16K 32K 64K 128K 256K 512K 1M 2M Latency(us) MVAPICH2-X 2.3rc3 SpectrumMPI-10.3.0.01 0 10000 20000 30000 40000 1 8 64 512 4K 32K 256K 2M Bandwidth(MB/s) MVAPICH2-X 2.3rc3 SpectrumMPI-10.3.0.01 0 10000 20000 30000 40000 1 8 64 512 4K 32K 256K 2M Bandwidth(MB/s) MVAPICH2-X 2.3.rc3 SpectrumMPI-10.3.0.01

- 12. OpenPOWER-CINECA (July ‘20) 12Network Based Computing Laboratory 0 1 2 3 4 1 2 4 8 16 32 64 128 256 512 1K 2K Latency(us) MVAPICH2-X 2.3rc3 SpectrumMPI-10.3.0.01 Inter-node Point-to-Point Performance on OpenPower Platform: Two nodes of OpenPOWER (POWER9-ppc64le) CPU using Mellanox EDR (MT4121) HCA Small Message Latency Large Message Latency Bi-directional BandwidthBandwidth 0 50 100 150 4K 8K 16K 32K 64K 128K 256K 512K 1M 2M Latency(us) MVAPICH2-X 2.3rc3 SpectrumMPI-10.3.0.01 0 10000 20000 30000 1 8 64 512 4K 32K 256K 2M Bandwidth(MB/s) MVAPICH2-X 2.3rc3 SpectrumMPI-10.3.0.01 0 10000 20000 30000 40000 50000 1 8 64 512 4K 32K 256K 2M Bi-Bandwidth(MB/s) MVAPICH2-X 2.3rc3 SpectrumMPI-10.3.0.01 24,743 MB/s 49,249 MB/s

- 13. OpenPOWER-CINECA (July ‘20) 13Network Based Computing Laboratory 0 1000 2000 3000 256K 512K 1M 2M Latency(us) MVAPICH2-2.3 SpectrumMPI-10.1.0 OpenMPI-3.0.0 MVAPICH2-XPMEM 3X 0 100 200 300 16K 32K 64K 128K Latency(us) Message Size MVAPICH2-2.3 SpectrumMPI-10.1.0 OpenMPI-3.0.0 MVAPICH2-XPMEM 34% 0 1000 2000 3000 4000 256K 512K 1M 2M Latency(us) Message Size MVAPICH2-2.3 SpectrumMPI-10.1.0 OpenMPI-3.0.0 MVAPICH2-XPMEM 0 100 200 300 16K 32K 64K 128K Latency(us) MVAPICH2-2.3 SpectrumMPI-10.1.0 OpenMPI-3.0.0 MVAPICH2-XPMEM Optimized MVAPICH2 All-Reduce with XPMEM(Nodes=1,PPN=20) Optimized Runtime Parameters: MV2_CPU_BINDING_POLICY=hybrid MV2_HYBRID_BINDING_POLICY=bunch • Optimized MPI All-Reduce Design in MVAPICH2 – Up to 2X performance improvement over Spectrum MPI and 4X over OpenMPI for intra-node 2X (Nodes=2,PPN=20) 4X 48% 3.3X 2X 2X

- 14. OpenPOWER-CINECA (July ‘20) 14Network Based Computing Laboratory Performance of CNTK with MVAPICH2-X on CPU-based Deep Learning 0 200 400 600 800 28 56 112 224 ExecutionTime(s) No. of Processes Intel MPI MVAPICH2 MVAPICH2-XPMEM CNTK AlexNet Training (B.S=default, iteration=50, ppn=28) 20% 9% • CPU-based training of AlexNet neural network using ImageNet ILSVRC2012 dataset • Advanced XPMEM-based designs show up to 20% benefits over Intel MPI (IMPI) for CNTK DNN training using All_Reduce • The proposed designs show good scalability with increasing system size Designing Efficient Shared Address Space Reduction Collectives for Multi-/Many-cores, J. Hashmi, S. Chakraborty, M. Bayatpour, H. Subramoni, and DK Panda, 32nd IEEE International Parallel & Distributed Processing Symposium(IPDPS '18), May 2018 Available since MVAPICH2-X 2.3rc1 release

- 15. OpenPOWER-CINECA (July ‘20) 15Network Based Computing Laboratory Distributed TensorFlow on TACC Frontera (2,048 CPU nodes) • Scaled TensorFlow to 2048 nodes on Frontera using MVAPICH2 and IntelMPI • MVAPICH2 and IntelMPI give similar performance for DNN training • Report a peak of 260,000 images/sec on 2,048 nodes • On 2048 nodes, ResNet-50 can be trained in 7 minutes! A. Jain, A. A. Awan, H. Subramoni, DK Panda, “Scaling TensorFlow, PyTorch, and MXNet using MVAPICH2 for High-Performance Deep Learning on Frontera”, DLS ’19 (SC ’19 Workshop).

- 16. OpenPOWER-CINECA (July ‘20) 16Network Based Computing Laboratory MVAPICH2 Software Family (GPU-Based Deep Learning) High-Performance Parallel Programming Libraries MVAPICH2 Support for InfiniBand, Omni-Path, Ethernet/iWARP, and RoCE MVAPICH2-X Advanced MPI features, OSU INAM, PGAS (OpenSHMEM, UPC, UPC++, and CAF), and MPI+PGAS programming models with unified communication runtime MVAPICH2-GDR Optimized MPI for clusters with NVIDIA GPUs and for GPU-enabled Deep Learning Applications MVAPICH2-Virt High-performance and scalable MPI for hypervisor and container based HPC cloud MVAPICH2-EA Energy aware and High-performance MPI MVAPICH2-MIC Optimized MPI for clusters with Intel KNC Microbenchmarks OMB Microbenchmarks suite to evaluate MPI and PGAS (OpenSHMEM, UPC, and UPC++) libraries for CPUs and GPUs Tools OSU INAM Network monitoring, profiling, and analysis for clusters with MPI and scheduler integration OEMT Utility to measure the energy consumption of MPI applications

- 17. OpenPOWER-CINECA (July ‘20) 17Network Based Computing Laboratory At Sender: At Receiver: MPI_Recv(r_devbuf, size, …); inside MVAPICH2 • Standard MPI interfaces used for unified data movement • Takes advantage of Unified Virtual Addressing (>= CUDA 4.0) • Overlaps data movement from GPU with RDMA transfers High Performance and High Productivity MPI_Send(s_devbuf, size, …); GPU-Aware (CUDA-Aware) MPI Library: MVAPICH2-GPU

- 18. OpenPOWER-CINECA (July ‘20) 18Network Based Computing Laboratory CUDA-Aware MPI: MVAPICH2-GDR 1.8-2.3.4 Releases • Support for MPI communication from NVIDIA GPU device memory • High performance RDMA-based inter-node point-to-point communication (GPU-GPU, GPU-Host and Host-GPU) • High performance intra-node point-to-point communication for multi-GPU adapters/node (GPU-GPU, GPU-Host and Host-GPU) • Taking advantage of CUDA IPC (available since CUDA 4.1) in intra-node communication for multiple GPU adapters/node • Optimized and tuned collectives for GPU device buffers • MPI datatype support for point-to-point and collective communication from GPU device buffers • Unified memory

- 19. OpenPOWER-CINECA (July ‘20) 19Network Based Computing Laboratory 0 2000 4000 6000 1 2 4 8 16 32 64 128 256 512 1K 2K 4K Bandwidth(MB/s) Message Size (Bytes) GPU-GPU Inter-node Bi-Bandwidth MV2-(NO-GDR) MV2-GDR-2.3 0 1000 2000 3000 4000 1 2 4 8 16 32 64 128 256 512 1K 2K 4K Bandwidth(MB/s) Message Size (Bytes) GPU-GPU Inter-node Bandwidth MV2-(NO-GDR) MV2-GDR-2.3 0 10 20 30 0 1 2 4 8 16 32 64 128 256 512 1K 2K 4K 8K Latency(us) Message Size (Bytes) GPU-GPU Inter-node Latency MV2-(NO-GDR) MV2-GDR 2.3 MVAPICH2-GDR-2.3 Intel Haswell (E5-2687W @ 3.10 GHz) node - 20 cores NVIDIA Volta V100 GPU Mellanox Connect-X4 EDR HCA CUDA 9.0 Mellanox OFED 4.0 with GPU-Direct-RDMA 10x 9x Optimized MVAPICH2-GDR Design 1.85us 11X

- 20. OpenPOWER-CINECA (July ‘20) 20Network Based Computing Laboratory D-to-D Performance on OpenPOWER w/ GDRCopy (NVLink2 + Volta) 0 1 2 1 2 4 8 16 32 64 128 256 512 1K 2K 4K 8K Latency(us) Message Size (Bytes) Intra-Node Latency (Small Messages) 0 50 100 16K 32K 64K 128K 256K 512K 1M 2M 4M Latency(us) Message Size (Bytes) Intra-Node Latency (Large Messages) 0 20000 40000 60000 80000 1 4 16 64 256 1K 4K 16K 64K 256K 1M 4M Bandwidth(MB/s) Message Size (Bytes) Intra-Node Bandwidth 0 5 10 1 2 4 8 16 32 64 128 256 512 1K 2K 4K 8K Latency(us) Message Size (Bytes) Inter-Node Latency (Small Messages) 0 100 200 300 16K 32K 64K 128K 256K 512K 1M 2M 4M Latency(us) Message Size (Bytes) Inter-Node Latency (Large Messages) 0 5000 10000 15000 20000 25000 1 4 16 64 256 1K 4K 16K 64K 256K 1M 4M Bandwidth(MB/s) Message Size (Bytes) Inter-Node Bandwidth Platform: OpenPOWER (POWER9-ppc64le) nodes equipped with a dual-socket CPU, 4 Volta V100 GPUs, and 2port EDR InfiniBand Interconnect Intra-node Bandwidth: 65.48 GB/sec for 4MB (via NVLINK2)Intra-node Latency: 0.76 us (with GDRCopy) Inter-node Bandwidth: 23 GB/sec for 4MB (via 2 Port EDR)Inter-node Latency: 2.18 us (with GDRCopy 2.0)

- 21. OpenPOWER-CINECA (July ‘20) 21Network Based Computing Laboratory MVAPICH2-GDR vs. NCCL2 – Allreduce Operation (DGX-2) • Optimized designs in MVAPICH2-GDR offer better/comparable performance for most cases • MPI_Allreduce (MVAPICH2-GDR) vs. ncclAllreduce (NCCL2) on 1 DGX-2 node (16 Volta GPUs) 1 10 100 1000 10000 Latency(us) Message Size (Bytes) MVAPICH2-GDR-2.3.4 NCCL-2.6 ~2.5X better Platform: Nvidia DGX-2 system (16 Nvidia Volta GPUs connected with NVSwitch), CUDA 10.1 0 5 10 15 20 25 30 35 40 45 50 8 16 32 64 128 256 512 1K 2K 4K 8K 16K 32K 64K 128K Latency(us) Message Size (Bytes) MVAPICH2-GDR-2.3.4 NCCL-2.6 ~4.7X better C.-H. Chu, P. Kousha, A. Awan, K. S. Khorassani, H. Subramoni and D. K. Panda, "NV-Group: Link-Efficient Reductions for Distributed Deep Learning on Modern Dense GPU Systems, " ICS-2020, June-July 2020.

- 22. OpenPOWER-CINECA (July ‘20) 22Network Based Computing Laboratory MVAPICH2-GDR: MPI_Allreduce at Scale (ORNL Summit) • Optimized designs in MVAPICH2-GDR offer better performance for most cases • MPI_Allreduce (MVAPICH2-GDR) vs. ncclAllreduce (NCCL2) up to 1,536 GPUs 0 1 2 3 4 5 6 32M 64M 128M 256M Bandwidth(GB/s) Message Size (Bytes) Bandwidth on 1,536 GPUs MVAPICH2-GDR-2.3.4 NCCL 2.6 1.7X better 0 50 100 150 200 250 300 350 400 450 4 16 64 256 1K 4K 16K Latency(us) Message Size (Bytes) Latency on 1,536 GPUs MVAPICH2-GDR-2.3.4 NCCL 2.6 1.6X better Platform: Dual-socket IBM POWER9 CPU, 6 NVIDIA Volta V100 GPUs, and 2-port InfiniBand EDR Interconnect 0 2 4 6 8 10 24 48 96 192 384 768 1536 Bandwidth(GB/s) Number of GPUs 128MB Message SpectrumMPI 10.3 OpenMPI 4.0.1 NCCL 2.6 MVAPICH2-GDR-2.3.4 1.7X better C.-H. Chu, P. Kousha, A. Awan, K. S. Khorassani, H. Subramoni and D. K. Panda, "NV-Group: Link-Efficient Reductions for Distributed Deep Learning on Modern Dense GPU Systems, " ICS-2020, June-July 2020.

- 23. OpenPOWER-CINECA (July ‘20) 23Network Based Computing Laboratory Scalable TensorFlow using Horovod and MVAPICH2-GDR • ResNet-50 Training using TensorFlow benchmark on 1 DGX-2 node (16 Volta GPUs) 0 1000 2000 3000 4000 5000 6000 7000 1 2 4 8 16 Imagepersecond Number of GPUs NCCL-2.6 MVAPICH2-GDR-2.3.3 9% higher Platform: Nvidia DGX-2 system, CUDA 10.1 0 20 40 60 80 100 1 2 4 8 16 ScalingEfficiency(%) Number of GPUs NCCL-2.6 MVAPICH2-GDR-2.3.3 Scaling Efficiency = Actual throughput Ideal throughput at scale × 100% C.-H. Chu, P. Kousha, A. Awan, K. S. Khorassani, H. Subramoni and D. K. Panda, "NV-Group: Link-Efficient Reductions for Distributed Deep Learning on Modern Dense GPU Systems, " ICS-2020, June-July 2020.

- 24. OpenPOWER-CINECA (July ‘20) 24Network Based Computing Laboratory Distributed TensorFlow on ORNL Summit (1,536 GPUs) • ResNet-50 Training using TensorFlow benchmark on SUMMIT -- 1536 Volta GPUs! • 1,281,167 (1.2 mil.) images • Time/epoch = 3 seconds • Total Time (90 epochs) = 3 x 90 = 270 seconds = 4.5 minutes! 0 100 200 300 400 500 1 2 4 6 12 24 48 96 192 384 768 1536 Imagepersecond Thousands Number of GPUs NCCL-2.6 MVAPICH2-GDR 2.3.4 Platform: The Summit Supercomputer (#2 on Top500.org) – 6 NVIDIA Volta GPUs per node connected with NVLink, CUDA 10.1 *We observed issues for NCCL2 beyond 384 GPUs MVAPICH2-GDR reaching ~0.42 million images per second for ImageNet-1k! ImageNet-1k has 1.2 million images

- 25. OpenPOWER-CINECA (July ‘20) 25Network Based Computing Laboratory • ResNet-50 training using PyTorch + Horovod on Summit – Synthetic ImageNet dataset – Up to 256 nodes, 1536 GPUs • MVAPICH2-GDR can outperform NCCL2 – Up to 30% higher throughput Scaling PyTorch on ORNL Summit using MVAPICH2-GDR 0 50 100 150 200 250 300 350 400 450 500 Images/sec(higherisbetter) Thousands No. of GPUs NCCL-2.6 MVAPICH2-GDR-2.3.4 30% higher C.-H. Chu, P. Kousha, A. Awan, K. S. Khorassani, H. Subramoni and D. K. Panda, "NV-Group: Link-Efficient Reductions for Distributed Deep Learning on Modern Dense GPU Systems, " ICS-2020, June-July 2020. Platform: The Summit Supercomputer (#2 on Top500.org) – 6 NVIDIA Volta GPUs per node connected with NVLink, CUDA 10.1

- 26. OpenPOWER-CINECA (July ‘20) 26Network Based Computing Laboratory • ResNet-50 training using TensorFlow 2.1 + Horovod on Lassen – Synthetic ImageNet dataset – Up to 64 nodes, 256 GPUs • MVAPICH2-GDR outperforms NCCL2 Scaling TensorFlow 2.1 on LLNL Lassen using MVAPICH2-GDR 0 10 20 30 40 50 60 1 2 4 8 16 32 64 128 256 Images/sec(higherisbetter) Thousands No. of GPUs NCCL-2.7 MVAPICH2-GDR-2.3.4 Platform: The Lassen Supercomputer (#14 on Top500.org) – 4 NVIDIA Volta GPUs per node connected with NVLink, CUDA 10.2

- 27. OpenPOWER-CINECA (July ‘20) 27Network Based Computing Laboratory • Deepspeed is an emerging distributed DL framework for PyTorch • ResNet-50 training using PyTorch + Deepspeed on Lassen – Synthetic ImageNet dataset – Up to 64 nodes, 256 GPUs • Early results are promising - Perhaps better scaling for larger models? Early Exploration of Scaling PyTorch on Lassen with Deepspeed 0 10 20 30 40 50 60 70 80 1 2 4 8 16 32 64 128 256 Images/sec(higherisbetter) Thousands No. of GPUs Horovod w/ MVAPICH2-GDR Deepspeed w/ MVAPICH2-GDR Platform: The Lassen Supercomputer (#14 on Top500.org) – 4 NVIDIA Volta GPUs per node connected with NVLink, CUDA 10.2

- 28. OpenPOWER-CINECA (July ‘20) 28Network Based Computing Laboratory • MPI-driven Deep Learning – CPU-based Deep Learning – GPU-based Deep Learning • Out-of-core DNN training • Exploiting Hybrid (Data and Model) Parallelism • Use-Case: AI-Driven Digital Pathology • Commercial Support and Products Multiple Approaches taken up by OSU

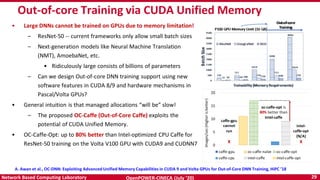

- 29. OpenPOWER-CINECA (July ‘20) 29Network Based Computing Laboratory Out-of-core Training via CUDA Unified Memory • Large DNNs cannot be trained on GPUs due to memory limitation! – ResNet-50 -- current frameworks only allow small batch sizes – Next-generation models like Neural Machine Translation (NMT), AmoebaNet, etc. • Ridiculously large consists of billions of parameters – Can we design Out-of-core DNN training support using new software features in CUDA 8/9 and hardware mechanisms in Pascal/Volta GPUs? • General intuition is that managed allocations “will be” slow! – The proposed OC-Caffe (Out-of-Core Caffe) exploits the potential of CUDA Unified Memory. • OC-Caffe-Opt: up to 80% better than Intel-optimized CPU Caffe for ResNet-50 training on the Volta V100 GPU with CUDA9 and CUDNN7 A. Awan et al., OC-DNN: Exploiting Advanced Unified Memory Capabilities in CUDA 9 and Volta GPUs for Out-of-Core DNN Training, HiPC ’18

- 30. OpenPOWER-CINECA (July ‘20) 30Network Based Computing Laboratory • Data-Parallelism– only for models that fit the memory • Out-of-core models – Deeper model Better accuracy but more memory required! • Model parallelism can work for out-of-core models! • Key Challenges – Model Partitioning is difficult for application programmers – Finding the right partition (grain) size is hard – cut at which layer and why? – Developing a practical system for model-parallelism • Redesign DL Framework or create additional layers? • Existing Communication middleware or extensions needed? HyPar-Flow: Hybrid and Model Parallelism for CPUs A. A. Awan,A. Jain,Q. Anthony, H. Subramoni,and DK Panda, “HyPar-Flow:Exploiting MPIand Kerasfor HybridParallel Trainingof TensorFlowmodels”,ISC ‘20, https://arxiv.org/pdf/1911.05146.pdf

- 31. OpenPOWER-CINECA (July ‘20) 31Network Based Computing Laboratory • ResNet-1001 with variable batch size • Approach: – 48 model-partitions for 56 cores – 512 model-replicas for 512 nodes – Total cores: 48 x 512 = 24,576 • Speedup – 253X on 256 nodes – 481X on 512 nodes • Scaling Efficiency – 98% up to 256 nodes – 93.9% for 512 nodes Out-of-core Training with HyPar-Flow (512 nodes on TACC Frontera) 481x speedup on 512 Intel Xeon Skylake nodes (TACC Frontera) A. A. Awan, A. Jain, Q. Anthony, H. Subramoni, and DK Panda, “HyPar-Flow: Exploiting MPI and Keras for Hybrid Parallel Training of TensorFlow models”, ISC ‘20, https://arxiv.org/pdf/1911.05146.pdf

- 32. OpenPOWER-CINECA (July ‘20) 32Network Based Computing Laboratory • The field of Pathology (like many other medical disciplines) is moving Digital • Traditionally, a pathologist reviews a slide and carries out diagnosis based on prior knowledge and training • Experience matters • Can Deep Learning to be used to train thousands of slides and – Let the computer carry out the diagnosis – Narrow down the diagnosis and help a pathologist to make a final decision • Significant benefits in – Reducing diagnosis time – Making pathologists productive – Reducing health care cost AI-Driven Digital Pathology

- 33. OpenPOWER-CINECA (July ‘20) 33Network Based Computing Laboratory • Pathology whole slide image (WSI) – Each WSI = 100,000 x 100,000 pixels – Can not fit in a single GPU memory – Tiles are extracted to make training possible • Two main problems with tiles – Restricted tile size because of GPU memory limitation – Smaller tiles loose structural information • Can we use Model Parallelism to train on larger tiles to get better accuracy and diagnosis? • Reduced training time significantly on OpenPOWER + NVIDIA V100 GPUs – 7.25 hours (1 node, 4 GPUs) -> 27 mins (32 nodes, 128 GPUs) Exploiting Model Parallelism in AI-Driven Digital Pathology Courtesy: https://blog.kitware.com/digital-slide- archive-large-image-and-histomicstk-open-source- informatics-tools-for-management-visualization-and- analysis-of-digital-histopathology-data/ A. Jain, A. Awan, A. Aljuhani, J. Hashmi, Q. Anthony, H. Subramoni, D. K. Panda, R. Machiraju, and A. Parwani, “GEMS: GPU Enabled Memory Aware Model ParallelismSystem for DistributedDNN Training”,Supercomputing (SC ‘20), Accepted to be Presented.

- 34. OpenPOWER-CINECA (July ‘20) 34Network Based Computing Laboratory • MPI-driven Deep Learning – CPU-based Deep Learning – GPU-based Deep Learning • Out-of-core DNN training • Exploiting Hybrid (Data and Model) Parallelism • Use-Case: AI-Driven Digital Pathology • Commercial Support and Products Multiple Approaches taken up by OSU

- 35. OpenPOWER-CINECA (July ‘20) 35Network Based Computing Laboratory • Supported through X-ScaleSolutions (http://x-scalesolutions.com) • Benefits: – Help and guidance with installation of the library – Platform-specific optimizations and tuning – Timely support for operational issues encountered with the library – Web portal interface to submit issues and tracking their progress – Advanced debugging techniques – Application-specific optimizations and tuning – Obtaining guidelines on best practices – Periodic information on major fixes and updates – Information on major releases – Help with upgrading to the latest release – Flexible Service Level Agreements • Support being provided to Lawrence Livermore National Laboratory (LLNL) and KISTI, Korea Commercial Support for MVAPICH2, HiBD, and HiDL Libraries

- 36. OpenPOWER-CINECA (July ‘20) 36Network Based Computing Laboratory • Silver ISV member of the OpenPOWER Consortium • Provides flexibility: – To have MVAPICH2, HiDL and HiBD libraries getting integrated into the OpenPOWER software stack – A part of the OpenPOWER ecosystem – Can participate with different vendors for bidding, installation and deployment process • Introduced two new integrated products with support for OpenPOWER systems (Presented at the 2019 OpenPOWER North America Summit) – X-ScaleHPC – X-ScaleAI Silver ISV Member for the OpenPOWER Consortium

- 37. OpenPOWER-CINECA (July ‘20) 37Network Based Computing Laboratory X-ScaleHPC Package • Scalable solutions of communication middleware based on OSU MVAPICH2 libraries • “out-of-the-box” fine-tuned and optimal performance on various HPC systems including OpenPOWER platforms and GPUs • Contact us for more details and a free trial!! – [email protected]

- 38. OpenPOWER-CINECA (July ‘20) 38Network Based Computing Laboratory • High-performance solution for distributed training for your complex AI problems • Features: – Integrated package with TensorFlow, PyTorch, MXNet, Horovod, and MVAPICH2 MPI libraries – Targeted for both CPU-based and GPU-based Deep Learning Training – Integrated profiling and introspection support for Deep Learning Applications across the stacks (DeepIntrospect) – Support for OpenPOWER and x86 platforms – Support for InfiniBand, RoCE and NVLink Interconnects – Out-of-the-box optimal performance – One-click deployment and execution • Send an e-mail to [email protected] for free trial!! X-ScaleAI Product

- 39. OpenPOWER-CINECA (July ‘20) 39Network Based Computing Laboratory … … X-ScaleAI Product with DeepIntrospect (DI) Capability Profiling Information (command line output) JSON Format

- 40. OpenPOWER-CINECA (July ‘20) 40Network Based Computing Laboratory X-ScaleAI Product with DeepIntrospect (DI) Capability More capabilities and features are coming …

- 41. OpenPOWER-CINECA (July ‘20) 41Network Based Computing Laboratory • Scalable distributed training is getting important • Requires high-performance middleware designs while exploiting modern interconnects • Provided a set of solutions to achieve scalable distributed training – MPI (MVAPICH2)-driven solution with Horovod for TensorFlow, PyTorch and MXNet – Optimized collectives for CPU-based training – CUDA-aware MPI with optimized collectives for GPU-based training – Out-of-core training and Hybrid Parallelism – Commercial support and product with Deep Introspection Capability • Will continue to enable the DL community to achieve scalability and high-performance for their distributed training Conclusions

- 42. OpenPOWER-CINECA (July ‘20) 42Network Based Computing Laboratory Funding Acknowledgments Funding Support by Equipment Support by

- 43. OpenPOWER-CINECA (July ‘20) 43Network Based Computing Laboratory Personnel Acknowledgments Current Students (Graduate) – Q. Anthony (Ph.D.) – M. Bayatpour (Ph.D.) – C.-H. Chu (Ph.D.) – A. Jain (Ph.D.) – K. S. Khorassani(Ph.D.) Past Students – A. Awan (Ph.D.) – A. Augustine (M.S.) – P. Balaji (Ph.D.) – R. Biswas (M.S.) – S. Bhagvat (M.S.) – A. Bhat (M.S.) – D. Buntinas (Ph.D.) – L. Chai (Ph.D.) – B. Chandrasekharan (M.S.) – S. Chakraborthy (Ph.D.) – N. Dandapanthula (M.S.) – V. Dhanraj (M.S.) – R. Rajachandrasekar (Ph.D.) – D. Shankar (Ph.D.) – G. Santhanaraman (Ph.D.) – N. Sarkauskas (B.S.) – A. Singh (Ph.D.) – J. Sridhar (M.S.) – S. Sur (Ph.D.) – H. Subramoni (Ph.D.) – K. Vaidyanathan (Ph.D.) – A. Vishnu (Ph.D.) – J. Wu (Ph.D.) – W. Yu (Ph.D.) – J. Zhang (Ph.D.) Past Research Scientist – K. Hamidouche – S. Sur – X. Lu Past Post-Docs – D. Banerjee – X. Besseron – H.-W. Jin – T. Gangadharappa (M.S.) – K. Gopalakrishnan (M.S.) – J. Hashmi (Ph.D.) – W. Huang (Ph.D.) – W. Jiang (M.S.) – J. Jose (Ph.D.) – S. Kini (M.S.) – M. Koop (Ph.D.) – K. Kulkarni (M.S.) – R. Kumar (M.S.) – S. Krishnamoorthy (M.S.) – K. Kandalla (Ph.D.) – M. Li (Ph.D.) – P. Lai (M.S.) – J. Liu (Ph.D.) – M. Luo (Ph.D.) – A. Mamidala (Ph.D.) – G. Marsh (M.S.) – V. Meshram (M.S.) – A. Moody (M.S.) – S. Naravula (Ph.D.) – R. Noronha (Ph.D.) – X. Ouyang (Ph.D.) – S. Pai (M.S.) – S. Potluri (Ph.D.) – K. Raj (M.S.) – P. Kousha (Ph.D.) – N. S. Kumar (M.S.) – B. Ramesh (Ph.D.) – K. K. Suresh (Ph.D.) – N. Sarkauskas (B.S.) – J. Lin – M. Luo – E. Mancini Past Programmers – D. Bureddy – J. Perkins Current ResearchSpecialist – J. Smith – S. Marcarelli – A. Ruhela – J. Vienne Current Post-doc – M. S. Ghazimeersaeed – K. Manian Current Student (Undergraduate) – V. Gangal (B.S.) Past Research Specialist – M. Arnold Current ResearchScientist – A. Shafi – H. Subramoni – S. Srivastava (M.S.) – S. Xu (Ph.D.) – Q. Zhou (Ph.D.) – H. Wang Current Senior Research Associate – J. Hashmi

- 44. OpenPOWER-CINECA (July ‘20) 44Network Based Computing Laboratory Thank You! Network-Based Computing Laboratory http://nowlab.cse.ohio-state.edu/ [email protected] The High-Performance MPI/PGAS Project http://mvapich.cse.ohio-state.edu/ The High-Performance Deep Learning Project http://hidl.cse.ohio-state.edu/ The High-Performance Big Data Project http://hibd.cse.ohio-state.edu/