Cray HPC Environments for Leading Edge Simulations

- 1. HPC Environments for Leading Edge Simulations
  Greg Clifford, Manufacturing Segment Manager

- 2. Topics:
  ● Cray Inc. and some customer examples
  ● Application scaling examples
  ● The manufacturing segment is set for a significant step forward in performance

- 3. Cray Industry Solutions (Cray Inc., 2013)
  ● Manufacturing, Earth Sciences, Energy, Life Sciences, Financial Services
  ● Anything That Can Be Simulated Needs a Cray: Computation, Analysis, Storage/Data

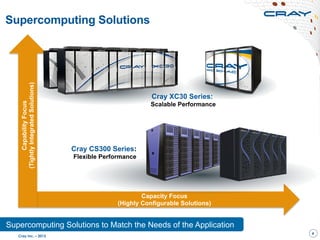

- 4. Supercomputing Solutions to Match the Needs of the Application
  ● Capacity Focus (Highly Configurable Solutions): Cray CS300 Series, Flexible Performance
  ● Capability Focus (Tightly Integrated Solutions): Cray XC30 Series, Scalable Performance

- 5. Cray Specializes in Large Systems: Over 45 PF in XE6 and XK7 Systems (Cray Higher-Ed Roundtable, July 22, 2013)

- 6. New Clothes: NERSC Edison (10/3/13)

- 7. Running Large Jobs: NERSC “Now Computing” Snapshot (taken Sept. 4, 2013)

- 8. WRF Hurricane Sandy Simulation on Blue Waters (Cray Confidential)
  ● Initial analysis of WRF output is showing some very striking features of Hurricane Sandy. The difference in detail between a 3 km WRF simulation and the 500 m Blue Waters run is apparent in these radar reflectivity results.
  [Figure panels: 3 km WRF results; Blue Waters 500 m WRF results]

- 9. WRF Hurricane Sandy Simulation on Blue Waters

- 10. Cavity Flow Studies Using HECToR (Cray XE6), S. Lawson et al., University of Liverpool
  ● 1.1 billion grid point model
  ● Scaling to 24,000 cores
  ● Good agreement between experiments and CFD
  * Ref: http://www.hector.ac.uk/casestudies/ucav.php

- 11. CTH Shock Physics
  CTH is a multi-material, large-deformation, strong-shock-wave solid mechanics code and one of the most heavily used computational structural mechanics codes on DoD HPC platforms.
  ● “For large models, CTH will show linear scaling to over 10,000 cores. We have not seen a limit to the scalability of the CTH application.”
  ● “A single parametric study can easily consume all of the ORNL Jaguar resources.”
  (CTH developer)

- 12. Seismic Processing Compute Requirements
  [Figure: compute requirements in petaFLOPS (log scale, 0.1 to 1,000) vs. year (1995 to 2020), rising with seismic algorithm complexity: asymptotic approximation imaging; paraxial isotropic/anisotropic imaging; isotropic/anisotropic modeling; isotropic/anisotropic RTM; elastic modeling/RTM; isotropic/anisotropic FWI; visco-elastic modeling; elastic FWI; petro-elastic inversion; visco-elastic FWI. The one-petaflop level is marked.]
  A petaflop-scale system is required to deliver the capability to move to a new level of seismic imaging.

- 13. Compute Requirements in CAE
  [Figure: compute requirements by simulation fidelity and number of runs (single run to multiple runs: design exploration, design optimization, robust design), from desktop (16 cores) through departmental cluster (100 cores) and central compute cluster (1,000 cores) to supercomputing environment (>2,000 cores)]
  “Simulation allows engineers to know, not guess – but only if IT can deliver dramatically scaled up infrastructure for mega simulations…. 1000’s of cores per mega simulation” (CAE developer)

- 14. CAE Application Workload
  [Figure: workload share: CFD (30%), Structures (20%), Impact/Crash (40%)]
  ● The vast majority of large simulations are MPI parallel
  ● Basically the same codes are used across all industries

- 15. CAE Workload Status
  ● ISV codes dominate the commercial CAE workload
  ● Many large manufacturing companies have HPC systems with >>10,000 cores
  ● Even in large organizations, very few jobs use more than 256 MPI ranks
  ● There is a huge discrepancy between the scalability in production at large HPC centers and in the commercial CAE environment
  Why aren’t commercial CAE environments leveraging scaling for better performance?

- 16. Often the full power available is not being leveraged

- 17. Innovations in the Field of Combustion

- 18. Propagation of HPC to Commercial CAE
  Early adoption:
  ● c. 1978: Cray-1, vector processing; serial
  ● c. 1983: Cray X-MP, SMP, 2-4 cores
  ● c. 1998: MPI parallel, “Linux cluster”, low density, slow interconnect, ~100 MPI ranks
  ● c. 2007: extreme scalability, proprietary interconnect, 1,000s of cores; requires “end-to-end parallel”
  Common in industry:
  ● c. 1983: Cray X-MP, Convex; MSC/NASTRAN
  ● c. 1988: Cray Y-MP, SGI; crash
  ● c. 2003: high density, fast interconnect; crash & CFD
  ● c. 2013: Cray XE6 driving apps: CFD, CEM, ???

- 19. Obstacles to Extreme Scalability Using ISV CAE Codes
  1. Most CAE environments are configured for capacity computing
     ● It is difficult to schedule 1,000s of cores for one simulation
     ● Simulation size and complexity are driven by the available compute resource
     ● This will change as compute environments evolve
  2. Application license fees are an issue
     ● Application cost can be 2-5 times the hardware cost
     ● ISVs are encouraging scalable computing and are adjusting their licensing models
  3. Applications must deliver “end-to-end” scalability
     ● “Amdahl’s Law” requires the vast majority of the code to be parallel
     ● This includes all of the features in a general-purpose ISV code
     ● This is an active area of development for CAE ISVs
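Obstacle 3 can be made concrete with Amdahl’s Law: if a fraction p of a run’s single-core time parallelizes perfectly over N ranks, the speedup is S = 1 / ((1 - p) + p / N). The Python sketch below is illustrative only: the function names are hypothetical, not taken from any ISV code, and it assumes the parallel part scales perfectly with zero communication cost. It computes S and the smallest parallel fraction that keeps a given efficiency S / N.

```python
def amdahl_speedup(parallel_fraction: float, ranks: int) -> float:
    """Amdahl's Law: speedup on `ranks` ranks when `parallel_fraction` of the
    single-core runtime parallelizes perfectly and the rest stays serial."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if ranks < 1:
        raise ValueError("ranks must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / ranks)


def parallel_fraction_needed(target_efficiency: float, ranks: int) -> float:
    """Smallest parallel fraction p for which speedup / ranks >= target_efficiency.

    Efficiency E = 1 / (ranks * (1 - p) + p); solving E >= e for p gives
    p >= (ranks - 1/e) / (ranks - 1).
    """
    if not 0.0 < target_efficiency <= 1.0:
        raise ValueError("target_efficiency must be in (0, 1]")
    if ranks < 2:
        return 0.0  # a single rank is always 100% efficient
    return max(0.0, (ranks - 1.0 / target_efficiency) / (ranks - 1))


if __name__ == "__main__":
    # Illustrative parallel fractions; not measured values for any CAE code.
    for p in (0.95, 0.99, 0.999):
        print(f"p = {p}: speedup on 1,000 ranks = {amdahl_speedup(p, 1000):.1f}")
    p_needed = parallel_fraction_needed(0.5, 1000)
    print(f"50% efficiency on 1,000 ranks needs p >= {p_needed:.6f}")
```

Under these assumptions, a code that is 99% parallel reaches a speedup of only about 91 on 1,000 ranks, and holding 50% efficiency at that scale requires p of roughly 0.999. This is why every feature used in a run has to be parallel, not just the solver.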

- 20. Collaboration with Cray
  Copyright © 2013 Altair Engineering, Inc. Proprietary and Confidential. All rights reserved.
  • Fine-tuned AcuSolve for maximum efficiency on Cray hardware
  • Using Cray MPI libraries
  • Efficient core placement
  • AcuSolve package built specifically for Cray’s Extreme Scalability Mode (ESM), shipped for the first time in V12.0
  • Extensively tested the code on various Cray systems (XE6, XC30)

- 21. Version 12.0: More Scalable
  • Optimized domain decomposition for hybrid MPI/OpenMP
  • Added MPI performance optimizer
  • Nearly perfect scalability seen down to ~4k nodes/subdomain
  [Figure: parallel performance on a Linux cluster with IB interconnect]
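The “~4k nodes/subdomain” observation suggests a simple sizing rule: split the mesh into as many subdomains as possible while keeping roughly 4,000 mesh nodes or more in each. The Python sketch below is hypothetical, not an AcuSolve setting or API. It assumes a balanced partition, one MPI rank per subdomain, and a fixed number of OpenMP threads per rank, and it treats the 4,000-node threshold as a tunable parameter.

```python
from typing import Tuple


def max_subdomains(mesh_nodes: int, min_nodes_per_subdomain: int = 4000) -> int:
    """Largest subdomain count that, with a balanced partition, keeps at least
    `min_nodes_per_subdomain` mesh nodes in every subdomain (minimum one)."""
    if mesh_nodes < 1 or min_nodes_per_subdomain < 1:
        raise ValueError("mesh_nodes and min_nodes_per_subdomain must be positive")
    return max(1, mesh_nodes // min_nodes_per_subdomain)


def hybrid_layout(mesh_nodes: int, threads_per_rank: int,
                  min_nodes_per_subdomain: int = 4000) -> Tuple[int, int]:
    """Return (MPI ranks, total cores), assuming one rank per subdomain and
    `threads_per_rank` OpenMP threads per rank (hypothetical layout)."""
    if threads_per_rank < 1:
        raise ValueError("threads_per_rank must be at least 1")
    ranks = max_subdomains(mesh_nodes, min_nodes_per_subdomain)
    return ranks, ranks * threads_per_rank


if __name__ == "__main__":
    # Hypothetical 20-million-node mesh, 2 OpenMP threads per MPI rank.
    ranks, cores = hybrid_layout(20_000_000, threads_per_rank=2)
    print(f"{ranks} MPI ranks x 2 threads = {cores} cores")
```

The point of the rule is that a larger mesh raises the core count at which scaling stays efficient. Adding OpenMP threads per rank raises the total core count without shrinking subdomains further.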

- 22. Version 12.0: Larger Problems
  • In V12.0, the capacity of AcuSolve is increased to efficiently solve problems exceeding 1 billion elements
  • Example: transient DDES simulation of F1 drafting on ~1 billion elements

- 23. Case Studies
  • Case studies are real-life engineering problems; all tests were performed in-house by Cray
  • Case Study #1: aerodynamics of a car model (ASMO), referred to as 70M
    • 70 million elements
    • Transient incompressible flow (implicit solve)

- 24. Case Studies
  • Case Study #2: cabin comforter model, referred to as 140M
    • 140 million elements
    • Steady incompressible flow + heat transfer (implicit solve) + radiation

- 25. Performance Results (Combined)
  [Figure: parallel performance vs. core count for 140M on Cray XC30 (Sandy Bridge + Aries); 70M on Cray XC30 (Sandy Bridge + Aries); 70M on Cray XE6 (AMD + Gemini); 70M on a Linux cluster (Sandy Bridge + IB); ideal scaling line; small core count region marked]
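Scaling curves like these are usually read against an ideal line. The Python sketch below computes speedup relative to the smallest-core-count run and parallel efficiency (speedup / cores) from a table of wall-clock times. It assumes the ideal line is referenced to the smallest measured core count, and the function name is hypothetical. The timings in the usage block are placeholders, not the AcuSolve results on the slide.

```python
from typing import Dict, List, Tuple


def scaling_table(timings: Dict[int, float]) -> List[Tuple[int, float, float]]:
    """Return (cores, speedup, efficiency) rows sorted by core count.

    Speedup is expressed in "effective cores" relative to the smallest run:
    speedup = base_cores * base_time / time, so ideal scaling gives
    speedup == cores and efficiency == 1.0.
    """
    if not timings:
        raise ValueError("timings must not be empty")
    if any(c < 1 or t <= 0 for c, t in timings.items()):
        raise ValueError("core counts and times must be positive")
    base_cores = min(timings)
    base_time = timings[base_cores]
    rows = []
    for cores in sorted(timings):
        speedup = base_cores * base_time / timings[cores]
        rows.append((cores, speedup, speedup / cores))
    return rows


if __name__ == "__main__":
    # Placeholder wall-clock times in seconds; replace with your own measurements.
    measured = {96: 1200.0, 192: 610.0, 384: 320.0, 768: 175.0}
    for cores, speedup, eff in scaling_table(measured):
        print(f"{cores:5d} cores: speedup {speedup:7.1f}, efficiency {eff:.0%}")
```

Plotting the speedup column against cores, together with the line speedup = cores, reproduces the layout of an “Ideal” reference curve. Efficiency dropping as cores increase is where one platform’s curve departs from that line.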

- 26. Webinar Conclusions
  • Cray’s XC30 demonstrated the best performance in terms of both scalability and throughput
  • Parallel performance of the XE6 and XC30 interconnects was superior to IB
  • For small core counts (below approximately 750 cores), AcuSolve parallel performance is satisfactory across multiple platforms
  • Throughput is mostly affected by core type (e.g., Sandy Bridge vs. Westmere)

- 27. Summary of Cray Value
  1. Extreme-fidelity simulations require HPC performance, and extreme scalability is the only way to achieve this performance.
  2. Cray systems are designed for large production HPC environments, whether the workload is a single simulation using 10,000 cores or 100 simulations each using 100 cores.
  3. The technology is in place for CAE environments to leverage >>1,000s of cores per simulation, and extreme scaling in commercial environments is overdue.

- 28. Questions?