Putting some "logic" in LVM.

- 1. LVM: It's only logical. Steven Lembark, Workhorse Computing

- 4. What's so logical about LVM? Simple: It isn't physical. Think of it as "virtual memory" on a disk.

- 6. Begin at the beginning... Disk drives were 5, maybe 10MB. Booting from tape takes too long. Can't afford a whole disk for swap. What to do? Tar overlays? RA-70 packs?

- 7. Partitions save the day! Divide the drive for swap, boot, O/S. Allows separate mount points. New partitions == New mount points.

- 8. What you used to do Partition the drive. Remembering to keep a separate partition for /boot. Using parted once the original layout was outgrown. Figuring out how to split space with new disks...

- 9. Size matters. Say you have something big: 20MB of data. Tape overlays take too long. RA-70s require remounts. How can we manage it?

- 10. Making it bigger We need an abstraction: Vnodes. Instead of "hardware".

- 11. Veritas & HP Developed different ways to do this. Physical drives. Grouped into "blocks". Allocated into "volumes". Fortunately Linux uses HP's scheme.

- 12. First few steps: pvcreate initializes physical storage (whole disk or partition). vgcreate groups multiple drives into a pool of blocks. lvcreate re-partitions blocks into mountable units.

- 14. Example: single-disk desktop GRUB2 speaks LVM: goodbye, boot partitions! Two partitions: primary swap + everything else. Call them /dev/sda{1,2}. Swap == 2 * RAM, for hibernate and recovery. Everything else == LVM.

- 20. Example: single-disk desktop # fdisk /dev/sda; (sda1 => 82 swap, sda2 => 8e Linux LVM) # pvcreate /dev/sda2; # vgcreate vg00 /dev/sda2; # lvcreate -L 8G -n root vg00; # mkfs.xfs -blog=12 -L root /dev/vg00/root; # mount /dev/vg00/root /mnt/gentoo;
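
The same steps as a small script. This is a sketch, not the author's installer: it assumes the disk was already partitioned with fdisk (sda1 type 82, sda2 type 8e), adds an "mkswap" for the swap partition, writes the block size as "-b size=4096" (the same 4 KiB blocks as "-blog=12"), and uses a hypothetical DRY_RUN variable that only prints the commands unless it is set to 0.

```shell
#!/bin/sh
# Sketch: the single-disk desktop layout as a script.
# Assumes DISK is already partitioned with fdisk:
#   ${DISK}1 = type 82 (swap), ${DISK}2 = type 8e (Linux LVM).
# DRY_RUN is a hypothetical knob: 1 (default) only prints, 0 executes.

run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@" || exit 1
    fi
}

# setup_desktop DISK [VG] [ROOT_SIZE]
setup_desktop() {
    disk=$1
    vg=${2:-vg00}
    size=${3:-8G}                 # LVM's "G" suffix means GiB

    run mkswap "${disk}1"
    run pvcreate "${disk}2"
    run vgcreate "$vg" "${disk}2"
    run lvcreate -L "$size" -n root "$vg"
    run mkfs.xfs -b size=4096 -L root "/dev/$vg/root"   # 4 KiB blocks, as -blog=12
    run mount "/dev/$vg/root" /mnt/gentoo
}
```

Source the file, read the printed commands, then run "DRY_RUN=0 setup_desktop /dev/sda" as root.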

- 22. Finding yourself Ever get sick of UUIDs? Labels? Device paths? LVM assigns UUIDs to PVs, VGs, and LVs.

- 23. Finding yourself Ever get sick of UUIDs? Labels? Device paths? Let LVM do the walking: vgscan -v

- 24. Give Linux the boot mount -t proc none /proc; mount -t sysfs none /sys; /sbin/mdadm --verbose --assemble --scan; /sbin/vgscan --verbose; /sbin/vgchange -a y; /sbin/mount /dev/vg00/root /mnt/root; exec /sbin/switch_root /mnt/root /sbin/init;

- 25. Then root fills up... Say goodbye to parted. lvextend -L12G /dev/vg00/root; xfs_growfs /; Notice the lack of any umount.

- 26. Add a new disk /sbin/fdisk /dev/sdb; (sdb1 => 8e) pvcreate /dev/sdb1; vgextend vg00 /dev/sdb1; lvextend -L24G /dev/vg00/root; xfs_growfs /;
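
Both slides follow one pattern: optionally add a PV, extend the LV, grow the filesystem in place. A dry-run sketch, assuming root is XFS on /dev/vg00/root mounted on / (xfs_growfs is documented as taking the mount point), with a hypothetical DRY_RUN knob that only prints unless set to 0.

```shell
#!/bin/sh
# Sketch: grow the root LV, optionally adding a new PV to vg00 first.
# Assumes root is XFS on /dev/vg00/root, mounted on /.
# DRY_RUN is a hypothetical knob: 1 (default) only prints, 0 executes.

run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@" || exit 1
    fi
}

# grow_root NEW_SIZE [NEW_PV]
grow_root() {
    size=$1
    pv=${2:-}
    if [ -n "$pv" ]; then
        run pvcreate "$pv"            # label the new partition as a PV
        run vgextend vg00 "$pv"       # add its extents to the VG
    fi
    run lvextend -L "$size" /dev/vg00/root
    run xfs_growfs /                  # XFS grows while mounted: no umount
}
```

Newer LVM releases can fold the last two steps into one with "lvextend -r" (--resizefs), which also grows the filesystem.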

- 29. And another disk, and another... Let's say you've scrounged ten disks. One large VG. Then one disk fails. And the entire VG with it.

- 30. Adding volume groups Lesson: Over-large VGs become fragile. Fix: Multiple VGs partition the vulnerability. One disk won't bring down everything.

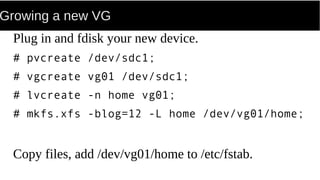

- 31. Growing a new VG Plug in and fdisk your new device. # pvcreate /dev/sdc1; # vgcreate vg01 /dev/sdc1; # lvcreate -l 100%FREE -n home vg01; # mkfs.xfs -blog=12 -L home /dev/vg01/home; Copy files, add /dev/vg01/home to /etc/fstab.
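
The new-VG steps as a function, a sketch under these assumptions: the new partition is already typed 8e, "home" takes the whole VG ("-l 100%FREE", since lvcreate needs some size), and the fstab line is printed for review rather than appended. DRY_RUN is a hypothetical knob that only prints unless set to 0.

```shell
#!/bin/sh
# Sketch: a separate VG for /home on a new device.
# Assumes PV is a partition already typed 8e; "home" gets the whole VG.
# DRY_RUN is a hypothetical knob: 1 (default) only prints, 0 executes.

run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@" || exit 1
    fi
}

# new_home_vg PV [VG]
new_home_vg() {
    pv=$1
    vg=${2:-vg01}
    run pvcreate "$pv"
    run vgcreate "$vg" "$pv"
    run lvcreate -l 100%FREE -n home "$vg"     # or -L SIZE to leave room
    run mkfs.xfs -b size=4096 -L home "/dev/$vg/home"
    # Print the fstab entry for review instead of editing /etc/fstab.
    printf '/dev/%s/home /home xfs defaults 0 0\n' "$vg"
}
```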

- 32. Your backups just got easier Separate mount points for "scratch": put /var/spool and /var/tmp on their own LVs. "find . -xdev" stays on one filesystem, so it skips them. Back up the persistent portion of /var with the rest of root.

- 33. More smaller volumes More /etc/fstab entries. Isolate disk failures to non-essential data. Back up by mount point. Use different filesystems (xfs vs. ext4).

- 34. RAID + LVM - LVs with copies using LVM. - Or make PVs out of mdadm volumes. LVs simplify handling huge RAID volumes.

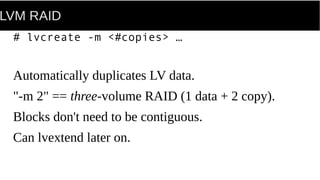

- 35. LVM RAID # lvcreate -m <#copies> … Automatically duplicates LV data. "-m 2" == three-volume RAID (1 data + 2 copies). Blocks don't need to be contiguous. Can lvextend later on.
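
A sketch of an LVM RAID1 LV, assuming "--type raid1" (the slide only shows "-m"), sizes in whole GiB, and a hypothetical DRY_RUN knob that only prints unless set to 0. The arithmetic is the point: every copy is a full image, so "-m 2" costs three times the usable size, plus small per-image metadata sub-LVs that this sketch ignores.

```shell
#!/bin/sh
# Sketch: LVM RAID1 LV with COPIES extra images, plus raw-space arithmetic.
# Assumes sizes in whole GiB; ignores the small per-image metadata sub-LVs.
# DRY_RUN is a hypothetical knob: 1 (default) only prints, 0 executes.

run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@" || exit 1
    fi
}

# raid1_lv NAME SIZE_GIB COPIES [VG]
raid1_lv() {
    name=$1
    size=$2
    copies=$3
    vg=${4:-vg00}
    run lvcreate --type raid1 -m "$copies" -L "${size}G" -n "$name" "$vg"
    # Every image is a full copy: 1 original + COPIES mirrors.
    echo "raw: $(( size * (copies + 1) ))G for ${size}G usable"
}
```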

- 36. LVM on RAID Division of labor: - mdadm for RAID. - LVM for mount points. Use mdadm to create space. Use LVM to manage it.

- 37. "Stride" for RAID > 1: LV blocks == RAID chunk ("page") size. Good for RAID 5, 6, 10. Meaningless for mirrors (LVM or hardware).

- 38. Monitored LVM # lvcreate --monitor y ... Use dmeventd for monitoring. Know about I/O errors. What else would you want to do at 3am?

- 40. Real SysAdmins don't need sleep! Archiving many GB takes time. Need stable filesystems. Easy: Kick everyone off, run the backups at 0300. If you enjoy that sort of thing.

- 41. Snapshots: mounted backups Not a copy of the whole LV. The snapshot pool holds the original versions of copy-on-write (COW) blocks: a stable version of the LV being backed up. Size == max data that might change during the snapshot's lifetime.

- 42. Lifecycle of a snapshot Find the LV behind the mount point. Snapshot it. Mount the snapshot and work with static contents. Drop the snapshot.
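
The lifecycle as a function. Step one, finding the LV behind a mount point, can be done with "findmnt -n -o SOURCE /var/postgres". The rest is sketched here under assumptions: an XFS origin given as /dev/VG/LV, a hypothetical "-snap" suffix for the snapshot name, and a hypothetical DRY_RUN knob that only prints unless set to 0. The snapshot is dropped even if the work fails, since a forgotten snapshot keeps collecting COW blocks until it fills.

```shell
#!/bin/sh
# Sketch: snapshot lifecycle (snapshot, mount read-only, work, drop).
# Assumes an XFS origin LV given as /dev/VG/LV; "-snap" suffix is hypothetical.
# DRY_RUN is a hypothetical knob: 1 (default) only prints, 0 executes.

run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"                             # return the command's status
    fi
}

# snapshot_do LV SNAP_SIZE MOUNTPOINT COMMAND...
snapshot_do() {
    lv=$1
    size=$2
    mnt=$3
    shift 3
    snap=${lv##*/}-snap                  # /dev/vg01/postgres -> postgres-snap
    snap_dev=${lv%/*}/$snap              # -> /dev/vg01/postgres-snap

    run lvcreate -L "$size" -s -n "$snap" "$lv" || return 1
    # ro,norecovery,nouuid: XFS options for mounting a copy of a
    # filesystem that is still mounted (and dirty) elsewhere.
    if ! run mount -o ro,norecovery,nouuid "$snap_dev" "$mnt"; then
        run lvremove -f "$snap_dev"
        return 1
    fi
    run "$@"
    status=$?
    run umount "$mnt"
    run lvremove -f "$snap_dev"          # always drop the snapshot
    return "$status"
}
```

For example: snapshot_do /dev/vg01/postgres 1G /mnt/backup find /mnt/backup -xdev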

- 43. Most common: backups. Live database, spool directory, disk cache, home dirs.

- 44. Backup a database Data and config under /var/postgres. Data on single LV /dev/vg01/postgres. At 0300, up to 1GB per hour is written. Daily backup takes about 30 minutes. VG keeps 8GB free for snapshots.

- 45. Backup a database # lvcreate -L1G -s -n pg-tmp /dev/vg01/postgres; 1G == twice the usual amount. Updates to /dev/vg01/postgres continue. Original pages are stored in /dev/vg01/pg-tmp. I/O errors in pg-tmp if > 1GB is written.
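
Where "twice the usual amount" comes from: the backup runs about 30 minutes at up to 1GB/hour, so roughly 0.5GB changes while the snapshot exists. As arithmetic, a sketch assuming 1GB/hour means 1024 MiB/hour and a default safety factor of 2:

```shell
#!/bin/sh
# Sketch: snapshot size = data changed during the backup * safety factor.
# Integer MiB arithmetic; the default factor of 2 mirrors "twice the usual amount".

# snap_size_mib RATE_MIB_PER_HOUR BACKUP_MINUTES [FACTOR]
snap_size_mib() {
    rate=$1
    minutes=$2
    factor=${3:-2}
    changed=$(( (rate * minutes + 59) / 60 ))   # MiB written, rounded up
    echo $(( changed * factor ))
}
```

Used as lvcreate -L "$(snap_size_mib 1024 30)m" -s -n pg-tmp /dev/vg01/postgres; LVM reads the "m" suffix as MiB, so this asks for 1024 MiB.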

- 46. Backup a database # lvcreate -L1G -s -n pg-tmp /dev/vg01/postgres; # mount --type xfs -o'ro,norecovery,nouuid' /dev/vg01/pg-tmp /mnt/backup; # find /mnt/backup -xdev … /mnt/backup is stable for duration of backup. /var/postgres keeps writing.

- 47. Backup a database One downside: the snapshot duplicates a running database, so restoring it takes extra steps to restart. Not difficult. Be prepared.

- 48. Giving away what you ain't got "Thin volumes". Like sparse files. Pre-allocate a pool of blocks. LVs grow as needed. Allows overcommit.

- 49. "Thin"? "Thick" == allocate LV blocks at creation time. "--thin" assigns a virtual size. Physical size varies with use.

- 50. Why bother? Filesystems that change over time. No need to pre-allocate all of the space. Add physical storage as needed. ETL intake. Archival. User scratch.

- 51. Example: Scratch space for users. Say you have ~30GB of free space. And three users. Each "needs" 40GB of space. No problem.

- 52. The pool is an LV. "--thinpool" labels the LV as a pool. Allocate 30GB into /dev/vg00/scratch. # lvcreate -L 30G --thinpool scratch vg00;

- 53. Virtually allocate LV "lvcreate -V" allocates virtual space out of the pool. "-V" == "virtual" # lvcreate -V 40G --thin -n thin_one /dev/vg00/scratch; # lvcreate -V 40G ...
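
Creating the pool and one thin LV per user, as a sketch. The "thin_<user>" naming is hypothetical (the slide uses thin_one), and DRY_RUN is a hypothetical knob that only prints unless set to 0.

```shell
#!/bin/sh
# Sketch: one thin pool in vg00 plus one thin LV per user.
# "thin_<user>" names are hypothetical; the slide uses thin_one.
# DRY_RUN is a hypothetical knob: 1 (default) only prints, 0 executes.

run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@" || exit 1
    fi
}

# make_scratch POOL_SIZE VIRTUAL_SIZE USER...
make_scratch() {
    pool_size=$1
    virt=$2
    shift 2
    run lvcreate -L "$pool_size" --thinpool scratch vg00
    for user in "$@"; do
        # -V sets the virtual size; real blocks come from the pool on write.
        run lvcreate -V "$virt" --thin -n "thin_$user" vg00/scratch
    done
}
```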

- 55. Virtually yours Allocated 120GiB using 30GB of disk. lvdisplay shows 0 used for each volume?? Right: None used. Yet. Each 40GiB is a limit.
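
How overcommitted is it? A sketch that sums the virtual sizes and divides by the pool size, treating GB and GiB as the same unit, as the slides do. Anything over 100% means the pool can run dry before every user reaches their limit; three 40GiB volumes on a 30GB pool come out at 400%.

```shell
#!/bin/sh
# Sketch: how far a thin pool is overcommitted, in percent (integer).
# Assumes all sizes are in the same unit.

# overcommit_pct POOL_SIZE VIRTUAL_SIZE...
overcommit_pct() {
    pool=$1
    shift
    total=0
    for v in "$@"; do
        total=$(( total + v ))
    done
    echo $(( total * 100 / pool ))
}
```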

- 57. Pure magic! Make a filesystem. Mount the lvols. df shows each as 40GiB. Everyone is happy... Until 30GB is used up.

- 58. Size does matter. No blocks left to allocate. Now what? Writing processes are "killable blocked": their I/O is held in the queue until "kill -KILL" or until space is available.

- 59. One fix: scrounge a disk vgextend vg00 <new PV>; lvextend -L<whatever> /dev/vg00/scratch; Bingo: free blocks.
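
Better to scrounge the disk before writers block. A sketch of a cron-able check, assuming the pool is vg00/scratch and an arbitrary 80% threshold; it reads the pool's "data_percent" field from lvs. LVM can also grow a pool on its own through dmeventd monitoring, via thin_pool_autoextend_threshold and thin_pool_autoextend_percent in lvm.conf, as long as the VG still has free extents.

```shell
#!/bin/sh
# Sketch: warn before a thin pool runs out of blocks.
# Assumes a pool such as vg00/scratch; the 80% default is an arbitrary example.

# pool_data_pct VG/POOL: data usage of a thin pool, e.g. "87.50"
pool_data_pct() {
    lvs --noheadings -o data_percent "$1" | tr -d ' '
}

# over_threshold PERCENT THRESHOLD: succeeds when PERCENT >= THRESHOLD
over_threshold() {
    awk -v p="$1" -v t="$2" 'BEGIN { exit !(p + 0 >= t + 0) }'
}

# check_pool VG/POOL [THRESHOLD]: 0 = fine, 1 = filling up, 2 = no data
check_pool() {
    pct=$(pool_data_pct "$1")
    [ -n "$pct" ] || return 2
    if over_threshold "$pct" "${2:-80}"; then
        echo "$1 is ${pct}% full: time to vgextend + lvextend" >&2
        return 1
    fi
    return 0
}
```

From cron: check_pool vg00/scratch 80 || mail the admin.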

- 60. Reduce, reuse, recycle fstrim(8) discards unused blocks from a filesystem. Reduces virtual allocations. Allows virtual volumes to re-grow: fstrim --all --verbose; cron is your friend.

- 61. Highly dynamic environment Weekly doesn't cut it for: download directories, space to uncompress and compile large projects, or a notebook with a small SSD.

- 62. Automatic real-time trimming 3.0+ kernel w/ "TRIM". Device needs "FITRIM". http://xfs.org/index.php/FITRIM/discard

- 63. Sanity check: discard available? $ cat /sys/block/sda/queue/discard_max_bytes; 2147450880 $ cat /sys/block/dm-8/queue/discard_max_bytes; 2147450880 So far so good...
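
The same check as a function, a sketch assuming the sysfs layout on this slide; a discard_max_bytes of 0 means the device does not support discard. The optional second argument is a hypothetical hook for testing against a different sysfs root.

```shell
#!/bin/sh
# Sketch: does a block device advertise discard (TRIM) support?
# discard_max_bytes == 0 means no discard; a missing file counts as "no".

# has_discard DEVICE [SYSFS_ROOT], e.g. has_discard sda; has_discard dm-8
has_discard() {
    f="${2:-/sys}/block/$1/queue/discard_max_bytes"
    [ -r "$f" ] || return 1
    [ "$(cat "$f")" -gt 0 ]
}
```

Check both layers, as the slide does: for dev in sda dm-8; do has_discard "$dev" && echo "$dev: discard OK"; done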

- 64. Do the deed In /etc/fstab: /dev/vg00/scratch_one /scratch/jowbloe xfs defaults,discard 0 1 or mount -o defaults,discard /foo /mnt/foo;

- 65. What you see is all you get Dynamic discard == overhead. Often not worse than network mounts. YMMV... Avoids "just in case" provisioning. Good with SSD, battery-backed RAID.

- 67. LVM benefits - Saner mount points. - Hot backups. - Dynamic space manglement. Seems logical?