Running TensorFlow in Production

- 1. TensorFlow meetup, 07 Aug 2018, Ghent

- 2. Today ● Kubeflow: Robbe Sneyders (@RobbeSneyders) ● TensorFlow Transform: Matthias Feys (@FsMatt) ● TensorFlow Hub & TensorFlow Serving: Stijn Decubber (@sdcubber)

- 3. Next time ● Next meetup: 08/10/2018 ● PyTorch vs Keras ● ... ● ... ● ...

- 5. What is tf.Transform? Library for preprocessing data with TensorFlow ● Structured way of analyzing and transforming big datasets ● Removes "training-serving skew"

- 6. Why tf.Transform? (Diagram: a raw record such as {weight:100, x:99, y:12} is preprocessed both in a batch process and in a stream process.)

- 7. Why tf.Transform? (Diagram: the same record {weight:100, x:99, y:12} passes through tf.Transform.)

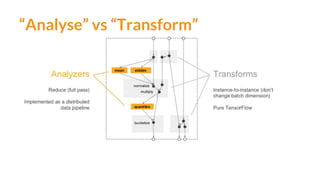

- 8. How does it work? 1. “Analyze” step, similar to the scikit-learn “fit” step ○ Iterates over the complete dataset and creates a TF graph 2. “Transform” step, similar to the scikit-learn “transform” step ○ Uses the TF graph from the “Analyze” step ○ Transforms the complete dataset 3. The same TF graph can be used during serving. The “Analyze” and “Transform” steps both use the same preprocessing function.
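The Analyze/Transform split can be sketched in plain Python, without TensorFlow: `analyze` makes one full pass over the dataset to compute constants (a mean, a standard deviation, a vocabulary), and `transform` applies those frozen constants row by row, so training and serving run the identical function. This is a conceptual sketch under stated assumptions, not the tf.Transform API: the field names `x` and `color`, both function names, and the alphabetical vocabulary order are hypothetical. In tf.Transform itself, analyzers such as `tft.scale_to_z_score` and `tft.compute_and_apply_vocabulary` play these roles inside a single `preprocessing_fn`.

```python
import statistics
from typing import Dict, List


def analyze(dataset: List[Dict]) -> Dict:
    """'Analyze' step (~ scikit-learn fit): one full pass over the dataset
    to compute the constants the preprocessing needs.
    Hypothetical fields: numeric 'x' and categorical 'color'."""
    xs = [row["x"] for row in dataset]
    colors = sorted({row["color"] for row in dataset})
    return {
        "x_mean": statistics.mean(xs),
        "x_std": statistics.pstdev(xs),
        # Alphabetical vocabulary order is an arbitrary choice for this sketch.
        "vocab": {color: i for i, color in enumerate(colors)},
    }


def transform(row: Dict, constants: Dict) -> Dict:
    """'Transform' step (~ scikit-learn transform): applies the frozen
    constants to one row. The same function is reused unchanged at serving
    time, so training and serving cannot drift apart."""
    std = constants["x_std"] or 1.0  # guard against a constant column
    return {
        "x_scaled": (row["x"] - constants["x_mean"]) / std,
        "color_id": constants["vocab"].get(row["color"], -1),  # -1 = unseen value
    }


if __name__ == "__main__":
    train = [{"x": 1.0, "color": "red"}, {"x": 3.0, "color": "blue"}]
    constants = analyze(train)                                  # batch: analyze once
    print([transform(row, constants) for row in train])         # batch: transform all
    print(transform({"x": 2.0, "color": "green"}, constants))   # serving: one request
```

Because the constants are computed once and then only read, a serving request is scaled exactly like the training data, even when it contains a value the training set never saw.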

- 9. Preprocessing function in tf.Transform

- 16. Goal of “Analyze” step

- 17. Running on Apache Beam ● Open-source, unified model for defining both batch and streaming data-parallel processing pipelines. ● Using one of the open-source Beam SDKs, you build a program that defines the pipeline. ● The pipeline is then executed by one of Beam’s supported distributed processing back-ends, which include Apache Apex, Apache Flink, Apache Spark, and Google Cloud Dataflow. (Diagram: the Beam Java, Beam Python and other-language SDKs feed the Beam Model for pipeline construction; the Beam Model's Fn Runners handle execution on Apache Flink, Apache Spark and Cloud Dataflow.) Source: https://beam.apache.org

- 18. Apache Beam key concepts ● Pipelines: a data processing job made of a series of computations, including input, processing, and output ● PCollections: bounded (or unbounded) datasets that represent the input, intermediate and output data in pipelines ● PTransforms: data processing steps in a pipeline that take one or more PCollections as input and produce PCollections as output ● I/O sources and sinks: APIs for reading and writing data, which are the roots and endpoints of the pipeline. Source: https://beam.apache.org
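To make the four concepts concrete, here is a toy, in-memory analogue written with the standard library only. It is not Apache Beam: the classes and the `Map`/`Filter`/`run_pipeline` helpers are hypothetical stand-ins that borrow Beam's vocabulary and its `pcoll | transform` chaining style. The real SDK (`apache_beam`) provides `beam.Pipeline`, `beam.Create`, `beam.Map` and `beam.Filter`, and hands execution to one of the runners listed on the previous slide.

```python
from typing import Callable, Iterable, List


class PCollection:
    """Toy bounded PCollection: an immutable snapshot of elements."""

    def __init__(self, elements: Iterable) -> None:
        self.elements = tuple(elements)

    def __or__(self, transform: "PTransform") -> "PCollection":
        # Beam-style chaining: pcoll | transform -> a new PCollection.
        return transform.expand(self)


class PTransform:
    """Toy PTransform: turns one input PCollection into one output PCollection."""

    def __init__(self, fn: Callable[[tuple], Iterable]) -> None:
        self.fn = fn

    def expand(self, pcoll: PCollection) -> PCollection:
        return PCollection(self.fn(pcoll.elements))


def Map(f: Callable) -> PTransform:
    """Element-wise transform (name borrowed from Beam)."""
    return PTransform(lambda xs: [f(x) for x in xs])


def Filter(pred: Callable) -> PTransform:
    """Keep only elements matching pred (name borrowed from Beam)."""
    return PTransform(lambda xs: [x for x in xs if pred(x)])


def run_pipeline(source: Iterable, transforms: List[PTransform], sink: list) -> None:
    pcoll = PCollection(source)    # I/O source: root of the pipeline
    for t in transforms:
        pcoll = pcoll | t          # each step yields a new intermediate PCollection
    sink.extend(pcoll.elements)    # I/O sink: endpoint of the pipeline


if __name__ == "__main__":
    out: list = []
    records = [{"weight": 100, "x": 99, "y": 12}, {"weight": 0, "x": 1, "y": 2}]
    run_pipeline(records, [Filter(lambda r: r["weight"] > 0), Map(lambda r: r["x"] + r["y"])], out)
    print(out)
```

Making each `PCollection` immutable mirrors the Beam model, where a transform never modifies its input but always produces a new collection.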

- 19. Demo time (repo: http://bit.ly/tftransformdemo)

- 20. tf.Transform Library for preprocessing data with TensorFlow ● Structured way of analyzing and transforming big datasets ● Removes "training-serving skew"

- 21. TensorFlow Hub

- 22. Why TF Hub? Many state-of-the-art ML models are trained on huge datasets (ImageNet) and require massive amounts of compute to train (VGG, Inception…). However, reusing these models for other applications (transfer learning) can: ● Improve training speed ● Improve generalization and accuracy ● Make it possible to train with smaller datasets

- 23. What is TF Hub? https://www.tensorflow.org/hub/ ● TF Hub is a library for the publication and consumption of ML models ● Similar to the Caffe Model Zoo, Keras Applications… ● But easier for everyone to publish and host models ● A module is a self-contained piece of a graph together with its weights and assets ● The weights of a module can be retrained or kept fixed

- 24. How to use it? The model graph and weights are downloaded when a Module is instantiated; after that, the module is added to the graph each time it is called (TensorFlow 1.x API):

```python
import tensorflow as tf
import tensorflow_hub as hub

# Instantiating a Module downloads its graph and weights.
m = hub.Module("https://tfhub.dev/google/progan-128/1")

with tf.Graph().as_default():
    module_url = "https://tfhub.dev/google/nnlm-en-dim128-with-normalization/1"
    embed = hub.Module(module_url)
    # Calling the module adds its computation to the current graph.
    embeddings = embed(["A long sentence.", "single-word", "http://example.com"])

    with tf.Session() as sess:
        # Initialize the module's variables and lookup tables before running it.
        sess.run(tf.global_variables_initializer())
        sess.run(tf.tables_initializer())
        print(sess.run(embeddings))
```

This returns embeddings without training a model yourself (NASNet: you get 62,000+ GPU-hours of training).

- 25. Exporting and hosting modules. A module handle such as "https://tfhub.dev/google/progan-128/1" consists of a repo (tfhub.dev), a publisher (google), a model (progan-128) and a version (1). Exporting: define a graph, add a signature, and call create_module_spec:

```python
import tensorflow as tf
import tensorflow_hub as hub


def module_fn():
    inputs = tf.placeholder(dtype=tf.float32, shape=[None, 50])
    # tf.layers has no fully_connected; dense with ReLU matches the default
    # activation of tf.contrib.layers.fully_connected.
    layer1 = tf.layers.dense(inputs, 200, activation=tf.nn.relu)
    layer2 = tf.layers.dense(layer1, 100, activation=tf.nn.relu)
    outputs = dict(default=layer2, hidden_activations=layer1)
    # Add default signature.
    hub.add_signature(inputs=inputs, outputs=outputs)


spec = hub.create_module_spec(module_fn)
```

Hosting: export the trained model, create a tarball and upload it.
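The handle layout and the tarball step can be sketched with the standard library: split a handle into repo, publisher, model and version, and pack an exported module directory into a `.tar.gz` for upload. `HubHandle`, `parse_hub_handle` and `make_module_tarball` are hypothetical helpers, not TF Hub functions. Archiving the directory's *contents* at the tarball root (rather than the directory itself) is an assumption about what a hosting service expects, and the upload step is not shown.

```python
import os
import tarfile
import tempfile
from typing import NamedTuple
from urllib.parse import urlparse


class HubHandle(NamedTuple):
    repo: str
    publisher: str
    model: str
    version: int


def parse_hub_handle(url: str) -> HubHandle:
    """Split a handle of the form https://<repo>/<publisher>/<model>/<version>."""
    parsed = urlparse(url)
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) != 3 or not parts[2].isdecimal():
        raise ValueError(f"unexpected module handle path: {parsed.path!r}")
    publisher, model, version = parts
    return HubHandle(parsed.netloc, publisher, model, int(version))


def make_module_tarball(export_dir: str, tarball_path: str) -> None:
    """Pack the contents of export_dir (not the folder itself) into a .tar.gz."""
    with tarfile.open(tarball_path, "w:gz") as tar:
        for name in sorted(os.listdir(export_dir)):
            tar.add(os.path.join(export_dir, name), arcname=name)


if __name__ == "__main__":
    print(parse_hub_handle("https://tfhub.dev/google/progan-128/1"))
    with tempfile.TemporaryDirectory() as tmp:
        export_dir = os.path.join(tmp, "export")  # stand-in for a real module export
        os.makedirs(os.path.join(export_dir, "variables"))
        open(os.path.join(export_dir, "saved_model.pb"), "wb").close()
        tarball = os.path.join(tmp, "module.tar.gz")
        make_module_tarball(export_dir, tarball)
        with tarfile.open(tarball) as tar:
            print(sorted(tar.getnames()))
```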

- 26. TF Hub applications ● Images ● Natural language ● More coming… (video, audio…)

- 28. What is Serving? Serving is how you apply a model, after you’ve trained it

- 29. What is Serving? (Diagram: the client side sends a request message to the server side, which returns a prediction message as the response.)

- 30. Why TF Serving? Goals: ● Online, low-latency ● Multiple models, multiple versions ● Should scale with demand: K8S (Diagram: Data → Model → Application?)

- 31. What is TF Serving? ● Flexible, high-performance serving system for machine learning models, designed for production environments ● Can be hosted on, for example, Kubernetes ○ ~ ML Engine in your own Kubernetes cluster https://github.com/tensorflow/serving (Diagram: Data, Model, Serving, Application)

- 32. Main architecture (diagram): on the server side, the TensorFlow Serving libraries scan the file system for model versions (Model v1, Model v2) and load them through a Loader; the Version Manager publishes new versions to a servable handler, which serves the model; on the client side, applications send gRPC/REST requests.

- 33. How? General pipeline: 1. Train the model (custom TF, Keras, Estimators) 2. Export the model 3. Host a server (TF Serving) 4. Make requests (HTTP, gRPC)

- 34. Exporting a model. Three APIs: 1. Regress: one input tensor, one output tensor 2. Classify: one input tensor; outputs: classes and scores 3. Predict: arbitrarily many input and output tensors. SavedModel is the universal serialization format for TF models: ● Supports multiple graphs that share variables ● A SignatureDef fully specifies the inference computation by its inputs and outputs ● Universal format for many models: Estimators, Keras, custom TF... (Diagram: model graph + model weights)

- 35. Custom TF models. Idea: specify the inference graph and store it together with the model weights. ● SignatureDef: specifies the inference computation ● Serving key: identifies the metagraph ● Builder: combines the model weights and the {key: SignatureDef} mapping

- 36. Custom models, simplified: TensorFlow provides a convenience method, simple_save(), that is sufficient for most cases ● SignatureDef: implicitly defined under the default signature key (serving_default)

- 37. Keras models ● Work just fine with the simple_save() method ● Save the model in the context of the Keras session ● Use the Keras Model instance as a convenient wrapper to define the SignatureDef

- 38. Using the Estimator API ● A trained Estimator has an export_savedmodel() method ● It expects a serving_input_fn: ○ The serving-time equivalent of input_fn ○ Returns a ServingInputReceiver object ○ Role: receive a request, parse it and send it to the model for inference ● Requires a feature specification to provide placeholders, parsed either from serialized Examples (parsing input receiver) or from raw tensors (raw input receiver)

- 39. Result: metagraph + variables (model graph + model weights) ● Model version: the root folder of the model files should be an integer that denotes the model version; TF Serving infers the model version from the folder name ● Inspect this folder with the SavedModel CLI tool (saved_model_cli)!
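Since TF Serving infers the version from the folder name, choosing which version to serve amounts to scanning the base path for integer-named folders. A minimal sketch, assuming TF Serving's default policy of serving only the latest version; the helper names are hypothetical and this is not TF Serving's own implementation.

```python
import os
import tempfile
from typing import List, Optional


def list_model_versions(model_base_path: str) -> List[int]:
    """Integer-named subdirectories of model_base_path, in ascending numeric order."""
    versions = []
    for name in os.listdir(model_base_path):
        # Only directories whose whole name is a number count as versions.
        if name.isdecimal() and os.path.isdir(os.path.join(model_base_path, name)):
            versions.append(int(name))
    return sorted(versions)


def latest_version(model_base_path: str) -> Optional[int]:
    """Highest version number, or None if no version folder exists yet."""
    versions = list_model_versions(model_base_path)
    return versions[-1] if versions else None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as base:
        for name in ("1", "2", "10", "tmp"):
            os.makedirs(os.path.join(base, name))
        print(list_model_versions(base), latest_version(base))
```

The comparison is numeric on purpose: folder `10` is newer than `2`, which a plain string sort would get wrong. Non-numeric folders such as `tmp` are ignored.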

- 40. Setting up a TF Server. Run the model server from the model's base directory, which contains the integer version folders:

```shell
tensorflow_model_server --model_base_path=$(pwd) --rest_api_port=9000 --model_name=MyModel
```

Log output:

```text
tf_serving/core/basic_manager] Successfully reserved resources to load servable {name: MyModel version: 1}
tf_serving/core/loader_harness.cc] Loading servable version {name: MyModel version: 1}
external/org_tensorflow/tensorflow/cc/saved_model/loader.cc] Loading MyModel with tags: { serve };
external/org_tensorflow/tensorflow/cc/saved_model/loader.cc] SavedModel load for tags { serve }; Status: success. Took 1048518 microseconds.
tf_serving/core/loader_harness.cc] Successfully loaded servable version {name: MyModel version: 1}
tf_serving/model_servers/main.cc] Exporting HTTP/REST API at:localhost:9000 ...
```

- 41. Submitting a request ● Via HTTP: using the Python requests module ● Via gRPC: by populating a request protobuf through the Python bindings and passing it to a PredictionService stub
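The HTTP route can be sketched with the standard library's `urllib.request` instead of `requests`; this swap is made only so the example has no third-party dependency, and `requests.post(url, json=body)` sends the same JSON. The URL pattern `/v1/models/<name>[/versions/<n>]:<verb>` and the `instances` (predict) / `examples` (classify, regress) body keys follow TF Serving's REST API. `build_rest_request` and `send` are hypothetical helper names; host, port and model name match the server started on slide 40.

```python
import json
import urllib.request
from typing import Any, Dict, List, Optional


def build_rest_request(host: str, port: int, model_name: str, rows: List[Any],
                       verb: str = "predict", version: Optional[int] = None,
                       signature_name: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a TF Serving REST request."""
    if verb not in ("predict", "classify", "regress"):
        raise ValueError(f"unsupported verb: {verb!r}")
    path = f"/v1/models/{model_name}"
    if version is not None:
        path += f"/versions/{version}"  # pin a version; otherwise the server's default is used
    # Predict takes "instances"; Classify and Regress take "examples".
    body: Dict[str, Any] = {"instances" if verb == "predict" else "examples": rows}
    if signature_name is not None:
        body["signature_name"] = signature_name
    return urllib.request.Request(
        f"http://{host}:{port}{path}:{verb}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 10.0) -> Dict[str, Any]:
    """POST the request and decode the JSON reply (e.g. {"predictions": [...]} for predict)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_rest_request("localhost", 9000, "MyModel", [[1.0, 2.0, 3.0]])
    print(req.full_url)
    # print(send(req))  # requires a running tensorflow_model_server
```

Separating request construction from sending keeps the URL and payload logic testable without a running server.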

- 42. Demo time

- 43. Getting started ● Docs: https://www.tensorflow.org/serving/ ● Source code: https://github.com/tensorflow/serving ● Installation: Dockerfiles are available, also for GPU ● End-to-end example blog post with tf.Keras: https://blog.ml6.eu/training-and-serving-ml-models-with-tf-keras-3d29b41e066c

- 44. KubeFlow

- 45. Perception: ML products are mostly about ML

- 46. Reality: ML requires DevOps, lots of it

- 47. You know what is really good at DevOps?

- 49. Kubernetes is ● An open-source system ● For managing containerized applications ● Across multiple hosts in a cluster

- 51. Can run anywhere

- 53. Advantages of containerized applications ● Runs anywhere ○ The OS is packaged with the container ● Consistent environment ○ Runs the same on a laptop as in the cloud ● Isolation ○ Every container has its own OS and filesystem ● Dev and Ops separation of concerns ○ Software development can be separated from deployment ● Microservices ○ Applications are broken into smaller, independent pieces that can be deployed and managed dynamically ○ Separate pieces can be developed independently

- 54. Orchestration across nodes in a cluster

- 55. Oh, you want to use ML on K8s? ● Containers ● Packaging ● Kubernetes service endpoints ● Persistent volumes ● Scaling ● Immutable deployments ● GPUs, Drivers & the GPL ● Cloud APIs ● DevOps ● ...

- 56. Kubeflow: build portable ML products using Kubernetes

- 57. What is Kubeflow? “The Kubeflow project is dedicated to making deployments of machine learning (ML) workflows on Kubernetes simple, portable and scalable. Our goal is not to recreate other services, but to provide a straightforward way to deploy best-of-breed open-source systems for ML to diverse infrastructures. Anywhere you are running Kubernetes, you should be able to run Kubeflow.”

- 58. Why use Kubeflow

- 60. Composability

- 63. Composability: integration of popular third-party tools ● JupyterHub ○ Experiment in Jupyter notebooks ● TensorFlow operator ○ Run TensorFlow code ● PyTorch operator ○ Run PyTorch code ● Caffe2 operator ○ Run Caffe2 code ● Katib ○ Hyperparameter tuning ● Extensible to more tools

- 64. Why use Kubeflow

- 65. Portability

- 70. Why use Kubeflow

- 71. Scalability ● Built-in accelerator support (GPU, TPU) ● Kubernetes-native ○ All the scaling advantages of Kubernetes ○ Integration with third-party tools like Istio

- 72. How to use Kubeflow. Three large parts: ● JupyterHub ● TF Jobs ● TF Serving

- 74. Kubeflow for Machine Learning

- 75. Workflow

- 77. Still in alpha (v0.2.2) ● Some parts and pieces are still missing ● Development at a rapid pace ● v1.0 planned for the end of the year
