MongoDB Performance Tuning

- 2. www.tothenew.com About Me Puneet Behl Associate Technical Lead TO THE NEW Digital [email protected] GitHub: https://github.com/puneetbehl/ Twitter: @puneetbhl LinkedIn: https://in.linkedin.com/in/puneetbhl

- 3. Agenda: ● Performance Issues ● Tuning Queries ● Tuning Architecture & System Configurations

- 4. Performance Issues: ● High Disk I/O Utilization ● Queries getting slow as data increases ● Timeout Issues

- 5. Tuning Queries: ● Database Profiler ● Explain

- 7. Database Profiler: ● Collects fine-grained data about MongoDB operations ● Writes all the data to the “system.profile” collection ● “system.profile” is a capped collection ● Helps identify which queries need to be tuned

- 8. Database Profiler - Profiling Levels: ● 0 - the profiler is off ● 1 - collects profiling data only for slow operations (> 100 ms by default) ● 2 - collects profiling data for all database operations

- 9. Database Profiler - Enable Profiling: ● Enable for all operations using “db.setProfilingLevel(2)” ● Specify the threshold for slow operations (in milliseconds) using “db.setProfilingLevel(0, 20)” ● Check the profiling level using “db.getProfilingLevel()”

- 10. Database Profiler - Enable Profiling for Entire Instance: “mongod --profile 1 --slowms 15”

- 11. Database Profiler - Enable Profiling in a Sharded Cluster: ● Enable profiling on each mongod instance in the cluster :-P

- 12. Database Profiler - Analyzing Output: db.system.profile.find( { millis: { $gt: 100 } } )

- 13. Database Profiler - Analyzing Output: db.system.profile.find().sort( { $natural: -1 } ).limit(20)

- 14. Database Profiler - Analyzing Output: db.system.profile.find( { "op": "query" } )

- 15. Database Profiler - Analyzing Output: db.system.profile.aggregate([ { $group : { _id : "$op", count : { $sum : 1 }, "max response time" : { $max : "$millis" }, "avg response time" : { $avg : "$millis" } } } ]); Result: { "result" : [ { "_id" : "command", "count" : 1, "max response time" : 0, "avg response time" : 0 }, { "_id" : "query", "count" : 12, "max response time" : 571, "avg response time" : 5 }, { "_id" : "update", "count" : 842, "max response time" : 111, "avg response time" : 40 }, { "_id" : "insert", "count" : 1633, "max response time" : 2, "avg response time" : 1 } ], "ok" : 1 }

- 16. Database Profiler - Analyzing Output: db.system.profile.aggregate([ { $group : { _id : "$ns", count : { $sum : 1 }, "max response time" : { $max : "$millis" }, "avg response time" : { $avg : "$millis" } } }, { $sort : { "max response time" : -1 } } ]); Result on next slide ...

- 17. Database Profiler - Analyzing Output: Result: { "result" : [ { "_id" : "game.players", "count" : 787, "max response time" : 111, "avg response time" : 0 }, { "_id" : "game.games", "count" : 1681, "max response time" : 71, "avg response time" : 60 }, { "_id" : "game.events", "count" : 841, "max response time" : 1, "avg response time" : 0 }, … ], "ok" : 1 }

- 18. Database Profiler - Analyzing Output: See more examples at: https://docs.mongodb.com/manual/tutorial/manage-the-database-profiler/#example-profiler-data-queries

- 19. Explain: ● “db.collection.explain()” ● Returns information on the query plan and execution stats ● Presents the query plan as a tree of stages ● Pass the query identified in the profiler output to explain

- 20. Explain - Sample Output: { "queryPlanner" : { "plannerVersion" : <int>, "namespace" : <string>, "indexFilterSet" : <boolean>, "parsedQuery" : { ... }, Continued on next slide ...

- 21. Explain - Sample Output: ... "winningPlan" : { "stage" : <STAGE1>, ... "inputStage" : { "stage" : <STAGE2>, ... "inputStage" : { ... } } }, "rejectedPlans" : [ <candidate plan 1>, ... ] }

- 23. Explain - executionStats: "executionStats" : { "executionSuccess" : <boolean>, "nReturned" : <int>, "executionTimeMillis" : <int>, "totalKeysExamined" : <int>, "totalDocsExamined" : <int>, "executionStages" : { // on next slide }, "allPlansExecution" : [ { <partial executionStats1> }, { <partial executionStats2> }, ... ] }

- 24. Explain - executionStats: "executionStats" : { ... "executionStages" : { "stage" : <STAGE1>, "nReturned" : <int>, "executionTimeMillisEstimate" : <int>, "works" : <int>, "advanced" : <int>, "needTime" : <int>, "needYield" : <int>, "isEOF" : <boolean>, ... continued on next slide

- 25. Explain - executionStats: "executionStages" : { ... "inputStage" : { "stage" : <STAGE2>, ... "nReturned" : <int>, "executionTimeMillisEstimate" : <int>, "keysExamined" : <int>, "docsExamined" : <int>, ... "inputStage" : { ... } } },

- 26. Examples: db.events.find( { "user_id" : 35991 }, { "_id" : 0, "user_id" : 1 } ).explain() { "cursor" : "BtreeCursor user_id_1", "isMultiKey" : false, "n" : 2, "nscannedObjects" : 2, "nscanned" : 2, "nscannedObjectsAllPlans" : 2, "nscannedAllPlans" : 2, "scanAndOrder" : false, "indexOnly" : true, "nYields" : 0, "nChunkSkips" : 0, "millis" : 0, "indexBounds" : { "user_id" : [ [ 35991, 35991 ] ] } } PS: This example is from v2.x of MongoDB

- 27. Tuning Architecture & System Configurations: Memory, network and disks are the system resources important to MongoDB

- 28. Tuning Linux for MongoDB: ● Setting Linux ulimits ● Deploy these limits by adding a file in “/etc/security/limits.d” (or appending to “/etc/security/limits.conf” if there is no “limits.d”) ● The following is an example file, “/etc/security/limits.d/mongod.conf”, for the “mongod” Linux user: mongod soft nproc 64000 mongod hard nproc 64000 mongod soft nofile 64000 mongod hard nofile 64000

- 29. Tuning Linux for MongoDB: ● Setting Swappiness ● Update /etc/sysctl.conf to set vm.swappiness to 1 or 10, e.g. vm.swappiness = 1

- 30. Tuning Linux for MongoDB: ● NUMA (Non-Uniform Memory Access) Architecture ● Disable via the on/off switch in the BIOS ● Or start mongod with interleaved memory via: numactl --interleave=all mongod <options here>

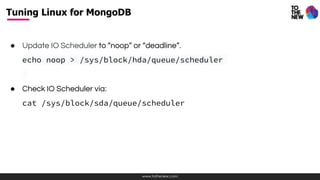

- 31. Tuning Linux for MongoDB: ● Update the IO scheduler to “noop” or “deadline”, e.g.: echo noop > /sys/block/sda/queue/scheduler ● Check the IO scheduler via: cat /sys/block/sda/queue/scheduler

- 32. Tuning Linux for MongoDB: ● Update “Read-Ahead” settings via: sudo blockdev --setra <sectors> /dev/sda ● Check current RA value via: sudo blockdev --getra /dev/sda

- 33. Tuning Linux for MongoDB: ● File System options ● Recommended to use “ext4” or “XFS” ● Disable access-time updates by adding the flag “noatime” to the filesystem options field in the file “/etc/fstab” (4th field) for the disk serving MongoDB data: /dev/mapper/data-mongodb /var/lib/mongo ext4 defaults,noatime 0 0

Editor's Notes

- #7: MongoDB provides a database profiler that shows performance characteristics of each operation against the database. Use the profiler to locate any queries or write operations that are running slow. You can use this information, for example, to determine what indexes to create.

- #9: 0 - the profiler is off, does not collect any data. mongod always writes operations longer than the slowOpThresholdMs threshold to its log. This is the default profiler level. 1 - collects profiling data for slow operations only. By default slow operations are those slower than 100 milliseconds. You can modify the threshold for “slow” operations with the slowOpThresholdMs runtime option or the setParameter command. See the Specify the Threshold for Slow Operations section for more information. 2 - collects profiling data for all database operations.
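The three levels can be read as a simple decision rule. The following is a minimal sketch in plain JavaScript, not a MongoDB API: `wouldBeProfiled` is a hypothetical helper, and it deliberately ignores anything beyond the level and the slow-operation threshold described in the note above.

```javascript
// Simplified model of the profiling levels (hypothetical helper, not part of MongoDB).
// level:    0, 1 or 2, as described in the note above
// slowms:   threshold in milliseconds for "slow" operations (100 by default)
// opMillis: how long the operation took
function wouldBeProfiled(level, slowms, opMillis) {
  switch (level) {
    case 0:
      return false;             // profiler off: nothing is written to system.profile
    case 1:
      return opMillis > slowms; // only operations slower than the threshold
    case 2:
      return true;              // every operation
    default:
      throw new RangeError(`unknown profiling level: ${level}`);
  }
}

console.log(wouldBeProfiled(1, 100, 250)); // true
console.log(wouldBeProfiled(1, 100, 40));  // false
```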

- #10: You can enable database profiling from the mongo shell or through a driver using the profile command. This section will describe how to do so from the mongo shell. See your driver documentation if you want to control the profiler from within your application. When you enable profiling, you also set the profiling level. The profiler records data in the system.profile collection. MongoDB creates the system.profile collection in a database after you enable profiling for that database. To enable profiling and set the profiling level, use the db.setProfilingLevel() helper in the mongo shell, passing the profiling level as a parameter. For example, to enable profiling for all database operations, consider the following operation in the mongo shell: db.setProfilingLevel(2) The shell returns a document showing the previous level of profiling. The "ok" : 1 key-value pair indicates the operation succeeded: { "was" : 0, "slowms" : 100, "ok" : 1 } To verify the new setting, see the Check Profiling Level section.
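For the driver route mentioned above, the `profile` command takes the level and, optionally, a `slowms` threshold. A small sketch of building that command document follows; `buildProfileCommand` is a hypothetical helper, and its validation rules are this sketch's own choice rather than something the server requires.

```javascript
// Build the document for the `profile` database command.
// `buildProfileCommand` is a hypothetical helper for illustration only.
function buildProfileCommand(level, slowms) {
  if (![0, 1, 2].includes(level)) {
    throw new RangeError(`profiling level must be 0, 1 or 2, got ${level}`);
  }
  const command = { profile: level };
  if (slowms !== undefined) {
    if (!Number.isFinite(slowms)) {
      throw new TypeError("slowms must be a finite number of milliseconds");
    }
    command.slowms = slowms; // threshold for "slow" operations
  }
  return command;
}

console.log(buildProfileCommand(1, 20)); // { profile: 1, slowms: 20 }
```

In the mongo shell the resulting document could be passed to `db.runCommand(...)`; most drivers expose an equivalent way to run a raw command.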

- #11: For development purposes in testing environments, you can enable database profiling for an entire mongod instance. The profiling level applies to all databases provided by the mongod instance. To enable profiling for a mongod instance, pass the following parameters to mongod at startup or within the configuration file: mongod --profile 1 --slowms 15 This sets the profiling level to 1, which collects profiling data for slow operations only, and defines slow operations as those that last longer than 15 milliseconds.

- #13: This returns all operations that lasted longer than 100 milliseconds. Ensure that the value specified here (100, in this example) is above the slowOpThresholdMs threshold.

- #15: This will list only queries, but the problem is that it returns a lot of data.

- #16: Use aggregation to differentiate operations: contrast how many of an item vs. response time; contrast average response time vs. max; prioritize by op type.
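To make the grouping concrete, here is a plain-JavaScript sketch of what the `$group` stage on the slides computes (count, max and average of `millis` per key). `summarizeBy` is a hypothetical helper and the sample documents are invented for illustration; real profiler documents carry many more fields.

```javascript
// Group profiler-like documents by a field and compute count / max / avg of `millis`.
// Mirrors the $group stage from the slides; not a replacement for running it in MongoDB.
function summarizeBy(profileDocs, field) {
  const groups = new Map();
  for (const doc of profileDocs) {
    const key = doc[field];
    const g = groups.get(key) || { count: 0, max: -Infinity, total: 0 };
    g.count += 1;
    g.max = Math.max(g.max, doc.millis);
    g.total += doc.millis;
    groups.set(key, g);
  }
  return [...groups].map(([key, g]) => ({
    _id: key,
    count: g.count,
    "max response time": g.max,
    "avg response time": g.total / g.count,
  }));
}

// Hypothetical sample data, not taken from the slides.
const sample = [
  { op: "query", ns: "game.players", millis: 571 },
  { op: "query", ns: "game.players", millis: 3 },
  { op: "update", ns: "game.games", millis: 40 },
];
console.log(summarizeBy(sample, "op")); // group by operation type
console.log(summarizeBy(sample, "ns")); // group by namespace
```

A large gap between the maximum and the average, as in the `query` group here, points at a few outliers rather than a uniformly slow operation type.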

- #17: Use aggregation to differentiate collections. Keep this data over time! Contrast how many of an item vs. response time, and average response time vs. max.

- #18: Keep this data over time! Contrast how many of an item vs. response time, and average response time vs. max.

- #21: explain.queryPlanner Contains information on the selection of the query plan by the query optimizer. explain.queryPlanner.namespace A string that specifies the namespace (i.e., <database>.<collection>) against which the query is run. explain.queryPlanner.indexFilterSet A boolean that specifies whether MongoDB applied an index filter for the query shape.

- #22: explain.queryPlanner.winningPlan A document that details the plan selected by the query optimizer. MongoDB presents the plan as a tree of stages; i.e. a stage can have an inputStage or, if the stage has multiple child stages, inputStages. explain.queryPlanner.winningPlan.stage A string that denotes the name of the stage. Each stage consists of information specific to the stage. For instance, an IXSCAN stage will include the index bounds along with other data specific to the index scan. If a stage has a child stage or multiple child stages, the stage will have an inputStage or inputStages. explain.queryPlanner.winningPlan.inputStage A document that describes the child stage, which provides the documents or index keys to its parent. The field is present if the parent stage has only one child. explain.queryPlanner.winningPlan.inputStages An array of documents describing the child stages. Child stages provide the documents or index keys to the parent stage. The field is present if the parent stage has multiple child nodes. For example, stages for $or expressions or index intersection consume input from multiple sources. explain.queryPlanner.rejectedPlans Array of candidate plans considered and rejected by the query optimizer. The array can be empty if there were no other candidate plans. For sharded collections, the winning plan includes the shards array which contains the plan information for each accessed shard. For details, see Sharded Collection.
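Because the winning plan is a tree linked through `inputStage` and `inputStages`, a short recursive walk is enough to list its stages. The sketch below is plain JavaScript; `collectStages` is a hypothetical helper and the sample plan is a hand-written, heavily trimmed stand-in for real explain output.

```javascript
// Walk a winningPlan tree and return stage names, parent first.
// Handles both the single-child (inputStage) and multi-child (inputStages) forms.
function collectStages(plan) {
  if (!plan) return [];
  const children = plan.inputStages || (plan.inputStage ? [plan.inputStage] : []);
  return [plan.stage, ...children.flatMap((child) => collectStages(child))];
}

// Hypothetical, trimmed-down plan for an indexed query.
const winningPlan = {
  stage: "FETCH",
  inputStage: { stage: "IXSCAN", indexName: "user_id_1" },
};

const stages = collectStages(winningPlan);
console.log(stages);                      // [ 'FETCH', 'IXSCAN' ]
console.log(stages.includes("COLLSCAN")); // false: no full collection scan in this plan
```

Checking the list for `COLLSCAN` is a quick way to spot plans that scan the whole collection instead of using an index.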

- #23: Contains statistics that describe the completed query execution for the winning plan. For write operations, completed query execution refers to the modifications that would be performed, but does not apply the modifications to the database.

- #24: explain.executionStats.nReturned Number of documents that match the query condition. nReturned corresponds to the n field returned by cursor.explain() in earlier versions of MongoDB. explain.executionStats.executionTimeMillis Total time in milliseconds required for query plan selection and query execution. executionTimeMillis corresponds to the millis field returned by cursor.explain() in earlier versions of MongoDB. explain.executionStats.totalKeysExamined Number of index entries scanned. totalKeysExamined corresponds to the nscanned field returned by cursor.explain() in earlier versions of MongoDB. explain.executionStats.totalDocsExamined Number of documents scanned. totalDocsExamined corresponds to the nscannedObjects field returned by cursor.explain() in earlier versions of MongoDB.
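One common way to read these counters is to compare how much was examined with how much was returned. The following plain-JavaScript sketch assumes an object shaped like `executionStats`; `scanEfficiency` and its field names are hypothetical, and the ratios are a rule of thumb rather than an official metric.

```javascript
// Derive simple ratios from executionStats-like counters.
// A ratio close to 1 suggests the index is selective; a large ratio suggests wasted scanning.
function scanEfficiency(stats) {
  const { nReturned, totalKeysExamined, totalDocsExamined } = stats;
  return {
    docsPerResult: nReturned > 0 ? totalDocsExamined / nReturned : null,
    keysPerResult: nReturned > 0 ? totalKeysExamined / nReturned : null,
    // Keys were examined but no documents were fetched: the index alone likely answered the query.
    answeredFromIndexOnly: totalDocsExamined === 0 && totalKeysExamined > 0,
  };
}

// Hypothetical numbers for illustration.
console.log(scanEfficiency({ nReturned: 2, totalKeysExamined: 2, totalDocsExamined: 0 }));
console.log(scanEfficiency({ nReturned: 10, totalKeysExamined: 0, totalDocsExamined: 5000 }));
```

The second call models an unindexed query: 500 documents examined per document returned, which is usually a candidate for a new index.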

- #27: Fastest query

- #28: Working with databases, we often focus on the queries, patterns and tunings that happen inside the database process itself. This means we sometimes forget that the operating system below it is the life-support of the database, the air that it breathes so-to-speak. Of course, a highly-scalable database such as MongoDB runs fine on general-purpose OS defaults without complaints, but the efficiency can be equivalent to running in regular shoes instead of sleek runners. At small scale, you might not notice the lost efficiency, but at large scale (especially when data exceeds RAM) improved tunings equate to fewer servers and lower operational costs. For all use cases and scales, good OS tunings also provide some improvement in response times and remove extra “what if…?” questions when troubleshooting.

- #29: Talk about linux having some system resource constraints on processes, file handler a

- #30: “Swappiness” is a Linux kernel setting, ranging from 0 to 100, that influences the behavior of the Virtual Memory manager when it needs to allocate swap. A setting of “0” tells the kernel to swap only to avoid out-of-memory problems. A setting of 100 tells it to swap aggressively to disk. The Linux default is usually 60, which is not ideal for database usage. https://www.percona.com/blog/2014/04/28/oom-relation-vm-swappiness0-new-kernel/

- #31: Non-Uniform Memory Access is a recent memory architecture that takes into account the locality of caches and CPUs for lower latency. Unfortunately, MongoDB is not “NUMA-aware” and leaving NUMA set up with its default behavior can cause severe memory imbalance. To check mongod’s NUMA setting: sudo numastat -p $(pidof mongod) Sample output: Per-node process memory usage (in MBs) for PID 7516 (mongod) Node 0 Total --------------- --------------- Huge 0.00 0.00 Heap 28.53 28.53 Stack 0.20 0.20 Private 7.55 7.55 ---------------- --------------- --------------- Total 36.29 36.29

- #32: The IO scheduler is an algorithm the kernel uses to commit reads and writes to disk. By default most Linux installs use the CFQ (Completely Fair Queuing) scheduler. This is designed to work well for many general use cases, but with few latency guarantees. Two other popular schedulers are “deadline” and “noop”. Deadline excels at latency-sensitive use cases (like databases) and noop is closer to no scheduling at all.

- #33: Read-ahead is a per-block-device performance tuning in Linux that causes data ahead of a requested block on disk to be read and then cached into the filesystem cache. Read-ahead assumes that there is a sequential read pattern and something will benefit from those extra blocks being cached. MongoDB tends to have very random disk patterns and often does not benefit from the default read-ahead setting, wasting memory that could be used for more hot data. Most Linux systems have a default setting of 128 KB/256 sectors (128 KB = 256 x 512-byte sectors). This means if MongoDB fetches a 64 KB document from disk, 128 KB of filesystem cache is used and maybe the extra 64 KB is never accessed later, wasting memory.
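The arithmetic in this note can be checked with a few lines of plain JavaScript. This is only a sketch of the sector-to-size conversion, assuming 512-byte sectors as stated above; `readAheadKB` and `unusedCacheKB` are hypothetical helper names.

```javascript
// Read-ahead is expressed in 512-byte sectors (as reported by `blockdev --getra`).
const SECTOR_BYTES = 512;

// Convert a read-ahead value in sectors to kilobytes (1 KB = 1024 bytes here).
function readAheadKB(sectors) {
  return (sectors * SECTOR_BYTES) / 1024;
}

// Cache filled by read-ahead beyond the document that was actually requested.
function unusedCacheKB(sectors, documentKB) {
  return Math.max(0, readAheadKB(sectors) - documentKB);
}

console.log(readAheadKB(256));       // 128
console.log(unusedCacheKB(256, 64)); // 64
```

With the default of 256 sectors, a 64 KB document read pulls in 128 KB, leaving 64 KB of cache that may never be touched, which matches the example in the note.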

- #34: It is recommended that MongoDB uses only the ext4 or XFS filesystems for on-disk database data. ext3 should be avoided due to its poor pre-allocation performance. If you’re using WiredTiger (MongoDB 3.0+) as a storage engine, it is strongly advised that you ONLY use XFS due to serious stability issues on ext4. Each time you read a file, the filesystems perform an access-time metadata update by default. However, MongoDB (and most applications) does not use this access-time information. This means you can disable access-time updates on MongoDB’s data volume. A small amount of disk IO activity that the access-time updates cause stops in this case.
