10 Key Challenges for AI within the EU Data Protection Framework.pdf

- 1. 10 Key Challenges For AI Within The EU Data Protection Framework. Dr. Valerie Lyons, Chief Operations Officer and Director, BH Consulting, Ireland. Twitter/X: @priv_I_see. Session ID: IAIS-R05. #RSAC

- 2. Disclaimer: Presentations are intended for educational purposes only and do not replace independent professional judgment. Statements of fact and opinions expressed are those of the presenters individually and, unless expressly stated to the contrary, are not the opinion or position of RSA Conference or any other co-sponsors. RSA Conference does not endorse or approve, and assumes no responsibility for, the content, accuracy or completeness of the information presented. Attendees should note that sessions may be audio- or video-recorded and may be published in various media, including print, audio and video formats without further notice. The presentation template and any media capture are subject to copyright protection. © 2024 RSA Conference LLC or its affiliates. The RSA Conference logo and other trademarks are proprietary. All rights reserved.

- 3. Educate + Learn = Apply • Anticipate and address the key privacy challenges that your organization will face if leveraging, distributing or developing AI tools. • Learn the key challenges presented at the intersection of AI and European data protection. • Distil and communicate, in an easily digestible way, the ten most complex challenges for AI and GDPR.

- 4. Personal Data vs. Personally Identifiable Information (PII) • Data Subject vs. Individual/Consumer • Data Protection vs. Privacy

- 6. How Long Has AI Been Around?

- 7. Image source: https://www.economist.com/the-economist-explains/2023/08/24/how-europes-new-digital-law-will-change-the-internet

- 8. Ten Key Challenges of Adapting AI into the EU Data Protection Framework: 1. Approaches & Terminology 2. Accountability & Governance 3. Data Subject Rights 4. Data Minimization 5. Legal Basis for Processing 6. Inferential Data 7. Transparency 8. Accuracy 9. Bias 10. Responsibility for Assessment

- 10. 1. Approaches and Terminology

- 11. EU AI Act and EU GDPR • EU AI Act: covers all AI systems (unacceptable risk, high risk, limited/no risk). Allows new uses of special category data for certain purposes, such as detecting and correcting bias. Penalties of up to 7% of worldwide annual turnover or €35 million for the most significant infringements. • EU GDPR: covers all processing of personal data, including sensitive personal data. Restricts the use and collection of special category data. Penalties of up to 4% of worldwide annual turnover or €20 million for the most significant infringements. • Overlap: AI systems processing personal data.
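
The two penalty ceilings on this slide can be compared with a little arithmetic. The sketch below assumes the fixed amount and the percentage of total worldwide annual turnover combine on a "whichever is higher" basis, which is how both regulations phrase their top-tier fines for undertakings. It ignores any special cases, and the function name `max_fine_eur` and the example turnover are illustrative rather than taken from the presentation.

```python
from decimal import Decimal

# Top-tier ceilings quoted on the slide: (fixed amount in EUR, share of turnover).
CEILINGS = {
    "AI Act": (Decimal("35000000"), Decimal("0.07")),
    "GDPR": (Decimal("20000000"), Decimal("0.04")),
}


def max_fine_eur(regime: str, annual_turnover_eur: Decimal) -> Decimal:
    """Upper bound of the fine: the higher of the fixed amount and the
    turnover-based amount (assumed 'whichever is higher' rule)."""
    fixed, share = CEILINGS[regime]
    return max(fixed, share * annual_turnover_eur)


if __name__ == "__main__":
    turnover = Decimal("1000000000")  # hypothetical EUR 1 billion turnover
    for regime in CEILINGS:
        print(regime, max_fine_eur(regime, turnover))
```

For smaller organizations the fixed amount dominates: with a hypothetical turnover of €100 million, 4% is only €4 million, so the GDPR ceiling stays at €20 million.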

- 12. Risk Approaches: A conformity assessment (CA) is a legal obligation designed to foster accountability, that is, the process of verifying compliance with certain requirements (set out in Chapter 2 of the AI Act). Image source: Grabowicz, Perello, and Zick (2023), “Towards an AI Accountability Policy”

- 14. EXTRATERRITORIAL SCOPE OF EU REGULATION

- 15. 2. Accountability & Governance

- 17. DATA SUBJECTS Image adapted from John Cole, NC Newsline

- 18. HOW TO ADDRESS THE CHALLENGE? • The European Data Protection Supervisor (EDPS) has noted the importance of international standards/tools, highlighting ISO and NIST. • Follow responsible use guidelines and ethical codes for AI development. • Develop employee usage policies, and assess compliance. • Include human rights, ethics, and fairness in GDPR data protection impact assessments (DPIAs).

- 19. 3. Data Subject Rights

- 21. What happens inside the box? (Processing/Algorithms) - Source? - Am I informed? - Can I object/challenge? - Is it legal/compliant? What data comes out of the box? - Am I informed? - Can I object/challenge? - Can I request deletion? - Can I request a change? - Can I request access? - Is it legal/compliant? - Am I informed? - Can I object/challenge? - Is it legal/compliant? - Is it profiling/predicting/inferring? What data goes into the box?

- 22. HOW TO ADDRESS THE CHALLENGE? What happens inside the box? (Processing/Algorithms) - Publish privacy notices - Establish a legal basis - Implement compliant basis What data comes out of the box? - Easy data removal - Use a differential privacy model (where personal data can be deleted without impacting data value) - Upgrade the SARs process? - Conduct a DPIA - GDPR Key Principles What data goes into the box?
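
As one illustration of the differential privacy item on this slide, the sketch below applies the Laplace mechanism to a simple counting query, so that the published count changes very little whether or not any one person is in the dataset. The function name `dp_count`, the `epsilon` value and the sample ages are hypothetical, and a production system would more likely rely on a vetted differential privacy library than on hand-rolled noise.

```python
import random


def dp_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Differentially private count using the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    # The difference of two independent Exponential(rate=epsilon) draws is
    # Laplace-distributed with mean 0 and scale 1 / epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


if __name__ == "__main__":
    ages = [25, 41, 17, 68, 33]  # hypothetical data
    rng = random.Random()
    # Noisy answer to "how many people are 30 or older?"
    print(dp_count(ages, lambda age: age >= 30, epsilon=1.0, rng=rng))
```

A smaller `epsilon` adds more noise and gives stronger privacy; a larger one gives a more accurate but less private answer.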

- 23. 4. Data Minimization/Purpose Limitation

- 24. The AI Act explicitly provides that GDPR principles apply to training, validation, and testing datasets of AI systems (Recital (44a) AI Act). “it is nearly impossible to perform any meaningful purpose limitation (or data minimization) for data used to train general AI systems.” (Wolff, Lehr, & Yoo, 2023) “Developers who rely on very broad purposes (e.g. ‘processing data for the purpose of developing a generative AI model’) are likely to have difficulties …”

- 26. HOW TO ADDRESS THE CHALLENGE? • Use pseudonymization, anonymization, or differential privacy techniques. • Be upfront: explain your purposes for using AI. [Explore exceptions under Article 14 GDPR if applicable]. • If using AI to make solely automated decisions about people with legal/significant effects, tell them what information you use, why it is relevant and the likely impact. • Consider using just-in-time notices/dashboards, which let users control further uses of their personal data.
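
The pseudonymization item above can be sketched with a keyed hash. The example replaces a direct identifier with an HMAC-SHA256 digest so that records remain linkable without exposing the identifier itself. The function names, the field name `email` and the key are hypothetical. Note that pseudonymized data is still personal data under the GDPR, and the key has to be stored separately from the dataset.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256, hex)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, field: str, secret_key: bytes) -> dict:
    """Return a copy of the record with one identifying field pseudonymized."""
    result = dict(record)
    result[field] = pseudonymize(str(record[field]), secret_key)
    return result


if __name__ == "__main__":
    key = b"replace-with-a-key-from-a-secrets-manager"  # hypothetical key
    row = {"email": "alice@example.com", "age_band": "30-39"}
    print(pseudonymize_record(row, "email", key))
```

The same identifier always maps to the same pseudonym under one key, which preserves joins across tables; rotating or destroying the key breaks that link.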

- 27. 5. Legal Basis For Processing (relating to Purpose Limitation)

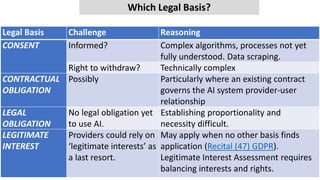

- 28. Which Legal Basis?

| Legal Basis | Challenge | Reasoning |
| --- | --- | --- |
| CONSENT | Informed? | Complex algorithms, processes not yet fully understood. Data scraping. |
| CONSENT | Right to withdraw? | Technically complex. |
| CONTRACTUAL OBLIGATION | Possibly | Particularly where an existing contract governs the AI system provider-user relationship. |
| LEGAL OBLIGATION | No legal obligation yet to use AI. | Establishing proportionality and necessity is difficult. |
| LEGITIMATE INTEREST | Providers could rely on ‘legitimate interests’ as a last resort. | May apply when no other basis finds application (Recital (47) GDPR). A Legitimate Interest Assessment requires balancing interests and rights. |

- 29. The ICO has provided helpful guidance for data scraping exemptions:

- 32. • In August 2022, the CJEU issued a ruling in a Lithuanian case concerning national anti-corruption legislation. The CJEU assessed whether personal data that can indirectly reveal sexual orientation falls under the protections for “special category data”, and held that processing personal data liable to disclose sexual orientation constitutes processing of special category data. • Norway’s DPA fined Grindr for breaches of GDPR, reasoning that using Grindr inferentially indicates a person’s sexual orientation and that this data therefore constitutes special category data. • Spain’s DPA disagreed, finding that Grindr did not process special category data.

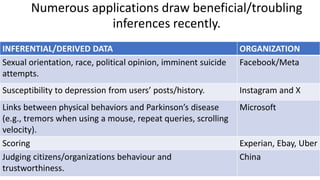

- 33. Numerous applications have recently drawn beneficial or troubling inferences.

| Inferential/Derived Data | Organization |
| --- | --- |
| Sexual orientation, race, political opinion, imminent suicide attempts. | Facebook/Meta |
| Susceptibility to depression from users’ posts/history. | Instagram and X |
| Links between physical behaviors and Parkinson’s disease (e.g., tremors when using a mouse, repeat queries, scrolling velocity). | Microsoft |
| Scoring | Experian, eBay, Uber |
| Judging citizens’/organizations’ behaviour and trustworthiness. | China |

- 34. The main vehicle that privacy laws provide for addressing predictions is the individual’s right of access and, consequently, the right to correction. This does not work for predictions: they pertain to events that have not yet occurred, so they cannot yet be shown to be accurate or inaccurate.

- 36. 7. Transparency

- 37. Transparency: “To fully comprehend the decision-making processes of these algorithms, it is necessary to consider the collective data of all individuals included in the algorithm's dataset. But this data can’t be provided to individuals without violating the privacy of the people in the dataset; nor is it feasible for individuals to analyze this enormous magnitude of data.” Professor Daniel Solove, AI and Privacy, 2024

- 38. HOW TO ADDRESS THE CHALLENGE? • Collect and process data in an ‘explanation aware’ manner. • Build AI systems to ensure that relevant information for a range of explanation types can be extracted. • Implement robust data governance that limits access to specific authorized persons. • Have a range of specific privacy notices and policies. • Use a differential privacy model where personal data can be deleted without impacting data value.

- 39. 8. Accuracy (including ‘truth’)

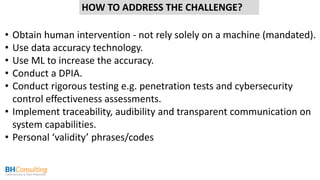

- 44. HOW TO ADDRESS THE CHALLENGE? • Obtain human intervention; do not rely solely on a machine (mandated). • Use data accuracy technology. • Use ML to increase accuracy. • Conduct a DPIA. • Conduct rigorous testing, e.g. penetration tests and cybersecurity control effectiveness assessments. • Implement traceability, auditability and transparent communication on system capabilities. • Personal ‘validity’ phrases/codes.
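
One way to put the human intervention item into practice is a routing rule placed in front of the model's output. The sketch below is an assumption about how such a gate might look, not a method described in the presentation: the `Decision` fields, the `route` function and the 0.9 threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    subject_id: str
    outcome: str
    confidence: float         # model's own score in [0, 1]
    significant_effect: bool  # legal or similarly significant effect on the person


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Send a decision to a human reviewer when it significantly affects the
    person or when the model is not confident enough; otherwise automate it."""
    if decision.significant_effect or decision.confidence < min_confidence:
        return "human_review"
    return "automated"


if __name__ == "__main__":
    loan = Decision("subject-1", "reject", confidence=0.97, significant_effect=True)
    spam = Decision("subject-2", "flag", confidence=0.95, significant_effect=False)
    print(route(loan), route(spam))
```

The design choice here is that a significant effect always triggers review regardless of confidence, so a highly confident model cannot bypass the human check.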

- 45. 9. Bias (Fairness). Professor Sandra Mayson: “a racially unequal past will produce racially unequal outputs.”

- 48. Image source: https://twitter.com/KeithWoodsYT/

- 49. HOW TO ADDRESS THE CHALLENGE? 1. Diverse and Representative Data. 2. Bias Detection, Correction and Mitigation. 3. Transparency and Explainability. 4. Diverse Development Teams. 5. Regulations and Standards. 6. Ethical Frameworks and Impact Assessments. 7. User Feedback and Accountability. 8. Education and Awareness.
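
Bias detection (item 2 above) often starts with a simple group-level metric. The sketch below computes the demographic parity difference, the gap between the highest and lowest rate of favourable outcomes across groups. This is one common fairness metric among several and is not necessarily the one the presenter has in mind; the function names and the sample records are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, outcome), where outcome is 1 for a
    favourable decision and 0 otherwise. Returns the favourable rate per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(records) -> float:
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical decisions: group A is approved 3 times out of 4, group B once.
    data = [("A", 1), ("A", 1), ("A", 1), ("A", 0),
            ("B", 1), ("B", 0), ("B", 0), ("B", 0)]
    print(selection_rates(data))
    print(demographic_parity_difference(data))
```

A large gap does not prove unlawful discrimination on its own, but it flags where correction and mitigation work should begin.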

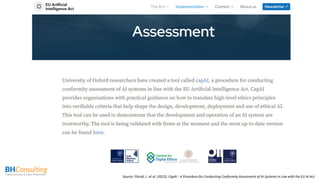

- 52. Source: Floridi, L. et al. (2022). CapAI - A Procedure for Conducting Conformity Assessment of AI Systems in Line with the EU AI Act

- 53. Apply What You Have Learned Today
  - Next week you should:
    - Examine the interaction between AI governance and privacy/data protection governance in your organization.
    - Examine your current DPIA templates and extend them to include AI-related risks as outlined in this presentation.
  - In the first three months following this presentation you should:
    - Understand your use of AI (Deployer/User/Distributor/Developer etc.) and how the AI Act applies.
    - Develop an AI policy that reflects that use and plugs the gaps not covered by the privacy policy already in place.
    - Define AI governance controls appropriate to your AI use/deployment/distribution/development.
  - Within six months you should:
    - Have established AI governance as part of the formal risk register, along with privacy and cybersecurity.
    - Have established awareness and a culture of responsible and ethical AI use.
