QMC Opening Workshop, High Accuracy Algorithms for Interpolating and Integrating Multivariate Functions Defined by Sparse Samples in High Dimensions - James (Mac) Hyman, Aug 29, 2017

- 1. High Accuracy Algorithms for Interpolating and Integrating Multivariate Functions Defined by Sparse Samples in High Dimensions. Mac Hyman, Tulane University. Joint work with Jeremy Dewar, Lin Li, and Mu Tian (SUNY). SAMSI 2017, August 29, 2017. Slide footer: Hyman, Dewar, Tian, and Li, Scattered Data Integration via Detrending, SAMSI 2017.

- 2. The Problem: Accurate integration of multivariate functions. Goal: To estimate the integral $I = \int_\Omega f(x)\,dx$, where $f(x) : \Omega \to \mathbb{R}$, $\Omega \subset \mathbb{R}^d$. We are focused on situations where:
  - the effective dimension is relatively small, $x \in \mathbb{R}^d$, $d < 20$;
  - function evaluations (samples) $f(x)$ are (very) expensive, such as a large-scale simulation, and additional samples may not be obtainable;
  - little a priori information about $f(x)$ is available; and
  - we might not have control over the sample locations, which can be far from a desired distribution (e.g. missing data).

- 3. The Problem: Accurate integration of multivariate functions (continued). A SAMSI working group is being formed for Sampling and Analysis in High Dimensions When Samples Are Expensive.

- 4. The Problem: Accurate integration of multivariate functions. Standard MC integration methods approximate the integral of $f(x)$ by the mean value of $f$ at the sample points. If $\{x_i\} \overset{\text{i.i.d.}}{\sim} \mathrm{Uniform}(\Omega = [0,1]^d)$, then the law of large numbers implies

  $$\hat{I}_n(f) := \frac{1}{n}\sum_{i=1}^{n} f(X_i) \;\xrightarrow{\,n\to\infty\,}\; I(f) := E[f(X)] = \int_\Omega f(x)\,dx.$$

  - i.i.d. Pseudo Monte Carlo (PMC): $\|e_n\| = O\!\left(\frac{1}{\sqrt{n}}\,\sigma(f)\right)$, where $\sigma(f) = \left[\int_\Omega (f(x) - I(f))^2\,dx\right]^{1/2}$.
  - Low Discrepancy Sample (LDS) Quasi Monte Carlo (QMC): $\|e_n\| \le O\!\left(\frac{1}{n}\,V[f]\,(\log n)^{d-1}\right)$, where $V[f]$ measures the variation in $f$.

- 5. Even for small samples, usually the QMC error < PMC error. Figure: distributions of the error $\hat{I}(f) - I(f)$ for $I(f) = \int_{[0,1]^6} \sum_i \cos(i x_i)\,dx$, for PMC (top) and LDS/QMC (bottom), using 200 runs, 6D, 600 points. The x-axis bounds are 10 times smaller for the QMC samples.
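
The comparison on this slide can be tried on a small scale. The following is a minimal sketch, not the authors' code: it assumes a Halton sequence as the low-discrepancy sample (the slides do not say which LDS was used), and the helper names `radical_inverse`, `halton_point`, and `sample_mean` are hypothetical. It performs a single run rather than the 200 runs behind the figure.

```python
import math
import random

PRIMES = [2, 3, 5, 7, 11, 13]  # one Halton base per coordinate (supports d <= 6)

def radical_inverse(index, base):
    """Reflect the base-`base` digits of `index` about the radix point."""
    result, frac = 0.0, 1.0 / base
    while index > 0:
        result += (index % base) * frac
        index //= base
        frac /= base
    return result

def halton_point(index, dim):
    """The `index`-th Halton point in [0, 1)^dim (assumed LDS, see lead-in)."""
    return [radical_inverse(index, PRIMES[j]) for j in range(dim)]

def f(x):
    """Test integrand from the slide: sum_i cos(i * x_i), i = 1..d."""
    return sum(math.cos((i + 1) * xi) for i, xi in enumerate(x))

def sample_mean(points):
    """Equal-weight estimate of the integral over the unit cube."""
    return sum(f(x) for x in points) / len(points)

d, n = 6, 600
# Each term integrates separately: int_0^1 cos(i x) dx = sin(i) / i.
exact = sum(math.sin(i) / i for i in range(1, d + 1))

rng = random.Random(1)  # fixed seed so the run is repeatable
pmc_points = [[rng.random() for _ in range(d)] for _ in range(n)]
qmc_points = [halton_point(i, d) for i in range(1, n + 1)]  # skip index 0

pmc_error = sample_mean(pmc_points) - exact
qmc_error = sample_mean(qmc_points) - exact
print(f"PMC error: {pmc_error:+.5f}")
print(f"QMC error: {qmc_error:+.5f}")
```

Repeating the PMC run with different seeds (and randomizing the Halton points, for example with a random shift) would give error distributions comparable in spirit to the figure.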

- 6. Detrending before Integrating. Detrending first approximates the underlying function with an easily integrated surrogate model (covariate). The integral is then estimated by the exact integral of the surrogate plus an approximation of the integral of the residual. For example, $f(x)$ can be approximated by a linear combination of simple basis functions, such as Legendre polynomials, $p(x) = \sum_{i=1}^{t} \beta_i \psi_i(x)$, which can be integrated exactly, and define

  $$I(f) = \int_\Omega f(x)\,dx \qquad (1)$$

  $$\phantom{I(f)} = \int_\Omega p(x)\,dx + \int_\Omega [f(x) - p(x)]\,dx. \qquad (2)$$

- 7. Define the surrogate model with least-squares fit. We represent our global surrogate model (covariate) $p(x)$ as a sum of orthogonal basis functions, $p(x) = \sum_{i=1}^{t} \beta_i \psi_i(x)$. For example, $\psi_i(x)$ could be the tensor product of Legendre polynomials, and the $\beta_i$ are defined by the solution of the least squares problem

  $$p(x) = \operatorname*{argmin}_{p \in P(K)} \sum_{s=1}^{n} \left[p(x_s) - f(x_s)\right]^2.$$

  If $A$ is the design matrix of basis functions evaluated at the sample points, $A_{si} = \psi_i(x_s)$, then $\beta = \operatorname*{argmin}_{c \in \mathbb{R}^t} \|Ac - f\|_2^2$, and $\beta = [A^T A]^{-1} A^T f$.
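
As a rough illustration of the least-squares step, the sketch below fits a 1D surrogate in the Legendre basis shifted to $[0, 1]$ by solving the normal equations $A^T A \beta = A^T f$. It is an assumption-laden toy, not the authors' implementation: the helper names (`solve`, `shifted_legendre`, `least_squares_fit`) are hypothetical, the sample points are chosen arbitrarily, and a production code would more likely use a QR-based solver than the normal equations. It relies on the fact that every shifted Legendre polynomial of degree $k \ge 1$ integrates to zero over $[0, 1]$, so $\int_0^1 p(x)\,dx = \beta_0$.

```python
def solve(M, b):
    """Solve M y = b by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(c + 1, n):
            m = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= m * aug[c][k]
    y = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][k] * y[k] for k in range(r + 1, n))
        y[r] = (aug[r][n] - tail) / aug[r][r]
    return y

def shifted_legendre(k_max, x):
    """Values P_0(t), ..., P_kmax(t) with t = 2x - 1 (three-term recurrence)."""
    t = 2.0 * x - 1.0
    vals = [1.0, t]
    for k in range(1, k_max):
        vals.append(((2 * k + 1) * t * vals[k] - k * vals[k - 1]) / (k + 1))
    return vals[: k_max + 1]

def least_squares_fit(xs, fs, degree):
    """Coefficients beta solving the normal equations A^T A beta = A^T f."""
    A = [shifted_legendre(degree, x) for x in xs]  # design matrix rows
    t = degree + 1
    AtA = [[sum(row[i] * row[j] for row in A) for j in range(t)] for i in range(t)]
    Atf = [sum(row[i] * fv for row, fv in zip(A, fs)) for i in range(t)]
    return solve(AtA, Atf)

# Hypothetical data: 20 midpoints of a uniform grid, f(x) = x^2.
xs = [(i + 0.5) / 20 for i in range(20)]
fs = [x * x for x in xs]
beta = least_squares_fit(xs, fs, degree=3)
integral_p = beta[0]  # exact integral of the surrogate over [0, 1]
print(beta, integral_p)
```

Because $x^2 = \tfrac{1}{3} P_0 + \tfrac{1}{2} P_1 + \tfrac{1}{6} P_2$ in this basis, the cubic fit reproduces it and `integral_p` matches $\int_0^1 x^2\,dx = 1/3$ up to rounding.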

- 8. Separate the original problem into two parts. Defect Corrections Approach: (1) approximate $f(x)$ by a quasi-interpolant (control variate) $p(x)$ that can be integrated exactly, and (2) approximate the integral by the sum of the exact integral of $p$ plus an approximation of the integral of the smaller residual $f - p$:

  $$\int_\Omega f(x)\,dx = \int_\Omega [p(x) + f(x) - p(x)]\,dx = \int_\Omega p(x)\,dx + \int_\Omega [f(x) - p(x)]\,dx$$

  $$\approx \int_\Omega p(x)\,dx + \sum_i w_i\,[f(x_i) - p(x_i)] \approx \int_\Omega p(x)\,dx + \frac{1}{n}\sum_i [f(x_i) - p(x_i)],$$

  that is, (exact) + (approximation).

- 9. Separate the original problem into two parts. The error for the detrended integral is proportional to the standard deviation of the residual $p(x) - f(x)$, not of $f(x)$. The residual errors are the only errors in the integration approximation:

  $$I(f) = \int_\Omega f(x)\,dx \qquad (3)$$

  $$\approx \hat{I}(f) = \int_\Omega p(x)\,dx + \frac{1}{n}\sum_i [f(x_i) - p(x_i)] \qquad (4)$$

  PMC error bound: $\|e_n\| = O\!\left(\frac{1}{\sqrt{n}}\,\sigma(f - p)\right)$, and QMC error bound: $\|e_n\| \le O\!\left(\frac{1}{n}\,V[f - p]\,(\log n)^{d-1}\right)$.
  - The error bounds are based on $\sigma(f - p)$ and $V[f - p]$ instead of $\sigma(f)$ and $V[f]$. The least squares fit reduces these quantities.
  - The convergence rates are the same; the constants are reduced.
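
The detrended estimate (4) can be sketched end to end for the 6D test integrand used in the figures. This is an illustrative toy under stated assumptions, not the authors' code: it uses only a degree $K = 1$ surrogate (constant plus one shifted linear Legendre term per coordinate), i.i.d. uniform samples with a fixed seed, and hypothetical helper names (`solve`, `basis`, `detrended_estimate`). One property worth noting: when $p$ is an ordinary least-squares fit on the same samples and the basis contains the constant function, the residuals sum to zero, so the correction term vanishes up to rounding and the estimate equals $\int p$.

```python
import math
import random

def solve(M, b):
    """Solve M y = b by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(c + 1, n):
            m = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= m * aug[c][k]
    y = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][k] * y[k] for k in range(r + 1, n))
        y[r] = (aug[r][n] - tail) / aug[r][r]
    return y

def f(x):
    """Test integrand from the slides: sum_i cos(i * x_i), i = 1..d."""
    return sum(math.cos((i + 1) * xi) for i, xi in enumerate(x))

def basis(x):
    """Degree K = 1 basis: constant plus shifted linear Legendre terms."""
    return [1.0] + [2.0 * xi - 1.0 for xi in x]

def detrended_estimate(points, values):
    """Return (estimate, exact integral of p, mean residual)."""
    A = [basis(x) for x in points]
    t, n = len(A[0]), len(points)
    AtA = [[sum(row[i] * row[j] for row in A) for j in range(t)] for i in range(t)]
    Atf = [sum(row[i] * v for row, v in zip(A, values)) for i in range(t)]
    beta = solve(AtA, Atf)
    integral_p = beta[0]  # the linear terms integrate to zero over [0, 1]^d
    residual_mean = sum(
        v - sum(b * a for b, a in zip(beta, row)) for v, row in zip(values, A)
    ) / n
    return integral_p + residual_mean, integral_p, residual_mean

d, n = 6, 600
rng = random.Random(0)  # fixed seed so the run is repeatable
points = [[rng.random() for _ in range(d)] for _ in range(n)]
values = [f(x) for x in points]
exact = sum(math.sin(i) / i for i in range(1, d + 1))

plain = sum(values) / n
estimate, integral_p, residual_mean = detrended_estimate(points, values)
print(f"plain MC error:  {plain - exact:+.5f}")
print(f"detrended error: {estimate - exact:+.5f}")
print(f"mean residual:   {residual_mean:+.2e}")
```

Higher-degree surrogates such as the cubic and quintic fits in the slides would need the full tensor-product basis; the structure of the computation stays the same.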

- 10. Cubic detrending reduces MC and QMC errors by a factor of 10. Figure: distributions of the error $\hat{I}(f) - I(f)$ for $I(f) = \int_{[0,1]^6} \sum_i \cos(i x_i)\,dx$ (6D, 600 points), for PMC (top) and LDS/QMC (bottom), for detrending with a cubic, $K = 3$, multivariate polynomial. The x-axis bounds are 10 times smaller for the LDS/QMC samples.

- 11. Quintic detrending reduces MC and QMC errors by a factor of 100. Figure: distributions of the error $\hat{I}(f) - I(f)$ for $I(f) = \int_{[0,1]^6} \sum_i \cos(i x_i)\,dx$ (6D, 600 points), for PMC (top) and LDS/QMC (bottom), for detrending with a cubic and a quintic, $K = 3, 5$, polynomial. The x-axis bounds are 10 times smaller for the LDS/QMC samples.

- 12. Detrending reduces the error constant, not the convergence rates. Detrending doesn't change the convergence rates for PMC, $O(n^{-1/2})$, and QMC, $O(n^{-1})$, for $\int_{[0,1]^6} \sum_i \cos(i x_i)\,dx$. Figure: errors for PMC (upper, dashed lines) and QMC (lower, dash-dotted lines) for constant $K = 0$ (left), cubic $K = 3$ (center), and quintic $K = 5$ (right).

- 13. Detrending reduces the error constant, not the convergence rates. Figure: mean errors for $\int_{[0,1]^5} \sum_i \cos(i x_i)\,dx$ with detrending of degrees $K = 0, 1, 2, 3, 4, 5$ for 500 to 4000 samples. Convergence rates don't change, but the error constant is reduced by a factor of 0.001.

- 14. Detrending reduces the errors for most smooth examples. Genz Product Peak: $I(f) = \int \prod_{i=1}^{6} \left(a_i^{-2} + (x_i - b_i)^2\right)^{-1} dx$. Figure: error distributions with detrending of degrees $K = 0, 3, 5$. Note the severely biased error for the high degree detrending.

- 15. The advantage of QMC samples is smaller for higher degree detrending. PMC and QMC errors for the 6D Genz oscillatory example $f(x) = \cos\!\left(2\pi r + \sum_{i=1}^{d} \beta_i x_i\right)$. Figure: errors for PMC (blue) and QMC (green) with detrending degrees $K = 0, 1, 2, 3, 4, 5$ for 500 to 4000 samples. Convergence rates don't change; the error constant is reduced by a factor of $10^{-5}$.

- 16. Cross-validation error bounds and estimates are accurate. The cross-validation error estimates (dashed lines) are close to the true errors (lines with circles) for $\int_{[0,1]^5} \sum_i \cos(i x_i)\,dx$. Figure: PMC (top) and QMC (bottom) plots of the worst-case error bounds (red), true error (black), and cross-validation error estimates (dashed). The cross-validation error estimates are better for QMC than PMC.

- 17. Tensor product orthogonal multivariate basis functions. The tensor product form of orthogonal multivariate basis functions provides an effective data structure. A basis function $\psi^*(x)$ of degree $j$ is decomposed into the product of orthogonal 1D basis functions,

  $$\psi^*(x) = \prod_{i} \phi_{k_i}(x_i), \qquad \sum_i k_i \le j.$$

  The one-dimensional basis functions $\phi_k$ are ordered so that the maximum degree of $\phi_k(x_i)$ is $x_i^k$. Here $x_i$ is the $i$th component of $x$. Typically, we use the orthogonal Legendre or Chebyshev polynomials shifted to the interval of interest, e.g. $[0, 1]$.
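
One way to picture the tensor-product data structure is to store each multivariate basis function as a tuple of 1D degrees $(k_1, \ldots, k_d)$ with $\sum_i k_i \le K$. The sketch below is a guess at such a layout, assuming shifted Legendre polynomials for the 1D functions; the names `multi_indices`, `shifted_legendre`, and `tensor_basis` are hypothetical. The brute-force enumeration is only practical for small $d$ and $K$.

```python
from itertools import product
from math import comb

def multi_indices(dim, degree):
    """All degree tuples (k_1, ..., k_dim) with total degree <= `degree`.

    Brute-force filter over (degree + 1)^dim candidates: fine for a small
    illustration, far too slow for dim = 20.
    """
    return [k for k in product(range(degree + 1), repeat=dim) if sum(k) <= degree]

def shifted_legendre(k_max, x):
    """Values P_0(t), ..., P_kmax(t) with t = 2x - 1 (three-term recurrence)."""
    t = 2.0 * x - 1.0
    vals = [1.0, t]
    for k in range(1, k_max):
        vals.append(((2 * k + 1) * t * vals[k] - k * vals[k - 1]) / (k + 1))
    return vals[: k_max + 1]

def tensor_basis(x, indices, degree):
    """Evaluate every basis function prod_i phi_{k_i}(x_i) at the point x."""
    tables = [shifted_legendre(degree, xi) for xi in x]  # 1D values per coordinate
    values = []
    for k in indices:
        v = 1.0
        for i, ki in enumerate(k):
            v *= tables[i][ki]
        values.append(v)
    return values

indices = multi_indices(dim=3, degree=2)
row = tensor_basis([0.5, 0.5, 0.5], indices, degree=2)  # one design-matrix row
print(len(indices), comb(3 + 2, 2))
```

Each call to `tensor_basis` yields one row of the design matrix $A$, so the 1D tables are computed once per sample point and reused across all basis functions.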

- 18. Curse of Dimensionality for polynomial detrending. High degree polynomials in high dimensions are quickly constrained by the Curse of Dimensionality. For the least squares coefficients to be identifiable, the number of samples must be ≥ the number of coefficients in the detrending function.

  | Degree | d = 1 | d = 2 | d = 3 | d = 4 | d = 5 | d = 10 | d = 20 |
  |---|---|---|---|---|---|---|---|
  | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
  | 1 | 2 | 3 | 4 | 5 | 6 | 11 | 21 |
  | 2 | 3 | 6 | 10 | 15 | 21 | 66 | 231 |
  | 3 | 4 | 10 | 20 | 35 | 56 | 286 | 1,771 |
  | 4 | 5 | 15 | 35 | 70 | 126 | 1,001 | 10,626 |
  | 5 | 6 | 21 | 56 | 126 | 252 | 3,003 | 53,130 |
  | 10 | 11 | 66 | 286 | 1,001 | 3,003 | 184,756 | 30,045,015 |

  The mixed variable terms in multivariate polynomials create an explosion in the number of terms as a function of the degree and dimension. For example, to uniquely define a 5th degree polynomial in 20 dimensions, we need at least 53,130 points. To overcome this restriction, we find sparse solutions to the least-squares problem using a LASSO-like penalty function.
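
The counts quoted on this slide agree with the standard formula for the number of monomials of total degree at most $K$ in $d$ variables, $\binom{d + K}{K}$. A short check, with `num_terms` as a hypothetical helper name:

```python
from math import comb

def num_terms(dim, degree):
    """Number of monomials of total degree <= `degree` in `dim` variables."""
    return comb(dim + degree, degree)

# Reproduce a few entries quoted on the slide.
for dim, degree in [(20, 5), (10, 10), (20, 10)]:
    print(f"d = {dim:2d}, K = {degree:2d}: {num_terms(dim, degree):,} terms")
```

This is also the minimum number of samples needed for the unpenalized least-squares coefficients to be identifiable.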

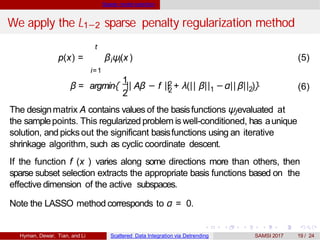

- 19. Sparse model selection. We apply the $L_{1-2}$ sparse penalty regularization method

  $$p(x) = \sum_{i=1}^{t} \beta_i \psi_i(x) \qquad (5)$$

  $$\beta = \operatorname{argmin}\left\{ \tfrac{1}{2}\|A\beta - f\|_2^2 + \lambda\left(\|\beta\|_1 - \alpha\|\beta\|_2\right) \right\} \qquad (6)$$

  The design matrix $A$ contains values of the basis functions $\psi_j$ evaluated at the sample points. This regularized problem is well-conditioned, has a unique solution, and picks out the significant basis functions using an iterative shrinkage algorithm, such as cyclic coordinate descent. If the function $f(x)$ varies along some directions more than others, then sparse subset selection extracts the appropriate basis functions based on the effective dimension of the active subspaces. Note the LASSO method corresponds to $\alpha = 0$.
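
For the special case $\alpha = 0$ (plain LASSO), cyclic coordinate descent reduces to repeated soft-thresholding of one coefficient at a time. The sketch below covers only that special case and is not the $L_{1-2}$ solver from the talk; the function names and the fixed number of sweeps are assumptions made for illustration. It minimizes $\tfrac{1}{2}\|A\beta - f\|_2^2 + \lambda\|\beta\|_1$.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam; values within [-lam, lam] become 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def lasso_cd(A, f, lam, sweeps=200):
    """Cyclic coordinate descent for 0.5 * ||A b - f||^2 + lam * ||b||_1."""
    n, t = len(A), len(A[0])
    beta = [0.0] * t
    resid = list(f)  # residual f - A beta, kept up to date
    col_sq = [sum(A[s][j] ** 2 for s in range(n)) for j in range(t)]
    for _ in range(sweeps):
        for j in range(t):
            if col_sq[j] == 0.0:
                continue  # an all-zero column carries no information
            # Correlation of column j with the residual that excludes term j.
            rho = sum(A[s][j] * resid[s] for s in range(n)) + col_sq[j] * beta[j]
            new = soft_threshold(rho, lam) / col_sq[j]
            delta = new - beta[j]
            if delta != 0.0:
                for s in range(n):
                    resid[s] -= A[s][j] * delta
                beta[j] = new
    return beta

# Tiny design matrix with orthogonal columns, so the answer is known in
# closed form: beta_j = soft_threshold(a_j . f, lam) / ||a_j||^2.
A = [[1.0, 1.0], [1.0, -1.0]]
f = [3.0, 1.0]
beta_small = lasso_cd(A, f, lam=1.0)
beta_large = lasso_cd(A, f, lam=3.0)
print(beta_small, beta_large)
```

With the larger penalty the second coefficient is driven exactly to zero, which is the mechanism that selects a sparse subset of basis functions.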

- 20. Sparse model selection. Lasso detrending allows high degree polynomial dictionaries for sparse sample distributions. Figure: errors for $\int_{[0,1]^6} \sum_i \cos(i x_i)\,dx$ for PMC (top) and QMC (bottom), degrees $K = 0, 3, 5$. The $K = 5$ fits keep 35% (PMC) or 29% (QMC) of terms.

- 21. Least squares detrending = a weighted quadrature rule. Alternative approach: Define weights so the weighted quadrature rule is exact for a class of basis functions. For example, given a sample set $\{x_i\}$, solve for the weights $\{w_i\}$ so that the weighted quadrature rule $I(f) = \int p(x)\,dx \equiv \sum_i w_i f(x_i)$ is exact if $p(x)$ is a polynomial of degree $< K$. We define the design matrix $A$ to be the values of the basis functions evaluated at the data points. Define the quadrature array $V = (v_1, v_2, \ldots, v_k)$, where $v_i$ is the integral of the basis function $\psi_i$ over the domain. The quadrature weights $W = (w_1, w_2, \ldots, w_n)$ can be defined as the solution of the system of tensor equations $W = V (A^T A)^{-1} A^T$. This is an $O(n^3)$ operation. That is, instead of equal quadrature weights, we solve for the weights that make the rule exact for polynomials of degree $< K$.
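
The weight formula $W = V (A^T A)^{-1} A^T$ can be checked on a small 1D case. The sketch below is illustrative only: it assumes the shifted Legendre basis on $[0, 1]$ (so $V = (1, 0, \ldots, 0)$), five arbitrarily chosen sample points, and hypothetical helper names. It computes $y = (A^T A)^{-1} V^T$ with one linear solve and then $W = (A y)^T$, rather than forming the inverse explicitly.

```python
def solve(M, b):
    """Solve M y = b by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(c + 1, n):
            m = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= m * aug[c][k]
    y = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][k] * y[k] for k in range(r + 1, n))
        y[r] = (aug[r][n] - tail) / aug[r][r]
    return y

def shifted_legendre(k_max, x):
    """Values P_0(t), ..., P_kmax(t) with t = 2x - 1 (three-term recurrence)."""
    t = 2.0 * x - 1.0
    vals = [1.0, t]
    for k in range(1, k_max):
        vals.append(((2 * k + 1) * t * vals[k] - k * vals[k - 1]) / (k + 1))
    return vals[: k_max + 1]

def quadrature_weights(xs, degree):
    """Weights W = V (A^T A)^{-1} A^T for the shifted Legendre basis."""
    A = [shifted_legendre(degree, x) for x in xs]
    t = degree + 1
    V = [1.0] + [0.0] * degree  # integrals of P_0..P_degree over [0, 1]
    AtA = [[sum(row[i] * row[j] for row in A) for j in range(t)] for i in range(t)]
    y = solve(AtA, V)  # y = (A^T A)^{-1} V^T
    return [sum(a * yj for a, yj in zip(row, y)) for row in A]

xs = [0.1, 0.3, 0.5, 0.8, 0.9]  # hypothetical scattered sample locations
w = quadrature_weights(xs, degree=2)
first_moment = sum(wi * x for wi, x in zip(w, xs))
second_moment = sum(wi * x * x for wi, x in zip(w, xs))
print(w, sum(w), first_moment, second_moment)
```

Since $A^T W^T = V^T$ by construction, the rule integrates every polynomial in the span of the basis exactly: here the weights sum to 1 and reproduce $\int_0^1 x\,dx = 1/2$ and $\int_0^1 x^2\,dx = 1/3$ up to rounding. Applying these weights to samples of $f$ gives the same value as integrating the least-squares surrogate, $V\beta$.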

- 22. Summary and Conclusions. Detrending is a simple, powerful algorithm to improve the accuracy of both PMC and QMC integration algorithms.
  - In moderately low dimensions ($d \le 6$), using the full polynomial for degrees $K \le 5$ is computationally feasible and efficient;
  - in high dimensions, or for high-degree polynomials, lasso is an efficient and effective algorithm for dynamically identifying the effective dimensions and appropriate basis functions;
  - the cross-validation error estimates are reasonably accurate for the problems we have tested; and
  - the detrending can introduce significant bias in the integration error.

- 23. Summary and Conclusions. Current Research Directions:
  - mapping LDS to Chebyshev distributions to reduce errors;
  - adaptive sampling based on interpolation model error estimates;
  - investigating local shape-preserving (positivity, monotonicity, convexity) interpolants that preserve local structure in the samples; and
  - a SAMSI working group being formed for Sampling and Analysis in High Dimensions When Samples Are Expensive.
