Accelerating Ceph Performance with High Speed Networks and Protocols - Qingchun Song

- 1. Accelerating Ceph Performance with High Speed Networks and Protocols. Qingchun Song, Sr. Director of Market Development, APJ & China

- 2. Mellanox Overview • Ticker: MLNX • Company headquarters: Yokneam, Israel and Sunnyvale, California • Worldwide offices • ~2,900 employees worldwide

- 3. Leadership in Storage Platforms: Delivering the Highest Data Center Return on Investment • SMB Direct

- 4. Storage & Connectivity Evolution • Timeline: 1990, 2000, 2010, 2015, 2020 • Internal Storage • Distributed Storage: Shared Network; File & Block Data • External Storage: Storage Consolidation; Dedicated FC SAN; Block/Structured Data • Flash/Convergence: Media Migration; Dedicated Eth/IB/FC; All Data Types • Virtualization/Cloud: Data Specific Storage; Lossless IB/Eth; Unstructured Data • NVMe/Big Data: Many Platforms; Dedicated Eth/IB; Right Data for Platform

- 5. Where to Draw the Line? • Traditional storage: legacy DC, FC SAN • Scale-out storage: modern DC, Ethernet Storage Fabric

- 7. Storage or Data Bottleneck: Bandwidth • OSD read: Client (App <-> RBD <-> RADOS) <-> NIC <-> Leaf <-> Spine <-> Leaf <-> NIC <-> OSD <-> NVMe • OSD write: Client (App <-> RBD <-> RADOS) <-> NIC <-> Leaf <-> Spine <-> Leaf <-> NIC <-> OSD <-> NVMe <-> OSD <-> NIC <-> Leaf <-> Spine <-> Leaf <-> NIC <-> OSD <-> NVMe
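The two paths on slide 7 can be compared by counting how often each one crosses the fabric. The following is a minimal sketch, not part of the original deck: the function names are hypothetical, and `Client` abbreviates `Client (App <-> RBD <-> RADOS)`. The write path is taken exactly as written on the slide, which shows a single replication hop.

```python
# Paths copied from slide 7; "Client" stands for Client (App <-> RBD <-> RADOS).
READ_PATH = "Client <-> NIC <-> Leaf <-> Spine <-> Leaf <-> NIC <-> OSD <-> NVMe"
WRITE_PATH = (
    "Client <-> NIC <-> Leaf <-> Spine <-> Leaf <-> NIC <-> OSD <-> NVMe"
    " <-> OSD <-> NIC <-> Leaf <-> Spine <-> Leaf <-> NIC <-> OSD <-> NVMe"
)


def parse_path(path):
    """Split a '<->'-separated path into a list of component names."""
    return [hop.strip() for hop in path.split("<->")]


def fabric_traversals(path):
    """Count passes through the spine (one per leaf-spine-leaf crossing)."""
    return parse_path(path).count("Spine")


def nic_crossings(path):
    """Count how many NICs the data passes through along the path."""
    return parse_path(path).count("NIC")


if __name__ == "__main__":
    for name, path in (("read", READ_PATH), ("write", WRITE_PATH)):
        print(name, "spine passes:", fabric_traversals(path),
              "NIC crossings:", nic_crossings(path))
```

On the slide's paths, the write crosses the spine twice and passes through four NICs, against one spine pass and two NICs for the read. This is why replicated writes put more pressure on network bandwidth than reads do.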

- 8. Ceph Bandwidth Performance Improvement • Aggregate performance of 4 Ceph servers • 25GbE has 92% more bandwidth than 10GbE • 25GbE has 86% more IOPS than 10GbE • Internet search results seem to recommend one 10GbE NIC for each ~15 HDDs in an OSD node (sources: Mirantis, Red Hat, Supermicro, etc.)
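The figures on slide 8 can be put next to the nominal line-rate ratio with a little arithmetic. This is a rough sketch with hypothetical helper names. The only inputs are the slide's percentages and its "~15 HDDs per 10GbE NIC" rule of thumb, which is treated here as a hard divisor purely for illustration.

```python
import math


def speedup(percent_more):
    """Convert 'N% more' into a multiplicative factor (92 -> about 1.92x)."""
    return 1 + percent_more / 100


def nics_for_hdds(hdd_count, hdds_per_nic=15):
    """10GbE NICs suggested by the slide's ~15-HDDs-per-NIC rule of thumb."""
    return math.ceil(hdd_count / hdds_per_nic)


if __name__ == "__main__":
    line_rate_ratio = 25 / 10  # nominal 25GbE vs 10GbE link speed
    print("nominal link ratio:", line_rate_ratio)
    print("measured bandwidth factor:", round(speedup(92), 2))
    print("measured IOPS factor:", round(speedup(86), 2))
    print("NICs for 36 HDDs:", nics_for_hdds(36))
```

The measured gains (about 1.9x) fall short of the nominal 2.5x link ratio, which suggests that part of the extra link capacity is not reached in the tested configuration.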

- 9. Storage or Data Bottleneck: Latency • Servers: higher processing capability, high-density virtualization • Storage: move to all-flash, faster protocols (NVMe-oF) • Cost, risk, complexity • Data center modernization requires future-proof, faster, lossless Ethernet Storage Fabrics • High bandwidth, low latency, zero packet loss • Predictable performance; deterministic and secure fabrics • Simplified security and management • Faster, more predictable performance • Block, file, and object storage

- 10. RDMA Is the Key for Storage Latency • Adapter-based transport

- 11. RDMA Enables Efficient Data Movement • Without RDMA (100GbE with CPU onload): 5.7 GB/s throughput; 20-26% CPU utilization; 4 cores 100% consumed by moving data • With hardware RDMA (100GbE with network offload): 11.1 GB/s throughput at half the latency; 13-14% CPU utilization; more CPU power for applications, better ROI • CPU onload penalties: half the throughput, twice the latency, higher CPU consumption • Network offload: 2X better bandwidth, half the latency, 33% lower CPU • See the demo: https://www.youtube.com/watch?v=u8ZYhUjSUoI
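The headline claims on slide 11 ("2X better bandwidth", "33% lower CPU") can be checked against the raw figures on the same slide. The sketch below uses only those figures. The dictionary keys and function names are hypothetical, and because the CPU numbers are given as ranges, the reduction is reported as a range too.

```python
# Figures copied from slide 11; CPU utilization is given there as a range.
WITHOUT_RDMA = {"throughput_gb_per_s": 5.7, "cpu_pct": (20, 26)}
WITH_RDMA = {"throughput_gb_per_s": 11.1, "cpu_pct": (13, 14)}


def throughput_ratio(with_rdma, without_rdma):
    """How many times higher the RDMA throughput is."""
    return with_rdma["throughput_gb_per_s"] / without_rdma["throughput_gb_per_s"]


def cpu_reduction_range(with_rdma, without_rdma):
    """Smallest and largest relative CPU reduction consistent with the ranges."""
    with_lo, with_hi = with_rdma["cpu_pct"]
    without_lo, without_hi = without_rdma["cpu_pct"]
    smallest = 1 - with_hi / without_lo   # worst RDMA case vs best non-RDMA case
    largest = 1 - with_lo / without_hi    # best RDMA case vs worst non-RDMA case
    return smallest, largest


if __name__ == "__main__":
    ratio = throughput_ratio(WITH_RDMA, WITHOUT_RDMA)
    smallest, largest = cpu_reduction_range(WITH_RDMA, WITHOUT_RDMA)
    print(f"throughput ratio: {ratio:.2f}x")
    print(f"CPU reduction: {smallest:.0%} to {largest:.0%}")
```

The throughput ratio comes out just under 2x, and the slide's "33% lower CPU" sits inside the computed reduction range, so the summary claims are consistent with the raw numbers.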

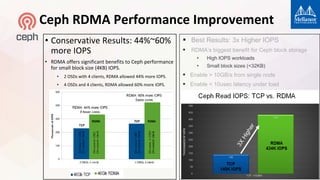

- 12. Best Results: 3x Higher IOPS • RDMA's biggest benefit for Ceph block storage: high-IOPS workloads, small block sizes (<32KB) • Enables > 10 GB/s from a single node • Enables < 10 µs latency under load • Ceph RDMA Performance Improvement • Conservative results: 44% to 60% more IOPS • RDMA offers significant benefits to Ceph performance for small-block (4KB) IOPS • With 2 OSDs and 4 clients, RDMA allowed 44% more IOPS • With 4 OSDs and 4 clients, RDMA allowed 60% more IOPS

- 13. RDMA: Mitigates Meltdown Mess, Stops Spectre Security Slowdown • Performance impact shown: 0% and -47% • Before: before applying the Meltdown and Spectre software patches • After: after applying the Meltdown and Spectre software patches

- 14. Ceph RDMA Status • Ceph RDMA working group: Mellanox, Xsky, Samsung, SanDisk, Red Hat • The latest stable Ceph RDMA version: https://github.com/Mellanox/ceph/tree/luminous-12.1.0-rdma • Bring Up Ceph RDMA - Developer's Guide: https://community.mellanox.com/docs/DOC-2721 • RDMA/RoCE Configuration Guide: https://community.mellanox.com/docs/DOC-2283
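For orientation, enabling RDMA in Ceph's async messenger is mostly a matter of a few `ceph.conf` settings. The sketch below renders such a fragment from Python. It is an illustration, not a copy of the guides linked on slide 14. The option names `ms_type` and `ms_async_rdma_device_name` belong to Ceph's async messenger but should be verified against the guide for the release in use. The device name `mlx5_0` is a placeholder, and `render_rdma_conf` is a hypothetical helper.

```python
def render_rdma_conf(device_name):
    """Render a [global] ceph.conf fragment that selects the RDMA messenger.

    Assumption: option names follow Ceph's async messenger settings; check
    them against the documentation for your Ceph release before use.
    """
    lines = [
        "[global]",
        "ms_type = async+rdma",
        f"ms_async_rdma_device_name = {device_name}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # "mlx5_0" is a placeholder; list the actual RDMA devices on the host first.
    print(render_rdma_conf("mlx5_0"), end="")
```

Rendering the fragment from a function keeps the device name as the only per-host input, which is usually the one value that differs between nodes.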

- 15. Storage or Data Bottleneck: Storage Fabric • Ethernet Storage Fabric test results (www.zeropacketloss.com, www.Mellanox.com/tolly) • Microburst absorption capability, max burst size (MB) at packet sizes 64B / 512B / 1.5KB / 9KB: Spectrum 5.2 / 8.4 / 9.6 / 9.7; Tomahawk 0.3 / 0.9 / 1.0 / 1.1 • Other categories compared, each rated from Good to Bad: congestion management, fairness, avoidable packet loss, latency

- 16. Summary • Ceph benefits from a faster network • 10GbE is not enough! • RDMA further optimizes Ceph performance • RDMA reduces the impact of the Meltdown/Spectre fixes • ESF (Ethernet Storage Fabric) is the trend

- 17. Thank You