“Accelerating Newer ML Models Using the Qualcomm AI Stack,” a Presentation from Qualcomm

- 1. Accelerating Newer ML Models Using Qualcomm® AI Stack. Dr. Vinesh Sukumar, Sr. Director, AI/ML Product, Qualcomm Technologies, Inc. Snapdragon and Qualcomm branded products are products of Qualcomm Technologies, Inc. and/or its subsidiaries.

- 2. On-device intelligence is paramount: process data closest to the source and complement the cloud. Benefits: privacy, reliability, low latency, and efficient use of network bandwidth. The center of gravity, historically in the cloud, is moving to the edge, driven by increased demand for personalization, security, autonomy, and efficiency. © 2023 Qualcomm Technologies Inc.

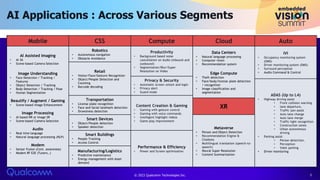

- 3. AI Applications Across Various Segments (Mobile, CSS, Compute, Cloud, Auto)
  - AI Assisted Imaging: AI 3A; scene-based camera selection
  - Image Understanding: face detection / tracking / features; object detection / tracking; body detection / tracking / pose; human segmentation
  - Beautify / Augment / Gaming: scene-based image enhancement
  - Image Processing: AI-based noise reduction (NR) or image super resolution (SR); scene-based camera selection
  - Audio: real-time language; natural language processing (NLP)
  - Modem: sensor fusion (context awareness); modem RF E2E (tuners, etc.)
  - Robotics: autonomous navigation; obstacle avoidance
  - Productivity: background noise cancellation on audio (inbound and outbound); segmentation / blur / super resolution on video
  - Data Centers: natural language processing; computer vision; recommendation systems
  - Edge Compute: theft detection; face / body / license plate detection and recognition; image classification and segmentation
  - IVI: occupancy monitoring system (OMS); driver monitoring system (DMS); surround perception; audio command and control
  - Retail: visitor / face / gesture recognition; object / people detection and counting; barcode decoding
  - Privacy & Security: automatic screen unlock and login; privacy alert; guard mode
  - ADAS (up to L4): highway driving assist; front collision warning; lane departure; traffic jam assist; auto lane change; auto lane merge; traffic light recognition; construction zones; urban autonomous driving; parking assist; person detection; perception; valet parking; driver monitoring
  - Transportation: license plate recognition; face and facial landmark detection; drowsiness detection
  - Content Creation & Gaming: gaming with gesture control; gaming with voice commands; intelligent highlight videos; game play improvement
  - XR / Smart Devices: object / people detection; speaker detection
  - Metaverse: person and object detection; recommendation engine and chatbots; multilingual translation (speech-to-speech); neural super resolution; content summarization
  - Smart Buildings: people tracking; access control
  - Performance & Efficiency: power and screen optimization
  - Manufacturing / Logistics: predictive maintenance; energy management with asset demand

- 4. Emerging AI Models for the Various Markets. Emerging deep learning models:
  - Generative networks (image-to-image transformation)
  - Time-series networks (behavior-to-text transformation)
  - Transformer networks for NLP/NLU (sequence-to-sequence transformation)
  - Canvas networks (virtual transformation for avatars)

- 5. Vision: Accelerate Solution Deployment
  - Performance: accelerate “out of box” operator functionality and performance
  - Scalability: programming consistency from cloud to edge
  - Tools: accelerate AI solution deployment with investment in tools
  - Innovation: drive product leadership (pre-emption, DFS, multi-chaining)

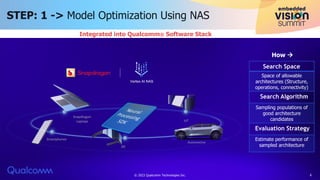

- 6. STEP 1: Model Optimization Using Neural Architecture Search (NAS), Integrated into Qualcomm® Software Stack. How it works:
  - Search space: the space of allowable architectures (structure, operations, connectivity)
  - Search algorithm: sampling populations of good architecture candidates
  - Evaluation strategy: estimating the performance of sampled architectures
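The three NAS components listed above (search space, search algorithm, evaluation strategy) can be illustrated with a toy evolutionary search. This is a minimal sketch under stated assumptions, not Qualcomm's NAS implementation: the search space, `proxy_accuracy`, `proxy_latency_ms`, and the 3 ms latency target are all hypothetical stand-ins (a real evaluation strategy would train or estimate each candidate's accuracy and measure latency on the target device), and the reward borrows a MnasNet-style soft latency constraint.

```python
import random

# Hypothetical search space: each of NUM_STAGES stages picks a depth, width, and kernel size.
SEARCH_SPACE = {
    "depth": [1, 2, 3, 4],       # blocks per stage
    "width": [16, 24, 32, 48],   # output channels per stage
    "kernel": [3, 5, 7],         # depthwise kernel size
}
KEYS = ("depth", "width", "kernel")
NUM_STAGES = 3


def sample_arch(rng):
    """Draw one architecture uniformly from the search space."""
    return tuple(
        tuple(rng.choice(SEARCH_SPACE[k]) for k in KEYS) for _ in range(NUM_STAGES)
    )


def mutate(arch, rng):
    """Resample a single (stage, attribute) choice of a parent architecture."""
    stages = [list(stage) for stage in arch]
    i = rng.randrange(NUM_STAGES)
    j = rng.randrange(len(KEYS))
    stages[i][j] = rng.choice(SEARCH_SPACE[KEYS[j]])
    return tuple(tuple(stage) for stage in stages)


def proxy_accuracy(arch):
    """Hypothetical stand-in for training and validating a candidate:
    more capacity helps, with diminishing returns."""
    capacity = sum(d * w * k for d, w, k in arch)
    return 1.0 - 1.0 / (1.0 + capacity / 500.0)


def proxy_latency_ms(arch):
    """Hypothetical stand-in for an on-device latency measurement or lookup table."""
    return sum(0.02 * d * w * k * k / 9.0 for d, w, k in arch)


def reward(arch, target_ms=3.0, beta=-0.07):
    """Latency-aware objective with a soft constraint: accuracy * (latency / target) ** beta."""
    return proxy_accuracy(arch) * (proxy_latency_ms(arch) / target_ms) ** beta


def evolve(generations=50, population_size=20, seed=0):
    """Simple evolutionary search: keep the best quarter, refill with mutated children."""
    rng = random.Random(seed)
    population = [sample_arch(rng) for _ in range(population_size)]
    for _ in range(generations):
        parents = sorted(population, key=reward, reverse=True)[: population_size // 4]
        children = [
            mutate(rng.choice(parents), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    return max(population, key=reward)


best = evolve()
print(best, round(proxy_accuracy(best), 3), round(proxy_latency_ms(best), 2))
```

Keeping the best quarter of each generation (elitism) guarantees that the best reward found never decreases; replacing `evolve` with pure random sampling would give the simplest baseline search algorithm for comparison.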

- 7. NAS Results: Observations from ML Models

  | Category | Model | Task | Dataset | Results |
  |---|---|---|---|---|
  | CNNs | EfficientNet-B0 | Image classification | ImageNet | +1.0% accuracy, 33% latency reduction |
  | CNNs | ResNet-18 | Image classification | ImageNet | +2.2% accuracy, 31% latency reduction |
  | CNNs | RetinaNet | 2D object detection | Pascal | +1.5 mAP, 11% latency reduction |
  | CNNs | EfficientDet-D0 | 2D object detection | COCO | +0.8 mAP, 30% latency reduction |
  | RNNs | CRNN | Keyword spotting | Google Speech Commands v2 | +1.0% accuracy, similar model size |
  | Transformers | MobileBERT | Question answering | SQuAD v1.1 | On-par accuracy, 12% latency reduction |

- 8. STEP 2: New Techniques to Quantize Models, Integrated into Qualcomm Software Stack
  - FP32 model compared to quantized model: promising results show that low-precision integer inference can become widespread.
  - Virtually the same accuracy between an FP32 and a quantized AI model, through:
    - Automated, data-free, post-training methods
    - An automated, training-based mixed-precision method
  - Automated reduction in the precision of weights and activations while maintaining accuracy: models are trained at high precision (32-bit floating point, e.g. 3452.3194) and run inference at lower precision (16-bit integer, e.g. 3452; 8-bit integer, e.g. 255; 4-bit integer, e.g. 15).
  - Increase in performance per watt from savings in memory and compute: up to 4X (16-bit integer), up to 16X (8-bit integer), up to 64X (4-bit integer).
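The precision reduction described above can be made concrete with uniform affine quantization, which maps a float range onto 2^b integer levels using a scale and a zero point. This is an illustrative sketch, not the stack's quantizer: it assumes one scale per tensor and random stand-in weights, and it reports only storage reduction and rounding error per bit width. The performance-per-watt factors on the slide come from hardware and are not modeled here.

```python
import numpy as np


def quantize_affine(x, num_bits):
    """Asymmetric (affine) uniform quantization of a float array to unsigned num_bits integers."""
    x = np.asarray(x, dtype=np.float64)
    qmin, qmax = 0, 2 ** num_bits - 1
    # The representable range must include 0 so that zero is exactly representable.
    x_min = min(float(x.min()), 0.0)
    x_max = max(float(x.max()), 0.0)
    scale = (x_max - x_min) / (qmax - qmin) or 1.0  # guard against an all-zero tensor
    zero_point = int(np.clip(round(qmin - x_min / scale), qmin, qmax))
    q = np.clip(np.round(x / scale) + zero_point, qmin, qmax).astype(np.int64)
    return q, scale, zero_point


def dequantize_affine(q, scale, zero_point):
    """Map integers back to approximate real values."""
    return (q.astype(np.float64) - zero_point) * scale


rng = np.random.default_rng(0)
weights = rng.normal(0.0, 1.0, size=10_000)  # hypothetical FP32-trained weights
for bits in (16, 8, 4):
    q, scale, zp = quantize_affine(weights, bits)
    max_err = np.abs(dequantize_affine(q, scale, zp) - weights).max()
    print(f"INT{bits}: {2 ** bits} levels, {32 // bits}x less storage than FP32, "
          f"max abs error {max_err:.2e}")
```

Each bit removed halves the number of levels, so the worst-case rounding error (about half a step) grows accordingly; the automated methods named on the slide exist to keep that error from degrading accuracy.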

- 9. Pushing the Limits: Quantization & Pruning
  - Data-free quantization. Question: how can we make quantization as simple as possible? Created an automated method that addresses bias and imbalance in weight ranges (no training; data-free). SOTA 8-bit results, making 8-bit weight quantization ubiquitous: <1% accuracy drop for MobileNet V2 against the FP32 model.
  - AdaRound. Question: is rounding to the nearest value the best approach for quantization? Created an automated method for finding the best rounding choice (no training; minimal unlabeled data). SOTA 4-bit weight results, making 4-bit weight quantization ubiquitous: <2.5% accuracy drop for MobileNet V2 against the FP32 model.
  - Bayesian Bits. Question: can we quantize layers to different bit widths based on precision sensitivity? Created a novel method to learn mixed-precision quantization that jointly learns bit-width precision and pruning (training required; training data required). SOTA mixed-precision results, automating mixed-precision quantization and enabling the trade-off between accuracy and kernel bit-width: <1% accuracy drop for MobileNet V2 against the FP32 model, for a mixed-precision model with computational complexity equivalent to a 4-bit weight model.
  - Highest focus of attention
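One ingredient of the published data-free quantization method (Nagel et al., ICCV 2019) is cross-layer equalization, which targets the imbalance in per-channel weight ranges that hurts per-tensor quantization. The sketch below is a simplified illustration, assuming two fully connected layers joined by a ReLU; it omits the bias-absorption and bias-correction steps, and the layer shapes and data are hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def cross_layer_equalize(w1, b1, w2):
    """Rescale the channels shared by two consecutive layers, y = w2 @ relu(w1 @ x + b1).

    w1: (C, D_in), one row per output channel of layer 1
    b1: (C,), bias of layer 1
    w2: (D_out, C), one column per input channel of layer 2
    """
    r1 = np.abs(w1).max(axis=1)  # per-channel weight range of layer 1
    r2 = np.abs(w2).max(axis=0)  # per-channel weight range of layer 2
    s = np.ones_like(r1)
    ok = (r1 > 0) & (r2 > 0)     # leave dead channels untouched
    s[ok] = np.sqrt(r1[ok] * r2[ok]) / r2[ok]
    # relu(z / s) == relu(z) / s for s > 0, so moving the factor s_i from layer 1
    # to layer 2 leaves the network function unchanged; both ranges become sqrt(r1 * r2).
    return w1 / s[:, None], b1 / s, w2 * s[None, :]


def fake_quant_per_tensor(w, num_bits=8):
    """Symmetric per-tensor quantize-dequantize (one scale for the whole tensor)."""
    qmax = 2 ** (num_bits - 1) - 1
    scale = np.abs(w).max() / qmax
    return np.clip(np.round(w / scale), -qmax, qmax) * scale


rng = np.random.default_rng(0)
# Hypothetical layer pair whose channel ranges span three orders of magnitude.
w1 = rng.normal(size=(8, 4)) * np.logspace(-2, 1, 8)[:, None]
b1 = rng.normal(size=8)
w2 = rng.normal(size=(3, 8))
x = rng.normal(size=(4, 256))

w1e, b1e, w2e = cross_layer_equalize(w1, b1, w2)
y_ref = w2 @ relu(w1 @ x + b1[:, None])
for name, (wa, ba, wb) in {"original": (w1, b1, w2), "equalized": (w1e, b1e, w2e)}.items():
    # Weights are quantized to INT8; biases stay in float for simplicity.
    y_q = fake_quant_per_tensor(wb) @ relu(fake_quant_per_tensor(wa) @ x + ba[:, None])
    print(name, "INT8 output MSE:", np.mean((y_q - y_ref) ** 2))
```

The equalized pair computes exactly the same function in float, but its per-channel ranges are balanced, so a single per-tensor scale wastes fewer levels on the widest channels.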

- 10. Moving Towards W4A8: Newer ML Models. With better PTQ and QAT techniques, more and more models will be able to use W4A8 (4-bit weights, 8-bit activations), resulting in better energy efficiency. This will be a major push for AI solution deployment on the edge.

  | Model | FP32 accuracy | INT4 accuracy | Comments |
  |---|---|---|---|
  | ResNet50 | 76.1% | 75.4% | Using post-training quantization (PTQ) |
  | ResNet18 | 69.8% | 69% | |
  | EfficientNet-Lite | 75.3% | 74.3% | |
  | Regnext | 78.3% | 77.2% | |
  | Mobilenet-v2 | 71.7% | 71.3% | Using quantization-aware training (QAT) |

  Segmentation models (8-bit vs. 4-bit weights): >20% power savings and >40% memory-footprint savings.

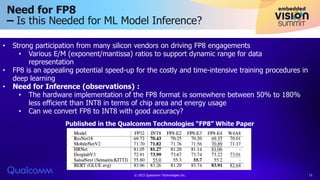
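W4A8 pairs 4-bit weights with 8-bit activations. The NumPy sketch below simulates a W4A8 linear layer under these assumptions, none of which come from the slide: symmetric quantization, one scale per output channel for the INT4 weights, one per-tensor scale for the INT8 activations, and INT32 accumulation. It is not how any particular Qualcomm backend implements the format, and the hypothetical `weight_bytes` helper assumes two 4-bit weights are packed per byte.

```python
import numpy as np


def quantize_symmetric(x, num_bits, axis=None):
    """Symmetric uniform quantization: per-tensor if axis is None, else one scale per slice along axis."""
    qmax = 2 ** (num_bits - 1) - 1  # 7 for INT4, 127 for INT8
    amax = np.abs(x).max(axis=axis, keepdims=axis is not None)
    scale = np.where(amax > 0, amax / qmax, 1.0)
    q = np.clip(np.round(x / scale), -qmax, qmax).astype(np.int8)
    return q, scale


def w4a8_linear(x, w):
    """Simulate y = x @ w.T with INT4 per-output-channel weights and INT8 per-tensor activations."""
    qw, sw = quantize_symmetric(w, 4, axis=1)           # w: (out, in) -> sw: (out, 1)
    qx, sx = quantize_symmetric(x, 8)                   # sx: one scale for the whole tensor
    acc = qx.astype(np.int32) @ qw.astype(np.int32).T   # integer matmul, INT32 accumulation
    return acc * sx * sw.T                              # rescale the accumulator to real values


def weight_bytes(num_params, bits):
    """Storage for packed weights (hypothetical helper; ignores scale metadata)."""
    return num_params * bits / 8


rng = np.random.default_rng(0)
w = rng.normal(size=(64, 128))  # hypothetical layer weights
x = rng.normal(size=(8, 128))   # hypothetical activations
y_ref = x @ w.T
y_q = w4a8_linear(x, w)
print("relative error:", np.linalg.norm(y_q - y_ref) / np.linalg.norm(y_ref))
print("weight memory, W4 vs W8:", weight_bytes(w.size, 4) / weight_bytes(w.size, 8))
```

Per-channel weight scales are a common choice at 4 bits because a single per-tensor scale would let one large channel dominate the 15 available levels.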

- 11. Need for FP8: Is It Needed for ML Model Inference? Published in the Qualcomm Technologies “FP8” white paper.
  - Strong participation from many silicon vendors in driving FP8 engagements
  - Various E/M (exponent/mantissa) ratios to support dynamic range for data representation
  - FP8 is an appealing potential speed-up for the costly and time-intensive training procedures in deep learning
  - Need for inference (observations):
    - The hardware implementation of the FP8 format is somewhere between 50% and 180% less efficient than INT8 in terms of chip area and energy usage
    - Can we convert FP8 to INT8 with good accuracy?

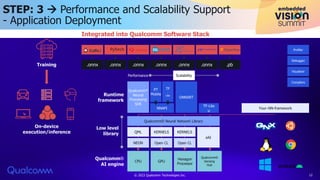
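The E/M trade-off mentioned above can be seen by enumerating every value of a small float format. This sketch assumes IEEE-754-style rules (bias 2^(E-1) - 1, subnormals, and the all-ones exponent reserved for inf/NaN). The OCP FP8 E4M3 variant reuses most of that top exponent and reaches 448 rather than the 240 computed here, so treat the printed numbers as illustrating the trade-off rather than any specific hardware.

```python
def fp_grid(exp_bits, man_bits):
    """All non-negative finite values of a 1-sign-bit float format with IEEE-754-style rules.

    Assumptions: exponent bias 2**(exp_bits - 1) - 1, subnormals when the exponent
    field is 0, and the all-ones exponent field reserved for inf/NaN.
    """
    bias = 2 ** (exp_bits - 1) - 1
    values = []
    for e in range(2 ** exp_bits - 1):  # skip the all-ones field (inf/NaN)
        for m in range(2 ** man_bits):
            frac = m / 2 ** man_bits
            if e == 0:
                values.append(frac * 2.0 ** (1 - bias))          # zero and subnormals
            else:
                values.append((1.0 + frac) * 2.0 ** (e - bias))  # normal numbers
    return sorted(values)


for name, (e, m) in {"E5M2": (5, 2), "E4M3": (4, 3), "E3M4": (3, 4)}.items():
    grid = fp_grid(e, m)
    smallest = min(v for v in grid if v > 0)
    print(f"{name}: max {max(grid)}, min positive {smallest}, "
          f"max/min-positive ratio {max(grid) / smallest:.0f}")

# For comparison, symmetric INT8 has 255 evenly spaced levels and a
# max/min-positive ratio of only 127, but uniform resolution across its range.
```

More exponent bits buy dynamic range at the cost of mantissa resolution, which is why the choice of E/M ratio, and whether INT8 with a well-chosen scale suffices, depends on the distribution of the tensor being quantized.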

- 12. STEP 3: Performance and Scalability Support (Application Deployment), Integrated into Qualcomm Software Stack. Diagram components:
  - Training: your NN framework, exporting models such as .onnx and .pb
  - Runtime frameworks: Qualcomm® Neural Processing SDK, ONNX Runtime (ONNXRT), PyTorch Mobile, TensorFlow Lite, NNAPI, TensorFlow Lite Micro (TF-Lite µ)
  - Low-level libraries: Qualcomm® Neural Network Library, QML, NEON kernels, OpenCL kernels, eAI
  - Qualcomm® AI Engine, on-device execution/inference: CPU, GPU, Hexagon Processor, Qualcomm® Sensing Hub
  - Tools: profiler, debugger, visualizer, compilers
  - Goals: performance and scalability

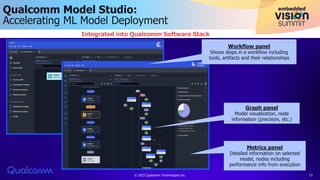

- 13. Qualcomm Model Studio: Accelerating ML Model Deployment. Integrated into Qualcomm Software Stack.
  - Workflow panel: shows the steps in a workflow, including tools, artifacts, and their relationships
  - Graph panel: model visualization and node information (precision, etc.)
  - Metrics panel: detailed information on the selected model and nodes, including performance information from execution

- 15. Recently Deployed Applications Using Qualcomm AI Stack
  - Industry’s first low-power gesture control plus context awareness for service recommendation, launched on Honor
  - Windows 11 features for video and audio AI, launched on the Lenovo ThinkPad X13s

- 16. Conclusions
  - AI applications are expanding beyond computer vision to linguistics, communication, commerce, and language understanding.
  - As AI applications evolve, they place continuing demands on support for new DL architectures and models.
  - Qualcomm AI Stack is expanding to support any developer and to drive innovation in performance, latency, QoS, and more, with a focus on:
    - Advanced quantization mechanics
    - Support for newer data types
    - Neural architecture support
    - Flexible runtime for performance and portability

- 17. Resources
  - Qualcomm® Mobile AI: “Mobile AI | On-Device AI | Qualcomm”
  - Qualcomm Technologies & Google NAS: “Qualcomm Technologies and Google Cloud Announce Collaboration on Neural Architecture Search for the Connected Intelligent Edge | Qualcomm”
  - Dr. Vinesh Sukumar, Senior Director, Product Management, AI/ML
  - 2023 Embedded Vision Summit, 4:15 pm: “Develop Next-Gen Camera Apps Using Snapdragon Computer Vision Technologies,” Judd Heape, VP of Product Management for Camera, Computer Vision and Video Technology, Qualcomm Technologies
  - Qualcomm Wireless Academy: Fundamentals of AI, available for free until October 2023

- 18. THANK YOU