Adaptive Thread Scheduling Techniques for Improving Scalability of Software Transactional Memory

- 1. Adaptive Thread Scheduling Techniques for Improving Scalability of Software Transactional Memory • Kinson Chan, King Tin Lam, Cho-Li Wang • Presenter: Kinson Chan • Date: 16 February 2010 • PDCN 2011, Innsbruck, Austria • Department of Computer Science, The University of Hong Kong

- 2. Outline • Motivation ‣ hardware trend and software transactional memory • Background ‣ performance scalability ‣ ratio-based concurrency control and its myth • Solution ‣ our rate-based heuristic, Probe • Evaluation ‣ performance comparison

- 3. Motivation What is the current computing hardware trend, and why is software transactional memory relevant?

- 6. Hardware trend: multicores • Multicore processors ‣ a.k.a. chip multiprocessing ‣ multiple cores on a processor die ‣ cores share a common cache ‣ faster data sharing among threads ‣ more running threads per cabinet • Chip multithreading ‣ e.g. hyperthreading, coolthreads ‣ more than one thread per core ‣ hides the data load latency [Figure: a typical modern processor, drawn with hardware threads 1 to 8, four L1 caches, four L2 caches and one L3 cache; callouts: multiple cores, multiple threads per core]

- 8. Now and future multicores

| Micro-architecture | Clock rate | Cores | Threads per core | Threads per package | Shared cache | Memory arrangement |
| --- | --- | --- | --- | --- | --- | --- |
| IBM Power 7 | ~ 3 GHz | 4 ~ 8 | 4 | 32 max | 4 MB shared L3 | NUMA |
| Sun Niagara2 | 1.2 ~ 1.6 GHz | 4 ~ 8 | 8 | 64 max | 4 MB shared L2 | NUMA |
| Intel Westmere | ~ 2 GHz | 4 ~ 8 | 2 | 16 max | 12 ~ 24 MB shared L3 | NUMA |
| Intel Harpertown | ~ 3 GHz | 2 x 2 | 2 | 8 | 2 x 6 MB shared L3 | UMA |
| AMD Bulldozer | ~ 2 GHz | 2 x 6 ~ 2 x 8 | 1 | 16 max | 8 MB shared L3 | NUMA |
| AMD Magny-Cours | ~ 3 GHz | 8 modules | 2 per module | 16 max | 8 MB shared L3 | NUMA |
| Intel Terascale | ~ 4 GHz | 80? | 1? | 80? | 80 x 2 KB dist. cache | NUCA |

How can we scale our programs to this many threads?

- 12. Multi-threading and synchronization • Coarse-grain locking ‣ easy / correct (few locks, predictable) ‣ difficult to scale (excessive mutual exclusion) • Fine-grain locking ‣ error-prone (deadlock, forgetting to lock, ...) ‣ scales better (allows more parallelism) • Do we have anything in between? ‣ easy / correct ‣ scales well

- 16. STM optimistic execution [Figure: timelines for Thread 1, Thread 2 and Thread 3, each going through begin, proceed, conflict detection and then commit (success) or retry] • Case 1: two transactions conflict, e.g. begin; x=x+4; y=y-4; commit; and begin; x=x+2; y=y-2; commit; ‣ roll back and retry one of them • Case 2: two transactions do not conflict, e.g. begin; x=x+4; y=y-4; commit; and begin; w=w+5; z=w; commit; ‣ they execute together, achieving better parallelism
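The two cases on this slide can be illustrated with a toy, single-threaded sketch of optimistic execution. It is not how TinySTM or any real STM is implemented: the names `Store`, `Txn` and `Conflict`, and the per-variable version check at commit, are assumptions made purely for illustration.

```python
class Conflict(Exception):
    """Raised when a transaction fails validation at commit time."""


class Store:
    """Shared memory: every variable carries a version bumped on each commit."""

    def __init__(self, **initial):
        self.values = dict(initial)
        self.versions = {name: 0 for name in initial}


class Txn:
    """A transaction buffers its writes and remembers the versions it read."""

    def __init__(self, store):
        self.store = store
        self.read_versions = {}
        self.writes = {}

    def read(self, name):
        if name in self.writes:  # read-your-own-write
            return self.writes[name]
        self.read_versions.setdefault(name, self.store.versions[name])
        return self.store.values[name]

    def write(self, name, value):
        self.writes[name] = value

    def commit(self):
        # Conflict detection: abort if anything we read has changed since.
        for name, seen in self.read_versions.items():
            if self.store.versions[name] != seen:
                raise Conflict(name)
        for name, value in self.writes.items():
            self.store.values[name] = value
            self.store.versions[name] += 1


def transfer(txn, a, b, amount):
    """a = a + amount; b = b - amount, inside the given transaction."""
    txn.write(a, txn.read(a) + amount)
    txn.write(b, txn.read(b) - amount)


store = Store(x=0, y=0, w=0, z=0)

# Case 1: both transactions touch x and y, so the later committer retries.
t1, t2 = Txn(store), Txn(store)
transfer(t1, "x", "y", 4)
transfer(t2, "x", "y", 2)
t1.commit()
retried = False
try:
    t2.commit()
except Conflict:
    retried = True
    t2 = Txn(store)  # roll back by discarding the buffered writes
    transfer(t2, "x", "y", 2)
    t2.commit()

# Case 2: disjoint variables, so both transactions commit without a retry.
t3, t4 = Txn(store), Txn(store)
transfer(t3, "x", "y", 4)
t4.write("w", t4.read("w") + 5)
t4.write("z", t4.read("w"))
t3.commit()
t4.commit()
```

A real STM would run the transactions on separate threads and would need atomic validation; the sketch only shows why the first pair forces a retry and the second pair does not.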

- 20. STM is easy • At the University of Texas at Austin, 237 students taking operating systems courses were instructed to program the same problem with coarse locks, fine-grained locks, monitors and transactions... [Figure: three scales placing TM, coarse-grained locking and fine-grained locking by development time (short to long), code complexity (simple to complex) and errors (less to more)] Source: C. J. Rossbach, O. S. Hofmann and E. Witchel, Is transactional programming actually easier, In Proceedings of the 15th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, pages 45–56, 2010.

- 23. STM is not a research toy • Research implementations: STM, SXM, OSTM, DSTM, ASTM, TL2, TinySTM, TLRW, SwissTM, NOrec, TML, RingTM, InvalTM, DeuceTM, D2STM • Industry (company: products; research) ‣ Sun: Dynamic STM library; DSTM, TL2, TLRW, Rock processor, ... ‣ Intel: Intel C++ STM compiler; McRT-STM, ... ‣ IBM: C/C++ for TM on AIX; STM extension on X10, ... ‣ Microsoft: STM.NET; STM on Haskell, ... ‣ AMD: ASF instruction set extension

- 24. Background What affects the transactional memory performance? How can we adjust concurrency for best performance?

- 29. Threads and performance • attempts vs thread count: more threads, more transactional attempts • commit probability vs thread count: more threads, smaller portion of transactions to commit • attempts × commit probability ∝ performance: a concave curve of performance against thread count, with an optimal point
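A small numerical sketch can make the concave curve concrete. The commit-probability model below, in which each additional concurrent thread independently spoils a transaction with probability `conflict_chance`, is an assumption chosen for illustration and does not come from the slides; only the product "attempts × commit probability" does.

```python
def commit_probability(threads, conflict_chance=0.15):
    """Assumed model: every other running thread independently causes
    an abort with probability `conflict_chance`."""
    return (1.0 - conflict_chance) ** (threads - 1)


def relative_throughput(threads, conflict_chance=0.15):
    """Attempts grow linearly with threads; commits = attempts x probability."""
    return threads * commit_probability(threads, conflict_chance)


# Relative throughput for 1 to 16 threads, and the thread count that maximises it.
curve = {n: relative_throughput(n) for n in range(1, 17)}
best = max(curve, key=curve.get)
```

With this particular assumption the curve rises up to six threads and falls afterwards, which is the shape sketched on the slide; a different conflict model would move the optimum.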

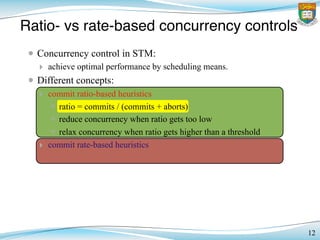

- 38. Ratio- vs rate-based concurrency controls • Concurrency control in STM: ‣ achieve optimal performance by scheduling means • Different concepts: ‣ commit ratio-based heuristics ✴ ratio = commits / (commits + aborts) ✴ reduce concurrency when ratio gets too low ✴ relax concurrency when ratio gets higher than a threshold ‣ commit rate-based heuristics ✴ rate = commits / time ‣ queuing after winner transactions ✴ kernel-level programming, conditional waiting...

- 39. Ratio-based solutions • Ansari et al.: ‣ introduce total commit [ratio] (TCR) ‣ increase / decrease threads by comparing TCR against a set-point (70%) • Yoo and Lee: ‣ introduce per-thread contention intensity (CI), the likelihood of a thread encountering contention ‣ a thread stalls to acquire a mutex when its CI goes above a value (70%) • Dolev et al.: ‣ activate hotspot detection when CI goes above a value (40%) ‣ a thread stalls to acquire a mutex when a hotspot is detected
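The set-point comparison described for the first approach can be sketched as follows. This is a simplified illustration, not the published algorithm of Ansari et al.: the one-thread step size, the thread bounds and the function names are all assumptions.

```python
def commit_ratio(commits, aborts):
    """ratio = commits / (commits + aborts); treated as 1.0 when idle."""
    total = commits + aborts
    return 1.0 if total == 0 else commits / total


def adjust_threads(threads, commits, aborts, set_point=0.70,
                   min_threads=1, max_threads=16):
    """Move the thread count one step, depending on which side of the
    set-point the measured commit ratio falls (assumed step size: 1)."""
    ratio = commit_ratio(commits, aborts)
    if ratio < set_point:
        return max(min_threads, threads - 1)  # too many aborts: serialize more
    if ratio > set_point:
        return min(max_threads, threads + 1)  # few aborts: allow more threads
    return threads
```

For example, `adjust_threads(8, 40, 60)` sees a 40% ratio and steps down to 7 threads, while `adjust_threads(8, 90, 10)` sees 90% and steps up to 9. Note that the decision never looks at how many commits happen per unit time.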

- 40. Myths of ratio-based heuristics • We want an application to finish faster ‣ i.e. more transactions committed per unit time ‣ (assumption: constant number of transactions) • High commit ratio ≠ high performance ‣ 1 thread + 100% commit ratio vs 4 threads + 40% commit ratio ‣ engine rotation ≠ vehicle velocity • Watching the commit ratio is an inexact science ‣ it happens to be a close estimate, though • Drawbacks ‣ over-serialization when the commit ratio is low ‣ over-relaxation when the commit ratio is high
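The "1 thread at 100% vs 4 threads at 40%" comparison can be worked through numerically. The sketch assumes that every thread attempts transactions at the same rate regardless of the thread count, and the figure of 1000 attempts per second is hypothetical; neither comes from the slides.

```python
def commits_per_second(threads, commit_ratio, attempts_per_thread_per_second):
    """Commit rate = threads x attempts per thread x fraction that commit."""
    return threads * attempts_per_thread_per_second * commit_ratio


ATTEMPTS = 1000  # hypothetical attempts per thread per second

serial = commits_per_second(1, 1.00, ATTEMPTS)    # 1 thread, 100% commit ratio
parallel = commits_per_second(4, 0.40, ATTEMPTS)  # 4 threads, 40% commit ratio
```

Under these assumptions the four-thread run commits about 1600 transactions per second against 1000 for the single thread, despite its much lower commit ratio.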

- 42. Challenges with rate-based solution • At any instant, we can only pick one thread count ‣ “what if” questions are not allowed at run time • What is high and what is low? ‣ commit ratio goes between 0% and 100% ‣ commit rate depends on transaction lengths • Changing patterns ‣ transaction nature may change along execution: ‣ getting longer / shorter ‣ getting more / less contention • Pre-defined bounds are not acceptable ‣ the optimal spot changes across the execution timeline

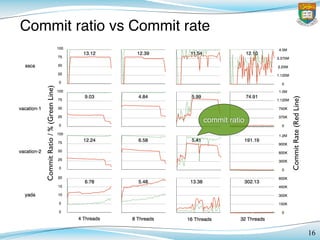

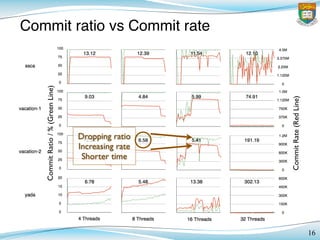

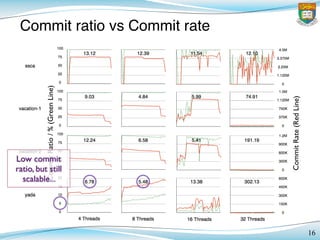

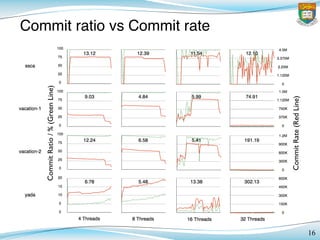

- 52. Commit ratio vs commit rate [Figure: commit ratio in % (green line) and commit rate (red line)] • More threads result in better performance • Excessive threads kill performance • Changing application natures • Excessive threads yield fluctuations • Dropping ratio, increasing rate, shorter time • Low commit ratio, but still scalable...

- 53. Solution Now we know ratio-based solutions are not right. What shall we do for a rate-based alternative?

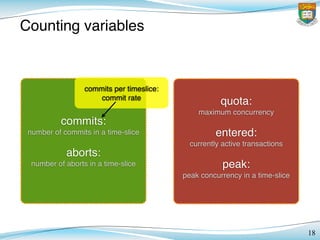

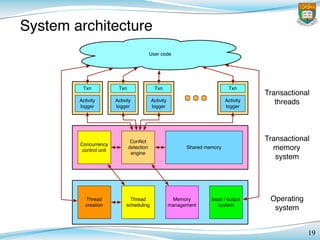

- 59. Counting variables • commits: number of commits in a time-slice ‣ commits per time-slice: commit rate • aborts: number of aborts in a time-slice ‣ commits and aborts together give the commit ratio • quota: maximum concurrency • entered: currently active transactions ‣ quota ≤ entered: no more new transactions; stall newcomers with pthread_sched(); • peak: peak concurrency in a time-slice ‣ quota ≥ peak: unused quota; reduce quota for a tight limit...
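The bookkeeping around these counters can be sketched as below. The class and method names are hypothetical, the code is single-threaded (a real implementation would need atomic updates), and resetting the counters at the end of each time-slice is an assumption rather than something the slide states.

```python
class ConcurrencyControl:
    """Per-time-slice counters, named after the variables on the slide."""

    def __init__(self, quota):
        self.quota = quota  # maximum concurrency
        self.entered = 0    # currently active transactions
        self.peak = 0       # peak concurrency in this time-slice
        self.commits = 0    # commits in this time-slice
        self.aborts = 0     # aborts in this time-slice

    def try_enter(self):
        """Admit a new transaction unless the quota is already used up."""
        if self.quota <= self.entered:
            return False    # the caller should stall and try again later
        self.entered += 1
        self.peak = max(self.peak, self.entered)
        return True

    def leave(self, committed):
        """Record the outcome of a transaction that has finished."""
        self.entered -= 1
        if committed:
            self.commits += 1
        else:
            self.aborts += 1

    def end_time_slice(self):
        """Return (commit rate, commit ratio), tighten quota, reset counters."""
        attempts = self.commits + self.aborts
        rate = self.commits  # commits per time-slice
        ratio = self.commits / attempts if attempts else 1.0
        if self.peak < self.quota:
            self.quota = max(1, self.peak)  # unused quota: tighten the limit
        self.commits = self.aborts = 0
        self.peak = self.entered
        return rate, ratio


cc = ConcurrencyControl(quota=4)
admitted = [cc.try_enter() for _ in range(3)]  # three transactions start
cc.leave(committed=True)
cc.leave(committed=True)
cc.leave(committed=False)
rate, ratio = cc.end_time_slice()
```

In this run only three of the four slots were ever used, so the quota is tightened from 4 to 3 at the end of the time-slice.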

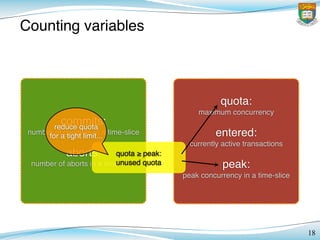

- 64. System architecture [Figure: layered architecture; layers: user code, transactional threads, transactional memory system, operating system; components: four transactions (Txn), each with an activity logger; concurrency control unit; conflict detection engine; shared memory; operating system services: thread creation, thread scheduling, memory management, input / output system] • Transactions execute as normal, with conflict detection • The concurrency control unit is added as a hook, monitoring the performance • The scheduler is invoked to stall some new transactions, if appropriate

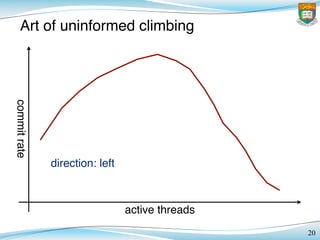

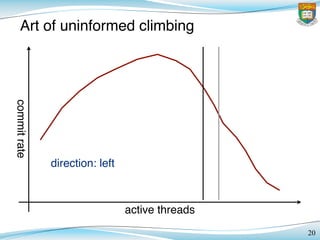

- 80. Art of uninformed climbing [Figure: commit rate plotted against active threads, with a probe stepping along the curve] • direction: left; the probe keeps stepping left • decreasing rate! change direction! • direction: right; the probe keeps stepping right • decreasing rate! change direction! • direction: left again • resultant probing region: the range covered between the two turning points
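The climbing behaviour in this animation can be sketched as a one-step hill climber. This is an illustration under stated assumptions, not the authors' Probe implementation: the function names, the step size of one thread, the bounds, and the convention that "left" means a lower quota (-1) are all hypothetical, and `measured_rate` is a made-up concave curve standing in for real measurements.

```python
def probe_step(quota, direction, previous_rate, current_rate,
               min_quota=1, max_quota=16):
    """One probing step: keep going while the commit rate does not drop,
    otherwise turn around. `direction` is -1 (left) or +1 (right)."""
    if current_rate < previous_rate:
        direction = -direction  # decreasing rate: change direction
    new_quota = quota + direction
    if not min_quota <= new_quota <= max_quota:
        direction = -direction  # bounce off the limits
        new_quota = quota + direction
    return new_quota, direction


def measured_rate(quota):
    """Hypothetical concave commit-rate curve peaking at 6 active threads."""
    return 100 - (quota - 6) ** 2


quota, direction = 12, -1  # start with a high quota, probing leftwards
previous_rate = 0
visited = []
for _ in range(12):  # twelve time-slices
    visited.append(quota)
    rate = measured_rate(quota)
    quota, direction = probe_step(quota, direction, previous_rate, rate)
    previous_rate = rate
```

On this curve the probe walks left from 12 to 5, turns around when the rate drops, and then keeps oscillating between 5 and 7, which corresponds to the probing region around the peak.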

- 81. Performance Evaluation How does our rate-based solution compare with the ratio-based ones?

- 82. Evaluation platform • Dell PowerEdge M610 Blade Server ‣ 2x Intel “Nehalem” Xeon E5540 2.53 GHz (8 cores, 16 threads) ‣ ECC DDR3-1066 36 GB main memory • STAMP benchmark ‣ original from Stanford University: http://stamp.stanford.edu/ ‣ modified version for TinySTM: http://www.tinystm.org/ • TinySTM 0.9.5 ‣ open-source version: http://www.tinystm.org/ • Yoo’s and Shrink concurrency control ‣ from EPFL Distributed Programming Laboratory: http://lpd.epfl.ch/site/research/tmeval/

- 83. Probing in effect vs other heuristics 23 throttle2 probe2 basic yoo shrink 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K 4 3 2 0 1 16 12 8 0 4 CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ TransactionsperSecond StalledThreads(BlueDashedLine) Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications found Probe more favourable than Throttle, as well r concurrency control policies. We have also found s are sensitive to the cache sharing, and refined our ion accordingly. we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pages 289–300, 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free synchroniza- tion: Double ended queues as an example. In Proceedings of the 23rd International Conference on Distributed Computing Systems, pages throttle2 probe2 basic yoo shrink kmeans-2 16 threads yada 8 threads 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications We have found Probe more favourable than Throttle, as well as two other concurrency control policies. We have also found our solutions are sensitive to the cache sharing, and refined our implementation accordingly. In future we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pa 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free tion: Double ended queues as an example. In Proceedin International Conference on Distributed Computing S dolev

- 84. Probing in effect vs other heuristics 23 throttle2 probe2 basic yoo shrink 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K 4 3 2 0 1 16 12 8 0 4 CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ TransactionsperSecond StalledThreads(BlueDashedLine) Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications found Probe more favourable than Throttle, as well r concurrency control policies. We have also found s are sensitive to the cache sharing, and refined our ion accordingly. we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pages 289–300, 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free synchroniza- tion: Double ended queues as an example. In Proceedings of the 23rd International Conference on Distributed Computing Systems, pages throttle2 probe2 basic yoo shrink kmeans-2 16 threads yada 8 threads 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications We have found Probe more favourable than Throttle, as well as two other concurrency control policies. We have also found our solutions are sensitive to the cache sharing, and refined our implementation accordingly. In future we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pa 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free tion: Double ended queues as an example. In Proceedin International Conference on Distributed Computing S commit ratio dolev

- 85. Probing in effect vs other heuristics 23 throttle2 probe2 basic yoo shrink 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K 4 3 2 0 1 16 12 8 0 4 CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ TransactionsperSecond StalledThreads(BlueDashedLine) Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications found Probe more favourable than Throttle, as well r concurrency control policies. We have also found s are sensitive to the cache sharing, and refined our ion accordingly. we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pages 289–300, 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free synchroniza- tion: Double ended queues as an example. In Proceedings of the 23rd International Conference on Distributed Computing Systems, pages throttle2 probe2 basic yoo shrink kmeans-2 16 threads yada 8 threads 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications We have found Probe more favourable than Throttle, as well as two other concurrency control policies. We have also found our solutions are sensitive to the cache sharing, and refined our implementation accordingly. In future we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pa 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free tion: Double ended queues as an example. In Proceedin International Conference on Distributed Computing S commit rate dolev

- 86. Probing in effect vs other heuristics 23 throttle2 probe2 basic yoo shrink 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K 4 3 2 0 1 16 12 8 0 4 CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ TransactionsperSecond StalledThreads(BlueDashedLine) Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications found Probe more favourable than Throttle, as well r concurrency control policies. We have also found s are sensitive to the cache sharing, and refined our ion accordingly. we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pages 289–300, 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free synchroniza- tion: Double ended queues as an example. In Proceedings of the 23rd International Conference on Distributed Computing Systems, pages throttle2 probe2 basic yoo shrink kmeans-2 16 threads yada 8 threads 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications We have found Probe more favourable than Throttle, as well as two other concurrency control policies. We have also found our solutions are sensitive to the cache sharing, and refined our implementation accordingly. In future we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pa 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free tion: Double ended queues as an example. In Proceedin International Conference on Distributed Computing S threads stalled dolev

- 87. Probing in effect vs other heuristics 23 throttle2 probe2 basic yoo shrink 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K 4 3 2 0 1 16 12 8 0 4 CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ TransactionsperSecond StalledThreads(BlueDashedLine) Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications found Probe more favourable than Throttle, as well r concurrency control policies. We have also found s are sensitive to the cache sharing, and refined our ion accordingly. we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pages 289–300, 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free synchroniza- tion: Double ended queues as an example. In Proceedings of the 23rd International Conference on Distributed Computing Systems, pages throttle2 probe2 basic yoo shrink kmeans-2 16 threads yada 8 threads 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications We have found Probe more favourable than Throttle, as well as two other concurrency control policies. We have also found our solutions are sensitive to the cache sharing, and refined our implementation accordingly. In future we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pa 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free tion: Double ended queues as an example. In Proceedin International Conference on Distributed Computing S low commit ratio Original TinySTM dolev

- 88. Probing in effect vs other heuristics 23 throttle2 probe2 basic yoo shrink 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K 4 3 2 0 1 16 12 8 0 4 CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ TransactionsperSecond StalledThreads(BlueDashedLine) Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications found Probe more favourable than Throttle, as well r concurrency control policies. We have also found s are sensitive to the cache sharing, and refined our ion accordingly. we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pages 289–300, 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free synchroniza- tion: Double ended queues as an example. In Proceedings of the 23rd International Conference on Distributed Computing Systems, pages throttle2 probe2 basic yoo shrink kmeans-2 16 threads yada 8 threads 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications We have found Probe more favourable than Throttle, as well as two other concurrency control policies. We have also found our solutions are sensitive to the cache sharing, and refined our implementation accordingly. In future we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pa 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free tion: Double ended queues as an example. In Proceedin International Conference on Distributed Computing S mild adjustment mild adjustment Probe: rate-based concurrency control dolev

- 89. Probing in effect vs other heuristics 23 throttle2 probe2 basic yoo shrink 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K 4 3 2 0 1 16 12 8 0 4 CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ TransactionsperSecond StalledThreads(BlueDashedLine) Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications found Probe more favourable than Throttle, as well r concurrency control policies. We have also found s are sensitive to the cache sharing, and refined our ion accordingly. we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pages 289–300, 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free synchroniza- tion: Double ended queues as an example. In Proceedings of the 23rd International Conference on Distributed Computing Systems, pages throttle2 probe2 basic yoo shrink kmeans-2 16 threads yada 8 threads 100 75 50 0 25 100 75 50 0 25 680K 510K 340K 0 170K 1.6M 1.2M 800K 0 400K CommitRt(GreenDottedLine)/% CommitRate(RedSolidLine)/ Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications We have found Probe more favourable than Throttle, as well as two other concurrency control policies. We have also found our solutions are sensitive to the cache sharing, and refined our implementation accordingly. In future we may consider new adaptive scheduling policies International Symposium on Computer Architecture, pa 1993. [10] M. Herlihy, V. Luchangco, and M. Moir. Obstruction free tion: Double ended queues as an example. In Proceedin International Conference on Distributed Computing S higher commit rate higher commit rate Probe: rate-based concurrency control dolev

- 90. Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callouts: "aim: high ratio" (Yoo’s and Dolev’s ratio-based concurrency control).

- 91. Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callouts: "large adjustment" (Yoo’s and Dolev’s ratio-based concurrency control).

- 92. Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callouts: "even lower rate..." (Yoo’s and Dolev’s ratio-based concurrency control).

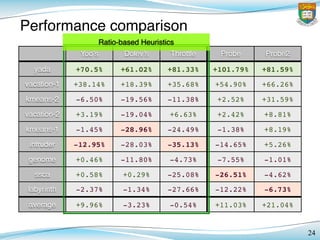

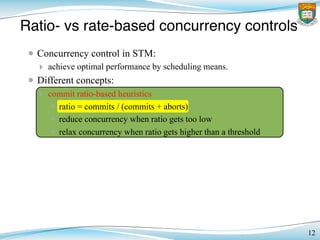

- 94. Performance comparison

  | Benchmark | Yoo’s | Dolev’s | Throttle | Probe | Probe2 |
  |---|---|---|---|---|---|
  | yada | +70.5% | +61.02% | +81.33% | +101.79% | +81.59% |
  | vacation-1 | +38.14% | +18.39% | +35.68% | +54.90% | +66.26% |
  | kmeans-2 | -6.50% | -19.56% | -11.38% | +2.52% | +31.59% |
  | vacation-2 | +3.19% | -19.04% | +6.63% | +2.42% | +8.81% |
  | kmeans-1 | -1.45% | -28.96% | -24.49% | -1.38% | +8.19% |
  | intruder | -12.95% | -28.03% | -35.13% | -14.65% | +5.26% |
  | genome | +0.46% | -11.80% | -4.73% | -7.55% | -1.01% |
  | ssca | +0.58% | +0.29% | -25.08% | -26.51% | -4.62% |
  | labyrinth | -2.37% | -1.34% | -27.66% | -12.22% | -6.73% |
  | average | +9.96% | -3.23% | -0.54% | +11.03% | +21.04% |
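The slide does not say how the "average" row is obtained. A minimal sketch, assuming it is the unweighted arithmetic mean of the nine benchmark rows, recomputes it from the figures on the slide; the names `ROWS`, `REPORTED_AVERAGE` and `column_means` are illustrative only. Under that assumption the recomputed means agree with the reported row to within about 0.01 percentage points, which is consistent with the slide averaging unrounded values.

```python
# Per-benchmark figures (in %) transcribed from the performance-comparison slide.
COLUMNS = ["Yoo's", "Dolev's", "Throttle", "Probe", "Probe2"]
ROWS = {
    "yada":       [70.5, 61.02, 81.33, 101.79, 81.59],
    "vacation-1": [38.14, 18.39, 35.68, 54.90, 66.26],
    "kmeans-2":   [-6.50, -19.56, -11.38, 2.52, 31.59],
    "vacation-2": [3.19, -19.04, 6.63, 2.42, 8.81],
    "kmeans-1":   [-1.45, -28.96, -24.49, -1.38, 8.19],
    "intruder":   [-12.95, -28.03, -35.13, -14.65, 5.26],
    "genome":     [0.46, -11.80, -4.73, -7.55, -1.01],
    "ssca":       [0.58, 0.29, -25.08, -26.51, -4.62],
    "labyrinth":  [-2.37, -1.34, -27.66, -12.22, -6.73],
}
# The "average" row as printed on the slide.
REPORTED_AVERAGE = [9.96, -3.23, -0.54, 11.03, 21.04]


def column_means(rows):
    """Unweighted arithmetic mean of each column (assumed averaging method)."""
    n = len(rows)
    return [sum(column) / n for column in zip(*rows.values())]


means = column_means(ROWS)
for name, mean, reported in zip(COLUMNS, means, REPORTED_AVERAGE):
    print(f"{name:>8}: recomputed {mean:+.2f}%, reported {reported:+.2f}%")
```

Read this way, Probe2 has the highest average of the five columns, and Probe is the next highest.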

- 95. Performance comparison (same table as slide 94). Callout: Ratio-based Heuristics.

- 96. Performance comparison (same table as slide 94). Callouts: Ratio-based Heuristics; Naive: scales for 25% ~ 75% of ratio.

- 97. Performance comparison (same table as slide 94). Callout: Rate-based Heuristics.

- 98. Performance comparison (same table as slide 94). Callouts: Rate-based Heuristics; Better number counting strategy.

- 100. Performance comparison (same table as slide 94). Callout: over-relaxation.

- 101. Performance comparison (same table as slide 94). Callout: over-reaction.

- 103. Conclusions
  - The multicore trend urges us to write parallel programs.
  - Software transactional memory is part of future computation:
    - easier to program, fewer errors, neater code
    - but it needs concurrency control for the best performance
  - Ratio-based vs rate-based concurrency heuristics:
    - ratio-based heuristics are inexact approximations
    - watching the ratio alone causes over-reaction or over-relaxation
  - Probe, the rate-based concurrency heuristic, outperforms the other heuristics on average.
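The contrast between the two metrics can be illustrated with a minimal sketch. It assumes the usual definitions, commit ratio = commits / (commits + aborts) and commit rate = commits per second; the `Sample` class and both sets of counters are hypothetical, made up only to show how a higher ratio can coexist with a lower rate. It is not the authors' Probe implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """Counters for one measurement window (hypothetical structure)."""
    commits: int
    aborts: int
    seconds: float

    @property
    def commit_ratio(self) -> float:
        # Fraction of transaction attempts that committed.
        attempts = self.commits + self.aborts
        return self.commits / attempts if attempts else 0.0

    @property
    def commit_rate(self) -> float:
        # Committed transactions per second, i.e. useful throughput.
        return self.commits / self.seconds if self.seconds > 0 else 0.0


# Made-up numbers: many running threads abort often but still commit a lot;
# heavily throttled threads rarely abort but commit less in total.
all_threads = Sample(commits=1_200_000, aborts=1_800_000, seconds=1.0)
throttled = Sample(commits=600_000, aborts=60_000, seconds=1.0)

for label, s in (("all threads", all_threads), ("throttled", throttled)):
    print(f"{label:>11}: ratio {s.commit_ratio:.0%}, rate {s.commit_rate:,.0f} tx/s")
```

A controller that watches only the ratio would prefer the throttled configuration here, even though it commits half as many transactions per second. That is the kind of over-reaction the conclusions warn about.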

- 105. Thank You!
  - Contact me: [email protected]
  - HKU CS Systems Research Group: http://www.srg.cs.hku.hk/
  - Dr. Cho-Li Wang’s webpage: http://www.cs.hku.hk/~clwang/

![Ratio-based solutions. Ansari et al.: introduce the total commit ratio (TCR) and increase or decrease the number of threads by comparing TCR with a set-point (70%). Yoo and Lee: introduce per-thread contention intensity (CI), the likelihood that a thread encounters contention; a thread stalls to acquire a mutex when its CI rises above a threshold (70%). Dolev et al.: activate hotspot detection when CI rises above a threshold (40%); a thread stalls to acquire a mutex when a hotspot is detected.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-39-320.jpg)
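The two ratio-based ideas on this slide can be sketched roughly as follows. Only the 70% thresholds come from the slide. The one-thread step size in `tcr_adjust`, the exponential moving average in `ContentionIntensity`, its `alpha` weight, and all names are assumptions made for illustration; they are not taken from the cited schedulers' code.

```python
def tcr_adjust(active_threads, commits, aborts, max_threads, set_point=0.70):
    """One set-point step on the total commit ratio (TCR).

    Assumed policy: drop one thread when TCR is below the set-point,
    add one when it is above, and never leave the range [1, max_threads].
    """
    attempts = commits + aborts
    if attempts == 0:
        return active_threads  # nothing measured, keep the current level
    tcr = commits / attempts
    if tcr < set_point:
        return max(1, active_threads - 1)
    if tcr > set_point:
        return min(max_threads, active_threads + 1)
    return active_threads


class ContentionIntensity:
    """Per-thread contention intensity (CI) kept as a moving average.

    Assumption: CI is an exponential moving average of abort events,
    weighted by `alpha`; the slide only gives the 70% threshold.
    """

    def __init__(self, alpha=0.5, threshold=0.70):
        self.alpha = alpha
        self.threshold = threshold
        self.value = 0.0

    def record(self, aborted):
        # An abort pushes CI towards 1, a commit pulls it towards 0.
        outcome = 1.0 if aborted else 0.0
        self.value = self.alpha * self.value + (1.0 - self.alpha) * outcome

    def must_serialise(self):
        # Above the threshold, the thread would queue on a mutex before
        # starting its next transaction.
        return self.value > self.threshold


ci = ContentionIntensity()
ci.record(aborted=True)
ci.record(aborted=True)
print(tcr_adjust(8, commits=50, aborts=50, max_threads=16), ci.must_serialise())
```

Both controllers react to a ratio of outcomes rather than to throughput, which is the property the rest of the talk contrasts with the rate-based approach.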

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads); policy labels: throttle2, probe2, basic, yoo, shrink, dolev.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-83-320.jpg)

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callout: commit ratio.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-84-320.jpg)

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callout: commit rate.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-85-320.jpg)

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callout: threads stalled.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-86-320.jpg)

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callouts: low commit ratio; Original TinySTM.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-87-320.jpg)

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callouts: mild adjustment; Probe: rate-based concurrency control.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-88-320.jpg)

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callouts: higher commit rate; Probe: rate-based concurrency control.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-89-320.jpg)

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callouts: aim: high ratio; Yoo’s and Dolev’s ratio-based concurrency control.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-90-320.jpg)

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callouts: large adjustment; Yoo’s and Dolev’s ratio-based concurrency control.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-91-320.jpg)

![Probing in effect vs other heuristics. Figure 3: commit ratio (%), commit rate (transactions per second) and number of stalled threads for kmeans-2 (16 threads) and yada (8 threads). Callouts: even lower rate...; Yoo’s and Dolev’s ratio-based concurrency control.](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-92-320.jpg)

![Probing in effect vs other heuristics

Figure 3. Commit Ratio, Commit Rate and Number of Stalled Threads of Some TM Applications

Panels: kmeans-2 (16 threads), yada (8 threads).

Axes: commit ratio (green dotted line, 0 to 100%), commit rate (red solid line, transactions per second), stalled threads (blue dashed line).

Series: throttle2, probe2, basic, yoo, shrink,

dolev](https://image.slidesharecdn.com/pdcn2011-slideshare-150408123908-conversion-gate01/85/Adaptive-Thread-Scheduling-Techniques-for-Improving-Scalability-of-Software-Transactional-Memory-93-320.jpg)