Advances in Accelerator-based CFD Simulation

- 1. © 2014 ANSYS, Inc. September 25, 2014. Advances in Accelerator-based CFD Simulation. “New Trends in CFD II”, DANSIS seminar, Sep 24th, 2014, Denmark. Wim Slagter, PhD, ANSYS, Inc.

- 2. Overview: Introduction; Why Accelerator-based CFD; Status of Current Accelerator-based Solver Support; Guidelines; Licensing; Next Steps and Future Directions.

- 3. ANSYS, Inc. FOCUSED: Engineering simulation is all we do. Leading product technologies in all physics areas; largest development team focused on simulation. CAPABLE: 2,600+ employees, 75 locations, 40 countries. TRUSTED: 96 of the top 100 FORTUNE 500 industrials; ISO 9001 and NQA-1 certified. PROVEN: Recognized as one of the world’s MOST INNOVATIVE AND FASTEST-GROWING COMPANIES.* INDEPENDENT: Long-term financial stability; CAD agnostic. (*BusinessWeek, FORTUNE)

- 4. Why HPC? Impact product design, enable large models, and allow parametric studies: turbulence, combustion, particle tracking; assemblies, CAD-to-mesh, capture fidelity; multiple design ideas, optimize the design, ensure product integrity. Courtesy of Red Bull Racing.

- 5. Scale-up of HPC: A Software Development Imperative. Today’s multi-core / many-core hardware evolution makes HPC a software development imperative. Clock speed: levelling off. Core counts: growing (Intel & AMD) and exploding (NVIDIA GPUs). Future performance depends on highly scalable parallel software.

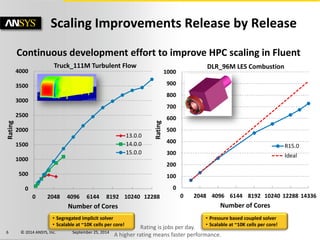

- 6. Scaling Improvements Release by Release. Continuous development effort to improve HPC scaling in Fluent. [Chart: Truck_111M, turbulent flow; rating vs. number of cores (0 to 12,288) for releases 13.0.0, 14.0.0 and 15.0.0. Segregated implicit solver, scalable at ~10K cells per core!] Rating is jobs per day; a higher rating means faster performance. [Chart: DLR_96M, LES combustion; rating vs. number of cores (0 to 14,336), R15.0 vs. ideal. Pressure-based coupled solver, scalable at ~10K cells per core!]
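
The two quantities on this slide, rating in jobs per day and cells per core, reduce to simple arithmetic. The sketch below assumes the rating is 86,400 seconds divided by the wall-clock seconds of one job, and infers the cell counts from the case names (Truck_111M ~ 111 million cells, DLR_96M ~ 96 million cells); the helper names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def rating(wall_clock_seconds: float) -> float:
    """Jobs per day, assuming rating = seconds in a day / wall-clock seconds per job."""
    return SECONDS_PER_DAY / wall_clock_seconds


def cells_per_core(cells: int, cores: int) -> float:
    """Average number of mesh cells assigned to each core."""
    return cells / cores


def max_cores_at(cells: int, cells_per_core_floor: int = 10_000) -> int:
    """Largest core count that still leaves at least `cells_per_core_floor` cells per core."""
    return cells // cells_per_core_floor


if __name__ == "__main__":
    # Cell counts inferred from the case names (assumption).
    for name, cells in [("Truck_111M", 111_000_000), ("DLR_96M", 96_000_000)]:
        print(f"{name}: ~{max_cores_at(cells):,} cores at 10K cells/core; "
              f"{cells_per_core(cells, 12_288):,.0f} cells/core on 12,288 cores")
    print(f"{rating(864):.0f} jobs/day for an 864 s job")
```

At ~10K cells per core, a 111-million-cell model can use roughly 11,100 cores, which is why the truck chart's core axis ends near 12,288.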

- 7. Scaling Improvements Release by Release (Truck_111M, turbulent flow, segregated implicit solver, releases 13.0.0 to 15.0.0): Scaling improvements at 10,000+ cores yield benefits for smaller jobs!

- 8. Extreme Scaling: ANSYS Fluent 15.0. Source: “Industrial HPC Applications Scalability and Challenges”, Seid Korić, ISC 2014.

- 9. Industry’s HPC Challenges Go Even Further… Sources: “Exascale Challenges of European Academic & Industrial Applications”, S. Requena, ISC’14, 22-26 June 2014, Leipzig; “NASA Vision 2030 CFD Code – Final Technical Review”, Contract # NNL08AA16B, November 14, 2013, NASA Langley Research Center; “Computational Science and Engineering Grand Challenges in Rolls-Royce”, Leigh Lapworth, Networkshop42, 1-3 April 2014, University of Leeds.

- 10. HPC Challenges & Emerging Technologies. Traditional CPU technology may no longer be capable of scaling performance sufficiently to address industry’s HPC demand. HPC hardware challenges include: • Power consumption (limited) • Energy efficiency (“Green Computing”) • Cooling requirements (the lower the better). Hence the evolution of: • Quantum or bio-computing… • Hardware accelerators: – Graphics Processing Units (GPUs) from NVIDIA® and AMD® – Intel® Xeon Phi™ coprocessors (previously called Intel MIC)

- 11. Motivations for Accelerator-Based CFD • Accelerators are getting more powerful, e.g. – the number of GPU cores is increasing – GPU memory is getting large enough to fit a large CFD problem – Intel Knights Landing is coming to market (addressing memory and I/O performance challenges) • Problems do exist in CFD that can use large computing power – the coupled solver spends 60-70% of its time solving the linear equation system – stiff chemistry problems in species transport can spend 90-95% of their time in the ODE solver – radiation models, depending on their complexity, can consume the majority of the processing time • Supercomputing centers have been driving adoption of new accelerators for Top500-class machines (courtesy of Erich Strohmaier) – delivering the highest performance per watt • The HPC industry is moving toward heterogeneous computing systems, where CPUs and accelerators work together to perform general-purpose computing tasks. All good reasons to explore accelerators for CFD.
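
The runtime percentages matter because an accelerator that speeds up only one phase of a solve leaves the rest untouched. A minimal sketch using Amdahl's law (a standard model, not one the slides name) shows the ceiling each quoted fraction implies; the function names and the 5x kernel speedup are hypothetical.

```python
def amdahl_speedup(fraction: float, part_speedup: float) -> float:
    """Overall speedup when only `fraction` of the runtime is accelerated by `part_speedup`."""
    return 1.0 / ((1.0 - fraction) + fraction / part_speedup)


def amdahl_ceiling(fraction: float) -> float:
    """Limit of amdahl_speedup as part_speedup grows without bound."""
    return 1.0 / (1.0 - fraction)


# Runtime fractions quoted on the slide.
cases = {
    "coupled solver, linear system (60%)": 0.60,
    "coupled solver, linear system (70%)": 0.70,
    "stiff chemistry, ODE solver (90%)": 0.90,
    "stiff chemistry, ODE solver (95%)": 0.95,
}

for label, p in cases.items():
    # The 5x kernel speedup is an illustrative assumption, not a measured figure.
    print(f"{label}: ceiling {amdahl_ceiling(p):.1f}x, "
          f"with a 5x faster kernel {amdahl_speedup(p, 5.0):.1f}x")
```

Even an infinitely fast linear solver caps the coupled-solver case at 2.5x to 3.3x, whereas the 90-95% chemistry case leaves room for 10x to 20x.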

- 12. Evolution of GPU-Accelerated Solver Support: Full Product Support in Current Release, ANSYS Fluent 15.0 • GPU-based model: radiation heat transfer using OptiX (product in R14.5) • GPU-based solver: coupled algebraic multigrid (AMG) PBNS linear solver • Operating systems: both Linux and Win64, for workstations and servers • Parallel methods: shared memory in R14.5; distributed memory in R15.0 • Supported GPUs: Tesla K20, Tesla K40, and Quadro 6000 • Multi-GPU support: single GPU in R14.5; full multi-GPU, multi-node in R15.0 • Model suitability: 3M cells or less in R14.5; unlimited in R15.0

- 13. Faster Coupled Solver with GPUs - ANSYS Fluent 15.0. Water jacket model: unsteady RANS, fluid: water, internal flow, coupled PBNS, DP. CPU: Intel Xeon E5-2680, 8 cores; GPU: 2 x Tesla K40. Times are for 20 time steps, in seconds (lower is better): AMG solver time 4557 s (CPU only) vs. 775 s (CPU + GPU), 5.9x faster; total solution time 6391 s (CPU only) vs. 2520 s (CPU + GPU), 2.5x faster.
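
The four timings on this slide are enough to check how the 5.9x AMG speedup turns into a 2.5x overall speedup. The sketch below uses only the slide's numbers; the variable names are ours, and the "predicted" value is an Amdahl-style estimate that assumes only the AMG portion changed.

```python
# Timings from the water jacket slide (seconds for 20 time steps).
cpu_amg, gpu_amg = 4557.0, 775.0        # AMG linear-solver time
cpu_total, gpu_total = 6391.0, 2520.0   # total solution time

amg_speedup = cpu_amg / gpu_amg         # speedup of the accelerated portion
total_speedup = cpu_total / gpu_total   # end-to-end speedup
amg_fraction = cpu_amg / cpu_total      # share of CPU-only runtime spent in AMG

# Time spent outside the AMG solver in each run.
non_amg_cpu = cpu_total - cpu_amg
non_amg_gpu = gpu_total - gpu_amg

# Amdahl-style prediction if only the AMG portion had changed (assumption).
predicted = 1.0 / ((1.0 - amg_fraction) + amg_fraction / amg_speedup)

print(f"AMG speedup {amg_speedup:.1f}x, total speedup {total_speedup:.1f}x")
print(f"AMG share of CPU-only time: {amg_fraction:.0%}")
print(f"non-AMG time: {non_amg_cpu:.0f} s -> {non_amg_gpu:.0f} s")
print(f"predicted total speedup: {predicted:.2f}x")
```

AMG accounts for about 71% of the CPU-only run, consistent with the 60-70% range quoted for coupled solvers. The measured total slightly exceeds the prediction because the non-AMG time also fell, from 1834 s to 1745 s.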

- 14. GPU Value Proposition - ANSYS Fluent 15.0. Truck model: external aerodynamics, 14 million cells, steady, k-ε turbulence, coupled PBNS, DP; Intel Xeon E5-2680 (64 CPU cores on 8 sockets), 8 Tesla K40 GPUs. Simulation productivity with an HPC Workgroup 64 license (higher is better): 64 CPU cores give 16 jobs/day; 56 CPU cores + 8 Tesla K40 give 25 jobs/day. Relative to the CPU-only solution (100% cost, 100% productivity), adding GPUs costs an additional 25% and yields 56% additional productivity.

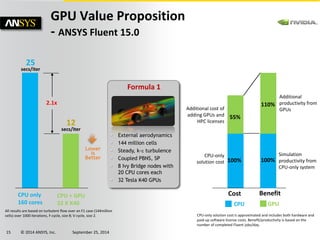
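
The cost and benefit bars can be combined into a single "jobs per unit cost" figure. This is a minimal sketch using the truck-model numbers (16 vs. 25 jobs/day, +25% cost); the metric and the function name are our own framing, not ANSYS's.

```python
def value_summary(base_jobs_per_day: float, accel_jobs_per_day: float,
                  extra_cost_fraction: float) -> tuple[float, float, float]:
    """Return (relative productivity, relative cost, productivity per unit cost)."""
    productivity = accel_jobs_per_day / base_jobs_per_day
    cost = 1.0 + extra_cost_fraction
    return productivity, cost, productivity / cost


# Truck model figures from the slide: 16 vs. 25 jobs/day, +25% cost for the GPUs.
productivity, cost, value = value_summary(16, 25, 0.25)
print(f"productivity {productivity:.0%}, cost {cost:.0%}, jobs per unit cost {value:.2f}x")
```

The GPU configuration delivers about 156% of the productivity for 125% of the cost, i.e. roughly 25% more completed jobs per unit of spend. Note that 56 CPU cores plus 8 GPUs add up to the 64 tasks of the HPC Workgroup 64 license.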

- 15. GPU Value Proposition - ANSYS Fluent 15.0. Formula 1 external aerodynamics: 144 million cells, steady, k-ε turbulence, coupled PBNS, SP; 8 Ivy Bridge nodes with 20 CPU cores each; 32 Tesla K40 GPUs. Time per iteration (lower is better): CPU only (160 cores) 25 s/iter; CPU + GPU (32 x K40) 12 s/iter, a 2.1x speedup. Relative to the CPU-only solution (100% cost, 100% productivity), adding GPUs and HPC licenses costs an additional 55% and yields 110% additional productivity. All results are based on turbulent flow over an F1 case (144 million cells) over 1000 iterations, with F-cycle, size 8 and V-cycle, size 2. CPU-only solution cost is approximated and includes both hardware and paid-up software license costs. Benefit/productivity is based on the number of completed Fluent jobs/day.
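
Seconds per iteration translate into jobs per day once a job length is fixed. The sketch below assumes each job is exactly the 1000 iterations quoted for the F1 case, with no setup or I/O time, and treats the +55% cost as given on the slide; the function name is hypothetical.

```python
SECONDS_PER_DAY = 86_400
ITERATIONS = 1_000  # iterations per job, as stated for the F1 case


def jobs_per_day(seconds_per_iteration: float, iterations: int = ITERATIONS) -> float:
    """Completed jobs per day if each job runs `iterations` iterations back to back."""
    return SECONDS_PER_DAY / (seconds_per_iteration * iterations)


cpu_jobs = jobs_per_day(25.0)  # 160 CPU cores
gpu_jobs = jobs_per_day(12.0)  # CPU + 32 Tesla K40
speedup = gpu_jobs / cpu_jobs
extra_productivity = speedup - 1.0
value = speedup / 1.55         # slide: +55% cost for GPUs and HPC licenses

print(f"{cpu_jobs:.2f} vs {gpu_jobs:.2f} jobs/day, speedup {speedup:.2f}x")
print(f"additional productivity {extra_productivity:.0%}, jobs per unit cost {value:.2f}x")
```

With the rounded timings, the additional productivity comes out near 108%, in line with the slide's ~110%, and each unit of spend yields about 1.34x as many completed jobs.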

- 16. GPU Guidelines for ANSYS Fluent (decision flowchart). Start from the ANSYS Fluent analysis: • Is it single-phase and flow dominant? If no: not ideal for GPUs. • If yes, does it use the pressure-based coupled solver? If yes: best fit for GPUs. • If no (segregated solver), is it a steady-state analysis? If yes: consider switching to the pressure-based coupled solver for better performance (faster convergence) and further speedups with GPUs. If no: not ideal for GPUs.

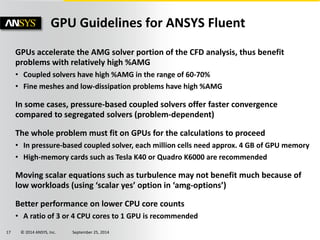
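
The guideline flowchart can be encoded as a small decision function. This sketch follows our reading of the flowchart; in particular, mapping the transient, segregated-solver branch to "not ideal" is an interpretation. The enum and function names are hypothetical.

```python
from enum import Enum


class Advice(Enum):
    BEST_FIT = "best fit for GPUs"
    CONSIDER_COUPLED = "consider switching to the pressure-based coupled solver"
    NOT_IDEAL = "not ideal for GPUs"


def gpu_advice(single_phase_flow_dominant: bool,
               pressure_based_coupled: bool,
               steady_state: bool) -> Advice:
    """Walk the GPU-suitability flowchart for a Fluent analysis."""
    if not single_phase_flow_dominant:
        return Advice.NOT_IDEAL
    if pressure_based_coupled:
        return Advice.BEST_FIT
    # From here on the analysis uses the segregated solver.
    if steady_state:
        return Advice.CONSIDER_COUPLED
    # Assumption: our reading of the transient, segregated-solver branch.
    return Advice.NOT_IDEAL


print(gpu_advice(True, False, True).value)
```

Keeping each question as an explicit boolean parameter mirrors the flowchart boxes one-to-one, so a changed guideline means editing a single branch.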

- 17. GPU Guidelines for ANSYS Fluent • GPUs accelerate the AMG solver portion of the CFD analysis, and thus benefit problems with a relatively high %AMG • Coupled solvers have a high %AMG, in the range of 60-70% • Fine meshes and low-dissipation problems have a high %AMG • In some cases, pressure-based coupled solvers offer faster convergence than segregated solvers (problem-dependent) • The whole problem must fit on the GPUs for the calculations to proceed • With the pressure-based coupled solver, each million cells needs approx. 4 GB of GPU memory • High-memory cards such as Tesla K40 or Quadro K6000 are recommended • Moving scalar equations such as turbulence onto the GPU (using the ‘scalar yes’ option in ‘amg-options’) may not bring much benefit because of their low workloads • Performance is better at lower CPU core counts • A ratio of 3 or 4 CPU cores to 1 GPU is recommended
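
The memory rule of thumb and the core-to-GPU ratio give a quick sizing estimate. The sketch below uses the slide's 4 GB per million cells and 3-4 cores per GPU, plus the Tesla K40's 12 GB of on-board memory. It ignores memory reserved by the driver or ECC, so treat the GPU count as a lower bound. The function names are hypothetical.

```python
import math

GB_PER_MILLION_CELLS = 4.0   # slide's rule of thumb, pressure-based coupled solver
TESLA_K40_MEMORY_GB = 12.0   # on-board memory of one Tesla K40


def gpus_needed(million_cells: float,
                gpu_memory_gb: float = TESLA_K40_MEMORY_GB,
                gb_per_million_cells: float = GB_PER_MILLION_CELLS) -> int:
    """Smallest GPU count whose combined memory holds the whole problem."""
    return math.ceil(million_cells * gb_per_million_cells / gpu_memory_gb)


def recommended_cpu_cores(gpus: int) -> range:
    """CPU core counts matching the slide's 3-4 cores per GPU guideline."""
    return range(3 * gpus, 4 * gpus + 1)


for cells in (3.0, 14.0):
    n = gpus_needed(cells)
    cores = recommended_cpu_cores(n)
    print(f"{cells:g}M cells: >= {n} x K40, about {cores.start}-{cores.stop - 1} CPU cores")
```

For example, a 14-million-cell model needs roughly 56 GB, so at least 5 K40 cards paired with about 15-20 CPU cores.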

- 18. GPU Solution Fit for ANSYS Fluent: Supported Hardware Configurations. Requirements: ● Homogeneous process distribution ● Homogeneous GPU selection ● The number of processes must be an exact multiple of the number of GPUs. Unsupported examples: some nodes with 16 processes and some with 12 processes; some nodes with 2 GPUs and some with 1 GPU; 15 processes, which is not divisible by 2 GPUs.

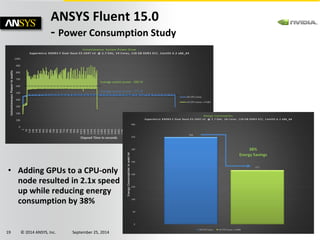
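
The three configuration rules can be checked mechanically before launching a job. In this sketch we read "homogeneous GPU selection" as every node contributing the same number of GPUs. We also apply the multiple rule to total counts, which is equivalent once nodes are homogeneous. The `Node` type and function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """One compute node: Fluent processes launched on it and GPUs it contributes."""
    processes: int
    gpus: int


def config_problems(nodes: list[Node]) -> list[str]:
    """Return the supported-configuration rules a CPU/GPU layout breaks (empty if none)."""
    problems = []
    if len({n.processes for n in nodes}) > 1:
        problems.append("process distribution is not homogeneous")
    if len({n.gpus for n in nodes}) > 1:
        problems.append("GPU selection is not homogeneous")
    total_processes = sum(n.processes for n in nodes)
    total_gpus = sum(n.gpus for n in nodes)
    if total_gpus == 0 or total_processes % total_gpus != 0:
        problems.append("process count is not an exact multiple of the GPU count")
    return problems


# The slide's unsupported examples, plus one layout that passes.
print(config_problems([Node(16, 2), Node(12, 2)]))
print(config_problems([Node(16, 2), Node(16, 1)]))
print(config_problems([Node(15, 2)]))
print(config_problems([Node(16, 2), Node(16, 2)]))
```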

- 19. ANSYS Fluent 15.0: Power Consumption Study • Adding GPUs to a CPU-only node resulted in a 2.1x speedup while reducing energy consumption by 38%
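
A speedup and an energy saving together imply how much more power the GPU-equipped node draws while it runs. This sketch assumes energy = average power × wall-clock time and that both figures describe the same pair of runs. The resulting power ratio is derived here, not reported on the slide.

```python
def power_ratio(speedup: float, energy_reduction: float) -> float:
    """Average-power ratio (accelerated / CPU-only), from E = P * t."""
    energy_ratio = 1.0 - energy_reduction   # E_gpu / E_cpu
    time_ratio = 1.0 / speedup              # t_gpu / t_cpu
    return energy_ratio / time_ratio        # P_gpu / P_cpu


ratio = power_ratio(speedup=2.1, energy_reduction=0.38)
print(f"average power with GPUs: {ratio:.2f}x the CPU-only node")
```

Under these assumptions, the node draws about 30% more power while running but finishes in less than half the time, so total energy still drops.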

- 20. Application Example: Benefit of a GPU-Accelerated Workstation, Shorter Time to Solution. Objective: Meeting engineering services schedules and budgets while delivering technical excellence is imperative for success. ANSYS solution: • PSI evaluates and implements new technology in software (ANSYS 15.0) and hardware (NVIDIA GPU) as soon as possible. • The GPU produces a 43% reduction in Fluent solution time on an Intel Xeon E5-2687 (8 cores, 64 GB) workstation equipped with an NVIDIA K40 GPU. Design impact: Increased simulation throughput allows PSI to meet delivery-time requirements for engineering services. Images courtesy of Parametric Solutions, Inc.

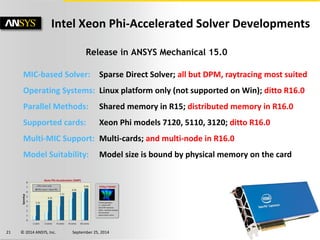

- 21. Intel Xeon Phi-Accelerated Solver Developments: Release in ANSYS Mechanical 15.0 • MIC-based solver: sparse direct solver; all but DPM, raytracing most suited • Operating systems: Linux only (not supported on Windows); same in R16.0 • Parallel methods: shared memory in R15.0; distributed memory in R16.0 • Supported cards: Xeon Phi models 7120, 5110, 3120; same in R16.0 • Multi-MIC support: multiple cards; multi-node in R16.0 • Model suitability: model size is bound by the physical memory on the card

- 22. Intel Xeon Phi Coprocessor Product Lineup • 3 Family (parallel computing solution, performance/$): 3120P (MM# 927501), 3120A (MM# 927500); 6GB GDDR5, 240GB/s, >1TF DP, 300W TDP • 5 Family (optimized for high-density environments, performance/watt): 5110P (MM# 924044), 5120D (no thermal, MM# 927503); 8GB GDDR5, >300GB/s, >1TF DP, 225-245W TDP • 7 Family (highest performance, most memory, performance): 7120P (MM# 927499), 7120X (no thermal solution, MM# 927498), 7120A (MM# 934878), 7120D (dense form factor, MM# 932330); 16GB GDDR5, 352GB/s, >1.2TF DP, 300W TDP. Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more information go to http://www.intel.com/performance

- 23. 15.0 HPC Licensing, NEW at R15.0: Enabling GPU Acceleration. One HPC task is required to unlock one GPU! (Applies to all license schemes: ANSYS HPC, ANSYS HPC Pack, ANSYS HPC Workgroup.) Licensing examples: • 1 x ANSYS HPC Pack: total 8 HPC tasks (4 GPUs max); valid configurations include 6 CPU cores + 2 GPUs, or 4 CPU cores + 4 GPUs • 2 x ANSYS HPC Pack: total 32 HPC tasks (16 GPUs max); example of a valid configuration: 24 CPU cores + 8 GPUs (total use of 2 compute nodes)
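
The licensing examples follow a simple counting rule that can be checked in code. This sketch counts every CPU core and every GPU as one HPC task, which is how the slide's examples add up. It caps GPUs at half the available tasks, inferred from "4 GPUs max" of 8 and "16 GPUs max" of 32. It also assumes the usual HPC Pack progression of 8 tasks for one pack and 4x for each additional pack. The function names are hypothetical.

```python
def hpc_pack_tasks(packs: int) -> int:
    """HPC tasks from `packs` ANSYS HPC Packs: 8 for one pack, x4 for each additional pack."""
    if packs < 1:
        raise ValueError("need at least one HPC Pack")
    return 8 * 4 ** (packs - 1)


def fits_license(cpu_cores: int, gpus: int, tasks: int) -> bool:
    """One task per CPU core and per GPU; GPUs capped at half the tasks (inferred)."""
    return cpu_cores + gpus <= tasks and gpus <= tasks // 2


for packs, cores, gpus in [(1, 6, 2), (1, 4, 4), (1, 2, 6), (2, 24, 8)]:
    tasks = hpc_pack_tasks(packs)
    print(f"{packs} pack(s), {tasks} tasks: {cores} cores + {gpus} GPUs ->",
          "valid" if fits_license(cores, gpus, tasks) else "not valid")
```

The third case (2 cores + 6 GPUs) fits within 8 tasks but exceeds the 4-GPU cap, so it is rejected.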

- 24. Next Steps and Future Directions. Next steps on “How to use HPC/GPUs”: • Recorded webinar: “How to Speed Up ANSYS 15.0 with GPUs” • Technical brief: “Accelerating ANSYS Fluent 15.0 Using NVIDIA GPUs” • Recorded webinar: “Understanding Hardware Selection for ANSYS 15.0”. Future directions: • Accelerate radiation modeling with the discrete ordinates method by using AmgX • Provide user control to pick and choose which equations run on the GPU • Explore further improvements via advanced AmgX features such as direct GPU communication • Explore performance improvements for the segregated solver

- 25. Thank You! • Connect with Me – [email protected] • Connect with ANSYS, Inc. – LinkedIn ANSYSInc – Twitter @ANSYS_Inc – Facebook ANSYSInc • Follow our Blog – ansys-blog.com