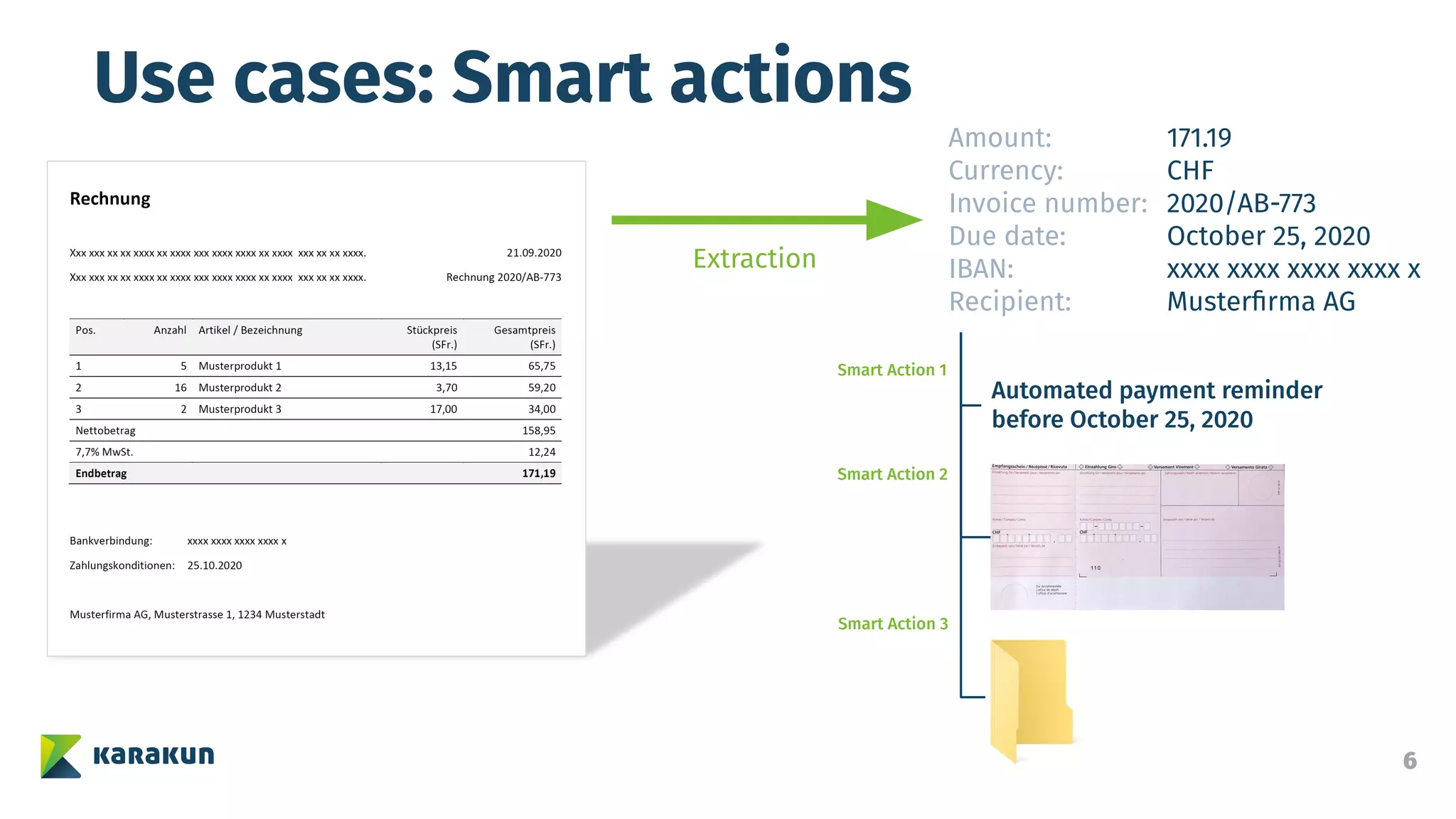

The document discusses a collaborative research project involving Karakun, DSwiss, and SUPSI, focused on leveraging pre-trained language models for document classification and information extraction. It emphasizes the use of models like BERT to improve classification performance with fewer training samples and outlines various applications such as invoice processing and automated actions. Key challenges include noisy OCR outputs and the need for extensive and diverse training data to minimize biases and improve accuracy.