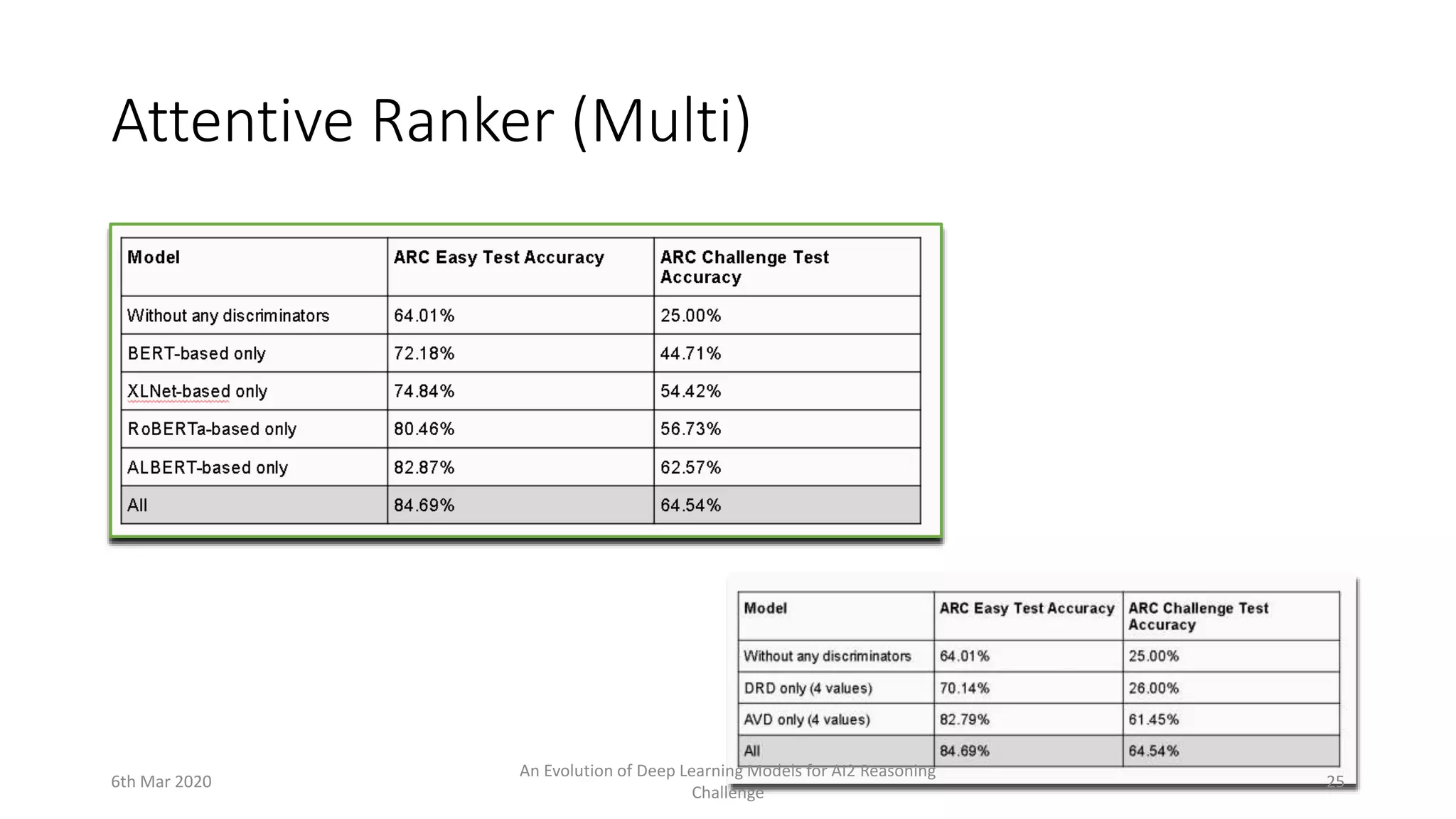

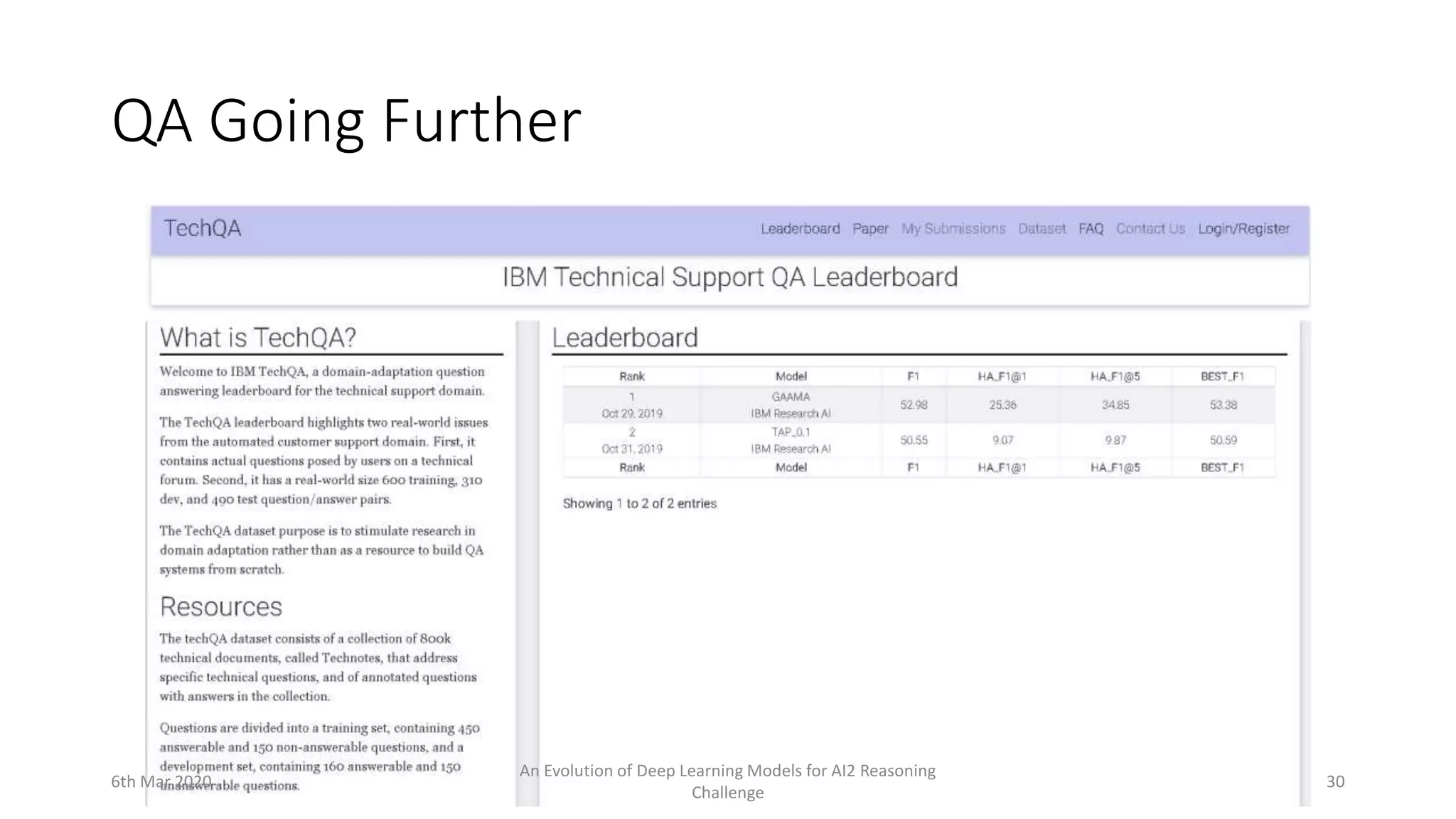

The document traces the evolution of deep learning models for question answering (QA) in the context of the AI2 Reasoning Challenge (ARC), covering QA methodologies and datasets such as SQuAD, HotpotQA, and ARC. It outlines the limitations of current models and presents improvements such as the two-stage inference model and the attentive ranker. It notes that, despite these advances, human-level performance on complex reasoning tasks remains out of reach. Its key conclusions stress the continued need for better models, better datasets, and techniques such as adversarial training to further improve QA systems.