An Updated Performance Comparison of Virtual Machines and Linux Containers

- 1. An Updated Performance Comparison of Virtual Machines and Linux Containers. Wes Felter♰, Alexandre Ferreira♰, Ram Rajamony♰ and Juan Rubio♰ (♰ IBM Research Division, Austin Research Laboratory). IBM Research Report RC25482 (AUS1407-001), 2014. IEEE International Symposium on Performance Analysis of Systems and Software, pp. 171-172, 2015. Presented January 23rd, 2017 by Kento Aoyama, Ph.D. student, Akiyama Laboratory, Graduate Major in Artificial Intelligence, Department of Computer Science, School of Computing, Tokyo Institute of Technology.

- 2. Summary • What does this paper do? It compares the performance of virtual machines and Linux containers. • Why? VM performance is an important component of overall cloud performance. • How? By running benchmarks (CPU, memory, network, file I/O) and evaluating database applications (Redis, MySQL).

- 3. Research Example (1/2) • Di Tommaso P, Palumbo E, Chatzou M, Prieto P, Heuer ML, Notredame C. (2015) The impact of Docker containers on the performance of genomic pipelines. PeerJ 3:e1273 https://doi.org/10.7717/peerj.1273 • Abstract excerpt: “Genomic pipelines consist of several pieces of third party software and, because of their experimental nature, frequent changes and updates are commonly necessary thus raising serious deployment and reproducibility issues. Docker containers are emerging as a possible solution for many of these problems, as …” → They evaluated the performance of genomic pipelines in Docker containers.

- 4. Research Example (2/2): Galaxy • Official site: https://galaxyproject.org/ • GitHub: https://github.com/bgruening/docker-galaxy-stable • “The Galaxy Docker Image is an easy distributable full-fledged Galaxy installation, that can be used for testing, teaching and presenting new tools and features. …” • Enis Afgan, Dannon Baker, Marius van den Beek, Daniel Blankenberg, Dave Bouvier, Martin Čech, John Chilton, Dave Clements, Nate Coraor, Carl Eberhard, Björn Grüning, Aysam Guerler, Jennifer Hillman-Jackson, Greg Von Kuster, Eric Rasche, Nicola Soranzo, Nitesh Turaga, James Taylor, Anton Nekrutenko, and Jeremy Goecks. The Galaxy platform for accessible, reproducible and collaborative biomedical analyses: 2016 update. Nucleic Acids Research (2016) doi: 10.1093/nar/gkw343

- 5. Outline: 1. Introduction 2. Background 3. Evaluation 4. Discussion 5. Conclusion 6. Related Works

- 6. Introduction: Cloud Computing • Cloud computing services have spread rapidly in recent years (e.g. MS Azure, Amazon EC2, Google Platform, Heroku). • Virtual machines are used extensively in cloud computing. • VM performance is a crucial component of overall cloud performance. • Once a hypervisor has added overhead, no higher layer can remove it.

- 7. Introduction: Container-based Virtualization • Container-based virtualization provides an alternative to virtual machines in the cloud. • The concept of container technology is based on Linux namespaces [34]. • Docker [45]: “Docker is the world’s leading software containerization platform”; “Build, Ship, and Run Any App, Anywhere”. • Docker has emerged as a standard runtime, image format, and build system for Linux. • Docker provides quick and easy deployment and reproducibility. This talk, however, focuses only on performance. [34] Rob Pike, Dave Presotto, Ken Thompson, Howard Trickey, and Phil Winterbottom. The Use of Name Spaces in Plan 9. In Proceedings of the 5th Workshop on ACM SIGOPS European Workshop: Models and Paradigms for Distributed Systems Structuring, pages 1–5, 1992. [45] Solomon Hykes and others. What is Docker? https://www.docker.com/whatisdocker/.

- 8. Introduction: Purpose • Compare the performance of a set of workloads: compute, memory, network, and I/O bandwidth benchmarks, plus two real applications (Redis and MySQL). • Isolate and understand the overhead of virtual machines (specifically KVM) and containers (specifically Docker) relative to non-virtualized Linux (native). • The scripts to run the experiments from this paper are available at https://github.com/thewmf/kvm-docker-comparison

- 9. Background

- 10. Why Virtualize? Motivation and requirements • Unix traditionally does NOT strongly implement the principle of least privilege: most objects are globally visible to all users. • Resource isolation: isolate system resources (filesystem, processes, network stack, etc.) from other users, so that sharing a system does NOT compromise security. • Configuration isolation: isolate application configuration (settings, shared libraries, etc.) from other applications. Modern applications use many libraries, and different applications require different versions of the same library. • Sharing environments: share the code, data, and configuration of an application to provide reproducibility in every environment.

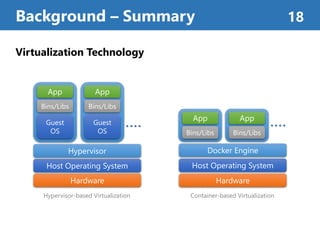

- 11. Virtual Machine (KVM) • KVM (Kernel-based Virtual Machine) [25] • Type-1 hypervisor virtualization • The guest OS (VM) runs inside a Linux process. • Uses hardware acceleration and paravirtual I/O to reduce virtualization overhead. Typical Features • VMs are managed as Linux processes (scheduling, cgroups for hierarchical resource control, etc.). • A VM has a fixed number of virtual CPUs and a fixed amount of RAM, which bound its resource use. • 1 virtual CPU CANNOT use more than 1 real CPU worth of cycles. • Each page of virtual RAM maps to at most one page of physical RAM (+ nested page table). • Communicating with the outside of the VM has a large overhead. • VMs must use a limited number of hypercalls or emulated devices (controlled by the hypervisor). [25] Avi Kivity, Yaniv Kamay, Dor Laor, Uri Lublin, and Anthony Liguori. “KVM: the Linux virtual machine monitor”. In Proceedings of the Linux Symposium, volume 1, pages 225–230, Ottawa, Ontario, Canada, June, 2007. Figure: hypervisor-based virtualization stack (hardware, host operating system, hypervisor, then a guest OS, bins/libs, and app per VM).

- 12. Linux Container (namespace) • The concept of a Linux container is based on Linux namespaces. • Processes have no visibility of or access to objects outside the container. • Containers can be viewed as another level of access control in addition to the user and group permission system. namespace [17] • A namespace isolates and virtualizes the system resources of a collection of processes. • Namespaces allow creating separate instances of previously global namespaces. • Processes running inside a container share the host OS kernel, have their own root directory and mount table, and appear to be running on a normal Linux system. • The namespaces feature was originally motivated by difficulties in dealing with high performance computing clusters [17]. [17] E. W. Biederman. “Multiple instances of the global Linux namespaces.”, In Proceedings of the 2006 Ottawa Linux Symposium, 2006. Figure: https://access.redhat.com/documentation/en/red-hat-enterprise-linux-atomic-host/7/paged/overview-of-containers-in-red-hat-systems/
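
One way to see the namespace idea on a running Linux system is to read the namespace identifiers the kernel exposes under `/proc/<pid>/ns`. The sketch below is illustrative only and is not taken from the paper; the helper name `namespace_ids` is hypothetical, and the function returns an empty dict on systems without `/proc`.

```python
import os


def namespace_ids(pid="self"):
    """Map namespace type (e.g. 'mnt', 'net', 'pid') to its kernel identifier.

    Assumes a Linux /proc filesystem; returns {} when it is not available.
    """
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}
    ids = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like 'net:[4026531992]'.
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Some entries may not be readable without extra privileges.
            continue
    return ids


if __name__ == "__main__":
    for ns_type, ident in namespace_ids().items():
        print(ns_type, ident)
```

Two processes in the same container report the same identifiers, while a process on the host and a process in a container with its own network namespace report different values for `net`.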

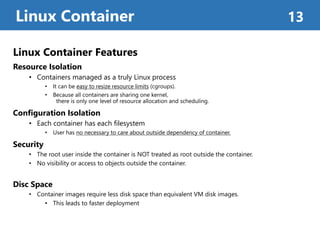

- 13. Linux Container Features • Resource isolation: containers are managed as ordinary Linux processes; resource limits are easy to resize (cgroups); because all containers share one kernel, there is only one level of resource allocation and scheduling. • Configuration isolation: each container has its own filesystem, so users do not need to care about dependencies outside the container. • Security: the root user inside the container is NOT treated as root outside the container; there is no visibility of or access to objects outside the container. • Disk space: container images require less disk space than equivalent VM disk images, which leads to faster deployment.

- 14. Docker • One of the Linux container management tools • Open source software • Layered filesystem images (AUFS) • Easy to share images on DockerHub • Rich tooling: Docker Compose, Docker Machine, Docker Swarm, … Typical Features • Layered filesystem images (AUFS) • Docker network options (NAT, net=host, …) • Filesystem mounts (volume option) • Sharing environments (DockerHub) Figure: container-based virtualization stack (hardware, host operating system, Docker Engine, then bins/libs and app per container).

- 15. Docker – AUFS • AUFS (Advanced multi layered unification filesystem) [12] is a unification filesystem: it stacks layers on top of each other and provides a single unified view. • This reduces space usage and simplifies filesystem management. • Layers can be reused in other container images. • It is easy to redo an operation. [12] Advanced multi layered unification filesystem. http://aufs.sourceforge.net, 2014.
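
The layering idea can be modelled in a few lines with `collections.ChainMap`, which resolves lookups through a stack of mappings and sends writes to the first one. This is only a toy model of a union filesystem, not how AUFS is implemented: the file names and contents are made up, and deletions (whiteouts) are not modelled.

```python
from collections import ChainMap

# Read-only image layers, lowest first (hypothetical paths and contents).
base_layer = {"/etc/os-release": "Ubuntu 13.10", "/bin/sh": "dash"}
app_layer = {"/usr/bin/redis-server": "redis binary", "/etc/redis.conf": "port 6379"}


def new_container(*image_layers):
    """Return a unified view: a fresh writable layer on top of shared image layers."""
    writable = {}
    # ChainMap searches its first mapping first, so the topmost layer goes first.
    return ChainMap(writable, *reversed(image_layers))


c1 = new_container(base_layer, app_layer)
c2 = new_container(base_layer, app_layer)

# A write lands only in c1's own writable layer; the shared layers stay untouched.
c1["/etc/redis.conf"] = "port 7000"
```

Both containers reuse the same two image layers, which is where the space saving comes from; only the small writable layer is private to each container.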

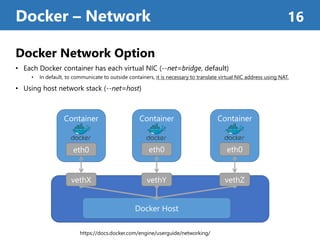

- 16. Docker – Network • By default (--net=bridge), each Docker container has its own virtual NIC. • With this default, communication with the outside of the container requires translating the virtual NIC's address using NAT. • Alternatively, a container can use the host network stack directly (--net=host). Figure: each container's eth0 is paired with a veth interface (vethX, vethY, vethZ) on the Docker host. https://docs.docker.com/engine/userguide/networking/

- 17. Docker – Volume Option • With the volume option (-v) you can mount part of the host filesystem in a container. • Even after the container exits, the data in the volume remains in the host filesystem. • Because a container is expected to perform a single role, it is recommended that I/O data be stored on a volume. Figure: /var/volume on the Docker host mounted at /var/volume inside the container (labelled “--volume-from /var/volume” on the slide).
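
The network and volume options from these two slides are ordinary `docker run` flags. As a rough sketch, the helper below (a hypothetical name, not part of Docker) assembles such a command line; the image name and paths in the usage example are placeholders.

```python
import shlex


def docker_run_command(image, host_network=False, volumes=()):
    """Build an argv list for `docker run` with the options discussed above.

    volumes is an iterable of (host_path, container_path) pairs.
    """
    argv = ["docker", "run", "--rm"]
    if host_network:
        # Share the host network stack instead of the default bridge + NAT.
        argv.append("--net=host")
    for host_path, container_path in volumes:
        # Bind-mount a host directory, bypassing the layered image filesystem.
        argv += ["-v", f"{host_path}:{container_path}"]
    argv.append(image)
    return argv


if __name__ == "__main__":
    cmd = docker_run_command(
        "redis", host_network=True, volumes=[("/var/volume", "/var/volume")]
    )
    print(shlex.join(cmd))
```

Building the argument list separately from running it keeps the sketch free of side effects; passing the list to `subprocess.run` would actually start the container.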

- 18. Background – Summary: Virtualization Technology. Figure: side-by-side comparison of container-based virtualization (hardware, host operating system, Docker Engine, then bins/libs and app per container) and hypervisor-based virtualization (hardware, host operating system, hypervisor, then guest OS, bins/libs, and app per VM).

- 19. Evaluation

- 20. Benchmarks and Applications

  | Case | Benchmark (tool) | Measurement target |
  | --- | --- | --- |
  | A | PXZ [11] | CPU performance (compression) |
  | B | Linpack [21] | CPU performance (solving dense linear equations using LU factorization) |
  | C | Stream [21] | Memory bandwidth (sequential) |
  | D | RandomAccess [21] | Memory bandwidth (random memory access) |
  | E | nuttcp [7] | Network bandwidth |
  | F | netperf | Network latency |
  | G | fio [13] | Block I/O |
  | H | Redis [43, 47] | Real application performance (key-value store database) |
  | I | MySQL (sysbench) [6] | Real application performance (relational database) |

- 21. Environments

  | Item | Value |
  | --- | --- |
  | System | IBM System x3650 |
  | CPU | Intel Sandy Bridge-EP Xeon E5-2665 (8 cores, 2.4-3.0 GHz) × 2 sockets (+ HyperThreading) |
  | Memory | 256 GB |
  | Host OS | Ubuntu 13.10 (Saucy) 64-bit with Linux kernel 3.11.0 |
  | Docker Engine | Docker 1.0 |
  | Docker container / VM | Ubuntu 13.10 |

- 22. Result – Summary (Benchmarks)

  | Case | Perf. category | Docker | KVM |
  | --- | --- | --- | --- |
  | A, B | CPU | Good | Bad* |
  | C | Memory bandwidth (sequential) | Good | Good |
  | D | Memory bandwidth (random) | Good | Good |
  | E | Network bandwidth | Acceptable* | Acceptable* |
  | F | Network latency | Bad | Bad |
  | G | Block I/O (sequential) | Good | Good |
  | G | Block I/O (random access) | Good (volume option) | Bad |

  Compared with native performance: equal = Good, a little worse = Acceptable, worse = Bad. * = depends on the case or tuning.

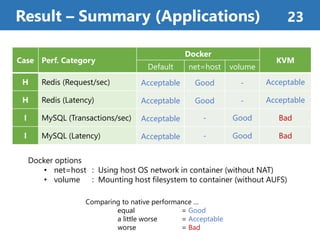

- 23. Result – Summary (Applications)

  | Case | Perf. category | Docker (default) | Docker (net=host) | Docker (volume) | KVM |
  | --- | --- | --- | --- | --- | --- |
  | H | Redis (requests/sec) | Acceptable | Good | - | Acceptable |
  | H | Redis (latency) | Acceptable | Good | - | Acceptable |
  | I | MySQL (transactions/sec) | Acceptable | - | Good | Bad |
  | I | MySQL (latency) | Acceptable | - | Good | Bad |

  Compared with native performance: equal = Good, a little worse = Acceptable, worse = Bad. Docker options: net=host uses the host OS network in the container (without NAT); volume mounts the host filesystem in the container (without AUFS).

- 24. A. CPU – PXZ: Compression Performance • PXZ [11] is a parallel lossless data compression utility that uses the LZMA algorithm (also used by 7-Zip and xz). • The test uses PXZ 4.999.9beta (build 20130528) to compress enwik9 [29] (a 1 GB Wikipedia dump). • To focus on compression performance rather than I/O, the test uses 32 threads, the input file is cached in RAM, and the output is piped to /dev/null. • Native and Docker performance are very similar, while KVM is 22% slower. • Tuning KVM by vCPU pinning and exposing cache topology makes little difference to the performance. • By default, KVM does not expose topology information to VMs, so the guest OS believes it is running on a uniform 32-socket system with one core per socket. [11] PXZ: parallel LZMA compressor using liblzma, https://jnovy.fedorapeople.org/pxz/, 2012. [29] Matt Mahoney, “Large text compression benchmark”, http://mattmahoney.net/dc/textdata.html, 2011.
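
The shape of this workload (split the input, compress the pieces with LZMA on several threads, discard the output) can be sketched with Python's standard `lzma` module. This illustrates the general chunk-parallel approach only and is not PXZ's actual implementation; the function name, chunk size, and thread count are assumptions.

```python
import lzma
from concurrent.futures import ThreadPoolExecutor


def compress_chunks(data, chunk_size=1 << 20, threads=4):
    """Compress fixed-size chunks of data in parallel; return the compressed chunks."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # Each chunk becomes an independent .xz stream, so the work is
        # embarrassingly parallel and CPU-bound, like the benchmark.
        return list(pool.map(lzma.compress, chunks))


if __name__ == "__main__":
    sample = b"virtual machines and linux containers\n" * 20000
    compressed = compress_chunks(sample, chunk_size=1 << 18)
    # Like piping to /dev/null, only the size is reported here.
    print(len(sample), "->", sum(len(c) for c in compressed), "bytes")
```

Because the input already sits in memory and the result is thrown away, the time spent is almost entirely compression, which is why this kind of test isolates CPU overhead.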

- 25. B. HPC – Linpack: Solving Dense Linear Equations (LU factorization) • Linpack [21] solves a dense system of linear equations using LU factorization with partial pivoting. • Most of the work is double-precision floating-point operations of the form 𝑩 += 𝛼𝑨. • The Linpack benchmark is used for the Top500 supercomputer ranking. • The test uses an optimized Linpack binary (version 11.1.2.005) [3] based on the Intel Math Kernel Library. • Performance is almost identical on native Linux and Docker. • Untuned KVM performance is markedly worse, showing the cost of abstracting/hiding hardware details from a workload that can take advantage of them. • Tuning KVM to pin vCPUs to their corresponding CPUs and expose the underlying cache topology brings performance nearly to par with native. [21] J. Dongarra and P. Luszczek. “Introduction to the HPCChallenge Benchmark Suite”. Technical report, ICL Technical Report, 10 2005. ICL-UT-05-01. [3] Intel Math Kernel Library: LINPACK Download. https://software.intel.com/en-us/articles/intel-math-kernel-library-linpack-download
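
For readers unfamiliar with the kernel, the following is a minimal pure-Python sketch of Gaussian elimination with partial pivoting, the elimination that underlies LU factorization. It is not the optimized MKL binary used in the paper; the function name is hypothetical and no blocking or vectorization is attempted.

```python
def solve_with_partial_pivoting(a, b):
    """Solve a x = b for a square matrix a (list of rows) and vector b."""
    n = len(a)
    # Work on an augmented copy [a | b] so the inputs are left unchanged.
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for k in range(n):
        # Partial pivoting: bring the largest remaining entry of column k up.
        pivot = max(range(k, n), key=lambda r: abs(m[r][k]))
        if m[pivot][k] == 0.0:
            raise ValueError("matrix is singular")
        m[k], m[pivot] = m[pivot], m[k]
        for i in range(k + 1, n):
            factor = m[i][k] / m[k][k]
            # Row update of the form row_i += alpha * row_k; this is where
            # almost all of the floating-point work is spent.
            for j in range(k, n + 1):
                m[i][j] -= factor * m[k][j]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x
```

The innermost loop touches long contiguous rows repeatedly, which is why tuned implementations depend on knowing the cache and socket topology that an untuned VM hides.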

- 26. C. Memory Bandwidth – Stream: Measurement of Memory Bandwidth (Sequential) • The STREAM [21] benchmark is a simple synthetic program that measures sustainable memory bandwidth by performing simple operations on vectors. • Accesses are regular, so hardware prefetchers can bring all data in ahead of use. • Performance on Linux, Docker, and KVM is almost identical: the median data differ by only 1.4% across the three execution environments. [21] J. Dongarra and P. Luszczek. “Introduction to the HPCChallenge Benchmark Suite”. Technical report, ICL Technical Report, 10 2005. ICL-UT-05-01.
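
As an illustration of what “simple operations on vectors” means, the sketch below times a triad-style operation (`a = b + scalar * c`, one of STREAM's kernels) and converts the elapsed time to bytes per second. In Python the interpreter overhead dominates, so the number it reports is not a real memory-bandwidth measurement; the function name and default sizes are assumptions.

```python
import time
from array import array


def triad(n=1_000_000, scalar=3.0):
    """Run a = b + scalar * c over n doubles; return (a, bytes_per_second)."""
    b = array("d", [1.0]) * n
    c = array("d", [2.0]) * n
    start = time.perf_counter()
    a = array("d", (bi + scalar * ci for bi, ci in zip(b, c)))
    elapsed = max(time.perf_counter() - start, 1e-9)
    # Three 8-byte streams are touched per element: read b, read c, write a.
    bytes_moved = 3 * 8 * n
    return a, bytes_moved / elapsed


if __name__ == "__main__":
    _, rate = triad()
    print(f"{rate / 1e6:.1f} MB/s (interpreter-bound, not true memory bandwidth)")
```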

- 27. D. Random Memory Access – RandomAccess: Measurement of Memory Bandwidth (Random) • The RandomAccess benchmark [21] is specially designed to stress random memory performance: 1. It initializes a huge region of memory (larger than the reach of the caches or the TLB). 2. Random 8-byte words are read, modified (XOR operation), and written back. • RandomAccess represents workloads with large working sets and minimal computation, e.g. in-memory hash tables and in-memory databases. • Performance is almost identical in all cases. • RandomAccess heavily exercises the hardware page table walker that handles TLB misses. • On the two-socket test system, this has the same overhead in virtualized and non-virtualized environments. [21] J. Dongarra and P. Luszczek. “Introduction to the HPCChallenge Benchmark Suite”. Technical report, ICL Technical Report, 10 2005. ICL-UT-05-01.
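
The read-XOR-write loop described in steps 1 and 2 can be sketched as follows. This is a simplified illustration: it uses Python's `random` module instead of the benchmark's own pseudo-random sequence, and the function name and table size are assumptions.

```python
import random


def random_updates(table, updates, seed=1):
    """Apply `updates` random read-XOR-write operations to table, in place.

    len(table) must be a power of two so an index can be taken with a mask.
    """
    mask64 = (1 << 64) - 1
    index_mask = len(table) - 1
    rng = random.Random(seed)
    for _ in range(updates):
        value = rng.getrandbits(64)
        index = value & index_mask
        # Read an 8-byte word, XOR it with the random value, write it back.
        table[index] = (table[index] ^ value) & mask64


if __name__ == "__main__":
    table = list(range(1 << 16))
    random_updates(table, 4 * len(table))
    print("entries changed:", sum(1 for i, v in enumerate(table) if v != i))
```

Because XOR is its own inverse, replaying the same update sequence restores the original table, which gives a cheap correctness check for an implementation like this one.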

- 28. E. Network Bandwidth – nuttcp: Measurement of Network Bandwidth • The nuttcp tool [7] measures network bandwidth between the system under test (native, Docker container, or KVM guest) and an identical machine. • The workload is a bulk data transfer over a single TCP connection with the standard 1500-byte MTU. Configurations • The machines are connected by a direct 10 Gbps Ethernet link between two Mellanox ConnectX-2 EN NICs. • Docker attaches all containers on the host to a bridge and connects the bridge to the network via NAT. • In the KVM configuration, virtio and vhost are used to minimize virtualization overhead. • The bottleneck in this test is the NIC, leaving other resources mostly idle. [7] The “nuttcp” Network Performance Measurement Tool. http://www.nuttcp.net/.

- 29. E. Network Bandwidth – nuttcp (results) • Docker’s use of bridging and NAT noticeably increases the transmit path length. • Docker containers that do not use NAT (presumably net=host) have identical performance to native Linux. • vhost-net is fairly efficient at transmitting but has high overhead on the receive side. • KVM has historically struggled to provide line-rate networking because circuitous I/O paths sent every packet through userspace. • vhost, which allows the VM to communicate directly with the host kernel, solves the network throughput problem in a straightforward way.

- 30. F. Network Latency – netperf: Measurement of Network Latency • The netperf request-response benchmark measures round-trip network latency: 1. the netperf client sends a 100-byte request to the netperf server; 2. the netperf server returns a 200-byte response. • The client waits for the response before sending another request. • Docker NAT doubles latency. • KVM adds 30µs of overhead (+80%). • Unlike in the throughput test, virtualization overhead cannot be amortized in this test.
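
Taking the slide's rounded figures at face value, a short calculation shows what they imply: if 30µs is an 80% increase, the native round trip is about 37.5µs, and because the client sends one request at a time, the transaction rate per connection is simply the inverse of the round-trip time. The derived numbers are arithmetic consequences of the slide's figures, not measurements from the paper, and the function names are hypothetical.

```python
def baseline_from_overhead(added_us, relative_increase):
    """Baseline latency such that baseline * relative_increase == added_us."""
    return added_us / relative_increase


def transactions_per_second(rtt_us):
    """One outstanding request at a time, so rate = 1 / round-trip time."""
    return 1_000_000.0 / rtt_us


native_rtt_us = baseline_from_overhead(30.0, 0.80)  # KVM: +30 us is +80%
kvm_rtt_us = native_rtt_us + 30.0
docker_nat_rtt_us = 2.0 * native_rtt_us             # NAT doubles latency

if __name__ == "__main__":
    for name, rtt in [("native", native_rtt_us),
                      ("KVM", kvm_rtt_us),
                      ("Docker NAT", docker_nat_rtt_us)]:
        print(f"{name}: {rtt:.1f} us, {transactions_per_second(rtt):.0f} transactions/s")
```

With a single request in flight there is nothing to batch, so every added microsecond of latency shows up directly as lost transactions per second.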

- 31. G. Block I/O – fio: Measurement of Block I/O Performance • The tests use fio [13] 2.0.8 with the libaio engine (asynchronous I/O, O_DIRECT mode). • Several tests are run against a 16 GB file stored on the SSD. • Using O_DIRECT allows accesses to bypass the OS caches. Storage Configuration • 20 TB IBM FlashSystem 840 flash SSD • Two 8 Gbps Fibre Channel links to a QLogic ISP2532-based dual-port HBA with dm_multipath Docker & VM Configurations • Docker: storage is mapped into the container using -v (avoiding AUFS overhead). • VM: the block device is mounted inside the VM (using virtio).

- 32. G. Block I/O – fio: Sequential Block I/O Throughput • Sequential read and write performance is averaged over 60 seconds using a typical 1 MB I/O size (Fig.5). • Docker and KVM introduce negligible overhead in this case. • KVM has roughly four times the performance variance of the other cases. • As in the network test, the Fibre Channel HBA appears to be the bottleneck.

- 33. G. Block I/O – fio: Random Block I/O Throughput (IOPS) • Random read, write, and mixed (70% read, 30% write) workloads are measured (Fig.6). • The tests use a 4 kB block size and a concurrency of 128 (which gives maximum performance for this particular SSD). • Docker introduces no overhead compared to Linux. • KVM delivers only half as many IOPS because each I/O operation must go through QEMU [16]. • QEMU provides the virtual I/O functions, but it uses more CPU cycles per I/O operation. • KVM increases read latency by 2-3x, a crucial metric for some real workloads (Fig.7). [16] Fabrice Bellard. “QEMU, a fast and portable dynamic translator.” In Proceedings of the Annual Conference on USENIX Annual Technical Conference, ATEC ’05, pages 41–41, Berkeley, CA, USA, 2005.

- 34. H. Redis • Memory-based key-value stores are commonly used in the cloud. • They are used by PaaS providers (e.g., Amazon Elasticache, Google Compute Engine). • Real applications require a network round trip between the client and the server, so they are sensitive to network latency. • The workload has a large number of concurrent clients sending very small network packets. Test Conditions • Clients send requests to the server (50% read, 50% write). • Each client has one TCP connection (10 concurrent requests per connection). • Keys: 10 characters • Values: 50 bytes (average) • The number of client connections is scaled up until the Redis server's CPU is saturated.

- 35. H. Redis (results) • All cases saturate at around 110 k requests per second. • Adding more clients results in requests being queued and an increase in average latency. • Docker (net=host) achieves almost identical throughput and latency to the native case. • NAT consumes CPU cycles, preventing the Redis deployment from reaching peak performance. • In the KVM case, Redis appears to be network-bound; KVM adds approximately 83µs of latency. • Beyond 100 connections, the throughput of both deployments is practically identical. • This follows from Little’s Law: because network latency is higher under KVM, Redis needs more concurrency to fully utilize the system.
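
Little's Law states that the average number of requests in flight equals throughput times latency. Plugging in the slide's figures gives a rough idea of how much extra concurrency the added KVM latency demands; this is an illustrative calculation, not a result reported in the paper, and the function name is hypothetical.

```python
def requests_in_flight(throughput_per_s, latency_s):
    """Little's Law: L = lambda * W."""
    return throughput_per_s * latency_s


# Extra in-flight requests needed to sustain about 110 k requests/s when
# each request takes roughly 83 microseconds longer (figures from the slide).
extra_in_flight = requests_in_flight(110_000, 83e-6)

if __name__ == "__main__":
    print(f"extra requests in flight: {extra_in_flight:.2f}")
```

The result is roughly nine additional outstanding requests, about one extra client connection at the 10 concurrent requests per connection used in this test, which is consistent with the gap closing once enough connections are added.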

- 36. I. MySQL • MySQL is a popular relational database that is widely used in the cloud. • The test runs the SysBench [6] oltp benchmark against a single instance of MySQL 5.5.37. • The database is preloaded with 2 million records. • The benchmark executes a fixed set of read/write transactions (2 UPDATE, 1 DELETE, 1 INSERT). • The measurements are transaction latency and throughput in transactions per second. • The number of clients is varied until saturation. Test Configurations • A 3 GB cache is enabled (sufficient to cache all reads). • Five different configurations are tested (Table III). [6] Sysbench benchmark. https://launchpad.net/sysbench. * qcow is a file format for disk image files used by QEMU.

- 37. I. MySQL: Throughput • Docker has similar performance to native, with the difference asymptotically approaching 2% at higher concurrency. • NAT also introduces a little overhead, but this workload is not network-intensive. • AUFS introduces significant overhead, which demonstrates the cost of filesystem virtualization. • KVM has much higher overhead, more than 40% in all measured cases. • Different KVM storage protocols were tested and found to make no difference. • In the KVM case, saturation is reached in the network but not in the CPUs (Fig.11). • Since the benchmark uses synchronous requests, an increase in latency also reduces throughput at a given concurrency level.

- 38. I. MySQL: Latency • Native Linux is able to achieve higher peak CPU utilization. • Docker is not able to achieve that same level, a difference of around 1.5%. • Docker increases latency faster at moderate levels of load, which explains the lower throughput at low concurrency levels.

- 40. Discussion • Docker and VMs impose almost no overhead on CPU and memory usage; they only impact I/O and OS interaction. • This overhead comes in the form of extra cycles for each I/O operation. • Unfortunately, real applications often cannot batch work into large I/Os. • Docker adds several useful features such as AUFS and NAT, but these features come at a performance cost. • Thus Docker using default settings may be no faster than KVM. • Applications that are filesystem or disk intensive should bypass AUFS by using volumes. • NAT overhead can be easily eliminated by using --net=host, but this gives up the benefits of network namespaces. • Ultimately, the authors believe that the model of one IP address per container, as proposed by the Kubernetes project, can provide both flexibility and performance. • While KVM can provide very good performance, its configurability is a weakness. • Good CPU performance requires careful configuration of large pages, CPU model, vCPU pinning, and cache topology. • These features are poorly documented and required trial and error to configure.

- 41. Conclusion • Docker equals or exceeds KVM performance. • KVM and Docker introduce negligible overhead for CPU and memory performance. • For I/O-intensive workloads, both forms of virtualization should be used carefully. • KVM performance has improved considerably since its creation. • KVM still adds some overhead to every I/O operation. • Thus, KVM is less suitable for workloads that are latency-sensitive or have high I/O rates. • Although containers themselves have almost no overhead, Docker is not without performance gotchas. • Docker volumes have noticeably better performance than files stored in AUFS. • Docker’s NAT also introduces overhead for workloads with high packet rates. • These features represent a tradeoff between ease of management and performance. • The results can give some guidance about how cloud infrastructure should be built. • Container-based IaaS can offer better performance or easier deployment.








![C. Memory bandwidth - Stream 26

Measurement of Memory Bandwidth (Sequential)

• STREAM [21] benchmark

• is a simple synthetic benchmark program that measures sustainable memory bandwidth.

• performs simple operations on vectors

• All data are prefetched by hardware prefetchers.

• Performance on Linux, Docker, and KVM is almost identical

• the median data exhibiting a difference of only 1.4% across the three execution environments.

[21] J. Dongarra and P. Luszczek. “Introduction to the HPCChallenge Benchmark Suite”. ICL Technical Report ICL-UT-05-01, October 2005.](https://image.slidesharecdn.com/20170123aoyama-170328034646/85/An-Updated-Performance-Comparison-of-Virtual-Machines-and-Linux-Containers-26-320.jpg)
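
As a rough illustration of the "simple operations on vectors" that STREAM times, the sketch below runs a triad-style kernel (a = b + scalar * c) and derives a bandwidth figure from the bytes moved. It assumes 8-byte doubles and a vector length picked arbitrarily; because it runs in the Python interpreter, the number it prints reflects interpreter overhead rather than memory bandwidth.

```python
import time
from array import array


def triad(b, c, scalar):
    """STREAM-style triad: a[i] = b[i] + scalar * c[i]."""
    return array("d", (bi + scalar * ci for bi, ci in zip(b, c)))


n = 200_000  # assumed vector length, far smaller than a real STREAM run
b = array("d", [1.0]) * n
c = array("d", [2.0]) * n

start = time.perf_counter()
a = triad(b, c, 3.0)
elapsed = time.perf_counter() - start

# Two vectors read and one written, 8 bytes per element.
bytes_moved = 3 * 8 * n
print(f"triad: {bytes_moved / elapsed / 1e6:.1f} MB/s (interpreter-bound)")
```

The access pattern is strictly sequential, which is what lets hardware prefetchers keep up and leaves little room for a hypervisor or container runtime to add overhead.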

![D. Random Memory Access - RandomAccess 27

Measurement of Memory Bandwidth (Random)

• RandomAccess benchmark [21]

• is specially designed to stress random memory performance.

1. Initializes a large block of memory (larger than the reach of the caches or the TLB)

2. Random 8-byte words are read, modified (XOR operation), and written back.

• RandomAccess models workloads with large working sets and minimal computation

• e.g. in-memory hash tables, in-memory databases.

• Performance is almost identical in all cases.

• RandomAccess heavily exercises the hardware page table walker that handles TLB misses.

• On our two-socket system, this has the same overhead in both virtualized and non-virtualized environments.

[21] J. Dongarra and P. Luszczek. “Introduction to the HPCChallenge Benchmark Suite”. ICL Technical Report ICL-UT-05-01, October 2005.](https://image.slidesharecdn.com/20170123aoyama-170328034646/85/An-Updated-Performance-Comparison-of-Virtual-Machines-and-Linux-Containers-27-320.jpg)
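
The read-modify-write loop described above can be sketched as follows. This is a toy version: the table is tiny, and Python's `random` module stands in for the benchmark's own pseudo-random sequence, so the names and sizes here are assumptions rather than the HPCC implementation.

```python
import random


def random_access_updates(table, num_updates, seed):
    """XOR pseudo-random 64-bit values into pseudo-random table slots."""
    rng = random.Random(seed)
    mask = len(table) - 1  # requires a power-of-two table size
    for _ in range(num_updates):
        value = rng.getrandbits(64)
        # Read the 8-byte word, modify it with XOR, write it back.
        table[value & mask] ^= value


table_size = 1 << 12  # assumed toy size; the real table exceeds cache and TLB reach
table = list(range(table_size))

random_access_updates(table, 4 * table_size, seed=1)
changed = sum(1 for i, v in enumerate(table) if v != i)

# XOR is its own inverse, so replaying the same update stream restores the table.
random_access_updates(table, 4 * table_size, seed=1)
restored = table == list(range(table_size))
print(changed, restored)
```

Each update touches an unpredictable address and does almost no arithmetic, so the cost is dominated by cache and TLB misses rather than computation.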

![E. Network Bandwidth - nuttcp 28

Measurement of Network Bandwidth

• Using the nuttcp tool [7] to measure network bandwidth between host and guest (container)

• Bulk data transfer over a single TCP connection with the standard 1500-byte MTU

Configurations

• Machines are connected by a direct 10 Gbps Ethernet link between two Mellanox ConnectX-2 EN NICs.

• Docker attaches all containers on the host to a bridge and connects the bridge to the network via NAT.

• In the KVM configuration, virtio and vhost are used to minimize virtualization overhead.

• The bottleneck in this test is the NIC, leaving other resources mostly idle.

[7] The “nuttcp” Network Performance Measurement Tool. http://www.nuttcp.net/.](https://image.slidesharecdn.com/20170123aoyama-170328034646/85/An-Updated-Performance-Comparison-of-Virtual-Machines-and-Linux-Containers-28-320.jpg)
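
Since the NIC is the bottleneck, it helps to know how much of the 10 Gbps line rate can carry TCP payload at a 1500-byte MTU. The sketch below works through that arithmetic. The header sizes are the usual Ethernet, IPv4, and TCP values, and the 12-byte TCP timestamp option is an assumption about the connection, not something stated on the slide.

```python
LINK_GBPS = 10.0
MTU = 1500                       # bytes of IP packet per Ethernet frame
IP_HEADER = 20                   # IPv4 header without options
TCP_HEADER = 20                  # TCP header without options
ETH_OVERHEAD = 14 + 4 + 8 + 12   # header, FCS, preamble + SFD, inter-frame gap


def goodput_gbps(tcp_options=0):
    """Upper bound on TCP payload throughput for full-size frames."""
    payload = MTU - IP_HEADER - TCP_HEADER - tcp_options
    wire_bytes = MTU + ETH_OVERHEAD
    return LINK_GBPS * payload / wire_bytes


print(f"no TCP options:  {goodput_gbps():.2f} Gbps")
print(f"with timestamps: {goodput_gbps(12):.2f} Gbps")  # assumed 12-byte option
```

Any configuration that reports throughput close to this bound is limited by the link itself, which is the situation the slide describes.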

![G. Block I/O - fio 31

Measurement of Block I/O Performance

• Using fio [13] 2.0.8 with the libaio engine (asynchronous I/O) in O_DIRECT mode

• Running several tests against a 16 GB file stored on the SSD.

• Using O_DIRECT allows accesses to bypass the OS caches.

Storage Configurations

• 20 TB IBM FlashSystem 840 flash SSD

• Two 8 Gbps Fibre Channel links to a QLogic ISP2532-based dual-port HBA with dm_multipath

Docker & VM Configurations

• Docker : Storage is mapped into the container (using -v), avoiding AUFS overhead

• VM : Block device is mounted inside the VM (using virtio)](https://image.slidesharecdn.com/20170123aoyama-170328034646/85/An-Updated-Performance-Comparison-of-Virtual-Machines-and-Linux-Containers-31-320.jpg)
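
A fio invocation along these lines could be assembled as below. This is a sketch, not the authors' job file: the helper name `fio_args`, the job name, and the file path are hypothetical, while the options themselves (`--ioengine`, `--direct`, `--rw`, `--bs`, `--iodepth`, `--size`, `--rwmixread`) are standard fio options.

```python
def fio_args(filename, rw, block_size, iodepth, size="16g", rwmixread=None):
    """Build a fio command line for an O_DIRECT libaio test (illustrative only)."""
    args = [
        "fio",
        "--name=blockio",          # hypothetical job name
        f"--filename={filename}",
        "--ioengine=libaio",       # asynchronous I/O engine
        "--direct=1",              # O_DIRECT: bypass the OS page cache
        f"--rw={rw}",
        f"--bs={block_size}",
        f"--iodepth={iodepth}",
        f"--size={size}",          # 16 GB test file, as on the slide
    ]
    if rwmixread is not None:
        args.append(f"--rwmixread={rwmixread}")  # read percentage for mixed runs
    return args


# Hypothetical path; on Docker this would sit under a volume mapped with -v.
cmd = fio_args("/mnt/ssd/testfile", rw="randread", block_size="4k", iodepth=128)
print(" ".join(cmd))
```

The list can be passed to `subprocess.run` on a machine where fio is installed; the same command works unchanged on the host, inside a container, and inside a VM, which keeps the three measurements comparable.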

![G. Block I/O - fio 33

Random Block I/O throughput (IOPS)

• Performance of random read, write, and mixed (70% read, 30% write) workloads (Fig. 6)

• 4 kB block size and concurrency of 128 (maximum performance for this particular SSD).

• Docker introduces no overhead compared to Linux.

• KVM delivers only half as many IOPS because each I/O operation must go through QEMU [16].

• QEMU provides the virtual I/O functions, but it uses more CPU cycles per I/O operation.

• KVM increases read latency by 2-3x, a crucial metric for some real workloads (Fig. 7)

[16] Fabrice Bellard. “QEMU, a fast and portable dynamic translator.” In Proceedings of the Annual

Conference on USENIX Annual Technical Conference, ATEC ’05, pages 41–41, Berkeley, CA, USA, 2005.](https://image.slidesharecdn.com/20170123aoyama-170328034646/85/An-Updated-Performance-Comparison-of-Virtual-Machines-and-Linux-Containers-33-320.jpg)
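
The IOPS and latency observations are linked by Little's law: with a fixed number of requests in flight, mean latency equals concurrency divided by throughput. The sketch below applies that relation. The IOPS figures are hypothetical placeholders chosen for the arithmetic, not measurements from the paper.

```python
def mean_latency_ms(concurrency, iops):
    """Little's law: mean time in system = requests in flight / throughput."""
    return concurrency / iops * 1000.0


CONCURRENCY = 128          # in-flight requests, as on the slide
native_iops = 100_000      # hypothetical value, for illustration only
halved_iops = native_iops / 2

print(f"native: {mean_latency_ms(CONCURRENCY, native_iops):.2f} ms")
print(f"halved: {mean_latency_ms(CONCURRENCY, halved_iops):.2f} ms")
```

Halving throughput at constant concurrency doubles mean latency, which is in line with the 2-3x increase reported for KVM.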

![I. MySQL 36

• MySQL is a popular relational database that is widely used in the cloud.

• We ran the SysBench [6] oltp benchmark against a single instance of MySQL 5.5.37.

• The database is preloaded with 2 million records.

• Each run executes a fixed set of read/write transactions (5 SELECT, 2 UPDATE, 1 DELETE, 1 INSERT).

• Measured metrics: transaction latency and throughput (transactions per second).

• The number of clients was varied until saturation

Test Configurations

• A 3 GB cache was enabled (sufficient to cache all reads)

• Five different configurations (Table III)

[6] Sysbench benchmark. https://launchpad.net/sysbench.

* qcow is a file format for disk image files used by QEMU.](https://image.slidesharecdn.com/20170123aoyama-170328034646/85/An-Updated-Performance-Comparison-of-Virtual-Machines-and-Linux-Containers-36-320.jpg)
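
To make the two metrics concrete, the sketch below times a small read/write transaction mix and reports throughput and mean latency. It uses Python's built-in `sqlite3` with an in-memory database as a stand-in; it is not SysBench and not MySQL, and the table layout, record count, and function name `run_oltp` are assumptions for illustration.

```python
import random
import sqlite3
import time


def run_oltp(num_records=2000, num_transactions=200, seed=0):
    """Run a toy read/write transaction mix; return (tps, mean latency, rows)."""
    rng = random.Random(seed)
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE sbtest (id INTEGER PRIMARY KEY, k INTEGER, c TEXT)")
    db.executemany(
        "INSERT INTO sbtest VALUES (?, ?, ?)",
        ((i, rng.randrange(num_records), "x") for i in range(1, num_records + 1)),
    )
    db.commit()

    latencies = []
    started = time.perf_counter()
    for _ in range(num_transactions):
        row = rng.randrange(1, num_records + 1)
        t0 = time.perf_counter()
        with db:  # one transaction: committed on success, rolled back on error
            db.execute("SELECT c FROM sbtest WHERE id = ?", (row,)).fetchone()
            db.execute("UPDATE sbtest SET k = k + 1 WHERE id = ?", (row,))
            db.execute("UPDATE sbtest SET c = ? WHERE id = ?", ("y", row))
            db.execute("DELETE FROM sbtest WHERE id = ?", (row,))
            db.execute("INSERT INTO sbtest VALUES (?, ?, ?)", (row, 0, "z"))
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - started

    rows = db.execute("SELECT COUNT(*) FROM sbtest").fetchone()[0]
    db.close()
    return num_transactions / elapsed, sum(latencies) / len(latencies), rows


tps, mean_latency, rows = run_oltp()
print(f"{tps:.0f} transactions/s, mean latency {mean_latency * 1e3:.3f} ms")
```

A real run would drive many concurrent clients against a networked database server and increase the client count until throughput stops rising, which is the saturation point referred to above.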