Apache Kafka from 0.7 to 1.0, History and Lesson Learned

- 1. Apache Kafka from 0.7 to 1.0: History and Lesson Learned. Guozhang Wang, Kafka Meetup Shanghai, Oct. 21, 2018

- 2. A Short History of Kafka

- 3. LinkedIn @ 2010: Point-to-Point Data Pipeline. [Diagram: point-to-point connections among KV-Store, Doc-Store, RDBMS, Tracking Logs / Metrics, Hadoop / DW, Monitoring, Rec. Engine, Social Graph, Searching, Security, …]

- 4. LinkedIn @ 2010: Point-to-Point Data Pipeline. [Diagram: the same systems, KV-Store, Doc-Store, RDBMS, Tracking Logs / Metrics, Hadoop / DW, Monitoring, Rec. Engine, Social Graph, Searching, Security, ….] What we want: a centralized data pipeline.

- 5. A Short History
  - 2010.10: First commit of Kafka

- 6. Kafka Concepts: the Log. [Diagram: a log of messages at offsets 3 through 12, …; the producer writes at the end of the log, Consumer1 reads at offset 7 and Consumer2 reads at offset 10.]

- 7. Kafka Concepts: the Log. [Diagram: producers write to Topic 1 and Topic 2, whose partitions are spread across brokers; consumers read from those partitions.]
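The log abstraction on slides 6 and 7 can be sketched in a few lines of Python. This is a minimal in-memory model under stated assumptions, not Kafka's implementation. Each partition is an append-only list whose index is the offset. Every consumer keeps its own read position. Keyed messages go to a partition chosen by hashing the key. The class names `PartitionLog` and `Topic` are hypothetical. `zlib.crc32` stands in for the murmur2 hash used by the Java producer's default partitioner.

```python
import zlib


class PartitionLog:
    """An append-only sequence of messages; a message's offset is its index."""

    def __init__(self):
        self._messages = []

    def append(self, message) -> int:
        # Producers only ever write at the end of the log.
        self._messages.append(message)
        return len(self._messages) - 1

    def read(self, offset: int, max_messages: int = 10) -> list:
        # Reads never modify the log, so any number of consumers can read
        # from different offsets at the same time.
        return self._messages[offset:offset + max_messages]

    def end_offset(self) -> int:
        # Offset that the next appended message will receive.
        return len(self._messages)


class Topic:
    """A topic is a fixed set of partitions, each an independent log."""

    def __init__(self, num_partitions: int):
        self.partitions = [PartitionLog() for _ in range(num_partitions)]

    def partition_for(self, key: bytes) -> int:
        # Same key -> same partition, so per-key order is preserved.
        return zlib.crc32(key) % len(self.partitions)

    def produce(self, key: bytes, value) -> tuple:
        p = self.partition_for(key)
        return p, self.partitions[p].append(value)


# Two consumers reading the same partition, each at its own offset.
log = PartitionLog()
for i in range(13):
    log.append(f"m{i}")
print(log.read(7, 2))   # ['m7', 'm8']
print(log.read(10))     # ['m10', 'm11', 'm12']
```

Because consumers only hold an offset, adding a consumer costs the broker nothing beyond serving its reads.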

- 8. A Short History
  - 2010.10: First commit of Kafka
  - 2011.07: Enters Apache Incubator
  - Release 0.7.0: compression, mirror-maker

- 9. Kafka 0.7 Message Format. [Diagram: an M-byte message at Offset = 0, an N-byte message at Offset = M, the next message at Offset = M+N, …] Message offsets are physical, i.e. byte positions in the log.

- 10. Kafka 0.7 Message Format. [Same layout: Offset = 0, Offset = M, Offset = M+N.] Internal messages of a compressed message are not offset-addressable.

- 11. Kafka 0.7 Message Format. [Same layout: Offset = 0, Offset = M, Offset = M+N.] Internal messages of a compressed message are not offset-addressable, so the consumer can only checkpoint offset M for this message.
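To make the 0.7 addressing concrete, here is a hypothetical Python sketch in which a message's offset is simply its byte position in the log. The 4-byte length-prefix framing is an assumption for illustration, not the real 0.7 on-disk format. A compressed message set is stored as a single wrapper message, so its inner messages have no offsets of their own.

```python
import struct
import zlib


def frame(payload: bytes) -> bytes:
    # Hypothetical framing: 4-byte big-endian length + payload.
    return struct.pack(">I", len(payload)) + payload


def unframe_all(data: bytes) -> list:
    # Split a buffer of framed messages back into payloads.
    out, pos = [], 0
    while pos < len(data):
        (length,) = struct.unpack_from(">I", data, pos)
        out.append(data[pos + 4:pos + 4 + length])
        pos += 4 + length
    return out


class PhysicalLog:
    """0.7-style log: a message's offset is its byte position."""

    def __init__(self):
        self.buf = bytearray()

    def append(self, payload: bytes) -> int:
        offset = len(self.buf)
        self.buf += frame(payload)
        return offset

    def read(self, offset: int) -> tuple:
        # Returns the payload and the offset of the next message,
        # which is the only other position a consumer can checkpoint.
        (length,) = struct.unpack_from(">I", self.buf, offset)
        start = offset + 4
        return bytes(self.buf[start:start + length]), start + length


log = PhysicalLog()
first = log.append(b"x" * 10)                  # offset 0
wrapper = zlib.compress(b"".join(frame(m) for m in (b"a", b"b", b"c")))
second = log.append(wrapper)                   # offset M = 14 (4 + 10 bytes)
payload, next_offset = log.read(second)
inner = unframe_all(zlib.decompress(payload))  # [b'a', b'b', b'c']
# The three inner messages share one addressable position: the consumer can
# checkpoint `second` (re-read all three) or `next_offset` (skip all three).
```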

- 12. Drawbacks of Kafka 0.7
  - Hard to checkpoint within a compressed message set
    - At-least-once: messages could be consumed twice
  - Hard to rewind consumption by a number of messages
  - Similarly, tricky to monitor consumption lag
  - Unsuitable for features like log compaction (discussed later)

- 13. BUT!
  - Very dumb, hence efficient (high throughput)
    - Just bytes-in, bytes-out for brokers
  - Hence the bottleneck was predominantly the network
    - 1Gbps NICs are saturated most of the time
    - CPU usage usually < 10%
    - I/O overhead is low thanks to “zero-copy”

- 14. Example: Pub-Sub Messaging. [Diagram: Tracking Logs / Metrics flow through Apache Kafka into Hadoop / DW, ….]

- 15. A Short History
  - 2010.10: First commit of Kafka
  - 2011.07: Enters Apache Incubator
  - Release 0.7.0: compression, mirror-maker
  - 2012.10: Graduated to top-level project
  - Release 0.8.0: intra-cluster replication

- 16. High Availability: a Must-Have. [Diagram: producers and consumers connected to the brokers that host the partitions of Topic 1 and Topic 2.]

- 17. Kafka 0.8: Replicas and Layout. [Diagram: Broker-1, Broker-2 and Broker-3 each hold a log with replicas of topic1-part1, topic1-part2 and topic1-part3.]

- 18. Consensus for Log Replication. [Diagram: a write to the logs on Broker-1, Broker-2 and Broker-3 is coordinated through a consensus protocol.]

- 19. Kafka 0.8 Message Format. [Diagram: messages of M bytes and N bytes at Offset = 0, Offset = 1, Offset = 2, ….] Message offsets are logical and continuous.

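- 20. Kafka 0.8 Message Format. [Diagram: log positions 0 to 7. A compressed set of 3 messages sits at Offset = 2, a compressed set of 2 messages at Offset = 4, and a newly arriving set ends up at Offset = 7.] Brokers need to assign internal message offsets on receiving a compressed message. A compressed (wrapper) message's offset is the largest offset among its internal messages.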

- 21. Kafka 0.8 Message Format. [Log: the 3-message set at Offset = 2 and the 2-message set at Offset = 4.] Next offset is 5.

- 22. Step 1: decompress the incoming message set. Next offset is 5.

- 23. Step 2: assign offsets 5, 6, 7 to its internal messages.

- 24. Step 3: re-compress; the wrapper message gets Offset = 7.

- 25. Step 4: append the wrapper to the log.
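The broker-side steps on slides 20 to 25 can be sketched as follows. This is a simplified Python model under stated assumptions. The producer's message set is a zlib-compressed JSON list, which is a made-up encoding rather than Kafka's. `LogicalLog` and `compress_batch` are hypothetical names. The model reproduces the slides' numbers: sets of 3, 2 and 3 messages get wrapper offsets 2, 4 and 7.

```python
import json
import zlib
from dataclasses import dataclass, field


def compress_batch(values: list) -> bytes:
    # Producer side (hypothetical encoding): a JSON list compressed with zlib.
    return zlib.compress(json.dumps(values).encode())


@dataclass
class LogicalLog:
    # Each entry is (wrapper_offset, compressed internal messages).
    entries: list = field(default_factory=list)
    next_offset: int = 0

    def append_compressed(self, payload: bytes) -> int:
        # 1. Decompress the incoming message set.
        values = json.loads(zlib.decompress(payload))
        if not values:
            raise ValueError("empty message set")
        # 2. Assign consecutive logical offsets to the internal messages.
        inner = [{"offset": self.next_offset + i, "value": v}
                 for i, v in enumerate(values)]
        self.next_offset += len(inner)
        # 3. Re-compress; the wrapper takes the largest internal offset.
        wrapper_offset = inner[-1]["offset"]
        recompressed = zlib.compress(json.dumps(inner).encode())
        # 4. Append the wrapper to the log.
        self.entries.append((wrapper_offset, recompressed))
        return wrapper_offset


log = LogicalLog()
log.append_compressed(compress_batch(["m0", "m1", "m2"]))         # -> 2
log.append_compressed(compress_batch(["m3", "m4"]))               # -> 4
print(log.append_compressed(compress_batch(["m5", "m6", "m7"])))  # 7
```

The decompress and re-compress steps are the extra CPU cost mentioned on the "Shifting Bottleneck" slide. The payoff is that offsets become dense, so a consumer's lag is simply `log.next_offset - committed_offset`.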

- 26. Example: Centralized Data Pipeline. [Diagram: KV-Store, Doc-Store, RDBMS, Tracking Logs / Metrics, Hadoop / DW, Monitoring, Rec. Engine, Social Graph, Searching, Security, … all connected through Apache Kafka.]

- 27. Shifting Bottleneck
  - Predominantly network in 0.7
    - Just bytes-in, bytes-out for brokers
  - Still network in 0.8, but tilting towards CPU / storage
    - More CPU cost due to decompressing / re-compressing
    - Data replication and the consensus protocol: one message is now copied X times for replication (X being the replication factor)

- 28. A Short History
  - 2010.10: First commit of Kafka
  - 2011.07: Enters Apache Incubator
  - Release 0.7.0: compression, mirror-maker
  - 2012.10: Graduated to top-level project
  - Release 0.8.0: intra-cluster replication
  - 2014.11: Confluent founded
  - Release 0.8.2: new producer
  - Release 0.9.0: new consumer, quota, security

- 29-32. One naughty client can bother everyone...

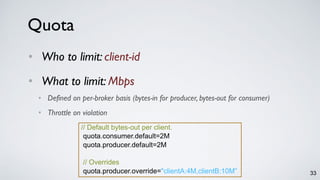

- 33. Quota
  - Who to limit: client-id
  - What to limit: data rate (bytes per second)
  - Defined on a per-broker basis (bytes-in for producers, bytes-out for consumers)
  - Throttle on violation
  - Example broker configuration:
    - `quota.consumer.default=2M` (default bytes-out per consumer client)
    - `quota.producer.default=2M` (default bytes-in per producer client)
    - `quota.producer.override="clientA:4M,clientB:10M"` (per-client overrides)
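A minimal sketch of per-client byte-rate throttling, assuming a simple fixed one-second window. Kafka's real quota manager uses sampled rate metrics, and its delay calculation differs in detail. `ByteRateQuota` and its methods are hypothetical. The idea matches the slide: when a client exceeds its quota, delay it just long enough that its average rate falls back to the limit.

```python
class ByteRateQuota:
    """Hypothetical per-client byte-rate quota over a fixed window."""

    def __init__(self, default_rate: float, overrides=None, window_s: float = 1.0):
        self.default_rate = default_rate         # bytes per second
        self.overrides = dict(overrides or {})   # client-id -> bytes per second
        self.window_s = window_s
        self.used = {}                           # client-id -> bytes in current window

    def quota_for(self, client_id: str) -> float:
        return self.overrides.get(client_id, self.default_rate)

    def record(self, client_id: str, nbytes: int) -> float:
        """Record traffic; return the throttle delay in seconds (0.0 if within quota)."""
        used = self.used.get(client_id, 0) + nbytes
        self.used[client_id] = used
        rate = self.quota_for(client_id)
        allowed = rate * self.window_s
        if used <= allowed:
            return 0.0
        # Delay so that used / (window + delay) == rate.
        return (used - allowed) / rate

    def new_window(self) -> None:
        self.used.clear()


M = 1_000_000  # assumption: "2M" means 2,000,000 bytes/s
quotas = ByteRateQuota(2 * M, {"clientA": 4 * M, "clientB": 10 * M})
print(quotas.record("clientX", 1_500_000))  # 0.0
print(quotas.record("clientX", 1_500_000))  # 0.5
```

Delaying the client, rather than rejecting its requests, keeps well-behaved clients unaffected and slows only the "naughty" one.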

- 34. Security
  - Authentication (SSL) and authorization
    - Who (client-id) can do what (create, read, write, etc.)
  - Minor impact on throughput
    - Forgoes the “zero-copy” optimization
    - CPU overhead to decrypt / encrypt
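Authorization comes down to checking whether a principal may perform an operation on a resource. Below is a deliberately simplified sketch. The `Acl` record and the `authorize` function are hypothetical, and the slide's client-id is used as the principal. The sketch follows two rules that Kafka's ACL authorizer also applies: deny rules override allow rules, and a request with no matching rule is denied. Real ACLs also carry host, resource-type and pattern fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    principal: str   # client identity, or "*" for everyone
    operation: str   # e.g. "create", "read", "write"
    resource: str    # e.g. a topic name, or "*" for all topics
    allow: bool = True


def authorize(acls, principal: str, operation: str, resource: str) -> bool:
    matching = [a for a in acls
                if a.principal in (principal, "*")
                and a.operation == operation
                and a.resource in (resource, "*")]
    if any(not a.allow for a in matching):
        return False                        # an explicit deny always wins
    return any(a.allow for a in matching)   # no matching rule -> denied


acls = [
    Acl("clientA", "write", "ads-clicks"),
    Acl("*", "read", "*"),
    Acl("clientB", "read", "billing", allow=False),
]
print(authorize(acls, "clientA", "write", "ads-clicks"))  # True
print(authorize(acls, "clientB", "read", "billing"))      # False
```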

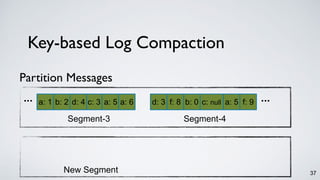

- 35. Key-based Log Compaction. [Diagram: a partition's messages stored in segments …, Segment-3, Segment-4, Segment-5, ….]

- 36. Key-based Log Compaction. [Diagram: Segment-3 holds the keyed messages a: 1, b: 2, c: 3, d: 4, a: 5, a: 6; Segment-4 holds d: 3, f: 8, b: 0, c: null, a: 5, f: 9, ….]

- 37. Key-based Log Compaction. A new segment is created for the compacted output.

- 38. Key-based Log Compaction. Segment-3's messages are copied into the new segment.

- 39-41. Key-based Log Compaction. As Segment-4 is scanned, later values for the same keys replace the earlier ones in the new segment.

- 42-43. Key-based Log Compaction. Result: the new segment keeps only the latest value per key: d: 3, b: 0, a: 5, f: 9. Key c, whose latest value is null, is dropped.
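The compaction rule on these slides fits in a few lines: keep only the latest value for each key, and drop keys whose latest value is null. This Python sketch simplifies in two ways. It works on an in-memory list rather than on segment files. It also drops tombstones immediately, whereas real Kafka keeps null tombstones for a configurable period (`delete.retention.ms`) so that consumers can observe deletes. The input uses the keys and values shown for Segment-3 and Segment-4.

```python
def compact(records):
    """Keep the latest value per key; drop keys whose latest value is None (a tombstone).

    `records` is a list of (key, value) pairs in log order, oldest first.
    Survivors keep the relative order of their last write.
    """
    latest = {}
    for position, (key, value) in enumerate(records):
        latest[key] = (position, value)
    survivors = sorted(latest.items(), key=lambda item: item[1][0])
    return [(key, value) for key, (_, value) in survivors if value is not None]


segment3 = [("a", 1), ("b", 2), ("c", 3), ("d", 4), ("a", 5), ("a", 6)]
segment4 = [("d", 3), ("f", 8), ("b", 0), ("c", None), ("a", 5), ("f", 9)]
print(compact(segment3 + segment4))  # [('d', 3), ('b', 0), ('a', 5), ('f', 9)]
```

Log compaction depends on the logical offsets introduced in 0.8: surviving messages keep their original offsets, so consumer positions remain valid after compaction.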

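- 44. Example: Data Store Geo-Replication. [Diagram: Region 1 and Region 2 each run user apps, local stores and Apache Kafka. User apps write to and read from their local stores, changes are appended to the Kafka log, the log is mirrored between regions, and each region applies the log to its local stores.]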

- 45. Shifting Bottleneck
  - Predominantly network in 0.7
    - Just bytes-in, bytes-out for brokers
  - Storage and network in 0.8
    - 1Gbps NICs
  - Increasing CPU in 0.9
    - New hardware since 2015: bigger disks, XFS, 10Gbps NICs, …
    - De(re)compression, de(en)cryption, compaction, coordination, etc.

- 46. A Short History
  - 2010.10: First commit of Kafka
  - 2011.07: Enters Apache Incubator
  - Release 0.7.0: compression, mirror-maker
  - 2012.10: Graduated to top-level project
  - Release 0.8.0: intra-cluster replication
  - 2014.11: Confluent founded
  - Release 0.8.2: new producer
  - Release 0.9.0: new consumer, quota, security
  - Release 0.10.0: timestamps, rack awareness

- 47. Coarsened “Time” under Kafka 0.9. [Diagram: a partition's messages in Segment-3, Segment-4 and Segment-5.] Message time is the mtime of the segment file, so there is only one timestamp per segment.

- 48. Kafka 0.10 Message Format. [Diagram: messages of M bytes and N bytes at Offset = 0, 1, 2, carrying Timestamp = 25, 55 and 60 respectively.]

- 49. Finer “Time” in Kafka 0.10. [Diagram: Segment-3, Segment-4 and Segment-5 each get a (time, offset) index.] Timestamp per message (create time) or per message set (append time).

- 50. Finer “Time” in Kafka 0.10: finer-grained time-based lookup (fetch offset request).

- 51. Finer “Time” in Kafka 0.10: more accurate time-based log rolling / log retention.
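A time index turns "which offset corresponds to time T?" into a binary search. The sketch below is hypothetical and assumes the index entries are sorted by timestamp. Kafka keeps its index sorted by adding an entry only when the largest timestamp seen so far increases. The function returns the earliest offset whose timestamp is at or after the target, which matches the semantics of the Java consumer's `offsetsForTimes`. The second function illustrates time-based retention: a segment becomes deletable once its newest timestamp is older than the retention period.

```python
import bisect
from typing import List, Optional, Tuple


def offset_for_time(index: List[Tuple[int, int]], target_ts: int) -> Optional[int]:
    """Earliest offset whose timestamp is >= target_ts, or None if there is none.

    `index` holds (timestamp, offset) pairs sorted by timestamp.
    """
    timestamps = [ts for ts, _ in index]
    i = bisect.bisect_left(timestamps, target_ts)
    return index[i][1] if i < len(index) else None


def expired_segments(segments: List[Tuple[str, int]], now_ms: int,
                     retention_ms: int) -> List[str]:
    """Names of segments whose largest timestamp is older than the retention period."""
    return [name for name, largest_ts in segments if now_ms - largest_ts > retention_ms]


# Timestamps from the 0.10 message-format slide: offsets 0, 1, 2 at times 25, 55, 60.
index = [(25, 0), (55, 1), (60, 2)]
print(offset_for_time(index, 40))  # 1
print(offset_for_time(index, 61))  # None
```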

- 52. A Short History
  - 2016.04: First Kafka Summit in San Francisco
  - Release 0.9.0: Kafka Connect
  - Release 0.10.0: Kafka Streams

- 53. Kafka Streams (0.10+)
  - New client library besides producer and consumer
  - Powerful yet easy-to-use
    - Event-at-a-time, stateful
    - Windowing with out-of-order handling
    - Highly scalable, distributed, fault tolerant
    - and more... [BIRTE 2015]

- 54. Anywhere, anytime. Ok. Ok. Ok. Ok.

- 56. Processor Topology. [Diagram: a Kafka Streams processor topology that reads from and writes to Kafka.]

- 57. Stream Partitions and Tasks. [Diagram: Kafka Topic A and Kafka Topic B, each with partitions P1 and P2.]

- 58. Stream Partitions and Tasks. [Diagram: the partitions of Kafka Topic A and Kafka Topic B feeding a processor topology.]

- 59. Stream Partitions and Tasks. [Diagram: Kafka Topic A and Kafka Topic B.]

- 60. Stream Threads. [Diagram: application instances MyApp.1 and MyApp.2 run Task1 and Task2 over Kafka Topic A and Kafka Topic B.]
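The partition-to-task mapping can be sketched as follows. In this hypothetical Python model, partition Pn of every input topic goes to task n, which is why the input topics must have the same number of partitions. Tasks are then spread over application instances. Kafka Streams' real assignor is more elaborate: it balances load and tries to keep tasks where their state already lives. The round-robin used here is only an illustration.

```python
def create_tasks(partition_counts: dict) -> dict:
    """Map task name -> list of (topic, partition) it owns.

    Partition n of every input topic is grouped into the same task, so all
    input topics must have the same number of partitions.
    """
    counts = set(partition_counts.values())
    if len(counts) != 1:
        raise ValueError("input topics must have the same number of partitions")
    n = counts.pop()
    return {
        f"Task{i}": [(topic, f"P{i}") for topic in sorted(partition_counts)]
        for i in range(1, n + 1)
    }


def assign_tasks(tasks: dict, instances: list) -> dict:
    """Spread tasks over application instances round-robin."""
    assignment = {instance: [] for instance in instances}
    for i, task in enumerate(tasks):
        assignment[instances[i % len(instances)]].append(task)
    return assignment


tasks = create_tasks({"topic-A": 2, "topic-B": 2})
print(tasks["Task1"])  # [('topic-A', 'P1'), ('topic-B', 'P1')]
print(assign_tasks(tasks, ["MyApp.1", "MyApp.2"]))
# {'MyApp.1': ['Task1'], 'MyApp.2': ['Task2']}
```

Because a task is the unit of work, scaling out means starting another instance; tasks, along with their partitions, move to it without changing the topology.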

- 61. But how do you get data into / out of Kafka?


- 63. Connectors (0.9+)
  - 45+ since first release
  - 30 from & partners

- 64. A Short History
  - 2016.04: First Kafka Summit in San Francisco
  - Release 0.9.0: Kafka Connect
  - Release 0.10.0: Kafka Streams
  - 2017.08: Apache Kafka Goes 1.0
  - Release 0.11.0: Exactly-Once

- 65. Stream Processing with Kafka. [Diagram: Your App reads Ads Clicks and Ads Displays, processes them with local state, and writes Billing Updates and Fraud Suspects.]

- 66. Stream Processing with Kafka. [Same diagram: the output writes are acked, then the consumed input is committed.]

- 67. Error Scenario #1: Duplicate Writes. [Diagram: a Streams app reads from Kafka Topics A and B, processes with state, and writes to Kafka Topics C and D; each write is acked.]

- 68. Error Scenario #1: Duplicate Writes. With the producer config retries = N, a write whose ack is lost is sent again, so the same output can be written twice.

- 69. Error Scenario #2: Re-process. [Diagram: the Streams app processes input, updates its state, receives acks for its output writes, and then commits its input position.]

- 70. Error Scenario #2: Re-process. If the app fails before that commit, it restarts from the last committed position and processes the same input again, updating state and writing output a second time.

- 71. Exactly-Once: an application property for stream processing, such that for each received record, its processing results are reflected exactly once, even under failures.

- 72-74. Life before 0.11: At-least-once + Dedup. [Diagram: the app processes input from Kafka Topics A and B with state, writes to Kafka Topics C and D, receives the acks, then commits.]

- 75. Life before 0.11: At-least-once + Dedup. [Diagram: output records 2, 2, 3, 3, 4, 4, each written twice.]
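Before 0.11, applications typically removed such duplicates themselves. A minimal sketch follows, assuming every record carries a unique ID assigned upstream; the `dedup` helper is hypothetical. In a real application, the set of seen IDs must itself be stored durably and kept bounded (for example, by a time window), which this sketch ignores.

```python
def dedup(records, seen=None):
    """Drop records whose ID has already been seen.

    `records` is an iterable of (record_id, payload) pairs; `seen` is the set of
    IDs processed so far (kept in memory here, durable storage in practice).
    """
    seen = set() if seen is None else seen
    unique = []
    for record_id, payload in records:
        if record_id in seen:
            continue  # duplicate caused by a retry or a re-process
        seen.add(record_id)
        unique.append((record_id, payload))
    return unique


output = [(2, "x"), (2, "x"), (3, "y"), (3, "y"), (4, "z"), (4, "z")]
print(dedup(output))  # [(2, 'x'), (3, 'y'), (4, 'z')]
```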

- 76. Exactly-once, the Kafka Way! (0.11+)

- 77. Exactly-once, the Kafka Way! (0.11+)
  - Building blocks to achieve exactly-once
    - Idempotence: de-duplicated sends, in order, per partition
    - Transactions: atomic sends across multiple topic partitions
    - Kafka Streams: exactly-once enabled with a single knob
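Idempotence is what removes the duplicate write in error scenario #1. Roughly, the producer tags each batch with a producer ID and a per-partition sequence number, and the broker refuses to append a sequence number it has already seen. The sketch below is a hypothetical, heavily simplified broker-side check. Real brokers also track producer epochs and only remember metadata for the most recent batches of each producer.

```python
class PartitionLog:
    """Broker-side sketch of idempotent appends for one partition."""

    def __init__(self):
        self.records = []
        self.last_seq = {}  # producer ID -> last sequence number appended

    def append(self, producer_id: int, seq: int, value) -> str:
        last = self.last_seq.get(producer_id, -1)
        if seq <= last:
            # A retry of something already written: acknowledge, do not append again.
            return "DUPLICATE"
        if seq != last + 1:
            # A gap means an earlier batch went missing; reject to keep order.
            return "OUT_OF_ORDER"
        self.records.append(value)
        self.last_seq[producer_id] = seq
        return "OK"


log = PartitionLog()
print(log.append(producer_id=1, seq=0, value="a"))  # OK
print(log.append(producer_id=1, seq=0, value="a"))  # DUPLICATE (retry after a lost ack)
print(log.append(producer_id=1, seq=1, value="b"))  # OK
print(log.records)                                  # ['a', 'b']
```

In Kafka Streams, the single knob on the slide is the `processing.guarantee` setting, set to `exactly_once`. It combines idempotent producers with transactions so that output writes and the input offset commit succeed or fail together.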

- 79. Shifting Bottleneck, Continued
  - Storage and network in 0.7 / 0.8
  - Increasing CPU in 0.9 to 0.11
    - TLS, CRC, quotas, protocol down-conversion
    - Transaction coordination, etc.
  - Operational efficiency in 0.11+
    - Leader balancing, partition migration, cluster expansion, …
    - ZK dependency, multi-DC support, …

- 80. A Short History
  - 2016.04: First Kafka Summit in San Francisco
  - Release 0.9.0: Kafka Connect
  - Release 0.10.0: Kafka Streams
  - 2017.08: Apache Kafka Goes 1.0
  - Release 0.11.0: Exactly-Once
  - Release 1.0+: More security and operability (controller re-design, Java 9 with TLS / CRC, etc.)
  - Release 1.0+: Better scalability (JBOD, etc.)
  - Release 1.0+: Online evolvability (down-conversion optimization, etc.)

- 81. Example: Controller Re-design
  - One broker in a cluster acts as the controller
    - Monitors the liveness of brokers (via ZK)
    - Selects new leaders on broker failures
    - Communicates new leaders to brokers
  - The controller is re-elected on failures (via ZK)

- 82. Example: Controlled Shutdown. [Diagram: Broker-1, Broker-2 and Broker-3, with the leader (*) on Broker-1.] Broker-1 receives SIG_TERM; Zookeeper records ISR {1, 2, 3}.

- 83. Example: Controlled Shutdown. [Same state: ISR {1, 2, 3} in Zookeeper.]

- 84. Example: Controlled Shutdown. The ISR in Zookeeper shrinks from {1, 2, 3} to {2, 3}.

- 85. Example: Controlled Shutdown. The new ISR {2, 3} is propagated to all three brokers.

- 86-87. Example: Controlled Shutdown. Leadership (*) has moved to Broker-2; Zookeeper records ISR {2, 3}.

- 88. Issues with Controlled Shutdown (pre 1.1). [Diagram: ISR {2, 3} in Zookeeper and on each broker.]
  - Writes to ZK are serial. Impact: longer shutdown time
  - Communication of new leaders is not batched. Impact: client timeouts
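The leader move in the controlled-shutdown example can be sketched as below. This is a hypothetical Python model of the controller's bookkeeping, not Kafka's code. For each partition, it removes the stopping broker from the ISR and picks the first remaining ISR member as the new leader. It then groups the resulting leader/ISR updates into one batch per receiving broker, which is the batching the slide says was missing before 1.1. The model assumes every affected partition has another in-sync replica.

```python
from collections import defaultdict


def controlled_shutdown(partitions: dict, stopping: int):
    """Move leadership away from `stopping` and batch the updates per broker.

    `partitions` maps partition name -> {"leader": broker_id, "isr": [broker_ids]}.
    Returns (new_state, batches), where batches maps broker_id -> list of
    (partition, new_leader, new_isr) updates to send in a single request.
    """
    new_state = {}
    batches = defaultdict(list)
    for name, state in partitions.items():
        if stopping not in state["isr"]:
            new_state[name] = state
            continue
        isr = [b for b in state["isr"] if b != stopping]
        if not isr:
            raise ValueError(f"{name} has no other in-sync replica")
        leader = isr[0] if state["leader"] == stopping else state["leader"]
        new_state[name] = {"leader": leader, "isr": isr}
        for broker in isr:
            batches[broker].append((name, leader, isr))
    return new_state, dict(batches)


state = {
    "topic1-part1": {"leader": 1, "isr": [1, 2, 3]},
    "topic1-part2": {"leader": 2, "isr": [2, 3, 1]},
    "topic1-part3": {"leader": 3, "isr": [3, 1, 2]},
}
new_state, batches = controlled_shutdown(state, stopping=1)
print(new_state["topic1-part1"])                 # {'leader': 2, 'isr': [2, 3]}
print({b: len(u) for b, u in batches.items()})   # {2: 3, 3: 3}
```

With thousands of partitions per broker, sending one request per broker instead of one per partition is what keeps the leader hand-off short enough to avoid client timeouts.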

- 89. Results for Controlled Shutdown (post 1.1)
  - 5 Zookeeper nodes, 5 brokers on different racks
  - 25k topics, 1 partition per topic, 2 replicas
  - 10k partitions per broker

  | Metric | Kafka 1.0.0 | Kafka 1.1.0 |
  |---|---|---|
  | Controlled shutdown time | 6.5 minutes | 3 seconds |

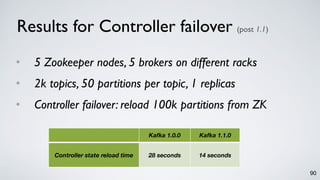

- 90. Results for Controller Failover (post 1.1)
  - 5 Zookeeper nodes, 5 brokers on different racks
  - 2k topics, 50 partitions per topic, 1 replica
  - Controller failover: reload 100k partitions from ZK

  | Metric | Kafka 1.0.0 | Kafka 1.1.0 |
  |---|---|---|
  | Controller state reload time | 28 seconds | 14 seconds |

- 91. A Short History
  - 2016.04: First Kafka Summit in San Francisco
  - Release 0.9.0: Kafka Connect
  - Release 0.10.0: Kafka Streams
  - 2017.08: Apache Kafka Goes 1.0
  - Release 0.11.0: Exactly-Once
  - Release 1.0+: More security and operability (controller re-design, Java 9 with TLS / CRC, etc.)
  - Release 1.0+: Better scalability (JBOD, etc.)
  - Release 1.0+: Online evolvability (down-conversion optimization, etc.)
  - Future:
    - Global, Infinite and Cloud-Native Kafka

- 92. What have we learned?

- 93. Lesson 1: Build evolvable systems

- 94. Upgrading your Kafka cluster is like...

- 95. Kafka: Evolvable System
  - Zero downtime
    - Maintenance outage? No such thing.
  - All protocols versioned
    - Brokers can talk to older-versioned clients
    - And vice versa since 0.10.2!
  - Upgrades should need no more than rolling bounces
    - Staging before production
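Versioned protocols are what make rolling upgrades possible. Since 0.10.0 a client can ask a broker which versions of each request type it supports, using the ApiVersions request. Since 0.10.2 the Java client uses that information to talk to older brokers as well. The hypothetical helper below shows the core rule under that model: use the highest version both sides support, and fail only when the two ranges do not overlap.

```python
def negotiate_version(client_range: tuple, broker_range: tuple) -> int:
    """Pick the highest protocol version supported by both sides.

    Each range is an inclusive (min_version, max_version) pair for one request type.
    """
    low = max(client_range[0], broker_range[0])
    high = min(client_range[1], broker_range[1])
    if low > high:
        raise ValueError(f"no common version: client {client_range}, broker {broker_range}")
    return high


print(negotiate_version((0, 5), (0, 3)))  # 3: a newer client talking to an older broker
print(negotiate_version((0, 2), (0, 7)))  # 2: an older client talking to a newer broker
```

Because each request type is negotiated independently, brokers and clients can be upgraded one at a time in any order, which is what makes rolling bounces sufficient.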

- 96. Lesson 2: What gets measured gets fixed


- 98. Lesson 3: APIs stay forever

- 99-103. The Story of KAFKA-1481

- 104. Lesson 4: Services need gatekeepers

- 105. Remember This?

- 106. Multi-tenancy Services (in the Cloud)
  - Security from the ground up
    - End-to-end encryption
    - ACL / RBAC definitions
  - Resources under control
    - Quotas on byte rate / request rate, CPU resources
    - Capacity preservation / allocation to quotas
    - Limit on num.connections, etc.

- 107. So What is Kafka, Really?

- 108. What is Kafka, Really?
  - a scalable pub-sub messaging system.. [NetDB 2011]

- 109. Example: Pub-Sub Messaging. [Diagram: Tracking Logs / Metrics flow through Apache Kafka into Hadoop / DW, ….]

- 110. What is Kafka, Really?
  - a scalable pub-sub messaging system.. [NetDB 2011]
  - a real-time data pipeline.. [Hadoop Summit 2013]

- 111. Example: Centralized Data Pipeline. [Diagram: KV-Store, Doc-Store, RDBMS, Tracking Logs / Metrics, Hadoop / DW, Monitoring, Rec. Engine, Social Graph, Searching, Security, … all connected through Apache Kafka.]

- 112. What is Kafka, Really?
  - a scalable pub-sub messaging system.. [NetDB 2011]
  - a real-time data pipeline.. [Hadoop Summit 2013]
  - a distributed and replicated log.. [VLDB 2015]

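- 113. Example: Data Store Geo-Replication. [Diagram: Region 1 and Region 2 each run user apps, local stores and Apache Kafka. User apps write to and read from their local stores, changes are appended to the Kafka log, the log is mirrored between regions, and each region applies the log to its local stores.]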

- 114. What is Kafka, Really?
  - a scalable pub-sub messaging system.. [NetDB 2011]
  - a real-time data pipeline.. [Hadoop Summit 2013]
  - a distributed and replicated log.. [VLDB 2015]
  - a unified data integration stack.. [CIDR 2015]

- 116. What is Kafka, Really?
  - a scalable pub-sub messaging system.. [NetDB 2011]
  - a real-time data pipeline.. [Hadoop Summit 2013]
  - a distributed and replicated log.. [VLDB 2015]
  - a unified data integration stack.. [CIDR 2015]
  - All of them!

- 117. Kafka: Streaming Platform
  - Publish / Subscribe
    - Move data around as online streams
  - Store
    - “Source-of-truth” continuous data
  - Process
    - React to / process data in real time

- 118. Kafka Adoption Worldwide
  - 6 of the top 10 travel companies
  - 8 of the top 10 insurance companies
  - 7 of the top 10 global banks
  - 9 of the top 10 telecom companies

- 120. THANKS! Guozhang Wang | @guozhangwang
