Apache Kafka - Scalable Message-Processing and more !

- 1. BASEL BERN BRUGG DÜSSELDORF FRANKFURT A.M. FREIBURG I.BR. GENF HAMBURG KOPENHAGEN LAUSANNE MÜNCHEN STUTTGART WIEN ZÜRICH Apache Kafka Scalable Message Processing and more! Guido Schmutz – 10.5.2017 @gschmutz guidoschmutz.wordpress.com

- 2. Guido Schmutz Working at Trivadis for more than 20 years Oracle ACE Director for Fusion Middleware and SOA Consultant, Trainer Software Architect for Java, Oracle, SOA and Big Data / Fast Data Head of Trivadis Architecture Board Technology Manager @ Trivadis More than 30 years of software development experience Contact: [email protected] Blog: http://guidoschmutz.wordpress.com Slideshare: http://www.slideshare.net/gschmutz Twitter: gschmutz Apache Kafka - Scalable Message Processing and more!

- 3. COPENHAGEN MUNICH LAUSANNE BERN ZURICH BRUGG GENEVA HAMBURG DÜSSELDORF FRANKFURT STUTTGART FREIBURG BASEL VIENNA With over 600 specialists and IT experts in your region. 14 Trivadis branches and more than 600 employees 200 Service Level Agreements Over 4,000 training participants Research and development budget: CHF 5.0 million Financially self-supporting and sustainably profitable Experience from more than 1,900 projects per year at over 800 customers

- 4. Agenda 1. Introduction & Motivation 2. Kafka Core 3. Kafka Connect 4. Kafka Streams 5. Kafka and "Big Data" / "Fast Data" Ecosystem 6. Kafka in Enterprise Architecture 7. Confluent Data Platform 8. Summary

- 6. Apache Kafka - Overview Distributed publish-subscribe messaging system Designed for processing of real time activity stream data (logs, metrics collections, social media streams, …) Initially developed at LinkedIn, now part of Apache Does not use JMS API and standards Kafka maintains feeds of messages in topics

- 7. Apache Kafka - Motivation LinkedIn’s motivation for Kafka was: • "A unified platform for handling all the real-time data feeds a large company might have." Must haves • High throughput to support high volume event feeds • Support real-time processing of these feeds to create new, derived feeds. • Support large data backlogs to handle periodic ingestion from offline systems • Support low-latency delivery to handle more traditional messaging use cases • Guarantee fault-tolerance in the presence of machine failures

- 9. Apache Kafka - Unix Analogy $ cat < in.txt | grep "kafka" | tr a-z A-Z > out.txt Kafka Connect API Kafka Connect APIKafka Streams API Kafka Core (Cluster) Source: Confluent

- 10. Kafka Core

- 11. Kafka High Level Architecture The who is who • Producers write data to brokers. • Consumers read data from brokers. • All this is distributed. The data • Data is stored in topics. • Topics are split into partitions, which are replicated. Kafka Cluster Consumer Consumer Consumer Producer Producer Producer Broker 1 Broker 2 Broker 3 Zookeeper Ensemble

- 12. Apache Kafka - Architecture Kafka Broker Movement Processor Movement Topic Engine-Metrics Topic 1 2 3 4 5 6 Engine Processor1 2 3 4 5 6 Truck

- 13. Apache Kafka - Architecture Kafka Broker Movement Processor Movement Topic Engine-Metrics Topic 1 2 3 4 5 6 Engine Processor Partition 0 1 2 3 4 5 6 Partition 0 1 2 3 4 5 6 Partition 1 Movement Processor Truck

- 14. Apache Kafka Kafka Broker 1 Movement Processor Truck Movement Topic P 0 Movement Processor 1 2 3 4 5 P 2 1 2 3 4 5 Kafka Broker 2 Movement Topic P 2 1 2 3 4 5 P 1 1 2 3 4 5 Kafka Broker 3 Movement Topic P 0 1 2 3 4 5 P 1 1 2 3 4 5 Movement Processor

- 15. Kafka Topics Creating a topic • Command line interface • Using AdminUtils.createTopic method • Auto-create via auto.create.topics.enable = true Modifying a topic https://kafka.apache.org/documentation.html#basic_ops_modify_topic Deleting a topic • Command Line interface $ kafka-topics.sh –zookeeper zk1:2181 --create --topic my.topic –-partitions 3 –-replication-factor 2 --config x=y

- 16. Kafka Producer • Write Ahead Log / Commit Log • Producers always append to tail (think append to file) • Preserves Order of messages within partition Kafka Broker Movement Topic 1 2 3 4 5 Truck 6 6

- 17. Kafka Producer – High Level Overiew Producer Client Kafka Broker Movement Topic Partition 0 Partitioner Movement Topic Serializer Producer Record message 1 message 2 message 3 message 4 Batch Movement Topic Partition 1 message 1 message 2 message 3 Batch Partition 0 Partition 1 Retry ? Fail ? Topic Message [ Partition ] [ Key ] Value yes yes if can’t retry: throw exception successful: return metadata Compression (optional)

- 18. Kafka Producer - Durability Guarantees Producer can configure acknowledgements Value Description Throughput Latency Durability 0 • Producer doesn’t wait for leader high low low (no guarantee) 1 (default) • Producer waits for leader • Leader sends ack when message written to log • No wait for followers medium medium medium (leader) all (-1) • Producer waits for leader • Leader sends ack when all In-Sync Replica have acknowledged low high high (ISR)

- 19. Kafka Producer - Java API Constructing a Kafka Producer private Properties kafkaProps = new Properties(); kafkaProps.put("bootstrap.servers","broker1:9092,broker2:9092"); kafkaProps.put("key.serializer", "...StringSerializer"); kafkaProps.put("value.serializer", "...StringSerializer"); producer = new KafkaProducer<String, String>(kafkaProps);

- 20. Kafka Producer - Java API Sending Message Synchronously (no control if message has been sent successful) Sending Message Synchronously (wait until reply from Kafka arrives back) ProducerRecord<String, String> record = new ProducerRecord<>("topicName", "Key", "Value"); try { producer.send(record); } catch (Exception e) {} ProducerRecord<String, String> record = new ProducerRecord<>("topicName", "Key", "Value"); try { producer.send(record).get(); } catch (Exception e) {}

- 21. Kafka Producer - Java API Sending Message Asynchronously private class DemoProducerCallback implements Callback { @Override public void onCompletion(RecordMetadata recordMetadata, Exception e) { if (e != null) { e.printStackTrace(); } } } ProducerRecord<String, String> record = new ProducerRecord<>("topicName", "key", "value"); producer.send(record, new DemoProducerCallback());

- 22. Kafka Consumer - Partition offsets Offset: messages in the partitions are each assigned a unique (per partition) and sequential id called the offset • Consumers track their pointers via (offset, partition, topic) tuples Consumer Group A Consumer Group B

- 23. Kafka Consumer - Consumer Groups Kafka Movement Topic Partition 0 Consumer Group 1 Consumer 1 Partition 1 Partition 2 Partition 3 Kafka Movement Topic Partition 0 Consumer Group 1 Partition 1 Partition 2 Partition 3 Kafka Movement Topic Partition 0 Consumer Group 1 Partition 1 Partition 2 Partition 3 Kafka Movement Topic Partition 0 Consumer Group 1 Partition 1 Partition 2 Partition 3 Consumer 1 Consumer 2 Consumer 3 Consumer 4 Consumer 1 Consumer 2 Consumer 3 Consumer 4 Consumer 5 Consumer 1 Consumer 2 2 Consumers / each get messages from 2 partitions 1 Consumer / get messages from all partitions 5 Consumers / one gets no messages 4 Consumers / each get messages from 1 partition

- 24. Kafka Consumer - Consumer Groups it’s very common to have multiple applications that read data from the same topic each application should get all of the messages assign a unique consumer group to each application number of consumers (threads) can be different Kafka scales to large number of consumers without impacting performance Kafka Movement Topic Partition 0 Consumer Group 1 Consumer 1 Partition 1 Partition 2 Partition 3 Consumer 2 Consumer 3 Consumer 4 Consumer Group 2 Consumer 1 Consumer 2

- 25. Kafka Consumer - Java API Constructing a Kafka Consumer private Properties kafkaProps = new Properties(); kafkaProps.put("bootstrap.servers","broker1:9092,broker2:9092"); kafkaProps.put("group.id","MovementsConsumerGroup"); kafkaProps.put("key.serializer", "...StringSerializer"); kafkaProps.put("value.serializer", "...StringSerializer"); consumer = new KafkaConsumer<String, String>(kafkaProps);

- 26. Kafka Consumer - Java API Kafka Consumer Poll Loop (with synchronous offset commit) consumer.subscribe(Collections.singletonList("topic")); try { while (true) { ConsumerRecords<String, String> records = consumer.poll(100); for (ConsumerRecord<String, String> record : records) { // process message, available information: // record.topic(), record.partition(), record.offset(), // record.key(), record.value()); } consumer.commitSync(); } } finally { consumer.close(); }

- 27. Data Retention – 3 options 1. Never 2. Time based (TTL) log.retention.{ms | minutes | hours} 3. Size based log.retention.bytes 4. Log compaction based (entries with same key are removed) kafka-topics.sh --zookeeper localhost:2181 --create --topic customers --replication-factor 1 --partitions 1 --config cleanup.policy=compact

- 28. Apache Kafka – Some numbers Kafka at LinkedIn => over 1800+ broker machines / 79K+ Topics Kafka Performance at our own infrastructure => 6 brokers (VM) / 1 cluster • 445’622 messages/second • 31 MB / second • 3.0405 ms average latency between producer / consumer 1.3 Trillion messages per day 330 Terabytes in/day 1.2 Petabytes out/day Peak load for a single cluster 2 million messages/sec 4.7 Gigabits/sec inbound 15 Gigabits/sec outbound http://engineering.linkedin.com/kafka/benchmarking-apache-kafka-2-million-writes-second-three-cheap-machines https://engineering.linkedin.com/kafka/running-kafka-scale

- 29. Kafka Connect

- 31. Kafka Connector Hub – Certified Connectors Source: http://www.confluent.io/product/connectors

- 32. Kafka Connector Hub – Additional Connectors Source: http://www.confluent.io/product/connectors

- 33. Kafka Connect – Twitter example ./connect-standalone.sh ../demo-config/connect-simple-source-standalone.properties ../demo-config/twitter-source.properties name=twitter-source connector.class=com.eneco.trading.kafka.connect.twitter.TwitterSourceConnector tasks.max=1 topic=tweets twitter.consumerkey=<consumer-key> twitter.consumersecret=<consumer-secret> twitter.token=<token> twitter.secret=<token-secret> track.terms=bigdata bootstrap.servers=localhost:9095,localhost:9096,localhost:9097 key.converter=org.apache.kafka.connect.storage.StringConverter value.converter=org.apache.kafka.connect.storage.StringConverter ...

- 34. Kafka Streams

- 35. Kafka Streams • Designed as a simple and lightweight library in Apache Kafka • no external dependencies on systems other than Apache Kafka • Part of open source Apache Kafka, introduced in 0.10+ • Leverages Kafka as its internal messaging layer • agnostic to resource management and configuration tools • Supports fault-tolerant local state • Event-at-a-time processing (not microbatch) with millisecond latency • Windowing with out-of-order data using a Google DataFlow-like model

- 36. Streams API in the context of Kafka Source: Confluent

- 37. Kafka and "Big Data" / "Fast Data" Ecosystem

- 38. Kafka and the Big Data / Fast Data ecosystem Kafka integrates with many popular products / frameworks • Apache Spark Streaming • Apache Flink • Apache Storm • Apache NiFi • Streamsets • Apache Flume • Oracle Stream Analytics • Oracle Service Bus • Oracle GoldenGate • Spring Integration Kafka Support • …Storm built-in Kafka Spout to consume events from Kafka

- 39. Kafka in "Enterprise Architecture"

- 40. Hadoop Clusterd Hadoop Cluster Big Data Cluster Traditional Big Data Architecture BI Tools Enterprise Data Warehouse Billing & Ordering CRM / Profile Marketing Campaigns File Import / SQL Import SQL Search Online & Mobile Apps Search NoSQL Parallel Batch Processing Distributed Filesystem • Machine Learning • Graph Algorithms • Natural Language Processing

- 41. Event Hub Event Hub Hadoop Clusterd Hadoop Cluster Big Data Cluster Event Hub – handle event stream data BI Tools Enterprise Data Warehouse Location Social Click stream Sensor Data Billing & Ordering CRM / Profile Marketing Campaigns Event Hub Call Center Weather Data Mobile Apps SQL Search Online & Mobile Apps Search Data Flow NoSQL Parallel Batch Processing Distributed Filesystem • Machine Learning • Graph Algorithms • Natural Language Processing

- 42. Hadoop Clusterd Hadoop Cluster Big Data Cluster Event Hub – taking Velocity into account Location Social Click stream Sensor Data Billing & Ordering CRM / Profile Marketing Campaigns Call Center Mobile Apps Batch Analytics Streaming Analytics Event Hub Event Hub Event Hub NoSQL Parallel Batch Processing Distributed Filesystem Stream Analytics NoSQL Reference / Models SQL Search Dashboard BI Tools Enterprise Data Warehouse Search Online & Mobile Apps File Import / SQL Import Weather Data

- 43. Container Hadoop Clusterd Hadoop Cluster Big Data Cluster Event Hub – Asynchronous Microservice Architecture Location Social Click stream Sensor Data Billing & Ordering CRM / Profile Marketing Campaigns Call Center Mobile Apps Event Hub Event Hub Event Hub Parallel Batch ProcessingDistributed Filesystem Microservice NoSQLRDBMS SQL Search BI Tools Enterprise Data Warehouse Search Online & Mobile Apps File Import / SQL Import Weather Data { } API

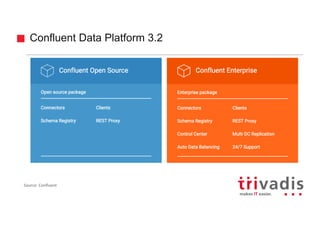

- 45. Confluent Data Platform 3.2 Source: Confluent

- 46. Confluent Data Platform 3.2 Source: Confluent

- 47. Confluent Enterprise – Control Center Source: Confluent

- 48. Summary

- 49. Summary • Kafka can scale to millions of messages per second, and more • Easy to start in a Proof of Concept (PoC), but more to invest to setup a production environment • Monitoring is key • Vibrant community and ecosystem • Fast paced technology • Confluent provides distribution and support for Apache Kafka • Oracle Event Hub Service offers a Kafka Managed Service

- 50. Guido Schmutz Technology Manager [email protected] @gschmutz guidoschmutz.wordpress.com

![Kafka Producer – High Level Overiew

Producer Client

Kafka Broker

Movement Topic

Partition 0

Partitioner

Movement Topic

Serializer

Producer Record

message 1

message 2

message 3

message 4

Batch

Movement Topic

Partition 1

message 1

message 2

message 3

Batch

Partition 0

Partition 1

Retry

?

Fail

?

Topic

Message

[ Partition ]

[ Key ]

Value

yes

yes

if can’t retry:

throw exception

successful:

return metadata

Compression (optional)](https://image.slidesharecdn.com/kafka-scalable-stream-processing-and-more-v1-170510192447/85/Apache-Kafka-Scalable-Message-Processing-and-more-17-320.jpg)