Apache Spark Introduction and Resilient Distributed Dataset basics and deep dive

- 1. © 2015 IBM Corporation Apache Hadoop Day 2015 Intro to Apache Spark: Lightning-Fast Cluster Computing

- 3. MapReduce Limitations
    - Lots of boilerplate, which makes MapReduce complex to program.
    - The disk-based approach is a poor fit for iterative use cases.
    - Batch processing is not suited to real-time workloads.
    - In short, there is no single solution, so people build specialized systems as workarounds.

- 4. Spark Goal
    - Batch, interactive, and streaming in a single framework.
    - Support batch, streaming, and interactive computations in a unified framework.
    - Make it easy to develop sophisticated algorithms (e.g., graph and ML algorithms).

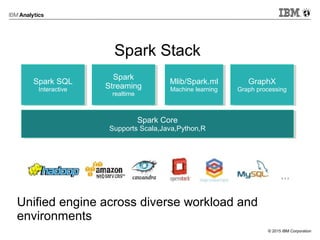

- 5. Spark Stack: a unified engine across diverse workloads and environments
    - Spark Core: supports Scala, Java, Python, and R
    - Spark SQL: interactive queries
    - Spark Streaming: real-time processing
    - MLlib / spark.ml: machine learning
    - GraphX: graph processing

- 6. Data processing landscape (diagram). Graph: Pregel (Google), Giraph (Apache), GraphLab (Dato), …

- 7. Data processing landscape (diagram, continued). Graph: Pregel (Google), Giraph (Apache), GraphLab (Dato). SQL: Dremel (Google), Drill (Apache), Impala (Cloudera), …

- 8. Data processing landscape (diagram, continued). Graph: Pregel (Google), Giraph (Apache), GraphLab (Dato). SQL: Dremel (Google), Drill (Apache), Impala (Cloudera). DAG: Tez (Apache). Stream: Storm (Apache), …

- 9. Data processing landscape (diagram, same systems shown scattered): Pregel, Giraph, GraphLab, Dremel, Drill, Impala, Tez, Storm, … Stop the arms race now!

- 10. Spark
    - Unifies batch, streaming, and interactive computation.
    - Easy to build sophisticated applications.
    - Supports iterative, graph-parallel algorithms.
    - Powerful APIs in Scala, Python, and Java.
    - Diagram components: Spark, Spark Streaming, Shark SQL, BlinkDB, GraphX, MLlib. Workload labels: streaming; batch and interactive; data-parallel and iterative; sophisticated algorithms.

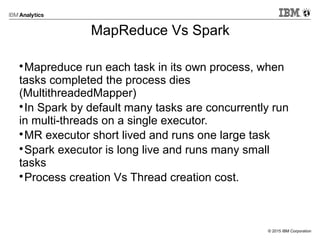

- 11. MapReduce vs. Spark
    - MapReduce runs each task in its own process; when the task completes, the process dies (MapReduce does offer MultithreadedMapper).
    - In Spark, by default, many tasks run concurrently as threads inside a single executor.
    - A MapReduce executor is short-lived and runs one large task; a Spark executor is long-lived and runs many small tasks.
    - The difference comes down to process-creation cost versus thread-creation cost.

- 12. Problems in Spark
    - Applications cannot share data (mostly RDDs in a SparkContext) without writing to external storage.
    - Resource allocation can be inefficient (see `spark.dynamicAllocation.enabled`).
    - Not exactly designed for interactive applications.

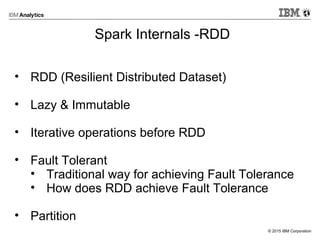

- 13. Spark Internals – RDD
    - RDD (Resilient Distributed Dataset)
    - Lazy and immutable
    - Iterative operations before RDDs
    - Fault tolerant
        - The traditional way of achieving fault tolerance
        - How an RDD achieves fault tolerance
    - Partition

- 14. Spark Internals – RDDs
    - Pipeline: `sc.textFile("hdfs://<input>").filter(_.startsWith("ERROR")).map(_.split(" ")(1)).saveAsTextFile("hdfs://<output>")`
    - Stage 1 (diagram): HDFS → HadoopRDD → FilteredRDD → MappedRDD → HDFS

- 15. Spark Internals – RDDs: Narrow vs. Wide Dependency
    - Narrow dependency: each partition of the parent is used by at most one partition of the child.
    - Wide dependency: multiple child partitions may depend on one parent partition.

- 16. Narrow/Shuffle Dependency – class diagram

- 17. Spark Internals – Job Scheduling (diagram)
    - RDD objects, e.g. `rdd1.join(rdd2).groupBy(…).filter(…)`
    - DAG Scheduler: splits the DAG into stages and tasks, and submits each stage as it becomes ready.
    - Task Scheduler: launches individual tasks.
    - Executor (task threads and Block Manager): executes tasks; stores and serves blocks.

- 18. Resource Allocation
    - Dynamic resource allocation
    - Resource allocation policy
        - Request policy
        - Remove policy

- 19. Request/Remove Policy
    - Request
        - Triggered when there are pending tasks waiting to be scheduled.
        - Spark requests executors in rounds.
        - Controlled by `spark.dynamicAllocation.schedulerBacklogTimeout` and `spark.dynamicAllocation.sustainedSchedulerBacklogTimeout`.
    - Remove
        - An executor is removed when it has been idle for more than `spark.dynamicAllocation.executorIdleTimeout` seconds.
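As a hedged illustration, the properties named on this slide could be set on a `SparkConf` roughly as follows. The property names are the ones from the slides; the application name, the timeout values, and the use of time-suffixed strings such as `"1s"` are placeholder assumptions, not recommendations. `spark.shuffle.service.enabled` is included on the assumption that dynamic allocation is paired with the external shuffle service mentioned on the next slide.

```scala
import org.apache.spark.SparkConf

object DynamicAllocationConfSketch {
  // Sketch only: every value below is an illustrative assumption, not a tuned setting.
  val conf: SparkConf = new SparkConf()
    .setAppName("dynamic-allocation-sketch") // hypothetical application name
    .set("spark.dynamicAllocation.enabled", "true")
    .set("spark.shuffle.service.enabled", "true")
    // Request policy: how long tasks may stay backlogged before executors are requested.
    .set("spark.dynamicAllocation.schedulerBacklogTimeout", "1s")
    .set("spark.dynamicAllocation.sustainedSchedulerBacklogTimeout", "1s")
    // Remove policy: how long an executor may sit idle before it is released.
    .set("spark.dynamicAllocation.executorIdleTimeout", "60s")
}
```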

- 20. Graceful Decommission of Executors
    - State before dynamic allocation
    - With dynamic allocation
    - Complexity increases with shuffle
    - External shuffle service
    - State of cached data, whether on disk or in memory

- 21. Fair Scheduler
    - What is fair scheduling?
    - How to enable the fair scheduler: `val conf = new SparkConf().setMaster(...).setAppName(...)`, then `conf.set("spark.scheduler.mode", "FAIR")`, then `val sc = new SparkContext(conf)`
    - Fair scheduler pools

- 22. RDD Deep Dive
    - RDD basics
    - How to create
    - RDD operations
    - Lineage
    - Partitions
    - Shuffle
    - Types of RDDs
    - Extending RDD
    - Caching in RDD

- 23. RDD Basics
    - RDD (Resilient Distributed Dataset): a distributed collection of objects.
    - Resilient: able to recompute missing partitions (e.g. after a node failure).
    - Distributed: split across multiple partitions.
    - Dataset: can contain any type, whether Python/Java/Scala objects or user-defined objects.
    - The fundamental unit of data in Spark.

- 24. RDD Basics – How to create (two ways)
    - Loading external datasets
        - Spark supports a wide range of sources.
        - HDFS data is accessed through Hadoop's InputFormat and OutputFormat.
        - Custom input/output formats are supported.
        - `val lineRDD = sc.textFile("hdfs:///path/to/Readme.md")`
        - Wildcards also work: `textFile("/my/directory/*")` or `textFile("/my/directory/*.gz")`
        - `SparkContext.wholeTextFiles` returns (filename, content) pairs.
    - Parallelizing a collection in the driver program
        - `val listRDD = sc.parallelize(List("spark", "meetup", "deepdive"))`

- 25. RDD Operations
    - Two types of operations: transformations and actions.
    - Transformations are lazy; nothing actually happens until an action is called.
    - An action triggers the computation.
    - An action returns values to the driver or writes data to external storage.

- 26. Lazy Evaluation
    - Transformations on an RDD are not performed immediately.
    - Spark internally records metadata to track each operation.
    - Loading data into an RDD is also evaluated lazily.
    - Lazy evaluation reduces the number of passes over the data by grouping operations.
    - In MapReduce, the burden is on the developer to merge operations into complex map functions.
    - If an RDD is not persisted, its complete lineage is recomputed every time it is used.
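The deferral described on this slide can be illustrated without Spark: a plain Scala `Iterator` also just records `filter` and `map` steps and runs them only when a terminal call consumes it. This is an analogy under that assumption, not Spark's implementation; the sample lines and the object name are made up for the sketch.

```scala
object LazyEvaluationSketch {
  // Returns (predicate calls before the "action", predicate calls after it).
  def run(): (Int, Int) = {
    var calls = 0
    val lines = List("ERROR disk full", "INFO started", "ERROR network down")

    // "Transformations": building the pipeline does not touch the data yet.
    val pipeline = lines.iterator
      .filter { line => calls += 1; line.startsWith("ERROR") }
      .map(_.toUpperCase)
    val before = calls

    // "Action": consuming the iterator finally runs filter and map in a single pass.
    pipeline.toList
    (before, calls)
  }
}
```

Here the filter predicate is not invoked at all until `toList` forces the pipeline, which mirrors how an RDD action triggers the recorded transformations.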

- 27. RDD In Action
    - Code: `sc.textFile("hdfs://file.txt").flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey(_ + _).collect()`
    - Sample input (diagram): "I scream you scream lets all scream for icecream!" and "I wish I were what I was when I wished I were what I am."
    - After `flatMap` and `map` (diagram): (I,1) (scream,1) (you,1) (scream,1) (lets,1) (all,1) (scream,1) (icecream,1)
    - After `reduceByKey` (diagram): (icecream,1) (scream,3) (you,1) (lets,1) (I,1) (all,1)
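To follow the data flow on this slide step by step, the same word count can be mimicked on ordinary Scala collections. This is a local stand-in under the assumption that `groupBy` plus a sum plays the role of `reduceByKey`; it does not use Spark, and the object and method names are hypothetical.

```scala
object WordCountSketch {
  // Plain Scala collections standing in for an RDD; no Spark required.
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split(" ")) // like rdd.flatMap: one record per word
      .map(word => (word, 1))           // like rdd.map: build (word, 1) pairs
      .groupBy(_._1)                    // local stand-in for shuffling by key
      .map { case (word, pairs) => word -> pairs.map(_._2).sum } // like reduceByKey(_ + _)
}
```

Calling `WordCountSketch.wordCount(Seq("I scream you scream lets all scream for icecream"))` yields a count of 3 for `scream` and 1 for every other word.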

- 28. Lineage Demo

- 29. RDD Partition
    - Partition definition
        - Partitions are fragments of an RDD.
        - Fragmentation allows Spark to execute in parallel.
        - Partitions are distributed across the cluster (Spark workers).
    - Partitioning
        - Impacts parallelism.
        - Impacts performance.

- 30. Importance of Partition Tuning
    - Too few partitions
        - Less concurrency and unused cores.
        - More susceptible to data skew.
        - Increased memory pressure for groupBy, reduceByKey, sortByKey, etc.
    - Too many partitions
        - Framework overhead (scheduling latency exceeds the time needed for the actual task).
        - Heavy CPU context switching.
    - Need a "reasonable number" of partitions
        - Commonly between 100 and 10,000 partitions.
        - Lower bound: at least ~2x the number of cores in the cluster.
        - Upper bound: ensure tasks take at least 100 ms.

- 31. How Spark Partitions Data
    - Input data partitioning
    - Shuffle transformations
    - Custom Partitioner

- 32. Partition - Input Data
    - Spark uses the same classes as Hadoop to perform input/output; `sc.textFile("hdfs://…")` invokes Hadoop's TextInputFormat.
    - Knobs that define the number of partitions:
        - `dfs.block.size`: default 128MB (Hadoop 2.0)
        - `numPartitions`: can be used to increase the number of partitions; the default is 0, which is treated as 1 partition
        - `mapreduce.input.fileinputformat.split.minsize`: default 1KB
    - Partition size = max(minSize, min(goalSize, blockSize)), where goalSize = totalInputSize / numPartitions
    - Worked examples (block size, numPartitions, minSize), all with 640MB total input:
        - 32MB, 0, 1KB (defaults): max(1KB, min(640MB, 32MB)) = 32MB per partition, so 20 partitions.
        - 32MB, 30, 1KB (want more partitions): goalSize = 640MB/30 ≈ 21.3MB, so max(1KB, min(21.3MB, 32MB)) ≈ 21.3MB per partition, giving 30 partitions.
        - 32MB, 5, 1KB (asking for fewer, bigger partitions): goalSize = 640MB/5 = 128MB, so max(1KB, min(128MB, 32MB)) = 32MB per partition, still 20 partitions because the block size caps the split.
        - 32MB, 0, 64MB (bigger partitions via minSize): max(64MB, min(640MB, 32MB)) = 64MB per partition, so 10 partitions.
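The split-size formula on this slide can be checked with a few lines of plain Scala. This is a simplified sketch that treats the input as one 640MB file; the 1.1 slack factor on the last split is an assumption modeled on Hadoop's `SPLIT_SLOP`, and all object and method names are hypothetical.

```scala
object SplitSizeSketch {
  val KB: Long = 1024L
  val MB: Long = 1024L * KB

  // splitSize = max(minSize, min(goalSize, blockSize)), goalSize = totalSize / numPartitions
  def splitSize(totalSize: Long, numPartitions: Int, minSize: Long, blockSize: Long): Long = {
    val goalSize = totalSize / math.max(numPartitions, 1) // 0 requested partitions is treated as 1
    math.max(minSize, math.min(goalSize, blockSize))
  }

  // Counts splits for a single file; the final split may be up to 10% larger (assumed slack).
  def partitionCount(totalSize: Long, numPartitions: Int, minSize: Long, blockSize: Long): Int = {
    val size = splitSize(totalSize, numPartitions, minSize, blockSize)
    var remaining = totalSize
    var count = 0
    while (remaining.toDouble / size > 1.1) {
      count += 1
      remaining -= size
    }
    if (remaining > 0) count += 1
    count
  }
}
```

With a 32MB block size and 640MB of input, requesting 0, 30, and 5 partitions gives 20, 30, and 20 partitions respectively, and raising the minimum split size to 64MB gives 10.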

- 33. Partition - Shuffle Transformations
    - All shuffle transformations provide a parameter for the desired number of partitions.
    - Default behavior: Spark uses HashPartitioner.
        - If `spark.default.parallelism` is set, that value is used as the number of partitions.
        - If `spark.default.parallelism` is not set, the partition count of the largest upstream RDD is used.
        - This reduces the chance of running out of memory.
    - Shuffle transformations: 1. groupByKey 2. reduceByKey 3. aggregateByKey 4. sortByKey 5. join 6. cogroup 7. cartesian 8. coalesce 9. repartition 10. repartitionAndSortWithinPartitions
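The default hash-partitioning rule amounts to taking the key's hash code modulo the number of partitions. The plain Scala sketch below shows that rule with a non-negative modulo so negative hash codes still land in a valid partition; it is written for illustration rather than copied from Spark, and the names are hypothetical.

```scala
object HashPartitionSketch {
  // Non-negative modulo, so negative hash codes still map to a valid partition index.
  def nonNegativeMod(x: Int, mod: Int): Int = {
    val raw = x % mod
    if (raw < 0) raw + mod else raw
  }

  // partition = hash(key) mod numPartitions; null keys are sent to partition 0 here.
  def partitionFor(key: Any, numPartitions: Int): Int =
    if (key == null) 0 else nonNegativeMod(key.hashCode, numPartitions)
}
```

Because the assignment depends only on the key's hash, all records with the same key end up in the same partition, which is what operations such as `reduceByKey` and `join` rely on.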

- 34. Partition - Repartitioning (RDD provides two operators)
    - `repartition(numPartitions)`
        - Can increase or decrease the number of partitions.
        - Internally performs a shuffle, which makes it expensive.
        - For decreasing the number of partitions, prefer `coalesce`.
    - `coalesce(numPartitions, shuffle = true/false)`
        - Decreases the number of partitions.
        - Uses narrow dependencies and avoids a shuffle when `shuffle` is false.
        - For a drastic reduction, passing `shuffle = true` may be preferable so that upstream work keeps its parallelism.

- 35. Custom Partitioner
    - Partitions the data according to the use case and data structure.
    - Provides control over the number of partitions and the distribution of data.
    - Extends the `Partitioner` class; you need to implement `getPartition` and `numPartitions`.
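A minimal sketch of a custom partitioner, assuming `spark-core` is on the classpath. The class name `FirstLetterPartitioner` and its first-character routing rule are hypothetical, chosen only to show the two members of `org.apache.spark.Partitioner` that have to be implemented.

```scala
import org.apache.spark.Partitioner

// Hypothetical example: route string keys by their first character.
class FirstLetterPartitioner(override val numPartitions: Int) extends Partitioner {
  require(numPartitions > 0, "numPartitions must be positive")

  // Char codes are non-negative, so the modulo is always a valid partition index.
  override def getPartition(key: Any): Int = key match {
    case s: String if s.nonEmpty => s.charAt(0).toLower % numPartitions
    case _                       => 0 // everything else goes to partition 0
  }

  // Equality lets Spark recognize that two RDDs share the same partitioning.
  override def equals(other: Any): Boolean = other match {
    case p: FirstLetterPartitioner => p.numPartitions == numPartitions
    case _                         => false
  }

  override def hashCode: Int = numPartitions
}
```

On a pair RDD it would typically be applied as `pairs.partitionBy(new FirstLetterPartitioner(4))`, where `pairs` is an assumed `RDD[(String, Int)]`.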

- 36. Partitioning Demo

- 37. Shuffle - GroupByKey vs. ReduceByKey: `val wordCountsWithGroup = wordPairsRDD.groupByKey().map(t => (t._1, t._2.sum)).collect()`, where `wordPairsRDD = rdd.map(word => (word, 1))`

- 38. Shuffle - GroupByKey vs. ReduceByKey: `val wordPairsRDD = rdd.map(word => (word, 1))`, then `val wordCountsWithReduce = wordPairsRDD.reduceByKey(_ + _).collect()`
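The practical difference between the two variants is how many records cross the shuffle. The plain Scala simulation below is a sketch on in-memory sequences, not Spark code: it models `groupByKey` as sending every pair unchanged and `reduceByKey` as pre-aggregating inside each partition first. The partition layout and all names are made up for the example.

```scala
object MapSideCombineSketch {
  type Partition = Seq[(String, Int)]

  // groupByKey-style: every (word, 1) pair crosses the shuffle unchanged.
  def shuffledWithoutCombine(parts: Seq[Partition]): Seq[(String, Int)] =
    parts.flatten

  // reduceByKey-style: each partition pre-aggregates its own pairs before the shuffle.
  def shuffledWithCombine(parts: Seq[Partition]): Seq[(String, Int)] =
    parts.flatMap { part =>
      part.groupBy(_._1).map { case (k, vs) => (k, vs.map(_._2).sum) }.toSeq
    }

  // Reduce side: merge whatever arrived into the final counts.
  def finalCounts(shuffled: Seq[(String, Int)]): Map[String, Int] =
    shuffled.groupBy(_._1).map { case (k, vs) => (k, vs.map(_._2).sum) }
}
```

For two partitions holding `a, b, a` and `a, b, b`, six records are shuffled without combining and four with it, while the final counts are identical.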

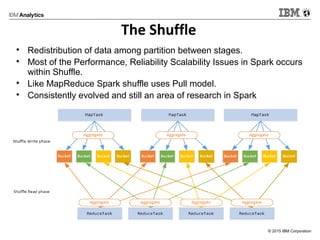

- 39. The Shuffle
    - Redistribution of data among partitions between stages.
    - Most performance, reliability, and scalability issues in Spark occur within the shuffle.
    - Like MapReduce, the Spark shuffle uses a pull model.
    - It has evolved continuously and is still an area of research in Spark.

- 40. Shuffle Overview
    - Spark runs a job stage by stage.
    - Stages are built by the DAGScheduler according to each RDD's ShuffleDependency.
        - e.g. ShuffledRDD and CoGroupedRDD have a ShuffleDependency.
    - Many operators create a ShuffledRDD or CoGroupedRDD under the hood.
        - repartition, combineByKey, groupBy, reduceByKey, cogroup
    - Many other operators call into the operators above.
        - e.g. the various join operators call cogroup.

- 41. You have seen this (diagram): RDDs A to G connected by groupBy, map, union, and join, divided into Stage 1, Stage 2, and Stage 3.

- 42. Shuffle is Expensive
    - During a shuffle, data no longer stays in memory only; it gets written to disk.
    - In Spark, the shuffle process might involve:
        - Data partitioning, which might require very expensive sorting work.
        - Data serialization/deserialization, so data can be transferred over the network or across processes.
        - Data compression, to reduce IO bandwidth.
        - Disk IO, probably multiple times on a single data block (e.g. shuffle spill, merge combine).

- 43. Shuffle History (the shuffle module in Spark has evolved over time)
    - Spark 0.6-0.7: same code path as the RDD persist method; MEMORY_ONLY and DISK_ONLY options available.
    - Spark 0.8-0.9: separate code for shuffle (ShuffleBlockManager and BlockObjectWriter for shuffle only); shuffle optimization via consolidated shuffle write.
    - Spark 1.0: introduced the pluggable shuffle framework.
    - Spark 1.1: sort-based shuffle implementation.
    - Spark 1.2: Netty transfer implementation; sort-based shuffle becomes the default.
    - Spark 1.2+: external shuffle service, etc.

- 44. Understanding Shuffle
    - Input aggregation
    - Types of shuffle
        - Hash-based: basic hash shuffle and consolidated hash shuffle
        - Sort-based shuffle

- 45. Input Aggregation
    - Like MapReduce, Spark performs aggregation (a combiner) on the map side.
    - Aggregation is done in ShuffleMapTask using:
        - AppendOnlyMap (in-memory hash table combiner): keys are never removed, values get updated.
        - ExternalAppendOnlyMap (in-memory and on-disk hash table combiner): an append-only hash map that spills data to disk when memory is insufficient.
    - Shuffle file in-memory buffer: the shuffle writes to an in-memory buffer before writing to a shuffle file.

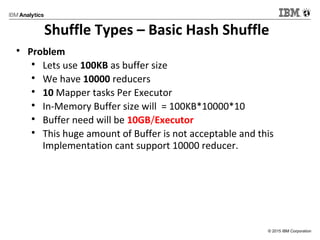

- 46. Shuffle Types – Basic Hash Shuffle
    - Hash-based shuffle (selected via `spark.shuffle.manager`).
    - Hash-partitions the data for the reducers.
    - Each map task writes each bucket to its own file.
    - With M map tasks and R reduce tasks: number of shuffle files = M*R, number of in-memory buffers = M*R.

- 47. Shuffle Types – Basic Hash Shuffle Problem
    - Assume a buffer size of 100KB, 10,000 reducers, and 10 map tasks per executor.
    - In-memory buffer size per executor = 100KB * 10,000 * 10, i.e. about 10GB per executor.
    - This amount of buffer memory is not acceptable, so this implementation cannot support 10,000 reducers.
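The arithmetic behind this slide can be reproduced in a few lines of plain Scala. The sketch assumes decimal units (1KB = 1,000 bytes) so the result matches the slide's round 10GB figure; the object and method names are hypothetical.

```scala
object HashShuffleBufferSketch {
  // Buffer bytes per executor = buffer size * reducers * concurrent map tasks.
  def bufferBytesPerExecutor(bufferBytes: Long, reducers: Long, mapTasksPerExecutor: Long): Long =
    bufferBytes * reducers * mapTasksPerExecutor

  // Basic hash shuffle writes one file per (map task, reducer) pair: M * R.
  def shuffleFiles(mapTasks: Long, reducers: Long): Long =
    mapTasks * reducers

  // Slide example: 100KB buffers, 10,000 reducers, 10 map tasks per executor.
  val slideExampleBytes: Long = bufferBytesPerExecutor(100L * 1000, 10000L, 10L)
}
```

`slideExampleBytes` comes to 10,000,000,000 bytes, the 10GB per executor quoted above. As a further illustration with an assumed 1,000 map tasks, `shuffleFiles(1000, 10000)` gives 10,000,000 files.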

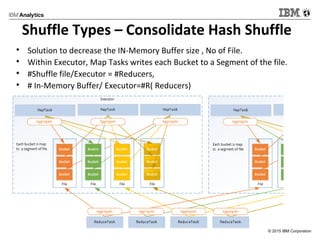

- 48. Shuffle Types – Consolidated Hash Shuffle
    - A solution that decreases both the in-memory buffer size and the number of files.
    - Within an executor, map tasks write each bucket to a segment of a shared file.
    - Shuffle files per executor = number of reducers (R); in-memory buffers per executor = R.

- 49. Shuffle Types – Sort-Based Shuffle
    - Consolidated hash shuffle still needs one file for each reducer.
        - Total of C*R intermediate files, where C = number of executors running map tasks.
    - That is still too many files (e.g. with ~10k reducers), and it needs significant memory for compression and serialization buffers.
    - It also runs into "too many open files" issues.
    - Sort-based shuffle is similar to the map-side shuffle in MapReduce.
    - Introduced in Spark 1.1; it is now the default shuffle.

- 50. Shuffle Types – Sort-Based Shuffle
    - Map output records from each task are kept in memory for as long as they fit.
    - Once memory is full, the data is sorted by partition and spilled to a single file.
    - Each map task generates one data file and one index file.
    - An external sorter does the sorting work.
    - If a map-side combiner is required, data is sorted by key and partition; otherwise only by partition.
    - When the number of reducers is at most 200, no sorting is done: the hash approach is used, generating one file per reducer and then merging them into a single file.

- 51. Shuffle Reader
    - On the reader side, both sort and hash shuffle use the hash shuffle reader.
    - On the reducer side, a set of threads fetches remote map output blocks.
    - Once a block arrives, its records are deserialized and passed into a result queue.
    - Records are passed to ExternalAppendOnlyMap; for ordering operations such as sortByKey, records are passed to ExternalSorter.
    - Diagram: buckets feeding an aggregator in each of four reduce tasks.

- 52. Types of RDDs - RDD Interface
    - The base for all RDDs (RDD.scala) consists of:
        - A set of partitions ("splits" in Hadoop).
        - A list of dependencies on parent RDDs.
        - A function to compute a partition from its parents.
        - Optional preferred locations for each partition.
        - A Partitioner, which defines the partitioning strategy (hash or range).
        - Basic operations such as map, filter, and persist.
    - Diagram grouping: lineage (partitions, dependencies, compute); optimized execution (preferredLocations, partitioner); operations (map, filter, persist).

- 53. Example: HadoopRDD
    - partitions = one per HDFS block
    - dependencies = none
    - compute(partition) = read the corresponding block
    - preferredLocations(part) = HDFS block location
    - partitioner = none

- 54. Example: MapPartitionsRDD
    - partitions = same as the parent's partitions
    - dependencies = "one-to-one" on the parent RDD
    - compute(partition) = apply map on the parent
    - preferredLocations(part) = none (ask the parent)
    - partitioner = none

- 55. Example: CoGroupedRDD
    - partitions = one per reduce task
    - dependencies = could be narrow or wide
    - compute(partition) = read and join the shuffled data
    - preferredLocations(part) = none
    - partitioner = HashPartitioner(numTasks)

- 56. Extending RDDs
    - Extend RDDs to add transformations/actions
        - Lets developers express domain-specific calculations in a cleaner way.
        - Improves code readability.
        - Easier to maintain.
    - Custom RDD for an input source or domain
        - A way to add a new input data source.
        - A better way to express domain-specific data.
        - Better control over partitioning and distribution.

- 57. How to Extend
    - Add custom operators to RDD
        - Use Scala implicits.
        - Feels and works like a built-in operator.
        - You can add an operator to a specific RDD type or to all RDDs.
    - Custom RDD
        - Extend the RDD API to create your own RDD.
        - Implement the abstract methods `compute` and `getPartitions`.

- 58. Implicit Class
    - Creates an extension method on an existing type.
    - Introduced in Scala 2.10.
    - Implicits are checked at compile time.
    - An implicit class is resolved into a class definition plus an implicit conversion.
    - We will use an implicit class to add a new method to RDD.

- 59. Adding a New Operator to RDD
    - We will use the Scala implicit feature to add a new operator to an existing RDD.
    - This operator will show up only on our RDD type.
    - Implicit conversions are handled by Scala.
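The extension-method pattern can be sketched in plain Scala. To keep the example runnable without Spark, it wraps `Seq[Double]` as a stand-in; wrapping `org.apache.spark.rdd.RDD[Double]` instead would follow the same shape. The operator name `totalAbove` and both object names are hypothetical.

```scala
object RddLikeExtensions {
  // Implicit class: adds totalAbove to every Seq[Double] that has this import in scope.
  implicit class ThresholdOps(values: Seq[Double]) {
    // Hypothetical operator: sum of the values strictly above a threshold.
    def totalAbove(threshold: Double): Double =
      values.filter(_ > threshold).sum
  }
}

object ImplicitClassDemo {
  import RddLikeExtensions._

  // Reads like a built-in method; the compiler inserts the wrapping conversion.
  val total: Double = Seq(5.0, 20.0, 35.0).totalAbove(10.0)
}
```

The new method is visible only where `RddLikeExtensions._` is imported, which keeps the operator scoped to the code that opts in.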

- 60. Custom RDD Implementation
    - Extending RDD allows you to create your own custom RDD structure.
    - A custom RDD gives control over computation, partitioning, and locality information.

- 61. Caching in RDD
    - Spark allows caching/persisting an entire dataset in memory.
    - Persisting an RDD in the cache
        - The first time it is computed, it is kept in memory.
        - The cached partitions are reused in the next set of operations.
        - Fault tolerant: recomputed in case of failure.
    - Caching is a key tool for interactive and iterative algorithms.
    - Persist supports different storage levels
        - Storage level: in memory, on disk, or both; Tachyon.
        - Serialized vs. deserialized.

- 62. Caching in RDD
    - The SparkContext tracks persistent RDDs.
    - The Block Manager puts a partition in memory when it is first evaluated.
    - Caching is lazily evaluated: nothing is cached without an action.
    - The shuffle also keeps its data in the cache after shuffle operations.
    - We still need to cache shuffled RDDs.

- 63. Caching Demo

Editor's Notes

- #12: MapReduce has MultithreadedMapper

- #13: MapReduce has MultithreadedMapper

- #14: Writes are coarse-grained, not fine-grained. Intermediate results are written to memory, whereas between two MapReduce jobs the data is written to disk only. The traditional way to achieve fault tolerance is to replicate data or log updates across machines. An RDD instead provides fault tolerance by logging the transformations used to build a dataset (its lineage) rather than the actual data.

- #15: RDDs can hold primitives, sequences, Scala objects, and mixed types. Special RDDs exist for special purposes: Pair RDD, Double RDD, Sequence File RDD.

- #16: Map leads to narrow dependency, while join lead to wide dependency. Wide dependency needs shuffling , parent gets materialized.

- #17: Map leads to narrow dependency, while join lead to wide dependency. Wide dependency needs shuffling , parent gets materialized.

- #18: In the driver there is a component called the DAG Scheduler. It looks at the DAG, and all it understands is whether a dependency is wide or narrow. The DAG Scheduler then submits the first stage to the Task Scheduler, which is also in the driver. A stage is split into tasks; a task is data plus computation. The Task Scheduler determines the number of tasks needed for the stage and allocates them to the executors. By default, the executor heap gives 60% to cached RDDs, 20% to shuffle, and 20% to the user program.

- #25: (file systems & file formats – NFS,HDFS,S3, CSV,JSON,Sequence,Protocol Buffer)

- #26: Transformation doesn’t mutate original RDD, always returns a new RDD

- #30: Fragmentation is what enables Spark to execute in parallel, and the level of fragmentation is a function of the number of partitions of your RDD. The number of partitions is important because a stage in Spark operates on one partition at a time (and loads the data in that partition into memory).

- #31: With fewer partitions there is more data in each partition, which increases the memory pressure on your program and means more network and disk IO.

- #33: dfs.block.size - The default value in Hadoop 2.0 is 128MB. In the local mode the corresponding parameter is fs.local.block.size (Default value 32MB). It defines the default partition size.

- #34: HashPartitioner extends the Partitioner class.

- #40: A shuffle involves two sets of tasks: tasks from the stage producing the shuffle data and tasks from the stage consuming it. For historical reasons, the tasks writing out shuffle data are known as "map tasks" and the tasks reading the shuffle data are known as "reduce tasks". Every map task writes its data to local disk, and then the reduce tasks make remote requests to fetch that data.

- #46: Just as in Hadoop MapReduce, the Spark shuffle involves an aggregation step (combiner) before writing map outputs (intermediate values) to buckets. Spark also writes to small buffers (the buffer size is configurable via spark.shuffle.file.buffer.kb) before writing to physical files, to increase disk I/O speed.

- #49: Reduces per map shuffle file to # of Reducer
