Apply Hammer Directly to Thumb; Avoiding Apache Spark and Cassandra AntiPatterns with Russell Spitzer

- 1. Spark and Cassandra Anti-Patterns © DataStax, All Rights Reserved. Russell Spitzer

- 2. Russell (left) and Cara (right) • Software Engineer • Spark-Cassandra Integration since Spark 0.9 • Cassandra since Cassandra 1.2 • 3 Year Scala Convert • Still not comfortable talking about Monads in public

- 3. Avoiding the Sharp Edges •Out of Memory Errors •RPC Failures •"It is Slow" •Serialization •Understanding what Catalyst does After working with customers for several years, most problems boil down to a few common scenarios.

- 4. Most Common Performance Pitfall val there = rdd.map(doStuff).collect() val backAgain = someWork.map(otherStuff) val thereAgain = sc.parallelize(backAgain) The Hobbit (1977) OOM Slow RPC Failures

- 5. There and Back Again: Don't Collect and Parallelize Don't Do val there = rdd.map(doStuff).collect() val backAgain = someWork.map(otherStuff) val thereAgain = sc.parallelize(backAgain) Instead val there = rdd .map(doStuff) .map(otherStuff) The Hobbit (1977)

- 6. There and Back Again: Don't Collect and Parallelize Don't Do val there = rdd.map(doStuff).collect() val backAgain = someWork.map(otherStuff) val thereAgain = sc.parallelize(backAgain) Instead val there = rdd .map(doStuff) .map(otherStuff) The Hobbit (1977)

- 7. Lord of the Rings, 2001-2003 Driver JVM Your Cluster Parallelize Collect Why Not? 1. You are using Spark for a Reason

- 8. Lord of the Rings, 2001-2003 Driver JVM Your Cluster Parallelize Collect Dependable Easy to work with Easy to understand Not very big Only 1 Why Not? 1. You are using Spark for a Reason

- 9. Lord of the Rings, 2001-2003 Driver JVM Your Cluster Parallelize Collect Dependable Easy to work with Easy to understand Not very big Only 1 The Entire Reason Behind Using Spark Why Not? 1. You are using Spark for a Reason

- 10. Lord of the Rings, 2001-2003 Driver JVM Your Cluster Parallelize Collect Dependable Easy to work with Easy to understand Not very big Only 1 The Entire Reason Behind Using Spark Why Not? 1. You are using Spark for a Reason OOM

- 11. Why Not? 2. Moving data between machines is slow Jim Gray, http://loci.cs.utk.edu/dsi/netstore99/docs/presentations/keynote/sld023.htm

- 12. Why Not? 2. Moving data between machines is slow Jim Gray, http://loci.cs.utk.edu/dsi/netstore99/docs/presentations/keynote/sld023.htm

- 13. Why Not? 2. Moving data between machines is slow Jim Gray, http://loci.cs.utk.edu/dsi/netstore99/docs/presentations/keynote/sld023.htm The Lord of the Rings, 1978

- 14. Why Not? 3. Parallelize sends data in task metadata parallelize()

- 15. Why Not? 3. Parallelize sends data in task metadata List[Dwarves] -> RDD[Dwarves] ENIAC Programmers, 1946, University of Pennsylvania ?

- 16. Why Not? 3. Parallelize sends data in task metadata List[Dwarves] -> RDD[Dwarves] Minimum of one Dwarf per Partition RPC Warns on Task Metadata over 100kb

- 17. Why Not? 3. Parallelize sends data in task metadata scala> val treasure = 1 to 100 map (_ => "x" * 1024) scala> sc.parallelize(Seq(treasure)).count WARN 2018-05-21 14:13:08,035 org.apache.spark.scheduler.TaskSetManager: Stage 0 contains a task of very large size (105 KB). The maximum recommended task size is 100 KB. res0: Long = 1 Storing indefinitely growing state in a single object will continue growing in size until you run into heap problems. J.R.R. Tolkien, “Conversation with Smaug” (The Hobbit, 1937)

- 18. Keep the work Distributed Don't Do val there = rdd.map(doStuff).collect() val backAgain = someWork.map(otherStuff) val thereAgain = sc.parallelize(backAgain) 1.We won't be doing distributed work 2.We end up sending things over the wire 3.Parallelize doesn't handle large objects well 4.We don't need to! Everyday val there = rdd .map(doStuff) .map(otherStuff) The Hobbit (1977)

- 19. Start Distributed if Possible Other alternatives to Parallelize Start Data out Distributed (Cassandra, HDFS, S3, …) The Hobbit (1977)

- 20. Predicate Pushdowns Failing! SELECT * FROM escapes WHERE time = 11-27-1977 No Pushdown Slow

- 21. What have I got in my pocket? Make your literals' types explicit! SELECT * FROM escapes WHERE time = 11-27-1977 Catalyst No precious predicate pushdowns

- 22. Catalyst Transforms SQL into Distributed Work Distributed Work ? ? ? SO MYSTERY MUCH MAGICCatalyst SELECT * FROM escapes WHERE time = 11-27-1977

- 23. SELECT * FROM escapes WHERE time = 11-27-1977 'Project [*] 'Filter ('time = 1977-11-27) 'UnresolvedRelation `test`.`escapes` Logical Plan Describes What Needs to Happen

- 24. 'UnresolvedRelation `test`.`escapes` SELECT * FROM escapes WHERE time = 11-27-1977 'Project [*] 'Filter ('time = 1977-11-27) It is transformed

- 25. SELECT * FROM escapes WHERE time = 11-27-1977 *Filter (cast(time#5 as string) = 1977-11-27) *Scan CassandraSourceRelation test.escapes[time#5,method#6] ReadSchema: struct<time:date,method:string> Into a Physical Plan which defines How it will be accomplished

- 26. SELECT * FROM escapes WHERE time = 11-27-1977 *Filter (cast(time#5 as string) = 1977-11-27) *Scan CassandraSourceRelation test.escapes[time#5,method#6] ReadSchema: struct<time:date,method:string> What happened to predicate pushdown? Into a Physical Plan which defines How it will be accomplished

- 27. Catalyst Needs to make Types Match '1977-11-27' Compare this to time#5

- 28. '1977-11-27' This is a string? time#5 This is a date? Catalyst Needs to make Types Match

- 29. '1977-11-27' This is a string? time#5 This is a date? Cast(time#5 as String) MAKE THEM BOTH STRINGS Catalyst Needs to make Types Match

- 30. '1977-11-27' This is a string? time#5 This is a date? Cast(time#5 as String) Functions Cannot be Pushed to Datasources MAKE THEM BOTH STRINGS Catalyst Needs to make Types Match

- 31. Let's try again with explicitly typed literals SELECT * FROM test.escapes WHERE time = cast('1977-11-27' as date) Catalyst Hmmmm….

- 32. Distributed Work ? ? ? SO MYSTERY MUCH MAGICCatalyst SELECT * FROM test.escapes WHERE time = cast('1977-11-27' as date) Catalyst Transforms SQL into Distributed Work

- 33. Same Logical Plan SELECT * FROM escapes WHERE time = 11-27-1977 'Project [*] 'Filter ('time = 1977-11-27) 'UnresolvedRelation `test`.`escapes`

- 34. Transform SELECT * FROM escapes WHERE time = 11-27-1977 'Project [*] 'Filter ('time = 1977-11-27) 'UnresolvedRelation `test`.`escapes`

- 35. Different Physical Plan SELECT * FROM escapes WHERE time = 11-27-1977 *Scan CassandraSourceRelation test.escapes[time#5,method#6] PushedFilters: [*EqualTo(time,1977-11-27)], ReadSchema: struct<time:date,method:string>

- 36. SELECT * FROM escapes WHERE time = 11-27-1977 *Scan CassandraSourceRelation test.escapes[time#5,method#6] PushedFilters: [*EqualTo(time,1977-11-27)], ReadSchema: struct<time:date,method:string> 1. PushedFilters is populated 2. There is no Spark Side Filter at all *Means that the Filter is Handled By the Datasource and not Catalyst Different Physical Plan

- 37. Succesful Pushdown SELECT * FROM test.escapes WHERE time = cast('1977-11-27' as date) Catalyst +----------+-----------------------------+ |time |method | +----------+-----------------------------+ |1977-11-27|Ask a totally not fair riddle| +----------+-----------------------------+

- 38. Writing to X is Slow Slow Bad Resource Utilization RDD.foreach(x => SlowIO(x))

- 39. You shall not pass! Concurrency in Spark

- 40. Functions are applied to iterators Iterator[Balrog] .map( balrog => moveAcrossBridge(balrog))

- 41. Iterator[Balrog] .map( balrog => moveAcrossBridge(balrog)) No other elements will have a function applied to them until the current element is done One Item is Processed at a Time

- 42. Native Spark Parallelism is Based on Cores Itera .map( balrog Itera .map( balrog Itera .map( balrog Core 1 Core 2 Core 3 Max Number of Balrogs crossing in parallel is limited by the number of cores

- 43. Increase Parallelism without Increasing Cores Iterator[Balrog] .map( balrog => moveAcrossBridge(balrog)) .foreach( balrog => balrog.eat(nearestHobbit)) Slow, Bottleneck Fast

- 44. Grouping or Futures Iterator[Balrog]Process in groups grouped.map(balrogGroup => moveGroup(balrogGroup)) Slow elements Will slow down the group

- 45. Grouping or Futures Iterator[Balrog] Iterator[Future[MovedBalrog]] Process in groups Return Futures map(balrog => asyncMove(balrog)) grouped.map(balrogGroup => moveGroup(balrogGroup)) Still need to draw elements multiple at a time (if not forEach) Slow elements Will slow down the group

- 46. DSE Spark Connector's Sliding Iterator Buffer Futures /** Prefetches a batchSize of elements at a time **/ protected def slidingPrefetchIterator[T](it: Iterator[Future[T]], batchSize: Int): Iterator[T] = { val (firstElements, lastElement) = it .grouped(batchSize) .sliding(2) .span(_ => it.hasNext) (firstElements.map(_.head) ++ lastElement.flatten).flatten.map(_.get) } Group

- 51. My Precious! Don't Cache without Reuse The Hobbit, 1966

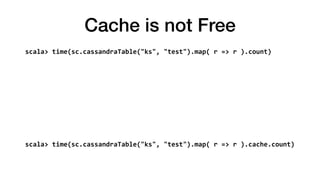

- 52. Cache is not Free scala> time(sc.cassandraTable("ks", "test").map( r => r ).count) Elapsed time: 35.773478836 res54: Long = 15436998 scala> time(sc.cassandraTable("ks", "test").map( r => r ).cache.count) Elapsed time: 58.657585144 res55: Long = 15436998

- 54. When does Caching for Resilience Make Sense? Let's MATH

- 55. When does Caching for Resilience Make Sense? Let's MATH Lets Assume our Shuffle/Read partially fails 1/10 times Cache costs c seconds Normal run costs r seconds Failures happen at a rate of f If (c + r < r + r * f) Caching helps us out

- 56. When does Caching for Resilience Make Sense? Let's MATH Lets Assume our Shuffle/Read partially fails 1/10 times Cache costs c seconds Normal run costs r seconds Failures happen at a rate of f If (c + r < r + r * f) Caching helps us out

- 57. When does Caching for Resilience Make Sense? Let's MATH Lets Assume our Shuffle/Read partially fails 1/10 times Cache costs c seconds Normal run costs r seconds Failures happen at a rate of f If (c + r < (r +1) * f) Caching helps us out

- 58. When does Caching for Resilience Make Sense? Let's MATH Lets Assume our Shuffle/Read partially fails 1/10 times Cache costs c seconds Normal run costs r seconds Failures happen at a rate of f If ( (c + r) / (r + 1) < f ) Caching helps us out

- 59. When does Caching for Resilience Make Sense? Let's MATH For Our Example Caching is worth it. if (.6 > failures) If ( (c + r) / (r + 1) < f ) Caching helps us out

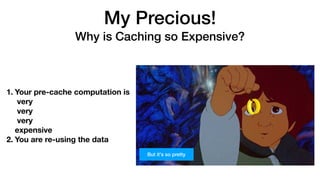

- 60. My Precious! Why is Caching so Expensive? 1. Serialize Everything 2. Hold all the data at once 3. Expensive disk access But it's so pretty

- 61. My Precious! Why is Caching so Expensive? But it's so pretty 1. Your pre-cache computation is very very very expensive 2. You are re-using the data

- 62. What have we learned? Don't Do Parallelize and Collect Rely on Spark to do our Types in Literals Do slow blocking actions in map/foreach Cache all the time Instead Keep in Distributed Actions Specify our Types Do concurrent actions when it makes sense Cache only when we re-use data The Hobbit (1977)

- 63. DSE 6 !63

- 64. Thank you !64 © DataStax, All Rights Reserved. http://www.russellspitzer.com/ @RussSpitzer Come chat with us at DataStax Academy: https://academy.datastax.com/slack

![Why Not?

3. Parallelize sends data in task metadata

List[Dwarves] -> RDD[Dwarves]

ENIAC Programmers, 1946, University of Pennsylvania

?](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-15-320.jpg)

![Why Not?

3. Parallelize sends data in task metadata

List[Dwarves] -> RDD[Dwarves]

Minimum of one

Dwarf per

Partition

RPC Warns on Task

Metadata over

100kb](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-16-320.jpg)

![SELECT * FROM escapes WHERE time = 11-27-1977

'Project [*]

'Filter ('time = 1977-11-27)

'UnresolvedRelation `test`.`escapes`

Logical Plan Describes

What Needs to Happen](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-23-320.jpg)

!['UnresolvedRelation `test`.`escapes`

SELECT * FROM escapes WHERE time = 11-27-1977

'Project [*]

'Filter ('time = 1977-11-27)

It is transformed](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-24-320.jpg)

![SELECT * FROM escapes WHERE time = 11-27-1977

*Filter (cast(time#5 as string) = 1977-11-27)

*Scan

CassandraSourceRelation

test.escapes[time#5,method#6]

ReadSchema:

struct<time:date,method:string>

Into a Physical Plan which defines

How it will be accomplished](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-25-320.jpg)

![SELECT * FROM escapes WHERE time = 11-27-1977

*Filter (cast(time#5 as string) = 1977-11-27)

*Scan

CassandraSourceRelation

test.escapes[time#5,method#6]

ReadSchema:

struct<time:date,method:string>

What happened to

predicate pushdown?

Into a Physical Plan which defines

How it will be accomplished](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-26-320.jpg)

![Same Logical Plan

SELECT * FROM escapes WHERE time = 11-27-1977

'Project [*]

'Filter ('time = 1977-11-27)

'UnresolvedRelation `test`.`escapes`](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-33-320.jpg)

![Transform

SELECT * FROM escapes WHERE time = 11-27-1977

'Project [*]

'Filter ('time = 1977-11-27)

'UnresolvedRelation `test`.`escapes`](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-34-320.jpg)

![Different Physical Plan

SELECT * FROM escapes WHERE time = 11-27-1977

*Scan

CassandraSourceRelation

test.escapes[time#5,method#6]

PushedFilters: [*EqualTo(time,1977-11-27)],

ReadSchema: struct<time:date,method:string>](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-35-320.jpg)

![SELECT * FROM escapes WHERE time = 11-27-1977

*Scan

CassandraSourceRelation

test.escapes[time#5,method#6]

PushedFilters: [*EqualTo(time,1977-11-27)],

ReadSchema: struct<time:date,method:string>

1. PushedFilters is populated

2. There is no Spark Side Filter at all

*Means that the Filter is Handled By the Datasource and not Catalyst

Different Physical Plan](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-36-320.jpg)

![Functions are applied to iterators

Iterator[Balrog]

.map( balrog => moveAcrossBridge(balrog))](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-40-320.jpg)

![Iterator[Balrog]

.map( balrog => moveAcrossBridge(balrog))

No other elements will have a

function applied to them until

the current element is done

One Item is Processed at a Time](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-41-320.jpg)

![Increase Parallelism

without Increasing Cores

Iterator[Balrog]

.map( balrog => moveAcrossBridge(balrog))

.foreach( balrog => balrog.eat(nearestHobbit))

Slow, Bottleneck

Fast](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-43-320.jpg)

![Grouping or Futures

Iterator[Balrog]Process

in groups

grouped.map(balrogGroup => moveGroup(balrogGroup))

Slow elements

Will slow down the group](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-44-320.jpg)

![Grouping or Futures

Iterator[Balrog]

Iterator[Future[MovedBalrog]]

Process

in groups

Return Futures

map(balrog => asyncMove(balrog))

grouped.map(balrogGroup => moveGroup(balrogGroup))

Still need to draw

elements multiple at a

time (if not forEach)

Slow elements

Will slow down the group](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-45-320.jpg)

: Iterator[T] = {

val (firstElements, lastElement) = it

.grouped(batchSize)

.sliding(2)

.span(_ => it.hasNext)

(firstElements.map(_.head) ++ lastElement.flatten).flatten.map(_.get)

}

Group](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-46-320.jpg)

: Iterator[T] = {

val (firstElements, lastElement) = it

.grouped(batchSize)

.sliding(2)

.span(_ => it.hasNext)

(firstElements.map(_.head) ++ lastElement.flatten).flatten.map(_.get)

}

Group

DSE Spark Connector's Sliding Iterator](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-47-320.jpg)

: Iterator[T] = {

val (firstElements, lastElement) = it

.grouped(batchSize)

.sliding(2)

.span(_ => it.hasNext)

(firstElements.map(_.head) ++ lastElement.flatten).flatten.map(_.get)

}

Sliding(2)

Group

Buffer

DSE Spark Connector's Sliding Iterator](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-48-320.jpg)

: Iterator[T] = {

val (firstElements, lastElement) = it

.grouped(batchSize)

.sliding(2)

.span(_ => it.hasNext)

(firstElements.map(_.head) ++ lastElement.flatten).flatten.map(_.get)

}

Sliding(2)

Group

Flatten

get

Buffer

DSE Spark Connector's Sliding Iterator](https://image.slidesharecdn.com/3russellspitzer-180614205425/85/Apply-Hammer-Directly-to-Thumb-Avoiding-Apache-Spark-and-Cassandra-AntiPatterns-with-Russell-Spitzer-49-320.jpg)