Artificial Intelligence and Cyber Security Module 1.pptx

- 1. Fundamentals of Artificial Intelligence & Cyber Security Dr. Maryjo M George, Asst. Prof., Dept. of AI & ML, MITE, Moodabidri

- 2. MITE - Institute Vision & Mission

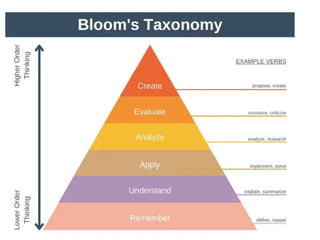

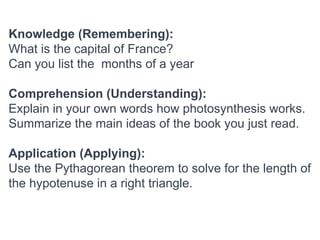

- 6. Knowledge (Remembering): What is the capital of France? Can you list the months of the year? Comprehension (Understanding): Explain in your own words how photosynthesis works. Summarize the main ideas of the book you just read. Application (Applying): Use the Pythagorean theorem to solve for the length of the hypotenuse in a right triangle.

- 7. Analysis (Analyzing): Compare and contrast the themes and characters in two different novels. Evaluation (Evaluating): Critique the effectiveness of a government policy in addressing poverty. Synthesis (Creating): Design a marketing campaign for a new product, including a logo and advertising strategy. Create a multimedia presentation on the cultural significance of a traditional festival.

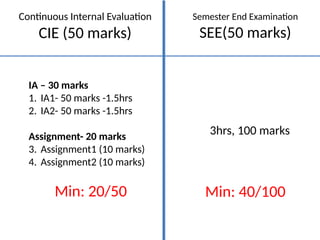

- 8. Continuous Internal Evaluation (CIE) and Semester End Examination (SEE):
  - CIE: 50 marks (minimum 20/50)
    - IA: 30 marks, from IA1 (50 marks, 1.5 hrs) and IA2 (50 marks, 1.5 hrs)
    - Assignments: 20 marks, from Assignment 1 (10 marks) and Assignment 2 (10 marks)
  - SEE: 50 marks (3 hrs, conducted for 100 marks; minimum 40/100)

- 10. Program Outcomes

- 16. Copyright © 2019 by Wiley India Pvt. Ltd., 4436/7, Ansari Road, Daryaganj, New Delhi- 110002

- 20. AI features on smartphones:
  1. Voice Assistants: Voice-powered AI assistants like Siri (Apple), Google Assistant (Android), and Bixby (Samsung) use natural language processing and machine learning to understand and respond to voice commands and questions.
  2. Camera Enhancements: AI is used to improve smartphone photography. Features like scene recognition, facial recognition, and automatic adjustment of settings such as exposure and white balance are all AI-driven.
  3. Predictive Text and Autocorrect: AI algorithms predict and suggest words or phrases while you're typing, making texting and typing more efficient.
  4. Voice Recognition for Texting: AI-powered speech-to-text technology enables users to dictate messages, emails, and notes by speaking into their phones.
  5. Smart Battery Management: AI algorithms monitor your usage patterns and optimize battery life by closing background apps and adjusting power settings accordingly.

- 34. Overview of Artificial Intelligence: Artificial Intelligence (AI) is a field with a broad scope, and its definition can vary depending on who you ask. In simple terms, the main goal of AI is to make computers perform tasks that typically require human intelligence. Here are some reasons why people are interested in automating human intelligence:
  1. Understanding Human Intelligence: AI helps us better understand how human intelligence works by trying to replicate it in machines.
  2. Smarter Programs: AI aims to create smarter computer programs that can learn, adapt, and make decisions like humans.
  3. Solving Difficult Problems: AI techniques are valuable for solving complex problems that are challenging for traditional computing methods.

- 35. To a layman, AI may seem like the combination of "artificial" (something made by humans) and "intelligence" (the ability to gain knowledge and use it). For technical experts, AI is a complex field that involves creating intelligent machines. AI doesn't aim solely to mimic human intelligence; it's about building systems that can solve problems, even if their methods differ from human approaches.

  Intelligence vs. Artificial Intelligence:
  - Intelligence: Natural; it can increase with experience and is sometimes hereditary. It doesn't rely on external electricity but on knowledge.
  - Artificial Intelligence: Involves computer systems that need electrical energy and a knowledge base to generate output. Knowledge in AI often comes from human input.

- 36. • Strong AI: Claims that computers can reach human-level thinking and reasoning, aiming for true machine intelligence that matches or even surpasses human capabilities. • Weak AI: Focuses on enhancing computers with intelligence for specific tasks or domains. Such AI acts intelligently within its limited scope but doesn't possess human-like general intelligence.

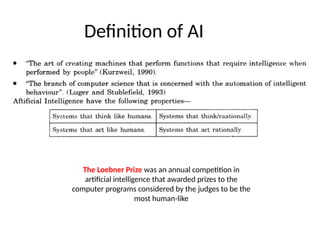

- 37. Definitions of AI: Artificial Intelligence (AI) is a field where we make machines act smart and do things that typically need human thinking. Let's break down the key ideas in simpler terms. Four AI properties: • AI can be like humans in thinking and behaving. • It can also think and act logically and rationally. 1. Acting Humanly (Turing Test): • Imagine chatting with a computer and not being able to tell that it's not a person. That's smart AI. • There was a contest (the Loebner Prize) to see whether a computer could fool judges into thinking it was human. • Example: virtual customer support chatbots.

- 38. 2. Thinking Humanly (Cognitive Modeling): • It's not enough for AI to seem smart; it should think like humans. • We study how humans think through psychological experiments and try to make computers do the same using computer models. • There's a whole field (Cognitive Science) for this, mixing AI, psychology, linguistics, and more. • AI systems built this way resemble human thinking and behavior, making them more effective and user-friendly in various applications.

- 39. 3. Thinking Rationally (Laws of Thought): •AI can be like a super logical thinker, following strict rules to make decisions. •We use math and logic to teach computers to think correctly. •But, it's tough because real-life situations often need more than just logic. 4. Acting Rationally (Rational Agents): •AI can be like an intelligent agent with goals, like a robot trying to achieve something. •A smart agent acts in ways that maximize its goals, but it doesn't have to know everything. •It does its best with what it knows and the resources it has.
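The "laws of thought" approach, deriving conclusions by applying strict logical rules, can be sketched as forward chaining: repeatedly apply modus ponens ("if all premises hold, the conclusion holds") until nothing new follows. This is a minimal illustrative sketch; the rule format and the Socrates facts are hypothetical examples, not part of the slides.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (premises, conclusion): if every premise is known, the conclusion follows.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Repeatedly apply modus ponens until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule only if all its premises hold and it adds something new.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical example: "Socrates is a man; all men are mortal; mortals will die".
rules: List[Rule] = [
    (frozenset({"man(socrates)"}), "mortal(socrates)"),
    (frozenset({"mortal(socrates)"}), "will_die(socrates)"),
]
derived = forward_chain({"man(socrates)"}, rules)
print(sorted(derived))
```

The sketch also shows the limitation noted on the slide: the procedure can only conclude what its rules cover, so situations that need more than these rules are out of its reach.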

- 41. Foundations of AI: Many older disciplines contribute to a foundation for artificial intelligence:
  - Philosophy: logic, philosophy of mind, philosophy of science, philosophy of mathematics
  - Mathematics: logic, probability theory, theory of computability
  - Psychology: behaviourism, cognitive psychology
  - Computer Science & Engineering: hardware, algorithms, computational complexity theory
  - Linguistics: theory of grammar, syntax, semantics

- 42. Expert Systems: Expert systems are computer-based systems that mimic the decision-making abilities of a human expert in a specific domain or field of knowledge. Mass spectrograms are graphical representations of the masses of the different ions produced when a chemical compound is vaporized and ionized.

- 43. Early Expert Systems: Bridging Human Expertise • Subsequent expert systems showcased AI's potential: • MYCIN (1975): Diagnosed bacterial infections and recommended treatments by mimicking the decision-making process of medical experts. • PROSPECTOR (1979): Helped locate molybdenum deposits from geological data, highlighting the capacity of AI in geology and resource exploration. • R1 (1982): Configured computer systems for Digital Equipment Corporation (DEC), streamlining complex technical tasks.

- 44. AI in industry

- 45. In 1981, Japan initiated a project known as the "Fifth Generation" project with the goal of developing intelligent computers. This project was significant because it aimed to advance the field of artificial intelligence (AI) by focusing on Prolog logic programming, using a programming language specifically designed for symbolic reasoning and knowledge representation. Here's what this development means:
  1. The "Fifth Generation" Project: Japan's "Fifth Generation" project was a government-funded research initiative that sought to achieve a new level of AI capabilities. The term "Fifth Generation" referred to a vision of computing technology that extended beyond traditional numerical computation to include knowledge-based reasoning, natural language understanding, and symbolic reasoning.
  2. Prolog Logic Programming: Prolog is a programming language particularly well suited to tasks involving symbolic reasoning and knowledge representation. It uses a formal logic called predicate logic to represent and manipulate knowledge. Japan's decision to base its project on Prolog indicated a commitment to symbolic AI and knowledge-based systems.

- 46. MCC (Microelectronics and Computer Technology Corporation): In response to Japan's ambitious "Fifth Generation" project, the United States established the Microelectronics and Computer Technology Corporation (MCC) in Austin, Texas, in 1984. MCC was a research consortium of multiple American technology companies, and its primary mission was to counter the Japanese project's advances in AI and semiconductor technology.

- 47. Certain limitations in the field of Artificial Intelligence (AI) became apparent during a period often referred to as the "AI Winter." Let's break down what these limitations mean:
  1. Brittleness:
     - In the context of AI, "brittleness" refers to the inability of AI systems to adapt to unexpected or unusual situations.
     - AI systems, especially early ones like expert systems, were often designed to perform well in specific, well-defined scenarios, but they struggled when faced with inputs or conditions they hadn't been explicitly programmed or trained for.
     - This lack of adaptability made them "brittle": they could break or provide incorrect results when faced with novel or unforeseen circumstances.
  2. Domain Specificity:
     - "Domain specificity" in AI refers to the limited scope or narrow focus of AI systems.
     - Many early AI systems, including expert systems, were highly specialized and could only excel within a predefined domain of knowledge.
     - They couldn't easily transfer their knowledge or skills to different domains or tasks, which limited their versatility.
  3. Knowledge Acquisition Bottleneck:
     - The "knowledge acquisition bottleneck" refers to the challenge of acquiring and encoding expert knowledge into an AI system.
     - Building expert systems required substantial effort and resources to collect and formalize the knowledge of human experts in a particular field.
     - This process was labor-intensive and time-consuming, often involving experts

- 48. The "AI Winter" is a historical period marked by reduced funding and interest in AI research due, in part, to these limitations. The field faced skepticism because early AI systems, despite their successes, couldn't live up to the high expectations of achieving human-level general intelligence. However, AI research eventually emerged from these challenges, leading to significant advances in more recent years, with AI systems that are less brittle, more adaptable, and capable of tackling broader tasks and domains.

- 49. Overview of Artificial Intelligence • Artificial Intelligence is a broad field – Different things to different people – Multiple definitions are given by different people • The main objective of AI is – Computers doing tasks that require human intelligence • People want to automate human intelligence for the following reasons – To understand human intelligence better – Smarter programs – A useful technique for solving difficult problems • To a common man, Artificial Intelligence is two words – Artificial: make a copy of something natural – Intelligence: the ability to gain and apply knowledge and skills

- 50. Overview of Artificial Intelligence • List of tasks that require intelligence – Speech generation & understanding – Pattern recognition – Mathematical theorem proving – Reasoning – Motion in obstacle-filled space

- 51. Intelligence Vs Artificial Intelligence

- 52. Strong AI Vs Weak AI • Weak AI – Helps people with their intelligent tasks • Strong AI – Performs intelligent tasks on its own

- 53. Definition of AI: The Loebner Prize was an annual competition in artificial intelligence that awarded prizes to the computer programs considered by the judges to be the most human-like.

- 54. Definition of AI

- 55. Acting Humanly – Turing Test • The Turing Test was proposed by Alan Turing (a mathematician and computer scientist) in 1950. • He proposed it as a way to determine whether or not a computer (machine) can think intelligently like a human. • The Turing Test is a widely used measure of a machine's ability to demonstrate human-like intelligence. The basic idea of the Turing Test is simple: • A human judge engages in a text-based conversation with both a human and a machine, and then decides which of the two they believe to be the human. • If the judge is unable to distinguish between the human and the machine based on the conversation, the machine is said to have passed the Turing Test.

- 56. Thinking Humanly: Cognitive Modeling • Once we gather enough data about how humans think, we can create a model to simulate the human process. • This model can be used to create software that can think like humans. • Of course, this is easier said than done! All we care about is the output of the program given a particular input. • If the program behaves in a way that matches human behavior, this suggests that humans may have a similar thinking mechanism. • Within computer science, there is a field of study called Cognitive Modeling that deals with simulating the human thinking process. • It tries to understand how humans solve problems. • It takes the mental processes that go into problem solving and turns them into a software model. • This model can then be used to simulate human behavior. • Cognitive modeling is used in a variety of AI applications such as deep learning, expert systems, Natural Language Processing, robotics, and so on.

- 57. Thinking Rationally : Laws of Thought

- 58. Acting Rationally : Rational Agents

- 59. Acting Rationally : Rational Agents

- 60. Is automating intelligence possible? • The answer lies in the Turing Test • Yes, it's possible

- 61. Man Vs Computer • AI is the study of how to make computers do things which, at the moment, people do better (Rich and Knight) • Second definition • Role of computer changes from something useful to essential

- 62. Man Vs Computer • What do computers do better than people? – Numerical computation – Information storage – Repetitive operations • What can people do better than computers? – People have outperformed computers in activities that involve intelligence. – We do not just process information. – We understand it and make sense of what we see and hear. – Then we come up with new ideas.

- 63. List of characteristics intelligence should possess

- 64. AI technique for mapping human knowledge

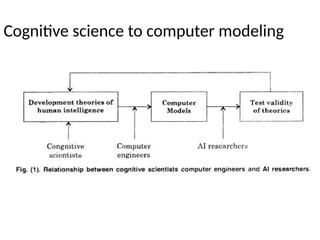

- 65. The text discusses the relationship between cognitive science, computer modeling, and artificial intelligence (AI). It also touches upon different perspectives on the goal of AI, presents an alternative definition, and mentions the importance of symbolic and non-algorithmic methods in AI research. Key points from the text include:
  1. Continuous Process: Cognitive scientists develop theories of human intelligence, which are then programmed into computer models by AI researchers. These computer models are used to test and refine the validity of the theories. This iterative process helps improve our understanding of human intelligence.
  2. Debate on AI Goals: There is a debate within the AI community about the primary goal of AI. Some believe that AI's goal is to simulate intelligent behavior on a computer, regardless of the techniques used. Others argue that true AI should simulate intelligence using methods similar to those employed by humans.
  3. Alternative Definition: The text provides an alternative definition of AI as "that branch of computer science which deals with symbolic, non-algorithmic methods of problem solving." This definition highlights two key characteristics: symbolic thinking, which is different from numerical processing, and non-algorithmic problem-solving, which differs from the algorithmic approach.

- 66. Key points (continued):
  4. Focus on Symbolic and Non-Algorithmic Processing: AI research places a significant emphasis on developing techniques that involve symbolic reasoning and non-algorithmic problem-solving. This approach aims to emulate human reasoning processes more closely.
  5. Application Areas of AI: The text mentions that AI is applied in various areas, including general problem-solving, expert systems, natural language processing, computer vision, robotics, and other domains. These applications demonstrate the wide-ranging impact of AI in different fields.

  In summary, the text highlights the ongoing relationship between cognitive science and AI, the debate over AI goals, and the importance of symbolic and non-algorithmic processing in AI research. It also briefly mentions the diverse application areas of artificial intelligence.

- 67. Cognitive science to computer modeling

- 70. Cognitive science to computer modeling

- 71. Application Areas of AI • General Problem Solving – Water jug, Travelling Salesman Problem (TSP), Tower of Hanoi, etc.
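General problem solving treats a puzzle as a search through states. As a minimal sketch, the water jug problem can be solved with breadth-first search over (jug 1, jug 2) states; the capacities (4 and 3 litres) and the goal (2 litres in the first jug) are a common textbook instance assumed here, not values given on the slide.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

State = Tuple[int, int]  # (litres in jug 1, litres in jug 2)


def successors(state: State, cap1: int, cap2: int) -> List[State]:
    """All states reachable by one fill, empty, or pour operation."""
    a, b = state
    pour_ab = min(a, cap2 - b)  # amount that fits when pouring jug 1 into jug 2
    pour_ba = min(b, cap1 - a)  # amount that fits when pouring jug 2 into jug 1
    return [
        (cap1, b), (a, cap2),        # fill either jug
        (0, b), (a, 0),              # empty either jug
        (a - pour_ab, b + pour_ab),  # pour jug 1 -> jug 2
        (a + pour_ba, b - pour_ba),  # pour jug 2 -> jug 1
    ]


def solve_water_jug(cap1: int, cap2: int, goal: int) -> Optional[List[State]]:
    """Breadth-first search for a shortest state sequence with `goal` litres in jug 1."""
    start: State = (0, 0)
    parent: Dict[State, Optional[State]] = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state[0] == goal:
            # Walk back through the parent links to recover the path.
            path: List[State] = []
            node: Optional[State] = state
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in successors(state, cap1, cap2):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None  # goal is unreachable with these capacities


print(solve_water_jug(4, 3, 2))
```

Breadth-first search is used because it returns a solution with the fewest operations; the same state-space framing applies to Tower of Hanoi and, with costs added, to TSP.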

- 72. Application Areas of AI • Expert Systems

- 73. Natural Language Processing: NLP aims to enable computers to understand, interpret, and generate human language in a way that is both valuable and meaningful.

- 74. Example: Sentiment Analysis, i.e., determining and extracting the sentiment or emotional tone expressed in a piece of text, such as a review, comment, or social media post.
  - Review 1: "I absolutely love this smartphone! The camera quality is amazing, and it's so easy to use." In this review, the sentiment is clearly positive. NLP algorithms analyze the words and context to understand that the customer is expressing satisfaction and delight about the smartphone's features.
  - Review 2: "The phone constantly freezes, and the battery life is terrible. I regret buying it." This review conveys a negative sentiment. NLP algorithms recognize the negative language used ("freezes," "terrible," "regret") and interpret the overall tone as dissatisfaction.
  - Review 3: "The smartphone is functional. Nothing special, but it gets the job done." This review is neutral: the words used are neither strongly positive nor negative.

  In this example, NLP algorithms analyze the words, phrases, and context within these reviews to automatically determine the sentiment expressed. Businesses often use sentiment analysis to gauge customer opinions, feedback, and reviews at scale, enabling them to make data-driven decisions based on customer sentiment.
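A very simple way to approximate the idea above is a lexicon-based scorer: count positive and negative cue words and compare the counts. The word lists below are small hypothetical lexicons chosen for these three reviews; practical NLP systems use much larger lexicons or trained models and also handle negation, sarcasm and context, which this sketch does not.

```python
import re
from typing import Set

# Hypothetical, hand-picked cue-word lexicons (illustration only).
POSITIVE: Set[str] = {"love", "amazing", "easy", "great", "excellent"}
NEGATIVE: Set[str] = {"freezes", "terrible", "regret", "bad", "poor"}


def sentiment(text: str) -> str:
    """Label text positive/negative/neutral by comparing cue-word counts."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "I absolutely love this smartphone! The camera quality is amazing, and it's so easy to use.",
    "The phone constantly freezes, and the battery life is terrible. I regret buying it.",
    "The smartphone is functional. Nothing special, but it gets the job done.",
]
for review in reviews:
    print(sentiment(review), "<-", review)
```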

- 75. Natural Language Processing: Components of a Natural Language Computer. A computer capable of interacting through natural language requires three key components: • Parser: This component is responsible for breaking down sentences and understanding their grammatical structure. • Knowledge Representation System: This system stores information and knowledge in a format that the computer can use to make sense of language input. • Output Translator: It helps the computer generate meaningful responses in natural language.
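The three components can be illustrated with a deliberately tiny pipeline. In this sketch the toy grammar only accepts statements of the form "X is a Y." and questions of the form "Is X a Y?", and the function names are hypothetical: `parse` plays the parser, a set of (subject, category) facts plays the knowledge representation system, and the last part of `respond` plays the output translator.

```python
import re
from typing import Optional, Set, Tuple

Fact = Tuple[str, str]  # (subject, category), e.g. ("tweety", "bird")


def parse(sentence: str) -> Optional[Tuple[str, Fact]]:
    """Parser: map a sentence to (kind, fact) using a two-pattern toy grammar."""
    s = sentence.strip().lower()
    m = re.fullmatch(r"(\w+) is an? (\w+)\.?", s)
    if m:
        return ("statement", (m.group(1), m.group(2)))
    m = re.fullmatch(r"is (\w+) an? (\w+)\?", s)
    if m:
        return ("question", (m.group(1), m.group(2)))
    return None  # outside the toy grammar


def respond(sentence: str, knowledge: Set[Fact]) -> str:
    """Run parser -> knowledge representation -> output translator."""
    parsed = parse(sentence)
    if parsed is None:
        return "Sorry, I do not understand."
    kind, (subject, category) = parsed
    if kind == "statement":
        knowledge.add((subject, category))  # store the new fact
        return "OK."
    # Output translator: turn the lookup result back into natural language.
    if (subject, category) in knowledge:
        return f"Yes, {subject} is a {category}."
    return f"I don't know whether {subject} is a {category}."


kb: Set[Fact] = set()
for line in ["Tweety is a bird.", "Is Tweety a bird?", "Is Tweety a fish?", "Hello there"]:
    print(line, "->", respond(line, kb))
```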

- 76. Computer Vision: Computer vision is a field of artificial intelligence and computer science that focuses on enabling computers to interpret and understand visual information from the world, similar to how humans perceive and make sense of the visual world through their eyes.

- 77. Example: Face Recognition. Computer vision algorithms analyze the image to extract relevant features. In the case of face recognition, these features might include the location of the eyes, nose, mouth, and other distinctive facial characteristics.

- 78. AI Computing

- 79. AI computing vs. conventional computing, e.g., email filtering: A conventional spam filter operates on fixed rules, such as checking for specific keywords or known spam email addresses. An AI system can learn to recognize evolving patterns of spam and phishing attempts without relying solely on fixed rules.
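The contrast can be sketched in a few lines. The rule-based filter below only checks a fixed keyword list, while the "learned" filter is a minimal multinomial naive Bayes classifier with add-one smoothing trained on labelled examples. Naive Bayes is one classic learning technique chosen here for illustration (the slide does not name one), and the keywords and training messages are hypothetical toy data.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

SPAM_KEYWORDS = {"lottery", "winner"}  # hypothetical fixed rule list


def rule_based_is_spam(message: str) -> bool:
    """Conventional filter: flag a message only if it contains a listed keyword."""
    return any(word in SPAM_KEYWORDS for word in message.lower().split())


class NaiveBayesFilter:
    """Learned filter: multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: Dict[str, Counter] = {"spam": Counter(), "ham": Counter()}
        self.doc_counts: Counter = Counter()

    def train(self, examples: List[Tuple[str, str]]) -> None:
        for text, label in examples:
            self.doc_counts[label] += 1
            self.word_counts[label].update(text.lower().split())

    def is_spam(self, message: str) -> bool:
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in ("spam", "ham"):
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(vocab)
            # log P(label) + sum of smoothed log P(word | label)
            score = math.log(self.doc_counts[label] / total_docs)
            for word in message.lower().split():
                score += math.log((counts[word] + 1) / denom)
            scores[label] = score
        return scores["spam"] > scores["ham"]


training = [
    ("verify your account password now", "spam"),
    ("urgent verify your bank account", "spam"),
    ("meeting notes for the project", "ham"),
    ("lunch at noon with the team", "ham"),
]
nb = NaiveBayesFilter()
nb.train(training)
msg = "please verify your password"  # phishing-style, but contains no listed keyword
print(rule_based_is_spam(msg), nb.is_spam(msg))
```

The fixed rule misses this message because none of its keywords appear, whereas the trained model flags it from word statistics learned from the examples; retraining on new examples lets it follow evolving spam patterns without rewriting rules.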

- 80. AI Computing

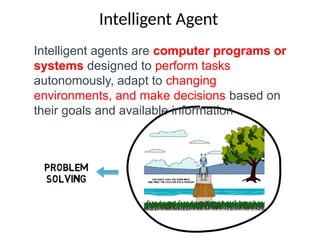

- 81. Intelligent Agent: Intelligent agents are computer programs or systems designed to perform tasks autonomously, adapt to changing environments, and make decisions based on their goals and available information.

- 82. Intelligent agent: An agent is defined as something that perceives and acts in an environment. IAs perform tasks that benefit a business process, a computer application, or a person.

- 83. • Percept: An agent's perceptual inputs at a given instant. • Percept sequence: The complete history of everything that an agent has perceived to date. • Agent function • Agent program

- 84. Vacuum agent: Percepts: (1) which room it is in, and (2) whether there is dirt in the room. Actions: move left, move right, clean (suck up) the dirt. Agent function: if the current room is dirty, clean it; otherwise, move to the other room.

- 85. Agent function:

| Percept sequence | Action |
|---|---|
| [A, Clean] | Right |
| [A, Dirty] | Suck |
| [B, Clean] | Left |
| [B, Dirty] | Suck |
| [A, Dirty], [A, Clean] | Right |
| [A, Clean], [B, Dirty] | Suck |
| [B, Dirty], [B, Clean] | Left |
| [B, Clean], [A, Dirty] | Suck |
| [A, Clean], [B, Clean] | No-op |
| [B, Clean], [A, Clean] | No-op |

- 86. Agent function: It is defined as a map from the percept sequence to an action. For the current percept, $a = F(p)$, where $p$ is the current percept, $a$ is the action carried out, and $F$ is the agent function. $F$ maps percepts to actions, $F: P \to A$, where $P$ is the set of all percepts and $A$ is the set of all actions. In general, an action may depend on all the percepts observed so far, not only the current one; then $F: P^* \to A$, where $P^*$ is the set of all percept sequences.
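The agent function can be implemented literally as a lookup table keyed by the whole percept sequence, which makes the mapping from $P^*$ to $A$ concrete. This minimal sketch encodes the rows of the agent-function table for the two-room vacuum world; the class name and the "NoOp" fallback for sequences missing from the table are assumptions for illustration.

```python
from typing import Dict, List, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")

# Rows of the agent-function table: percept sequence -> action.
TABLE: Dict[Tuple[Percept, ...], str] = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("B", "Clean"),): "Left",
    (("B", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
    (("B", "Dirty"), ("B", "Clean")): "Left",
    (("B", "Clean"), ("A", "Dirty")): "Suck",
    (("A", "Clean"), ("B", "Clean")): "NoOp",
    (("B", "Clean"), ("A", "Clean")): "NoOp",
}


class TableDrivenAgent:
    """Agent whose action depends on the entire percept sequence seen so far."""

    def __init__(self, table: Dict[Tuple[Percept, ...], str]) -> None:
        self.table = table
        self.percepts: List[Percept] = []

    def __call__(self, percept: Percept) -> str:
        self.percepts.append(percept)
        # Sequences not listed fall back to NoOp (an assumption: the table is partial).
        return self.table.get(tuple(self.percepts), "NoOp")


agent = TableDrivenAgent(TABLE)
print(agent(("A", "Clean")), agent(("B", "Clean")))
```

The sketch also shows why pure table lookup does not scale: the table needs one entry for every possible percept sequence, and that number grows exponentially with the length of the sequence.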

- 87. Agent program:

```
Function REFLEX-VACUUM-AGENT([location, status]) returns Action
    if status = Dirty then return Suck
    if location = A then return Right
    if location = B then return Left
    return NoOp
```

- 88. REFLEX-VACUUM-AGENT is a function that takes an input consisting of two parameters: location and status. location represents the current location of the vacuum agent (either A or B), and status represents the status of that location (either Clean or Dirty). The agent first checks the status of the current location: if it is "Dirty," the agent returns the action "Suck" to clean it. Otherwise (the location is clean), if the location is "A," the agent returns "Right" to move to location B, and if the location is "B," it returns "Left" to move to location A. If none of these conditions is met (i.e., the location is clean and is neither A nor B), the agent returns a default action, "NoOp" (no operation, i.e., staying in the current location).
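A runnable Python version of REFLEX-VACUUM-AGENT, together with a tiny two-room environment to run it in, might look like this. The agent follows the rules described above, including the NoOp default; the `run` simulator is a hypothetical helper added only to exercise the agent.

```python
from typing import Dict, List, Tuple


def reflex_vacuum_agent(percept: Tuple[str, str]) -> str:
    """Choose an action from the current percept (location, status) only."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    if location == "B":
        return "Left"
    return "NoOp"  # default for any unrecognised location


def run(world: Dict[str, str], location: str, steps: int) -> List[str]:
    """Hypothetical two-room simulator: apply the agent's actions for `steps` turns."""
    actions = []
    for _ in range(steps):
        action = reflex_vacuum_agent((location, world[location]))
        actions.append(action)
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return actions


world = {"A": "Dirty", "B": "Dirty"}
print(run(world, "A", 4), world)
```

Because the agent reacts only to the current percept, it keeps moving between the rooms even after both are clean; stopping requires remembering earlier percepts.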

- 89. Structure of agent: The structure of an agent can be represented as: Agent = Architecture + Agent program, where Architecture is the machinery that the agent executes on, and Agent program is an implementation of the agent function (for example, the REFLEX-VACUUM-AGENT program on slide 87).

- 90. PAGE: When designing intelligent systems, four main factors must be considered:
  - P, Percepts: the inputs to the AI system.
  - A, Actions: the outputs of the AI system.
  - G, Goals: what the agent is expected to achieve.
  - E, Environment: what the agent is interacting with.

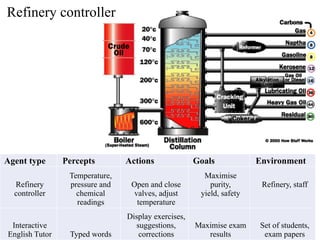

- 91. Examples of PAGE descriptions:

| Agent type | Percepts | Actions | Goals | Environment |
|---|---|---|---|---|
| Medical diagnostic system | Symptoms, test results, patient's answers | Questions, test requests, treatments, referrals | Healthy patients, minimise the costs | Patient, hospital, staff |
| Satellite image analysis system | Pixels of varying intensity and colour | Display a categorisation of the scene | Correct image categorisation | Images from orbiting satellite |
| Part-picking robot | Pixels of varying intensity and colour | Pick up parts and sort them into bins | Place parts into correct bins | Conveyor belt with parts, bins |
| Refinery controller | Temperature, pressure and chemical readings | Open and close valves, adjust temperature | Maximise purity, yield, safety | Refinery, staff |
| Interactive English tutor | Typed words | Display exercises, suggestions, corrections | Maximise exam results | Set of students, exam papers |


- 96. Agent program: On each invocation, the agent's memory is updated to reflect the new percept, the best action is selected, and the fact that the action was taken is also stored in memory. The memory persists from one invocation to the next.

```
Function SKELETON-AGENT(Percept) returns Action
    Static: Memory, the agent's memory of the world
    Memory <- UPDATE-MEMORY(Memory, Percept)
    Action <- CHOOSE-BEST-ACTION(Memory)
    Memory <- UPDATE-MEMORY(Memory, Action)
    return Action
```

- 97. How SKELETON-AGENT works:
  - Memory <- UPDATE-MEMORY(Memory, Percept): The agent updates its memory based on the percept it receives from the environment. The function UPDATE-MEMORY is responsible for incorporating new information from the environment into the agent's memory. This is crucial because agents need to maintain an internal representation of the world to make informed decisions.
  - Action <- CHOOSE-BEST-ACTION(Memory): After updating its memory, the agent selects an action using the function CHOOSE-BEST-ACTION. This function typically involves some form of decision-making process, in which the agent evaluates its current state and the information in its memory to determine the most appropriate action to take. The "best" action might be based on previously learned knowledge or a predefined strategy.
  - Memory <- UPDATE-MEMORY(Memory, Action): After choosing an action, the agent updates its memory again, this time recording the action it has taken, so that future decisions can account for what it has already done.
  - return Action: Finally, the agent returns the selected action as the output of the function, and the action is then executed in the environment.
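The SKELETON-AGENT structure can be sketched as a Python class whose memory persists between calls. Here the memory is simply a list of tagged percepts and actions, and both `update_memory` and `choose_best_action` are hypothetical stand-ins for UPDATE-MEMORY and CHOOSE-BEST-ACTION; the policy assumes the two-room vacuum world (suck if dirty, stop once both rooms have been seen clean, otherwise move).

```python
from typing import List, Tuple, Union

Percept = Tuple[str, str]  # (location, status)
MemoryItem = Tuple[str, Union[Percept, str]]  # ("percept", p) or ("action", a)


class SkeletonAgent:
    """Agent whose memory persists from one invocation to the next."""

    def __init__(self) -> None:
        self.memory: List[MemoryItem] = []  # the agent's memory of the world

    def update_memory(self, kind: str, item: Union[Percept, str]) -> None:
        """Hypothetical UPDATE-MEMORY: append a percept or an action to memory."""
        self.memory.append((kind, item))

    def choose_best_action(self) -> str:
        """Hypothetical CHOOSE-BEST-ACTION for the two-room vacuum world."""
        percepts = [item for kind, item in self.memory if kind == "percept"]
        location, status = percepts[-1]
        if status == "Dirty":
            return "Suck"
        # Stop once both rooms have been observed clean (assumes rooms stay clean).
        clean_seen = {loc for loc, st in percepts if st == "Clean"}
        if clean_seen >= {"A", "B"}:
            return "NoOp"
        return "Right" if location == "A" else "Left"

    def __call__(self, percept: Percept) -> str:
        self.update_memory("percept", percept)  # Memory <- UPDATE-MEMORY(Memory, Percept)
        action = self.choose_best_action()       # Action <- CHOOSE-BEST-ACTION(Memory)
        self.update_memory("action", action)     # Memory <- UPDATE-MEMORY(Memory, Action)
        return action


agent = SkeletonAgent()
print([agent(p) for p in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]])
```

Unlike the reflex agent, this agent can stop (NoOp) once it remembers that both rooms are clean, which is only possible because its memory persists across invocations.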

- 98. Attributes of agent:
  - Autonomy: Agents work without direct intervention from people or other agents, and have some control over their actions and internal state.
  - Social ability: Agents interact with other agents and with humans by means of some agent communication language.
  - Reactivity: An agent's ability to perceive and respond to its environment. The environment an agent perceives might be the physical world, a user interacting through a graphical user interface, or a collection of other agents.
  - Proactivity: Proactivity is the opposite of mere reactivity. It means that an agent doesn't just respond to stimuli from its environment but can also take the initiative. Proactive agents can set and pursue their own goals, make decisions independently, and exhibit goal-directed behavior. This ability allows agents to plan, act, and achieve their objectives even in the absence of external stimuli or directives.
  - Goal oriented: An agent is capable of handling complex, high-level tasks. The agent itself decides how such a task is best split into smaller subtasks, and in which order and manner these subtasks should be performed.

- 99. Rationality: Rationality is the state of being reasonable and exercising good judgment. For an intelligent agent (IA), rationality concerns the actions it is expected to take, based on what it has perceived. Performing actions in order to obtain useful information is an important part of rationality.

  Rational agent: A rational agent always performs the right action, where the right action is the one that causes the agent to be most successful given its percept sequence. The problem the agent solves is characterised by the performance measure, environment, actuators and sensors (PEAS).

- 100. Rational agent: The rationality of an agent depends mainly on the following four parameters:
  - The performance measure, which determines the degree of success.
  - The agent's percept sequence, i.e., everything it has perceived to date.
  - The agent's prior knowledge of the environment.
  - The actions that the agent can perform in the environment.
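One common way to make "most successful, given the percept sequence and prior knowledge" concrete is to choose the action with the highest expected performance. This sketch assumes the vacuum agent currently perceives [A, Dirty] and summarises its knowledge as hypothetical outcome probabilities per action, together with a hypothetical performance score per outcome.

```python
from typing import Dict

# Hypothetical beliefs after perceiving [A, Dirty]: action -> {outcome: probability}.
OUTCOMES: Dict[str, Dict[str, float]] = {
    "Suck": {"room_clean": 0.9, "room_dirty": 0.1},  # cleaning can occasionally fail
    "Right": {"room_dirty": 1.0},
    "NoOp": {"room_dirty": 1.0},
}
# Hypothetical performance measure: score for each outcome.
PERFORMANCE: Dict[str, float] = {"room_clean": 10.0, "room_dirty": 0.0}


def expected_performance(action: str) -> float:
    """Probability-weighted performance score of an action."""
    return sum(p * PERFORMANCE[outcome] for outcome, p in OUTCOMES[action].items())


def rational_action() -> str:
    """Select the action with the highest expected performance measure."""
    return max(OUTCOMES, key=expected_performance)


for a in OUTCOMES:
    print(a, expected_performance(a))
print("Chosen:", rational_action())
```

The agent is rational even though Suck may fail: rationality maximises expected performance given what the agent knows, not the actual outcome, so it does not require omniscience.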

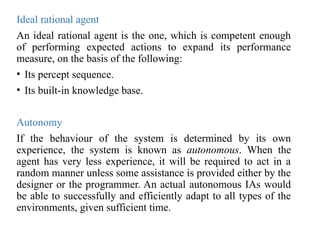

- 101. Ideal rational agent: An ideal rational agent is one that is capable of performing the actions expected to maximise its performance measure, on the basis of:
  - its percept sequence;
  - its built-in knowledge base.

  Autonomy: If the behaviour of a system is determined by its own experience, the system is known as autonomous. When the agent has very little experience, it would have to act randomly unless some assistance is provided by the designer or programmer. A truly autonomous IA would be able to adapt successfully and efficiently to all types of environments, given sufficient time.

- 102. Environment and its properties:
  1. Accessible (fully observable) vs. inaccessible (partially observable) environments
  2. Deterministic vs. nondeterministic environments
  3. Episodic vs. nonepisodic environments
  4. Static vs. dynamic environments
  5. Discrete vs. continuous environments
  6. Single-agent vs. multiagent environments

- 109. Environment Types-I: Fully observable (accessible) vs. partially observable (inaccessible)
  - Fully observable if the agent's sensors detect all aspects of the environment relevant to the choice of action.
  - Could be partially observable due to noisy, inaccurate or missing sensors, or an inability to measure everything that is needed.
  - A model can keep track of what was sensed previously, cannot be sensed now, but is probably still true.
  - Often, if other agents are involved, their intentions are not observable, but their actions are.
  - E.g., chess: the board is fully observable, as are the opponent's moves.
  - Driving: what is around the next bend is not observable (yet).

  Environment Types-II: Deterministic vs. stochastic (non-deterministic)
  - Deterministic: the next state of the environment is completely predictable from the current state and the action executed by the agent.
  - Stochastic: the next state has some uncertainty associated with it.
  - Uncertainty could come from randomness, lack of a good environment model, or lack of complete sensor coverage.
  - Strategic: the environment is deterministic except for the actions of other agents.
  - Examples: non-deterministic environment: the physical world (e.g., a robot on Mars); deterministic environment: a Tic Tac Toe game.
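The difference between deterministic and stochastic environments can be seen in a transition function for the two-room vacuum world. In this sketch the deterministic version always cleans the square on Suck, while the stochastic version is assumed (hypothetically) to fail with probability `fail_prob`; a seeded random generator keeps runs reproducible.

```python
import random
from typing import Dict, Tuple

State = Tuple[str, Dict[str, str]]  # (agent location, status of each room)


def deterministic_step(state: State, action: str) -> State:
    """Next state is fully determined by the current state and the action."""
    location, rooms = state
    rooms = dict(rooms)  # copy so the input state is not modified
    if action == "Suck":
        rooms[location] = "Clean"
    elif action == "Right":
        location = "B"
    elif action == "Left":
        location = "A"
    return location, rooms


def stochastic_step(state: State, action: str, rng: random.Random, fail_prob: float = 0.2) -> State:
    """Same transitions, except Suck fails with probability fail_prob (an assumed value)."""
    if action == "Suck" and rng.random() < fail_prob:
        location, rooms = state
        return location, dict(rooms)  # cleaning failed: state unchanged
    return deterministic_step(state, action)


start: State = ("A", {"A": "Dirty", "B": "Dirty"})
print(deterministic_step(start, "Suck"))  # same result every time
rng = random.Random(0)
print([stochastic_step(start, "Suck", rng, fail_prob=0.5)[1]["A"] for _ in range(5)])
```

In the deterministic case the agent can predict the next state exactly; in the stochastic case the same state and action can lead to different next states, so the agent has to check its percepts after acting.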

- 110. Environment Types-III: Episodic vs. sequential
  - Episodic: the agent's experience is divided into atomic "episodes" (each episode consists of the agent perceiving and then performing a single action), and the choice of action in each episode depends only on the episode itself.
  - Sequential: current decisions affect future decisions, or rely on previous ones.
  - Examples of episodic environments are expert advice systems: an episode is a single question and answer.
  - Most environments (and agents) are sequential.
  - Many are both: a number of episodes, each containing a number of sequential steps to a conclusion.
  - Examples: episodic environment: mail sorting system; non-episodic environment: chess game.

  Environment Types-IV: Discrete vs. continuous
  - Discrete: time moves in fixed steps, usually with one measurement per step (and perhaps one action, but possibly no action). E.g., a game of chess.
  - Continuous: signals constantly come into the sensors, and actions change continually. E.g., driving a car.

- 111. Environment Types-V: Static vs. dynamic. Environment Types-VI: Single agent vs. multi-agent. • Dynamic if the environment may change over time; static if nothing (other than the agent) in the environment changes • Other agents in an environment make it dynamic • The goal might also change over time • The environment is not dynamic merely because the agent moves from one part of it to another, though this has a very similar effect • E.g. playing football: the other players make it dynamic; mowing a lawn is static (unless there is a cat…); expert systems are usually static (unless knowledge changes) • An agent operating by itself in an environment is a single agent • Multi-agent is when other agents are present • A broad definition of another agent is anything that changes from step to step; a stronger definition is that it must sense and act • Multi-agent environments can be competitive or co-operative • Human users are an example of another agent in a system • E.g. other players in a football team (or the opposing team), wind and waves for a sailing agent, other cars for a taxi driver

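- 112. Properties of example task environments:

| Task environment | Fully observable? | Deterministic? | Episodic? | Static? | Discrete? | Single agent? |
|---|---|---|---|---|---|---|
| Solitaire | No | Yes | Yes | Yes | Yes | Yes |
| Backgammon | Yes | No | No | Yes | Yes | No |
| Taxi driving | No | No | No | No | No | No |
| Internet shopping | No | No | No | No | Yes | No |
| Medical diagnosis | No | No | No | No | No | Yes |
| Crossword puzzle | Yes | Yes | Yes | Yes | Yes | Yes |
| English | No | No | No | No | Yes | No |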

- 113. PEAS descriptions for an automated taxi driver and a vacuum cleaner:

| PEAS | Automated taxi driver | Vacuum cleaner |
|---|---|---|
| Performance measure | Safety, time, legal drive, comfort | Cleanliness, efficiency (distance traveled to clean), battery life, security |
| Environment | Roads, other cars, pedestrians, road signs | Room, table, wood floor, carpet, different obstacles |
| Actuators | Steering, accelerator, brake, signal, horn | Wheels, different brushes, vacuum extractor |
| Sensors | Camera, sonar, GPS, speedometer, odometer | Camera, dirt detection sensor, cliff sensor, bump sensors, infrared wall sensors |

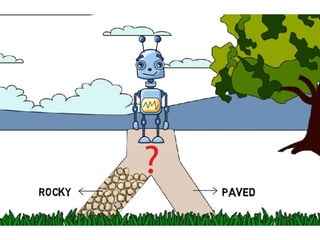

- 114. Types of agents 1. Simple reflex agent 2. Model based agent 3. Goal based agent 4. Utility based agent 5. Learning agent

- 115. 1. Simple Reflex Agent: 1. A simple reflex agent is the most basic type of agent. 2. It makes decisions based solely on the current percept, without considering past percepts or future consequences. 3. It uses a set of condition-action rules (if-then statements) to map percept inputs to actions. 2. Model-Based Agent: 1. A model-based agent maintains an internal model of the world or environment it operates in. 2. It uses this model to plan for future actions and make decisions by considering the current state and its understanding of how actions will affect that state. 3. Goal-Based Agent: 1. A goal-based agent has a specific goal or objective it is trying to achieve. 2. It considers the current state and the desired goal state, and plans a sequence of actions to reach the goal.

- 116. 2. Model-Based Agent: 1. A model-based agent maintains an internal model of the world or environment it operates in. 2. It uses this model to plan for future actions and make decisions by considering the current state and its understanding of how actions will affect that state. 3. Goal-Based Agent: 1. A goal-based agent has a specific goal or objective it is trying to achieve. 2. It considers the current state and the desired goal state, and plans a sequence of actions to reach the goal. 3. Goal-based agents are often more sophisticated and capable of handling complex tasks.

- 117. 5. Learning Agent: 1. A learning agent is capable of learning from its interactions with the environment. 2. It can adapt and improve its performance over time through learning algorithms, such as reinforcement learning or supervised learning. 3. Learning agents can start with minimal knowledge and gradually acquire knowledge and improve their decision-making capabilities through experience.

- 118. 4. Utility-Based Agent: 1. A utility-based agent is similar to a goal-based agent, but it also assigns utilities or values to different states and actions. 2. It aims to maximize the expected utility when making decisions. 3. Utility represents the agent's preference for different outcomes, and the agent tries to choose actions that maximize expected utility.

- 119. Motion-activated lights are an example of simple reflex agents.
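A motion-activated light can be sketched as a single condition-action rule applied to the current percept. This sketch assumes the light has no off-delay timer; a real fixture that stays on for a few minutes after motion stops has to remember when it last saw motion, which is internal state and moves it towards a model-based agent. The function and action names are hypothetical.

```python
def motion_light_agent(motion_detected: bool) -> str:
    """Simple reflex agent: the action depends only on the current percept."""
    if motion_detected:       # condition
        return "LightOn"      # action
    return "LightOff"


print([motion_light_agent(m) for m in (True, False, True)])  # ['LightOn', 'LightOff', 'LightOn']
```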

- 121. 2. Model-Based Agent: Environment Model: A model-based agent maintains an internal model or representation of the environment it interacts with. This model includes information about the current state, possible future states, and the effects of different actions. Comprehensive Knowledge: Model-based agents have a more comprehensive understanding of the environment. They can anticipate the consequences of actions by simulating them in their model. Planning and Decision Making: These agents use their internal models to plan ahead. They can consider multiple possible future states and choose actions that lead to desirable outcomes. They may use algorithms like search and optimization to make decisions. Memory and History: Model-based agents typically have memory or a history of past interactions, allowing them to consider the context of the current decision in light of previous actions and observations.

- 123. 2. Model-based agent: A model-based agent is also known as a reflex agent with internal state. One problem with simple reflex agents is that their actions depend only on the most recent data provided by their sensors. If a reflex agent can keep track of its past states (that is, maintain a description or "model" of its history in the world) and understand how the world evolves, it can choose sensible actions even when the current percept alone is not enough.

- 124. 3. Goal-based agent: Even with the additional knowledge of the current state of the world provided by an agent's internal state, the agent may still not have enough information to decide what to do. The proper action for the agent often depends on its goals. Thus, it must be provided with some goal information.

- 126. 4. Utility-based agent: Goals alone are not sufficient to produce high-quality behavior. Often there are many sequences of actions that achieve the same goal. Given proper criteria, it may be possible to pick the "best" sequence of actions from among all those that achieve the goal. A utility-based agent can be described as having a utility function that maps a state, or a sequence of states, onto a real number that represents its utility or usefulness.

- 130. Types of agent: 1. Simple reflex agent: The simple reflex agent is the simplest kind of agent. These agents select an action based on the current percept, ignoring the rest of the percept history. In a simple reflex agent, the percept-to-action mapping is known as condition-action rules (also called situation-action rules, productions, or if-then rules). It can be represented as follows: if {set of percepts} then {set of actions}. For example, if it is raining then put up an umbrella.

- 131. Code for simple reflex agent
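As a minimal sketch, assuming percepts arrive as a dictionary of boolean features (a hypothetical representation), a simple reflex agent can be written as an ordered list of condition-action rules in which the first rule matching the current percept fires. The rule set below extends the umbrella example with a made-up second rule, and `NoOp` as the default action is an assumption.

```python
from typing import Callable

Percept = dict[str, bool]
Rule = tuple[Callable[[Percept], bool], str]  # (condition, action)


def make_simple_reflex_agent(rules: list[Rule], default: str = "NoOp") -> Callable[[Percept], str]:
    def agent(percept: Percept) -> str:
        # Only the current percept is examined; no history is stored.
        for condition, action in rules:
            if condition(percept):
                return action
        return default
    return agent


# Hypothetical rule set based on the umbrella example.
rules: list[Rule] = [
    (lambda p: p.get("raining", False), "PutUpUmbrella"),
    (lambda p: p.get("sunny", False), "WearSunglasses"),
]
agent = make_simple_reflex_agent(rules)
print(agent({"raining": True}))  # PutUpUmbrella
print(agent({}))                 # NoOp
```

Rule order acts as a priority: if several conditions hold, the earliest rule wins.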

- 132. Limitations • The intelligence level of these agents is very limited. • They work only in a fully observable environment. • They do not hold any knowledge or information about the non-perceptual parts of the state. • Because the knowledge base is static, the rule table is usually too big to generate and store. • If any change happens in the environment, the collection of rules has to be updated.

- 133. Updating the internal state requires information about: 1. How the world evolves independently of the agent 2. What impact the agent's actions have on the environment

- 134. Code for model based agent
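A minimal sketch of a model-based agent for the two-square vacuum world (squares A and B), assuming percepts of the form `(location, status)`. The class name and the `NoOp` action are hypothetical. The internal model encodes the two kinds of knowledge listed above under simple assumptions: dirt never reappears once removed (how the world evolves), and `Suck` cleans the current square (effect of the agent's action). Unlike the reflex version, it can stop once it believes both squares are clean.

```python
from typing import Optional


class ModelBasedVacuumAgent:
    """Reflex agent with internal state for a two-square vacuum world."""

    def __init__(self) -> None:
        # Internal state: last known status of each square (None = not yet seen).
        self.model: dict[str, Optional[str]] = {"A": None, "B": None}

    def update_state(self, location: str, status: str) -> None:
        # Record what the sensors report now; assumed: dirt does not reappear.
        self.model[location] = status

    def act(self, percept: tuple[str, str]) -> str:
        location, status = percept
        self.update_state(location, status)
        if status == "Dirty":
            self.model[location] = "Clean"  # predicted effect of Suck
            return "Suck"
        if self.model["A"] == "Clean" and self.model["B"] == "Clean":
            return "NoOp"  # model says nothing is left to clean
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
percepts = [("A", "Dirty"), ("A", "Clean"), ("B", "Dirty"), ("B", "Clean")]
print([agent.act(p) for p in percepts])  # ['Suck', 'Right', 'Suck', 'NoOp']
```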

- 136. Code for goal based agent
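A minimal sketch of a goal-based agent, assuming a fully observable two-square vacuum world in which the agent perceives the whole state `(location, A dirty?, B dirty?)`. The goal information is "both squares clean", and the agent plans a sequence of actions with breadth-first search; BFS is just one possible planning method, and all names here are hypothetical.

```python
from collections import deque

# State = (agent location, is A dirty?, is B dirty?)
State = tuple[str, bool, bool]
ACTIONS = ("Suck", "Left", "Right")


def result(state: State, action: str) -> State:
    """Transition model: the state an action leads to."""
    location, dirt_a, dirt_b = state
    if action == "Suck":
        return (location, dirt_a and location != "A", dirt_b and location != "B")
    if action == "Left":
        return ("A", dirt_a, dirt_b)
    if action == "Right":
        return ("B", dirt_a, dirt_b)
    return state


def is_goal(state: State) -> bool:
    """Goal information: both squares are clean."""
    return not state[1] and not state[2]


def plan(start: State) -> list[str]:
    """Breadth-first search for a shortest action sequence that reaches the goal."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if is_goal(state):
            return actions
        for action in ACTIONS:
            nxt = result(state, action)
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [action]))
    return []  # no plan found


class GoalBasedVacuumAgent:
    def __init__(self) -> None:
        self.steps: list[str] = []  # remaining actions of the current plan

    def act(self, state: State) -> str:
        if is_goal(state):
            return "NoOp"
        if not self.steps:
            self.steps = plan(state)
        return self.steps.pop(0) if self.steps else "NoOp"


print(plan(("A", True, True)))  # ['Suck', 'Right', 'Suck']
```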


- 138. Code for utility based agent
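A minimal sketch of a utility-based choice for a taxi-like agent. All routes, probabilities, utilities and the toll cost are made-up numbers for illustration. Several routes usually achieve the goal of arriving on time, so a goal-based agent could accept any of them; the utility-based agent instead picks the route with the highest expected utility, here computed as the probability-weighted utility of the outcomes minus a hypothetical toll.

```python
# Hypothetical outcome model: action -> list of (probability, resulting state).
OUTCOMES: dict[str, list[tuple[float, str]]] = {
    "highway": [(0.8, "on_time"), (0.2, "late")],
    "city_roads": [(0.6, "on_time"), (0.4, "late")],
    "toll_road": [(0.95, "on_time"), (0.05, "late")],
}
# Hypothetical utility function: maps each outcome state to a real number.
UTILITY = {"on_time": 10.0, "late": -5.0}
# Hypothetical extra cost of taking each action.
ACTION_COST = {"highway": 0.0, "city_roads": 0.0, "toll_road": 3.0}


def expected_utility(action: str) -> float:
    eu = sum(p * UTILITY[state] for p, state in OUTCOMES[action])
    return eu - ACTION_COST[action]


def utility_based_agent(actions) -> str:
    # Choose the action that maximizes expected utility.
    return max(actions, key=expected_utility)


print(utility_based_agent(OUTCOMES))  # highway (EU 7.0, versus 4.0 and 6.25)
```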


- 140. 5. Learning agent: By actively exploring and experimenting with their environment, the most powerful agents are able to learn. A learning agent can be further divided into four conceptual components: the learning element, the performance element, the critic, and the problem generator.
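A toy sketch of how the four components can fit together, for an agent that repeatedly chooses among a few actions (a bandit-style setting). The class, method names, `epsilon` and the route payoffs are hypothetical, and the environment is assumed to be noise-free. The performance element picks the best-estimated action, the critic turns each outcome into feedback against a performance standard, the learning element updates the estimates, and the problem generator proposes untried or random exploratory actions.

```python
import random
from typing import Callable, Optional


class LearningAgent:
    def __init__(self, actions: list[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.actions = actions
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.value = {a: 0.0 for a in actions}  # estimated value of each action
        self.count = {a: 0 for a in actions}

    def performance_element(self) -> str:
        # Exploit current knowledge: the action with the best estimate.
        return max(self.actions, key=lambda a: self.value[a])

    def problem_generator(self) -> Optional[str]:
        # Suggest exploratory actions: untried ones first, then occasional random ones.
        untried = [a for a in self.actions if self.count[a] == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return None

    def critic(self, outcome: float, standard: float = 0.0) -> float:
        # Feedback measured against a fixed performance standard.
        return outcome - standard

    def learning_element(self, action: str, feedback: float) -> None:
        # Incremental average of the feedback received for this action.
        self.count[action] += 1
        self.value[action] += (feedback - self.value[action]) / self.count[action]

    def step(self, environment: Callable[[str], float]) -> str:
        action = self.problem_generator() or self.performance_element()
        outcome = environment(action)
        self.learning_element(action, self.critic(outcome))
        return action


payoffs = {"route_1": 2.0, "route_2": 5.0, "route_3": 3.0}  # hypothetical
agent = LearningAgent(list(payoffs))
for _ in range(50):
    agent.step(lambda a: payoffs[a])
print(agent.performance_element())  # route_2
```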


- 142. Environment and its properties 1. Accessible (Fully observable) and Inaccessible Environments (partially observable) 2. Deterministic Environment and Nondeterministic Environment 3. Episodic and Nonepisodic Environment 4. Static versus Dynamic Environment 5. Discrete versus Continuous Environment 6. Single Agent versus Multiagent Environment

- 143. Quiz 1: 1. Explain the concept of an intelligent agent. 2. Explain any two types of environments in detail.

Editor's Notes

- #39: https://www.youtube.com/watch?v=bioz_1pHSvs

- #109: When an action is taken and its outcome in the environment is 100% certain, the world is deterministic.

- #110: Episodic: a one-step task, e.g. "Is there a dog in this image?" Sequential: take one action, see what happened, then choose the next actions.

- #111: Static: the environment does not change with time; it changes only when the agent acts, like a puzzle with pieces. Dynamic: it changes on its own, e.g. rain. Multi-agent: many agents act together.

![Agent function](https://image.slidesharecdn.com/artificialintelligenceandcybersecutitymodule1-250318070055-eceea004/85/Artificial-Intelligence-and-Cyber-Secutity-Module-1-pptx-85-320.jpg)

Agent function:

| Percept sequence | Action |
|---|---|
| [A, Clean] | Right |
| [A, Dirty] | Suck |
| [B, Clean] | Left |
| [B, Dirty] | Suck |
| [A, Dirty], [A, Clean] | Right |
| [A, Clean], [B, Dirty] | Suck |
| [B, Dirty], [B, Clean] | Left |
| [B, Clean], [A, Dirty] | Suck |
| [A, Clean], [B, Clean] | No-op |
| [B, Clean], [A, Clean] | No-op |
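The agent function above can be implemented directly as a lookup table indexed by the whole percept sequence (a table-driven agent). This is a minimal sketch; the class name is hypothetical, and returning `NoOp` for a sequence missing from the table is an assumption, since the table only covers sequences of length one and two.

```python
# Agent function from the table: percept sequence -> action.
Percept = tuple[str, str]
TABLE: dict[tuple[Percept, ...], str] = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("B", "Clean"),): "Left",
    (("B", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
    (("B", "Dirty"), ("B", "Clean")): "Left",
    (("B", "Clean"), ("A", "Dirty")): "Suck",
    (("A", "Clean"), ("B", "Clean")): "NoOp",
    (("B", "Clean"), ("A", "Clean")): "NoOp",
}


class TableDrivenAgent:
    def __init__(self, table: dict[tuple[Percept, ...], str]) -> None:
        self.table = table
        self.percepts: list[Percept] = []  # full percept history

    def act(self, percept: Percept) -> str:
        self.percepts.append(percept)
        # Look up the entire history; assumed default for unknown sequences.
        return self.table.get(tuple(self.percepts), "NoOp")


agent = TableDrivenAgent(TABLE)
print(agent.act(("A", "Dirty")))  # Suck
print(agent.act(("A", "Clean")))  # Right
```

The table needs one entry per possible percept sequence, so it grows exponentially with the length of the history; this is why practical agents use compact agent programs instead.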

![Agent program](https://image.slidesharecdn.com/artificialintelligenceandcybersecutitymodule1-250318070055-eceea004/85/Artificial-Intelligence-and-Cyber-Secutity-Module-1-pptx-87-320.jpg)

Agent program:

Function REFLEX-VACUUM-AGENT([location, status]) returns an action

if status = Dirty then return Suck

else if location = A then return Right

else if location = B then return Left
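A direct Python translation of REFLEX-VACUUM-AGENT, assuming percepts are `(location, status)` tuples with locations `"A"`/`"B"` and statuses `"Clean"`/`"Dirty"`. Raising an error for an unknown location is a choice made for this sketch, since the pseudocode only defines two squares.

```python
def reflex_vacuum_agent(percept: tuple[str, str]) -> str:
    """Condition-action rules applied to the current percept only."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    if location == "B":
        return "Left"
    raise ValueError(f"unknown location: {location!r}")


for p in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(p, "->", reflex_vacuum_agent(p))
```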

![Structure of agent](https://image.slidesharecdn.com/artificialintelligenceandcybersecutitymodule1-250318070055-eceea004/85/Artificial-Intelligence-and-Cyber-Secutity-Module-1-pptx-89-320.jpg)

Structure of agent: the structure of an agent can be represented as

Agent = Architecture + Agent program

where

Architecture: the machinery that an agent executes on

Agent program: an implementation of an agent function

Function REFLEX-VACUUM-AGENT([location, status]) returns an action

if status = Dirty then return Suck

else if location = A then return Right

else if location = B then return Left
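To illustrate "Agent = Architecture + Agent program", the sketch below separates the two. The agent program only maps a percept to an action. A hypothetical `run_architecture` function plays the role of the machinery: it reads the sensors of a simulated two-square world, calls the program, and carries out the returned action with its actuators. The world representation (a `dirt` dictionary plus a location) is an assumption.

```python
from typing import Callable

Percept = tuple[str, str]


def reflex_vacuum_program(percept: Percept) -> str:
    """Agent program: maps the current percept to an action."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def run_architecture(program: Callable[[Percept], str], dirt: dict[str, bool],
                     location: str, steps: int) -> list[str]:
    """Hypothetical architecture: sense, run the program, act, repeat."""
    actions = []
    for _ in range(steps):
        percept = (location, "Dirty" if dirt[location] else "Clean")  # sensors
        action = program(percept)                                     # agent program
        if action == "Suck":                                          # actuators
            dirt[location] = False
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
        actions.append(action)
    return actions


print(run_architecture(reflex_vacuum_program, {"A": True, "B": True}, "A", 4))
# ['Suck', 'Right', 'Suck', 'Left']
```

The same program could run on different architectures (a simulator, a physical robot) without change, which is the point of separating the two.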