Big Data Basic Concepts | Presented in 2014

- 2. Intro Intended to whet the audience’s appetite and possibly start a discussion within this environment on this subject among interested parties Big Data is relatively new and still emerging. In the last decade, it has influenced the emergence of new companies as well we the way old business handle data

- 3. Puzzles for the IA How do the likes of Google and Bing index the entire World Wide Web? How do Amazon and eBay maintain a global , dynamic, open online market How can the NSA process phone records and make meaning of the data? How does Facebook handle all your nice pictures and produce them when you ask?

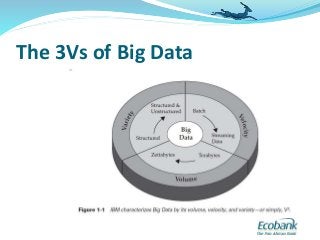

- 4. The 3Vs of Big Data

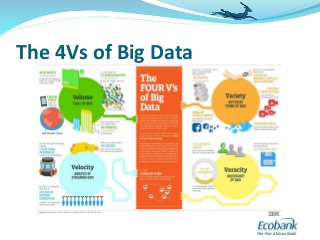

- 5. The 4Vs of Big Data

- 6. 6Vs of Big Data Volume – massive data approaching petabytes Velocity – highly transient data Variety – data is not pre-defined and not quite structured Veracity – Is the data that is being stored, and mined meaningful to the problem being analyzed? Volatility – How long can we keep the data? Validity – Is the data valid and accurate for the intended use? Huh? 3, 4, or 6?

- 7. Why Big Data? – Saptak Sen Human Fault Tolerance Minimize CAPEX Hyper Scale on Demand Low Learning Curve

- 8. Key Drivers for Big Data Platforms Businesses want systems that can survive human error or malicious intent. Businesses do not want to spend too much when the ROIs are not yet clear Businesses want the assurance that even if they start small, they can easily grow big by expanding rather than replacing systems. Businesses and their staff want to spend the minimum amount of time, money and effort on training

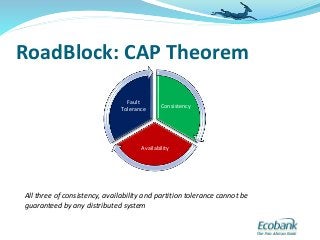

- 9. RoadBlock: CAP Theorem Consistency Availability Fault Tolerance All three of consistency, availability and partition tolerance cannot be guaranteed by any distributed system

- 10. CAP Explained Consistency At any point all result sets fetched from different nodes in a distributed system are the same Availability Data is available when required and response time is within acceptable limits Partition Tolerance The system can survive having large data sharded across many drives/nodes

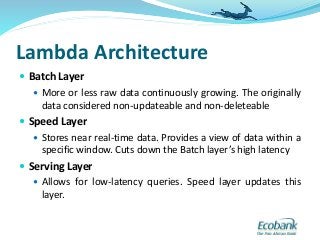

- 11. Lambda Architecture Batch Layer More or less raw data continuously growing. The originally data considered non-updateable and non-deleteable Speed Layer Stores near real-time data. Provides a view of data within a specific window. Cuts down the Batch layer’s high latency Serving Layer Allows for low-latency queries. Speed layer updates this layer.

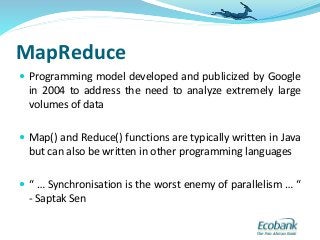

- 13. MapReduce Programming model developed and publicized by Google in 2004 to address the need to analyze extremely large volumes of data Map() and Reduce() functions are typically written in Java but can also be written in other programming languages “ … Synchronisation is the worst enemy of parallelism … “ - Saptak Sen

- 14. Detour: CXPACKET Wait Events FINISH

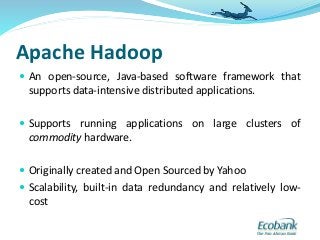

- 17. Apache Hadoop An open-source, Java-based software framework that supports data-intensive distributed applications. Supports running applications on large clusters of commodity hardware. Originally created and Open Sourced by Yahoo Scalability, built-in data redundancy and relatively low- cost

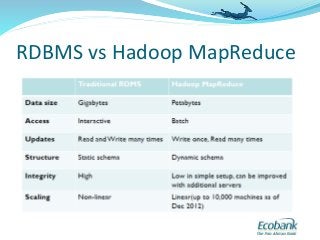

- 19. RDBMS vs Hadoop MapReduce

- 20. Hadoop Framework Hadoop Common contains libraries and utilities needed by other Hadoop modules Hadoop Distributed File System (HDFS) distributed file-system that stores data on commodity machines, providing very high aggregate bandwidth across the cluster. Hadoop YARN resource-management platform for managing compute resources in clusters and using them for scheduling apps Hadoop MapReduce

- 21. NoSQL NoSQL Databases are distributed and are considered better options than RDBMSes for applications that can handle the absence of one of the CAP properties NoSQL as a superior technology over SQL for dealing with the complexities of large volumes of data is a debatable proposition NoSQL as a term was first used by Carlo Strozzi in 1998 and subsequently made popular by Eric Evans in 2009

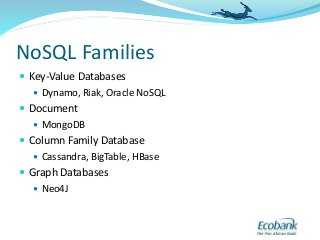

- 22. NoSQL Families Key-Value Databases Dynamo, Riak, Oracle NoSQL Document MongoDB Column Family Database Cassandra, BigTable, HBase Graph Databases Neo4J

- 23. Dynamo Created by Amazon to meet their requirements for Extreme Availability, Extreme Performance and Extreme Scalability Amazon was willing to sacrifice consistency (‘C’ in the well know ACID relational database model) in order to provide higher availability Components of the Dynamo Key-Value Store were Functional Segmentation, Sharding, Replication, and BLOBs

- 24. MongoDB Cross-platform document-oriented database system developed between 2007 and 2010 by a company then known as 10gen Considered the most popular NoSQL Database now used by such big names as eBay, SourceForge and The New york Times http://www.mongodb.org/

- 25. Large Volume: Amazon vs eBay

- 26. Summary Necessity is the mother of invention. The Internet Revolution has driven deep thinkers to amazing heights in the last decade and Big Data is one of the results Big companies like Google and Yahoo showed the maturity and security by sharing with the world such ground breaking discoveries There is certainly a place in the future for Big Data and that furture is approaching at light speed. It may pay to key in.

- 27. … or questions

- 29. Authorities’ Quotes Ignatius Fernandez “ … still believes in the superiority of relational technology but believes that the relational camp needs to get its act together if it wants to compete with the NoSQL camp in performance, scalability, and availability … “ Arup “ … but in the case of the phone records, and especially collated with other records to identify criminal or pseudo- criminal activities such as financial records, travel records, etc., the traditional databases such as Oracle and DB2 likely will not scale well… “

- 30. - Cetin Ozbutun "Oracle Big Data Appliance X4-2 continues to raise the big data bar, offering the industry's only comprehensive appliance for Hadoop to securely meet enterprise big data challenges“ Google “ …Bigtable is used by more than sixty Google products and projects, including Google Analytics, Google Finance, Orkut, Personalized Search, Writely, and Google Earth … “

Editor's Notes

- #4: IA – Internet Age

- #5: Source

- #6: Source: http://www.ibmbigdatahub.com/infographic/four-vs-big-data

- #7: Volume Gigabytes 10^9 bytes Terabytes 10^12 bytes Petabytes 10^15 bytes Exabytes 10^18 bytes Source: http://inside-bigdata.com/2013/09/12/beyond-volume-variety-velocity-issue-big-data-veracity/ Ref: http://theinnovationenterprise.com/summits/big-data-boston-2014

- #8: Human Fault Tolerance – Businesses require systems that can survive human error or malicious intent. Hence the craze for Data Protection and Disaster Recovery Hyper Scale on Demand – businesses want the assurance that even if they start small, they can easily grow big by expanding rather than replacing systems. Minimize CAPEX – Businesses do not want to spend too much when the ROIs are not yet clear Low Learning Curve – Businesses and their staff want to spend the minimum amount of time, money and effort on training Saptak Sen – Senior Product Manager, Azure Data Platform

- #9: Meeting the above requirements faces a challenge called the CAP Theorem on Brewer’s Theorem

- #10: Consistency: At any point all result sets fetched from different data structures are available Availability: Data is available when required and response is as defined in SLA Partition Tolerance: the system can survive having large data sharded across many drives As you scale I/O disk I/O lags other components of s typical system Consistency here is slightly different from ACID consistency BASE, an acronym for Basically Available Soft-state services with Eventual-consistency

- #11: OLAP can sacrifice Consistency for availability but not OLTP Highlight Eventual Consistency Sharding: Partition all tables in a schema in the exact same way. Shards live on different servers in shared nothing fashion

- #12: Batch Layer – All raw data comes in here to the system Speed Layer – The response time limitations of the first layer are mitigated Serving Layer - Acquisition – the Batch Layer Organization Analysis

- #14: “ … Synchronisation is the worst enemy of parallelism … “ - Saptak Sen

- #17: Source: Ignatius Fernandez http://www.confio.com/webinars/nosql-big-data/lib/playback.html Parallelism occurs in both Map and Reduce phases Note Key Value Pairs. Highlight Word Count problem (or Fruit Count problem) Acquisition – the Batch Layer Organization Analysis Hello World for Big Data

- #18: From Google and Yahoos approach to solving their high volume data problems. http://arup.blogspot.com/2013/06/demystifying-big-data-for-oracle.html Doug Cutting, Hadoop's creator, named the framework after his child's stuffed toy elephant HDInsight is Microsoft’s Implementation of Hadoop http://searchcloudcomputing.techtarget.com/definition/Hadoop

- #19: Name Node Stores Metadata and manages access Each block replicated across several Data Nodes

- #20: http://www.snia.org/sites/default/education/tutorials/2013/fall/BigData/SergeBazhievsky_Introduction_to_Hadoop_MapReduce_v2.pdf Designed for analytics but Facebook customied to support realtime through messages in 2008.

- #21: Hadoop MapReduce A programming model for large scale data processing

- #22: http://www.slideshare.net/AswaniVonteddu/big-data-nosql-the-dba

- #23: Concepts: Distributed Hash Tables, Eventual Consistency, Replication and Data Partitioning, Example: Amazon Dynamo Concepts: Distributed Key Value Stores, Supports Nested Columns, Example: Cassandra

- #24: If you are not prepared to work with small schemas, by definition you are not interested in NoSQL. First task in Amazons development of Dynamo was breaking up the monolithic schema. Amazon’s Dynamo did not use distributed transactions but asynchronous replication as such but attempted to provide what is called “Eventual Consistency” Worthy of Note is that eBay uses Oracle and regular SQL to achieve the same objectives are Amazon according to Ignatius Fernandez

- #26: Source: Randy Shoup. http://www.infoq.com/presentations/shoup-ebay-architectural-principles