Big Data Intelligence: from Correlation Discovery to Causal Reasoning

- 1. Big Data Intelligence: from Correlation Discovery to Causal Reasoning. Fei Wu, College of Computer Science, Zhejiang University, http://mypage.zju.edu.cn/wufei/ (July 2019)

- 2. Table of Contents
  1. Correlation discovery via seq2seq learning
  2. Seq2seq learning via memory model
  3. From correlation discovery to causal reasoning
  4. Conclusion

- 3. Big data intelligence: from big data to knowledge. Big data is valuable, but knowledge is more powerful.
  - Diagram labels: Data, Information, Knowledge, Wisdom, Future.
  - Step 1: Collect, transmit, and aggregate data from various types of sources.
  - Step 2: Concentrate data to improve the density of data value.
  - Step 3: Analyze data in depth and find the knowledge.

- 4. From big data to knowledge and decision: from data to knowledge to decision

- 5. From big data to knowledge and decision. Reference: Yueting Zhuang, Fei Wu, Chun Chen, Yunhe Pan, Challenges and Opportunities: From Big Data to Knowledge in AI 2.0, Frontiers of Information Technology & Electronic Engineering, 2017, 18(1):3-14

- 6. Correlation learning: from data to knowledge

- 7. Correlation learning in a shallow manner: latent correlation between visual features (500-D) and acoustical features (400-D).
  - Figure: example feature vectors for the two modalities, such as (3, 9, 5, …, 2, 8, 6) and (34, 56, 49, …, 47, 45).
  - CCA (Canonical Correlation Analysis) and its extensions: Kernel CCA, Sparse CCA, Sparse Structure CCA; 2D CCA, local 2D-CCA, sparse 2D-CCA, 3-D CCA.
  - Reference: Hotelling, H., Relations Between Two Sets of Variates, Biometrika 28(3-4):321-377, 1936
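
As a reminder of what CCA optimizes, a standard statement of its objective is sketched here. The symbols are assumptions that do not appear on the slide: $\Sigma_{xx}$ and $\Sigma_{yy}$ denote the covariance matrices of the (centered) visual and acoustical features, $\Sigma_{xy}$ their cross-covariance, and $w_x$, $w_y$ the projection directions into the shared space.

```latex
\max_{w_x,\, w_y} \;
\rho \;=\;
\frac{w_x^{\top} \Sigma_{xy}\, w_y}
     {\sqrt{w_x^{\top} \Sigma_{xx}\, w_x}\;\sqrt{w_y^{\top} \Sigma_{yy}\, w_y}}
```

With the dimensions quoted on the slide, $w_x$ would be a 500-dimensional vector and $w_y$ a 400-dimensional one, and $\rho$ is the correlation between the two projected views.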

- 8. Correlation learning in a shallow manner: images and audio are mapped into a shared space.

- 9. Correlation learning in a shallow manner. The shallow model: representation in terms of a bag of latent topics. First, Latent Dirichlet Allocation (LDA) is used to perform uni-modal topic modeling for images and texts separately.

- 10. Correlation learning in a shallow manner. The shallow model: Multi-modal Mutual Topic Reinforce Modeling. Second, cross-modal topics (those reflecting the same semantic information) are encouraged and therefore enhanced.
  - Yanfei Wang, Fei Wu, Jun Song, Xi Li, Yueting Zhuang, Multi-modal Mutual Topic Reinforce Modeling for Cross-media Retrieval, ACM Multimedia (full paper), 2014
  - Yueting Zhuang, Haidong Gao, Fei Wu, Siliang Tang, Yin Zhang, Zhongfei Zhang, Probabilistic Word Selection via Topic Modeling, IEEE Transactions on Knowledge and Data Engineering (accepted)

- 11. Correlation learning in a shallow manner. To find relevant correlations between multi-modal data (audio, video, webpages), we must bridge both the semantic gap and the heterogeneity gap by mapping the relevant multi-modal data into a common representation space across modalities.

- 12. Multimodal embedding via deep learning (deep multi-modal embedding).
  - Learn deep representations (embeddings) of multi-modal data with a separate deep model per modality (e.g., a CNN or an RNN).
  - Devise a suitable loss layer and back-propagate its errors to fine-tune the multi-modal deep models, producing a more discriminative common space.
  - References: Cross-Media Hashing with Neural Networks, ACM Multimedia 2014; Learning Multimodal Neural Network with Ranking Examples, ACM Multimedia 2014

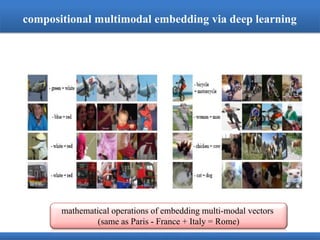

- 13. Compositional multimodal embedding via deep learning, illustrated on a pair of image and sentence.
  - Isolated semantics embedding: visual objects or textual entities are bi-directionally grounded.
  - Compositional semantics embedding: phrases or neighboring visual objects are bi-directionally grounded.
  - References: Deep Compositional Cross-modal Learning to Rank via Local-Global Alignment, ACM Multimedia 2015 (full paper); Multi-modal Deep Embedding via Hierarchical Grounded Compositional Semantics, IEEE Transactions on Circuits and Systems for Video Technology, 28(1):76-89, 2018

- 14. Compositional multimodal embedding via deep learning: the embedding preserves not only the relevance between an image and a text, but also the relevance between visual objects and textual words.

- 15. compositional multimodal embedding via deep learning

- 16. Images Generated captions compositional multimodal embedding via deep learning

- 17. compositional multimodal embedding via deep learning

- 18. Correlation learning via seq2seq architecture. The architecture of seq2seq learning: an encoder reads the input sequence w1, w2, w3, w4, w5 and a decoder emits the output sequence v1, v2, v3, v4.

- 19. seq2seq learning: machine translation. Analysis steps shown for "Jordan likes playing basketball":
  - Part of speech: Jordan/NNP likes/VBZ playing/VBG basketball/NN
  - Parsing: (S (NP Jordan/NNP) (VP likes/VBZ (S (VP playing/VBG (NP basketball/NN)))))
  - Semantic analysis: role labels AD, V, A1 over "Jordan likes playing basketball"
  - Translation: 乔丹 喜欢 打篮球

- 20. seq2seq learning: machine translation. A chain of encoder steps reads "Jordan likes playing basketball" and a chain of decoder steps emits 乔丹 喜欢 打篮球. Learning is data-driven, from large bilingual corpora of aligned source-target sentences.

- 21. seq2seq learning: visual Q-A. A convolutional neural network processes the image, the encoder reads the question "What is the man doing?", and the decoder produces the answer "Riding a bike".

- 22. seq2seq learning: image captioning. The encoder processes the image and the decoder, starting from `<start>`, generates the caption "A man in a white helmet is riding a bike".

- 23. seq2seq learning: video action classification. The encoder-decoder labels each step of the video: NO ACTION, pitching, pitching, pitching, NO ACTION.

- 24. Seq2seq learning: put it together
  - One input, one output: image classification
  - One input, many outputs: image captioning
  - Many inputs, one output: sentiment analysis

- 25. Seq2seq learning: put it together
  - Many inputs, many outputs: machine translation
  - Many inputs, many outputs: video storyline

- 26. The basic unit of deep learning: the cell/neuron. Biological inspiration: modeling one neuron.
  - $a_j$: activation value of unit $j$
  - $w_{j,i}$: weight on the link from unit $j$ to unit $i$
  - $in_i$: weighted sum of inputs to unit $i$
  - $a_i$: activation value of unit $i$
  - $g$: activation function
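
The definitions on this slide combine into $a_i = g(in_i)$ with $in_i = \sum_j w_{j,i}\, a_j$. A minimal Python sketch of that single-unit computation follows. The sigmoid choice for $g$, the optional `bias` term, and the example numbers are assumptions added for illustration; none of them come from the slide.

```python
import math

def sigmoid(z):
    """Logistic activation, one common choice for g (assumed here)."""
    return 1.0 / (1.0 + math.exp(-z))

def unit_activation(a, w, g=sigmoid, bias=0.0):
    """Compute a_i = g(in_i), where in_i = sum_j w[j] * a[j] + bias.

    a: activations a_j of the units feeding unit i
    w: weights w_{j,i} on the links from each unit j to unit i
    bias: optional offset (an assumption; the slide does not mention one)
    """
    in_i = sum(w_j * a_j for w_j, a_j in zip(w, a)) + bias
    return g(in_i)

a_prev = [1.0, 0.5, -1.0]   # illustrative a_j values
w_to_i = [0.2, -0.4, 0.1]   # illustrative w_{j,i} values
a_i = unit_activation(a_prev, w_to_i)
print(round(a_i, 4))
```

Passing a different callable as `g` (for example `math.tanh`) swaps the activation function without changing the weighted-sum step.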

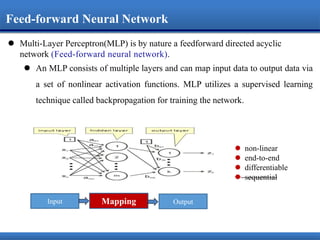

- 27. Feed-forward Neural Network. Neural networks are modeled as collections of neurons connected in an acyclic graph (layer-wise organization). The most common layer type is the fully-connected layer, in which neurons in two adjacent layers are fully pairwise connected but neurons within a single layer share no connections.

- 28. Feed-forward Neural Network. A Multi-Layer Perceptron (MLP) is by nature a feedforward directed acyclic network. An MLP consists of multiple layers and maps input data to output data via a set of nonlinear activation functions. It is trained with a supervised learning technique called backpropagation. Mapping: input → output. Properties: non-linear, end-to-end, differentiable, sequential.

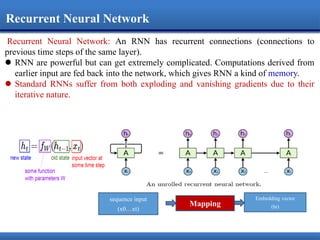

- 29. Recurrent Neural Network: deals with a sequence of vectors $x$ by applying a recurrence formula at every time step:

  $$h_t = f_{W,U,V}(h_{t-1}, x_t)$$

  Here $h_t$ is the new state, $h_{t-1}$ the old state, $x_t$ the input at time $t$, and $f$ a function with parameters $W$, $U$, and $V$. Figure labels: input sequence, predicted output. Note: the same function and the same set of parameters are shared at every time step.
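
The recurrence can be sketched in plain Python as follows. The slide only states that $f$ has parameters $W$, $U$, and $V$; the specific form used here, $h_t = \tanh(W h_{t-1} + U x_t)$ with predicted output $V h_t$, is a common convention and an assumption, as are the toy sizes and values.

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rnn_step(h_prev, x_t, W, U):
    """One recurrence step (assumed form): h_t = tanh(W h_{t-1} + U x_t)."""
    Wh = matvec(W, h_prev)
    Ux = matvec(U, x_t)
    return [math.tanh(a + b) for a, b in zip(Wh, Ux)]

def run_rnn(xs, h0, W, U, V):
    """Apply the same step, with the same W, U, V, at every time step."""
    h, states, outputs = h0, [], []
    for x_t in xs:
        h = rnn_step(h, x_t, W, U)
        states.append(h)
        outputs.append(matvec(V, h))  # predicted output (assumed): V h_t
    return states, outputs

# Illustrative sizes: 2-D state, 1-D input, 1-D output.
W = [[0.5, 0.0], [0.0, 0.5]]
U = [[1.0], [-1.0]]
V = [[1.0, 1.0]]
xs = [[1.0], [0.0], [-1.0]]
states, outputs = run_rnn(xs, [0.0, 0.0], W, U, V)
print(len(states), len(outputs))
```

Because `run_rnn` reuses one set of parameters inside the loop, the sequence can have any length without adding parameters, which is the point the slide's note makes.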

- 30. Recurrent Neural Network: an RNN has recurrent connections (connections to previous time steps of the same layer). RNNs are powerful but can get extremely complicated. Computations derived from earlier inputs are fed back into the network, which gives an RNN a kind of memory. Standard RNNs suffer from both exploding and vanishing gradients due to their iterative nature. Mapping: sequence input ($x_0 \ldots x_t$) → embedding vector ($h_t$).

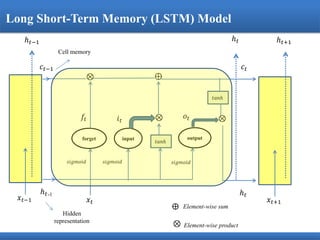

- 31. Long Short-Term Memory (LSTM) Model. LSTM is an RNN devised to deal with the exploding and vanishing gradient problems of RNNs. An LSTM hidden layer consists of a set of recurrently connected blocks, known as memory cells. Each memory cell is controlled by three multiplicative units: the input, output, and forget gates. The input to the cell is multiplied by the activation of the input gate, the output to the net is multiplied by the output gate, and the previous cell values are multiplied by the forget gate. Reference: Sepp Hochreiter & Jürgen Schmidhuber, Long short-term memory, Neural Computation, 9(8):1735-1780, MIT Press, 1997

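- 32. Long Short-Term Memory (LSTM) Model. Figure: an LSTM cell unrolled over inputs $x_{t-1}$, $x_t$, $x_{t+1}$ with hidden representations $h_{t-1}$, $h_t$, $h_{t+1}$. At time $t$, sigmoid units driven by $x_t$ and $h_{t-1}$ produce the forget gate $f_t$, the input gate $i_t$, and the output gate $o_t$; the cell memory goes from $c_{t-1}$ to $c_t$ through element-wise products (⊗), a tanh unit, and an element-wise sum (⊕); a second tanh and the output gate yield $h_t$.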
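
The gating described on the two LSTM slides can be sketched as a single scalar step. This is a hedged illustration of the commonly used formulation with a forget gate, not the authors' implementation: the parameter dictionary `p`, the candidate name `g_t`, and all numeric values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One scalar LSTM step.

    p maps each of 'f', 'i', 'o', 'g' to a (w_x, w_h, b) triple;
    these parameter names are illustrative, not from the slides.
    """
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x_t + w_h * h_prev + b

    f_t = sigmoid(pre("f"))         # forget gate: scales the previous cell value
    i_t = sigmoid(pre("i"))         # input gate: scales the new candidate
    o_t = sigmoid(pre("o"))         # output gate: scales what leaves the cell
    g_t = math.tanh(pre("g"))       # candidate input to the cell
    c_t = f_t * c_prev + i_t * g_t  # products, then sum: new cell memory
    h_t = o_t * math.tanh(c_t)      # hidden representation
    return h_t, c_t

params = {k: (0.5, 0.5, 0.0) for k in "fiog"}
h, c = 0.0, 0.0
for x in [1.0, -1.0, 0.5]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

When the forget gate is close to 1 and the input gate close to 0, `c_t` stays near `c_prev`, which is how the cell can carry information across many time steps.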

- 33. Table of Contents
  1. Correlation discovery via seq2seq learning
  2. Seq2seq learning via memory model
  3. From correlation discovery to causal reasoning
  4. Conclusion

- 34. "The behavior of the computer at any moment is determined by the symbols which he is observing and his 'state of mind' at that moment." (Alan Turing) Three kinds of memory in our brain:
  - Sensory memory (multi-modal perception), duration: < 5 sec
  - Working memory (intuition, inspiration, and reasoning), duration: < 30 sec
  - Long-term memory (priors and knowledge), duration: 1 sec to lifelong
  - Reference: Baddeley, A., Working Memory, Science, 1992, 255(5044):556-559

- 35. From Turing Machine to Neural Turing Machine. Reference: A. M. Turing, On Computable Numbers with an Application to the Entscheidungsproblem, Proceedings of the London Mathematical Society, Ser. 2, Vol. 42, 1937

- 36. From Turing Machine to Neural Turing Machine. References:
  - Alex Graves, et al., Hybrid computing using a neural network with dynamic external memory, Nature 538, 471-476, 2016
  - Alex Graves, et al., Neural Turing Machines
  - Jason Weston, et al., Memory Networks, arXiv:1410.3916

- 37. seq2seq Q-A via knowledge memory (figure labels: document, question, answer). References:
  - Temporal Interaction and Causation Influence in Community-based Question Answering, IEEE Transactions on Knowledge and Data Engineering, 29(10):2304-2317, 2017
  - Temporality-enhanced knowledge memory network for factoid question answering, Frontiers of Information Technology & Electronic Engineering, 19(1):104-115, 2018

- 38. seq2seq Q-A via knowledge memory

- 39. seq2seq Q-A via knowledge memory

- 40. Reasoning, Attention, Memory (RAM). Mission: how to effectively bring data, priors, and knowledge into data-driven learning.

  | Shallow models (X) | Deep models (Deep or Neural + X) |
  | --- | --- |
  | Language model | Neural language model |
  | Bayesian learning | Bayesian deep learning |
  | Turing machine | Neural Turing machine |
  | Reinforcement learning | Deep reinforcement learning |

- 41. Table of Contents
  1. Correlation discovery via seq2seq learning
  2. Knowledge Discovery: from data to knowledge
  3. From correlation discovery to causal reasoning
  4. Conclusion

- 42. Correlation does not mean causation: the rooster's crow and the rising of the sun (the rooster does not cause the sun to rise).

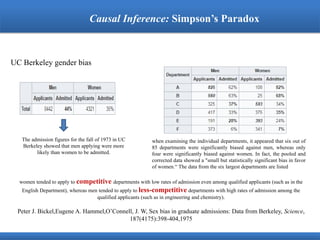

- 43. Causal Inference: Simpson's Paradox. UC Berkeley gender bias.
  - The admission figures for the fall of 1973 at UC Berkeley showed that men applying were more likely than women to be admitted.
  - When examining the individual departments, however, it appeared that six out of 85 departments were significantly biased against men, whereas only four were significantly biased against women. In fact, the pooled and corrected data showed a "small but statistically significant bias in favor of women."
  - The slide lists the data from the six largest departments.
  - Women tended to apply to competitive departments with low rates of admission even among qualified applicants (such as the English department), whereas men tended to apply to less-competitive departments with high rates of admission among the qualified applicants (such as engineering and chemistry).
  - Reference: Peter J. Bickel, Eugene A. Hammel, J. W. O'Connell, Sex bias in graduate admissions: Data from Berkeley, Science, 187(4175):398-404, 1975

- 44. Causal Inference: Simpson's Paradox. Results of a study into a new drug, with gender being taken into account:

  | | Drug | No Drug |
  | --- | --- | --- |
  | Men | 81 out of 87 recovered (93%) | 234 out of 270 recovered (87%) |
  | Women | 192 out of 263 recovered (73%) | 55 out of 80 recovered (69%) |
  | Combined data | 273 out of 350 recovered (78%) | 289 out of 350 recovered (83%) |

  $$\frac{b}{a} < \frac{d}{c}, \qquad \frac{b'}{a'} < \frac{d'}{c'}, \qquad \frac{b + b'}{a + a'} > \frac{d + d'}{c + c'}$$

  In male patients, drug takers had a better recovery rate than those who went without the drug (93% vs 87%). In female patients, again, those who took the drug had a better recovery rate than nontakers (73% vs 69%). However, in the combined population, those who did not take the drug had a better recovery rate than those who did (83% vs 78%).

  The data seem to say that if we know the patient's gender (male or female) we can prescribe the drug, but if the gender is unknown we should not! Obviously, that conclusion is ridiculous. If the drug helps men and women, it must help anyone; our lack of knowledge of the patient's gender cannot make the drug harmful.
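
The reversal can be checked directly from the counts on this slide. The sketch below uses exact fractions; only the variable and function names are invented for the example.

```python
from fractions import Fraction

# (recovered, total) counts copied from the slide.
counts = {
    ("men", "drug"): (81, 87),
    ("men", "no drug"): (234, 270),
    ("women", "drug"): (192, 263),
    ("women", "no drug"): (55, 80),
}

def rate(group, arm):
    """Recovery rate within one gender group and one treatment arm."""
    recovered, total = counts[(group, arm)]
    return Fraction(recovered, total)

def pooled_rate(arm):
    """Recovery rate for one treatment arm with both genders combined."""
    recovered = sum(r for (g, a), (r, n) in counts.items() if a == arm)
    total = sum(n for (g, a), (r, n) in counts.items() if a == arm)
    return Fraction(recovered, total)

for group in ("men", "women"):
    print(group, rate(group, "drug") > rate(group, "no drug"))       # True, True
print("combined", pooled_rate("drug") > pooled_rate("no drug"))      # False
```

The drug wins inside each group and loses in the pooled data because the two arms have very different gender mixes: 263 of the 350 drug takers are women, against 80 of the 350 non-takers.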

- 45. Causal Inference: Simpson's Paradox. Results of a study into a new drug, with gender being taken into account:

  | | Drug | No Drug |
  | --- | --- | --- |
  | Men | 81 out of 87 recovered (93%) | 234 out of 270 recovered (87%) |
  | Women | 192 out of 263 recovered (73%) | 55 out of 80 recovered (69%) |
  | Combined data | 273 out of 350 recovered (78%) | 289 out of 350 recovered (83%) |

  $$\frac{b}{a} < \frac{d}{c}, \qquad \frac{b'}{a'} < \frac{d'}{c'}, \qquad \frac{b + b'}{a + a'} > \frac{d + d'}{c + c'}$$

  In order to decide whether the drug will harm or help a patient, we first have to understand the story behind the data: the causal mechanism that led to, or generated, the results we see. For instance, suppose we knew an additional fact: estrogen has a negative effect on recovery, so women are less likely to recover than men, regardless of the drug. In addition, as we can see from the data, women are significantly more likely to take the drug than men are.

  So, the reason the drug appears to be harmful overall is that, if we select a drug user at random, that person is more likely to be a woman and hence less likely to recover than a random person who does not take the drug. Put differently, being a woman is a common cause of both drug taking and failure to recover.

  Therefore, to assess the effectiveness, we need to compare subjects of the same gender, thereby ensuring that any difference in recovery rates between those who take the drug and those who do not is not ascribable to estrogen. This means we should consult the segregated data, which shows us unequivocally that the drug is helpful. This matches our intuition, which tells us that the segregated data is "more specific," hence more informative, than the unsegregated data.
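
Comparing subjects of the same gender can be made quantitative with the standard adjustment formula, $P(\text{recover} \mid do(\text{arm})) = \sum_g P(\text{recover} \mid \text{arm}, g)\, P(g)$. The sketch below applies it to the slide's counts. It assumes, as the slide's story does, that gender is the only common cause of drug taking and recovery; the function name is hypothetical.

```python
from fractions import Fraction

# (recovered, total) counts copied from the slide.
counts = {
    ("men", "drug"): (81, 87),
    ("men", "no drug"): (234, 270),
    ("women", "drug"): (192, 263),
    ("women", "no drug"): (55, 80),
}

def adjusted_rate(arm):
    """Gender-adjusted recovery rate: sum_g P(recover | arm, g) * P(g).

    Assumes gender is the only confounder (an assumption of this sketch).
    """
    population = sum(n for (_, n) in counts.values())
    total = Fraction(0)
    for group in ("men", "women"):
        group_size = sum(n for (g, _), (_, n) in counts.items() if g == group)
        recovered, n = counts[(group, arm)]
        total += Fraction(recovered, n) * Fraction(group_size, population)
    return total

drug = adjusted_rate("drug")
no_drug = adjusted_rate("no drug")
print(float(drug), float(no_drug), drug > no_drug)
```

Each gender-specific rate is weighted by that gender's share of the whole study population rather than its share of one treatment arm, so the adjusted comparison favors the drug, in agreement with the segregated data.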

- 46. Difference between Statistical Learning and Causal Inference: the traditional statistical inference paradigm.
  - Paradigm: joint distribution $P$ → data → inference → $Q(P)$ (aspects of $P$).
  - Example: infer whether customers who bought product A would also buy product B by modeling the joint distribution of A and B: $Q = P(B \mid A)$.
  - "The object of statistical methods is the reduction of data" (Fisher 1922).
  - References: Judea Pearl, Causality: models, reasoning, and inference (second edition), Cambridge University Press, 2009; Judea Pearl, Madelyn Glymour, Nicholas P. Jewell, Causal inference in statistics: a primer, John Wiley & Sons, 2016
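
To make the purely statistical query $Q = P(B \mid A)$ concrete, here is a small sketch that estimates it by counting. The purchase records and the function name are hypothetical, invented only for illustration.

```python
# Hypothetical purchase records: each set lists the products one customer bought.
baskets = [
    {"A", "B"},
    {"A"},
    {"A", "B", "C"},
    {"B"},
    {"C"},
    {"A", "B"},
]

def conditional_probability(baskets, given, target):
    """Estimate P(target | given) as count(given and target) / count(given)."""
    with_given = [b for b in baskets if given in b]
    if not with_given:
        raise ValueError("no basket contains the conditioning product")
    return sum(target in b for b in with_given) / len(with_given)

q = conditional_probability(baskets, "A", "B")
print(q)  # 0.75: three of the four baskets containing A also contain B
```

This estimate describes only the distribution that generated the records; by itself it says nothing about what would happen if the seller changed something, such as the price.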

- 47. Difference between Statistical Learning and Causal Inference: from statistical inference to causal inference.
  - Paradigm: a change turns joint distribution $P$ into joint distribution $P'$; from the data, infer $Q(P')$ (aspects of $P'$).
  - How does $P$ change to $P'$? Answering this requires a new oracle.
  - Examples: estimate $P'(\text{sales})$ if we double the price; estimate $P'(\text{cancer})$ if we ban smoking.

- 48. Causal inference hierarchy
  - Observational questions: what if we see A (what is?), $P(y \mid A)$
  - Action questions: what if we do A (what if?), $P(y \mid do(A))$
  - Counterfactual questions: what if we did things differently (why?), $P(y' \mid A)$
  - Options: with what probability
  - Actions: B will be true if we do A.
  - Counterfactuals: B would be different if A were true.

- 49. Correlation to causal. Source: Judea Pearl, Theoretical Impediments to Machine Learning With Seven Sparks from the Causal Revolution (2018)

  | Level (symbol) | Typical activity | Typical questions | Examples |
  | --- | --- | --- | --- |
  | Association, $P(y \mid x)$ | Seeing | What is? How would seeing $X$ change my belief in $Y$? | What does a symptom tell me about a disease? What does a survey tell us about the election results? |
  | Intervention, $P(y \mid do(x), z)$ | Doing, intervening | What if? What if I do $X$? | What if I take aspirin, will my headache be cured? What if we ban cigarettes? |
  | Counterfactuals, $P(y_x \mid x', y')$ | Imagining, retrospection | Why? Was it $X$ that caused $Y$? What if I had acted differently? | Was it the aspirin that stopped my headache? Would Kennedy be alive had Oswald not shot him? What if I had not been smoking the past 2 years? |
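
The gap between the first two levels, seeing ($P(y \mid x)$) versus doing ($P(y \mid do(x))$), can be shown on a toy model with one confounder. Everything in this sketch is invented for illustration: the binary variables $Z$, $X$, $Y$, the probability tables, and the function names. The probabilities are enumerated exactly rather than sampled.

```python
from fractions import Fraction as F

P_Z = {0: F(1, 2), 1: F(1, 2)}              # confounder Z
P_X1_GIVEN_Z = {0: F(1, 10), 1: F(9, 10)}   # P(X=1 | Z=z): Z strongly drives X

def p_y1(x, z):
    """P(Y=1 | X=x, Z=z): X has a modest effect, Z a strong one."""
    return F(1, 10) + F(2, 10) * x + F(6, 10) * z

def p_x(x, z):
    """P(X=x | Z=z)."""
    return P_X1_GIVEN_Z[z] if x == 1 else 1 - P_X1_GIVEN_Z[z]

def seeing(x):
    """P(Y=1 | X=x): condition on observing X=x."""
    joint = sum(p_y1(x, z) * p_x(x, z) * P_Z[z] for z in P_Z)
    evidence = sum(p_x(x, z) * P_Z[z] for z in P_Z)
    return joint / evidence

def doing(x):
    """P(Y=1 | do(X=x)): set X=x while leaving Z's distribution untouched."""
    return sum(p_y1(x, z) * P_Z[z] for z in P_Z)

print(seeing(1) - seeing(0), doing(1) - doing(0))  # 17/25 1/5
```

Observing $X = 1$ also carries information about $Z$, so the associational contrast (17/25) overstates the effect of setting $X$ by intervention (1/5). Answering the intervention question requires the causal structure, not only the joint distribution.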

- 50. Table of Contents
  1. Correlation discovery via seq2seq learning
  2. Knowledge Discovery
  3. From correlation discovery to causal reasoning
  4. Conclusion

- 51. China's National 15-year AI Plan. This is the first top-level artificial intelligence plan: it aims to develop a new generation of artificial intelligence (AI) in China over the next 15 years and sets ambitious goals up to 2030.

- 52. National 15-year AI Plan: China's A.I. revolution

- 53. New Generation Artificial Intelligence: AI 2.0
  - Yunhe Pan: the leader of New AI; President of Zhejiang University (1995-2006); Deputy VP of the Chinese Academy of Engineering (2006-2015).
  - "…AI faces important adjustments, and scientific foundations are confronted with new breakthroughs, as AI enters a new stage: AI 2.0…"
  - Reference: Yunhe Pan, Heading toward Artificial Intelligence 2.0, Engineering, 2016, 409-413

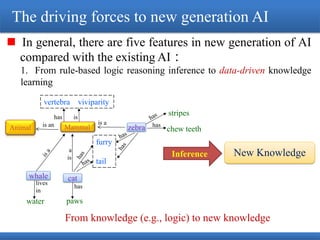

- 54. The driving forces of new-generation AI. In general, new-generation AI has five features compared with existing AI. Feature 1: from rule-based logic reasoning to data-driven knowledge learning.
  - Inference: from knowledge (e.g., logic) to new knowledge.
  - Figure: a knowledge graph linking Animal, Mammal, zebra, cat, and whale to attributes such as water, paws, chew, teeth, furry, tail, stripes, vertebra, and viviparity through "is a", "has", and "lives in" relations.

- 55. The driving forces of new-generation AI. Machine learning: from big data (e.g., documents and images) to knowledge.
  - Concepts
  - Entities
  - Attributes
  - Relations

- 56. The driving forces of new-generation AI. Feature 2: from single-media data processing to cross-media learning and reasoning. How to use data with different modalities from different sources to understand our real world becomes a great challenge. Sources: images, social media, micro messages/short texts, video, surveillance video, webpages, other sensors, …

- 57. The driving forces of new-generation AI. Bridging the gap between low-level features and high-level semantics: relevant multi-modal data (audio, video, webpages) are mapped into a common representation space across modalities.

- 58. The driving forces of new-generation AI. Feature 3: from machine intelligence to human-machine hybrid-augmented intelligence.

  | Human brain | Machine intelligence |
  | --- | --- |
  | Self-learning | Learning by examples |
  | Adaptation | Routine |
  | Common sense | No |
  | Intuition | Logic |
  | … | … |

- 59. The driving forces of new-generation AI. How can human cognitive capabilities or human-like cognitive models (e.g., intuition or transfer learning) be introduced into AI systems?

- 60. The driving forces of new-generation AI. Feature 4: from individual intelligence to collective intelligence (crowdsourcing intelligence). Collective intelligence can combine the strengths of humans and computers to accomplish tasks that neither can do alone.

- 61. The driving forces of new-generation AI. Crowd intelligence:
  - Wikipedia
  - City transportation
  - Online recommendation
  - Smart cities

- 62. The driving forces of new-generation AI. Feature 5: from robots to autonomous unmanned systems. Autonomous unmanned systems are man-made systems capable of carrying out operations or management by means of advanced technologies without human intervention.

- 63. The main architecture of new-generation AI
  - Fundamental AI research
  - AI chips, AI super-computing systems, AI software and hardware, AI cloud platforms
  - Big data intelligence, collective intelligence, cross-media intelligence, human-machine hybrid intelligence, unmanned intelligence
  - Intelligent economy and smart society

- 64. Conclusion

  | Traditional AI | Artificial General Intelligence |
  | --- | --- |
  | Focus on having knowledge and skills | Focus on acquiring knowledge and skills |
  | Action acquired via programming | Ability acquired via learning |
  | Domain-specific ability via rule-based and exemplar-based methods | General ability via abstraction (intuition) and context (common sense) |
  | Learning by data and rules | Learning to learn |

- 65. The next-generation AI
  - integrates data-driven machine learning approaches (bottom-up) with knowledge-guided methods (top-down);
  - employs data with different modalities (e.g., visual, auditory, and natural language) to perform cross-media learning and inference;
  - moves from the pursuit of an intelligent machine to hybrid-augmented intelligence (i.e., high-level man-machine collaboration and fusion);
  - aims at a more explainable, robust, open, and general AI.

- 66. The convergence of AI learning models
  - Learning from rules: logic inference (formal methods)
  - Learning from data: discovering hidden patterns (statistical methods)
  - Learning from experience: exploration and exploitation (control theory)
  - Fusion models?

- 67. The convergence of AI, neuroscience, and domain-specific applications: how can they borrow strength from each other and collaborate with each other?

- 68. Thanks