Build Your Own VR Display Course - SIGGRAPH 2017: Part 4

- 1. Build Your Own VR Display: Spatial Sound. Nitish Padmanaban, Stanford University, stanford.edu/class/ee267/

- 2. Overview • What is sound? • The human auditory system • Stereophonic sound • Spatial audio of point sound sources • Recorded spatial audio Zhong and Xie, “Head-Related Transfer Functions and Virtual Auditory Display”

- 3. What is Sound? • “Sound” is a pressure wave propagating in a medium • The speed of sound is $c = \sqrt{K/\rho}$, where $c$ is the velocity, $\rho$ is the density of the medium and $K$ is the elastic bulk modulus • In air, the speed of sound is about 340 m/s • In water, the speed of sound is about 1,483 m/s
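As a quick sanity check of $c = \sqrt{K/\rho}$, the sketch below plugs in approximate material constants near 20 °C. The bulk moduli and densities are assumptions, not values from the slides; for air, the adiabatic bulk modulus (about 1.42×10^5 Pa) is the relevant one.

```python
import math


def speed_of_sound(bulk_modulus_pa: float, density_kg_m3: float) -> float:
    """Newton-Laplace relation c = sqrt(K / rho), returned in m/s."""
    return math.sqrt(bulk_modulus_pa / density_kg_m3)


# Assumed approximate constants near 20 degrees C.
AIR_K, AIR_RHO = 1.42e5, 1.204      # adiabatic bulk modulus (Pa), density (kg/m^3)
WATER_K, WATER_RHO = 2.2e9, 998.0   # bulk modulus (Pa), density (kg/m^3)

c_air = speed_of_sound(AIR_K, AIR_RHO)
c_water = speed_of_sound(WATER_K, WATER_RHO)
print(f"air: {c_air:.0f} m/s, water: {c_water:.0f} m/s")
```

With these inputs the results land close to the values quoted on the slide; small differences come from temperature and the exact constants chosen.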

- 4. Producing Sound • Sound is a longitudinal vibration of air particles • Speakers create wavefronts by physically compressing the air, much like one could compress a slinky

- 5. The Human Auditory System [Figure: anatomy of the ear with the pinna labeled; source: Wikipedia]

- 6. The Human Auditory System • Hair receptor cells in the cochlea pick up vibrations [Figure: anatomy of the ear with the pinna and cochlea labeled; source: Wikipedia]

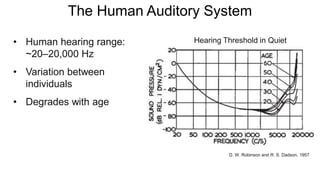

- 7. The Human Auditory System • Human hearing range: ~20–20,000 Hz • Varies between individuals • Degrades with age [Figure: Hearing Threshold in Quiet; D. W. Robinson and R. S. Dadson, 1957]

- 8. Stereophonic Sound • Mainly captures differences between the ears: • Inter-aural time difference • Amplitude differences from path length and scattering [Figure: “Hello, SIGGRAPH!” reaches the left ear at time t and the right ear at time t + Δt; source: Wikipedia]
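A rough sketch of the inter-aural time difference: if the two ears are modeled as points a distance $d$ apart in free field (an assumption, not from the slides), a distant source at azimuth $\theta$ produces a path difference of about $d \sin\theta$, so $\Delta t \approx d \sin\theta / c$. The ear spacing of 0.18 m and $c$ = 343 m/s are assumed values.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air, assumed (~20 C)
EAR_SPACING = 0.18      # m, assumed distance between the two ears


def interaural_time_difference(azimuth_deg: float,
                               ear_spacing: float = EAR_SPACING,
                               c: float = SPEED_OF_SOUND) -> float:
    """Far-field ITD in seconds for two point ears in free field.

    azimuth_deg = 0 is straight ahead, +/-90 is fully to one side.
    This toy model ignores the extra path the sound travels around the head.
    """
    path_difference = ear_spacing * math.sin(math.radians(azimuth_deg))
    return path_difference / c


for az in (0, 30, 90):
    print(az, f"{interaural_time_difference(az) * 1e3:.3f} ms")
```

Even at 90° the delay is only about half a millisecond, which is why the auditory system must resolve very small timing differences.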

- 9. Stereo Panning • Only uses the amplitude differences • Relatively common in stereo audio tracks • Works with any source of audio [Figure: L and R gains (0, 0.5, 1) as a source moves along a line of sound]
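A minimal sketch of amplitude-only panning, assuming a linear gain law (one common choice; the exact gain curve on the slide is not recoverable). The `position` parameter is a hypothetical name: 0 is fully left, 1 is fully right.

```python
def pan(mono, position):
    """Linear amplitude panning of a mono signal into (left, right).

    position = 0.0 -> fully left, 0.5 -> center, 1.0 -> fully right.
    The two gains always sum to 1; no time difference is introduced.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be in [0, 1]")
    gain_left, gain_right = 1.0 - position, position
    left = [gain_left * x for x in mono]
    right = [gain_right * x for x in mono]
    return left, right


left, right = pan([1.0, -2.0], 0.25)  # source a quarter of the way from L to R
```

A frequently used alternative is constant-power panning, with gains $\cos(p\pi/2)$ and $\sin(p\pi/2)$, which avoids the loudness dip at the center that the linear law produces.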

- 10. Stereophonic Sound Recording • Use two microphones • The A-B technique captures differences in time of arrival • Other configurations also work, such as the X-Y technique, which captures differences in amplitude [Figure: stereo microphones (Rode, Olympus) and the X-Y technique; source: Wikipedia]

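- 11. Stereophonic Sound Synthesis • Ideal case: each ear's impulse response is a scaled and shifted Dirac peak • Shortcoming: many positions produce identical delays and gains (e.g., a source mirrored front-to-back), so they cannot be told apart [Figure: input signal and the L and R impulse responses, amplitude vs. time]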
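In the ideal case, convolving with a scaled, shifted Dirac peak just delays and scales the input, so each ear's signal can be produced directly. A minimal sketch; the delays (in samples) and gains are hypothetical inputs that would come from the source geometry (e.g., 0.5 ms is about 22 samples at 44.1 kHz).

```python
def delay_and_scale(signal, delay_samples, gain):
    """One ear's signal for the ideal impulse response gain * delta[n - delay]."""
    return [0.0] * delay_samples + [gain * x for x in signal]


def synthesize_ideal_stereo(signal, delay_l, gain_l, delay_r, gain_r):
    """Left/right signals from per-ear delays and gains (ideal Dirac case)."""
    left = delay_and_scale(signal, delay_l, gain_l)
    right = delay_and_scale(signal, delay_r, gain_r)
    # Pad the shorter channel so both can be played back together.
    n = max(len(left), len(right))
    left += [0.0] * (n - len(left))
    right += [0.0] * (n - len(right))
    return left, right


left, right = synthesize_ideal_stereo([1.0, 0.5], 0, 1.0, 2, 0.5)
```

Mirroring the source front-to-back leaves every delay and gain unchanged, which is exactly the ambiguity this simple model cannot resolve.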

- 12. Stereophonic Sound Synthesis • In practice, the path lengths and scattering are more complicated, including scattering by the ears, shoulders, etc. [Figure: realistic L and R impulse responses, amplitude vs. time]

- 13. Head-Related Impulse Response (HRIR) • Captures temporal responses for all possible sound directions, parameterized by azimuth $\theta$ and elevation $\phi$ • Could also have a distance parameter • Can be measured with two microphones in the ears of a mannequin and speakers all around [Figure: azimuth θ and elevation φ relative to the L and R ears; Zhong and Xie, “Head-Related Transfer Functions and Virtual Auditory Display”]

- 14. Head-Related Impulse Response (HRIR) • CIPIC HRTF database: http://interface.cipic.ucdavis.edu/sound/hrtf.html • Elevation: –45° to 230.625°, azimuth: –80° to 80° • Need to interpolate between discretely sampled directions [V. R. Algazi, R. O. Duda, D. M. Thompson and C. Avendano, “The CIPIC HRTF Database,” 2001]
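A sketch of the interpolation step, using plain bilinear blending of the four surrounding measured HRIRs. Several things here are assumptions: the grid below is our understanding of the CIPIC sampling (25 non-uniform azimuths, 50 elevations in 5.625° steps, in CIPIC's interaural-polar coordinates); `hrirs[a][e]` is a hypothetical in-memory layout; and out-of-range angles are simply clamped rather than wrapped. Linear blending of time-domain HRIRs is the simplest option and can smear timing cues between neighbors.

```python
import bisect

# Assumed CIPIC sampling grid (interaural-polar coordinates).
CIPIC_AZIMUTHS = [-80, -65, -55] + list(range(-45, 50, 5)) + [55, 65, 80]
CIPIC_ELEVATIONS = [-45 + 5.625 * k for k in range(50)]


def bracket(grid, value):
    """Return (i, j, w): grid neighbors around value and the weight of grid[j]."""
    value = min(max(value, grid[0]), grid[-1])  # clamp to the measured range
    j = bisect.bisect_left(grid, value)
    if grid[j] == value:                        # exact hit: no blending needed
        return j, j, 0.0
    i = j - 1
    return i, j, (value - grid[i]) / (grid[j] - grid[i])


def interpolate_hrir(hrirs, azimuth, elevation):
    """Bilinearly blend the four measured HRIRs around (azimuth, elevation).

    hrirs[a][e] (hypothetical layout) is a list of time samples for one ear
    at azimuth index a and elevation index e.
    """
    ai, aj, aw = bracket(CIPIC_AZIMUTHS, azimuth)
    ei, ej, ew = bracket(CIPIC_ELEVATIONS, elevation)
    corners = [(ai, ei, (1 - aw) * (1 - ew)), (aj, ei, aw * (1 - ew)),
               (ai, ej, (1 - aw) * ew), (aj, ej, aw * ew)]
    out = [0.0] * len(hrirs[ai][ei])
    for a, e, weight in corners:
        for t, sample in enumerate(hrirs[a][e]):
            out[t] += weight * sample
    return out
```

For example, an azimuth of 47.5° falls between the measured 45° and 55° directions and is blended 75%/25%.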

- 15. Head-Related Impulse Response (HRIR) • Storing the HRIR • Need one time series for each location: $\mathrm{hrir}_L(t;\theta,\phi)$ and $\mathrm{hrir}_R(t;\theta,\phi)$ • Total of $2 \times N_\theta \times N_\phi \times N_t$ samples, where $N_\theta$, $N_\phi$, $N_t$ are the number of samples for azimuth, elevation, and time, respectively
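To make the $2 \times N_\theta \times N_\phi \times N_t$ count concrete, the sketch below plugs in CIPIC-like dimensions. The values (25 azimuths, 50 elevations, 200-sample HRIRs) and the 8-byte float64 storage are assumptions for illustration.

```python
def hrir_sample_count(n_azimuth: int, n_elevation: int, n_time: int) -> int:
    """Total stored samples for both ears: 2 x N_theta x N_phi x N_t."""
    return 2 * n_azimuth * n_elevation * n_time


# Assumed CIPIC-like dimensions for one subject.
total = hrir_sample_count(25, 50, 200)
print(total, "samples,", total * 8 / 1e6, "MB as float64")
```

This prints `500000 samples, 4.0 MB as float64`: small enough to keep an entire per-subject table in memory.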

- 16. Head-Related Impulse Response (HRIR) Applying the HRIR: • Given a mono sound source $s(t)$ and its 3D position [Figure: source s(t) near the listener's L and R ears]

- 17. Head-Related Impulse Response (HRIR) Applying the HRIR: • Given a mono sound source $s(t)$ and its 3D position 1. Compute $(\theta,\phi)$ relative to the center of the listener’s head
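A sketch of step 1 under stated assumptions: head coordinates with x forward, y left, z up; azimuth measured counter-clockwise from straight ahead; elevation measured up from the horizontal plane; and only head yaw handled (a full implementation would invert the complete head rotation from the tracker). These vertical-polar angles are not the interaural-polar angles CIPIC uses, so a conversion would be needed before a CIPIC lookup.

```python
import math


def head_relative_angles(source, head_pos, head_yaw_deg):
    """Azimuth and elevation (degrees) of a source relative to the head.

    Assumed convention: x forward, y left, z up. Only yaw (rotation about z)
    is undone here; pitch and roll are ignored in this sketch.
    """
    dx, dy, dz = (s - h for s, h in zip(source, head_pos))
    yaw = math.radians(-head_yaw_deg)  # rotate by the inverse of the head yaw
    x = math.cos(yaw) * dx - math.sin(yaw) * dy
    y = math.sin(yaw) * dx + math.cos(yaw) * dy
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(dz, math.hypot(x, y)))
    return azimuth, elevation


# A source to the listener's left appears straight ahead after turning 90 deg left.
print(head_relative_angles((0, 1, 0), (0, 0, 0), 0))
print(head_relative_angles((0, 1, 0), (0, 0, 0), 90))
```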

- 18. Head-Related Impulse Response (HRIR) Applying the HRIR: • Given a mono sound source $s(t)$ and its 3D position 1. Compute $(\theta,\phi)$ relative to the center of the listener’s head 2. Look up the interpolated HRIRs $\mathrm{hrir}_L(t;\theta,\phi)$ and $\mathrm{hrir}_R(t;\theta,\phi)$ for the left and right ear at these angles [Figure: left and right HRIRs, amplitude vs. time]

- 19. Head-Related Impulse Response (HRIR) Applying the HRIR: • Given a mono sound source $s(t)$ and its 3D position 1. Compute $(\theta,\phi)$ relative to the center of the listener’s head 2. Look up the interpolated HRIRs $\mathrm{hrir}_L(t;\theta,\phi)$ and $\mathrm{hrir}_R(t;\theta,\phi)$ for the left and right ear at these angles 3. Convolve the signal with the HRIRs to get the sound at each ear: $s_L(t) = \mathrm{hrir}_L(t;\theta,\phi) * s(t)$, $s_R(t) = \mathrm{hrir}_R(t;\theta,\phi) * s(t)$
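Step 3 is a plain discrete convolution per ear. A minimal sketch with NumPy, assuming the signal and the HRIRs share the same sample rate:

```python
import numpy as np


def render_binaural(mono, hrir_left, hrir_right):
    """Convolve a mono signal with the left/right HRIRs for one direction.

    Returns full-length outputs (len(mono) + len(hrir) - 1 samples).
    """
    left = np.convolve(mono, hrir_left)    # s_L = hrir_L * s
    right = np.convolve(mono, hrir_right)  # s_R = hrir_R * s
    return left, right


# A unit impulse simply reproduces each HRIR at the corresponding ear.
left, right = render_binaural([1.0, 0.0, 0.0], [0.5, 0.25], [0.0, 1.0])
```

For a real-time engine with moving sources, the signal would typically be processed in blocks (e.g., overlap-add) with the HRIRs updated between blocks; that design is outside what this sketch shows.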

- 20. Head-Related Transfer Function (HRTF) • The HRTF is the Fourier transform of the HRIR (you’ll find the term HRTF more often than HRIR) • $s_L(t) = \mathrm{hrir}_L(t;\theta,\phi) * s(t) = \mathcal{F}^{-1}\{\mathrm{hrtf}_L(\omega_t;\theta,\phi) \cdot \mathcal{F}\{s(t)\}\}$ • $s_R(t) = \mathrm{hrir}_R(t;\theta,\phi) * s(t) = \mathcal{F}^{-1}\{\mathrm{hrtf}_R(\omega_t;\theta,\phi) \cdot \mathcal{F}\{s(t)\}\}$ [Figure: left and right HRIRs (amplitude vs. time) and HRTFs (amplitude vs. frequency)]

- 21. Head-Related Transfer Function (HRTF) • The HRTF is the Fourier transform of the HRIR (you’ll find the term HRTF more often than HRIR) • The HRTF is complex-conjugate symmetric (since the HRIR must be real-valued) • $s_L(t) = \mathrm{hrir}_L(t;\theta,\phi) * s(t) = \mathcal{F}^{-1}\{\mathrm{hrtf}_L(\omega_t;\theta,\phi) \cdot \mathcal{F}\{s(t)\}\}$ • $s_R(t) = \mathrm{hrir}_R(t;\theta,\phi) * s(t) = \mathcal{F}^{-1}\{\mathrm{hrtf}_R(\omega_t;\theta,\phi) \cdot \mathcal{F}\{s(t)\}\}$ [Figure: left and right HRTFs, amplitude vs. frequency]
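The frequency-domain form $\mathcal{F}^{-1}\{\mathrm{hrtf} \cdot \mathcal{F}\{s\}\}$ can be checked numerically against direct convolution. In this sketch, both signals are zero-padded to $N = \text{len}(s) + \text{len}(h) - 1$ so the DFT's circular convolution matches linear convolution. NumPy's `rfft` stores only non-negative frequencies, which is possible precisely because of the conjugate symmetry of a real HRIR's spectrum. Random data stands in for a measured HRIR.

```python
import numpy as np


def render_with_hrtf(mono, hrir):
    """One ear's output via F^-1{ hrtf * F{s} } (equivalent to convolution)."""
    n = len(mono) + len(hrir) - 1      # pad to avoid circular wrap-around
    hrtf = np.fft.rfft(hrir, n)        # real input: half spectrum suffices
    spectrum = np.fft.rfft(mono, n)
    return np.fft.irfft(hrtf * spectrum, n)


rng = np.random.default_rng(0)
s, h = rng.standard_normal(64), rng.standard_normal(16)  # stand-in signal and HRIR
assert np.allclose(render_with_hrtf(s, h), np.convolve(s, h))

# Conjugate symmetry of the full DFT of a real HRIR: H[k] == conj(H[N - k]).
H = np.fft.fft(h)
assert np.allclose(H[1:], np.conj(H[1:][::-1]))
```

For long signals, the FFT route costs roughly $O(N \log N)$ instead of $O(N \cdot N_t)$, which is one reason engines often work with HRTFs rather than HRIRs.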

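- 22. Spatial Sound of N Point Sound Sources • Superposition principle holds, so just sum the contributions of each source $s_i(t)$ at direction $(\theta_i,\phi_i)$: $s_L(t) = \sum_{i=1}^{N} \mathcal{F}^{-1}\{\mathrm{hrtf}_L(\omega_t;\theta_i,\phi_i) \cdot \mathcal{F}\{s_i(t)\}\}$, $s_R(t) = \sum_{i=1}^{N} \mathcal{F}^{-1}\{\mathrm{hrtf}_R(\omega_t;\theta_i,\phi_i) \cdot \mathcal{F}\{s_i(t)\}\}$ [Figure: sources s_1(t) at (θ_1, φ_1) and s_2(t) at (θ_2, φ_2) around the listener's L and R ears]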
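By superposition, a scene is rendered by summing each source's binaural contribution. A minimal sketch: the input format (a list of `(signal, hrir_left, hrir_right)` tuples, with each HRIR pair already looked up for that source's direction) is an assumption for illustration, and time-domain convolution is used in place of the equivalent FFT form.

```python
import numpy as np


def render_scene(sources):
    """Sum the binaural contributions of N point sources.

    sources: list of (mono_signal, hrir_left, hrir_right), each HRIR pair
    already chosen for that source's (theta_i, phi_i).
    """
    n = max(len(s) + max(len(hl), len(hr)) - 1 for s, hl, hr in sources)
    left, right = np.zeros(n), np.zeros(n)
    for s, hl, hr in sources:
        l, r = np.convolve(s, hl), np.convolve(s, hr)
        left[:len(l)] += l    # outputs may differ in length; add in place
        right[:len(r)] += r
    return left, right


left, right = render_scene([([1.0, 0.0], [1.0], [0.5]),
                            ([0.0, 1.0], [0.25, 0.25], [1.0])])
```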

- 23. Spatial Audio for VR • VR/AR requires us to rethink audio, especially spatial audio! • Because the user’s head rotates freely, traditional surround sound systems such as 5.1 or even 9.2 surround are not sufficient

- 24. Spatial Audio for VR Two primary approaches: 1. Real-time sound engine • Render 3D sound sources via HRTFs in real time, just as discussed in the previous slides • Used for games and synthetic virtual environments • Many libraries are available: FMOD, OpenAL, etc.

- 25. Spatial Audio for VR Two primary approaches: 2. Spatial sound recorded from real environments • Most widely used format now: Ambisonics • Simple microphones exist • Relatively easy mathematical model • Only need 4 channels for starters • Used in YouTube VR and many other platforms

- 26. Ambisonics • Idea: represent sound incident at a point (i.e., the listener) with some directional information $(\theta,\phi)$ • Using all angles is impractical, since it would need too many sound channels (one for each direction) • Some lower-order (in direction) components may be sufficient, so a directional basis representation comes to the rescue!

- 27. Ambisonics – Spherical Harmonics • Use spherical harmonics! • Orthogonal basis functions on the surface of a sphere, i.e., full-sphere surround sound • Think of them as the equivalent of a Fourier basis on a sphere

- 28. Ambisonics – Spherical Harmonics • Remember, these represent functions on a sphere’s surface [Figure: spherical harmonics of 0th, 1st, 2nd, and 3rd order; source: Wikipedia]

- 29. Ambisonics – Spherical Harmonics • 1st-order approximation uses 4 channels: W, X, Y, Z [Figure: the W, X, Y, Z directional patterns; source: Wikipedia]

- 30. Ambisonics – Recording • Can record 4-channel Ambisonics via a special microphone • Same format supported by YouTube VR and other platforms [Image: http://www.oktava-shop.com/]

- 31. Ambisonics – Rendered Sources • Can easily convert a point sound source, $S$, to the 4-channel Ambisonics representation • Given azimuth $\theta$ and elevation $\phi$, compute W, X, Y, Z as: $W = S \cdot \frac{1}{\sqrt{2}}$ (omnidirectional component, angle-independent), $X = S \cos\theta \cos\phi$ (“stereo in x”), $Y = S \sin\theta \cos\phi$ (“stereo in y”), $Z = S \sin\phi$ (“stereo in z”)
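The encoding formulas translate directly to code. This sketch applies them per sample, using the traditional weighting $W = S/\sqrt{2}$ from the slide, with azimuth measured counter-clockwise from the front (x forward, y left, z up, an assumed convention). Other conventions, such as AmbiX (ACN channel order, SN3D normalization), order and scale the channels differently, so check which one a given player expects.

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample S into first-order (W, X, Y, Z).

    Traditional weighting: W = S / sqrt(2); X, Y, Z follow the source direction.
    """
    theta, phi = math.radians(azimuth_deg), math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)                    # omnidirectional
    x = sample * math.cos(theta) * math.cos(phi)   # front/back
    y = sample * math.sin(theta) * math.cos(phi)   # left/right
    z = sample * math.sin(phi)                     # up/down
    return w, x, y, z


# A source straight ahead only excites W and X.
print(encode_first_order(1.0, 0.0, 0.0))
```

To encode a whole signal, apply this to every sample (or multiply the signal array by the four direction-dependent gains), recomputing the gains whenever the source moves.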

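- 32. Ambisonics – Playing it Back • Easiest way to render Ambisonics: convert the W, X, Y, Z channels into 4 virtual speaker positions • For a regularly spaced square setup (left-front LF, left-back LB, right-front RF, right-back RB), this results in: $LF = \frac{2W + X + Y}{\sqrt{8}}$, $LB = \frac{2W - X + Y}{\sqrt{8}}$, $RF = \frac{2W + X - Y}{\sqrt{8}}$, $RB = \frac{2W - X - Y}{\sqrt{8}}$ [Figure: square speaker layout LF, LB, RF, RB around the listener's L and R ears]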
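The square-layout decode maps horizontal B-format to four speaker feeds. This sketch assumes the speakers sit at azimuths 45° (LF), 135° (LB), −45° (RF), and −135° (RB), with x forward and y left. With $W = S/\sqrt{2}$, each feed $(2W \pm X \pm Y)/\sqrt{8}$ works out to a virtual cardioid microphone aimed at its speaker. Z is unused because the layout is horizontal.

```python
import math


def decode_square(w, x, y):
    """Decode horizontal first-order B-format to 4 virtual speakers.

    Assumed layout: LF at 45, LB at 135, RF at -45, RB at -135 degrees
    (x forward, y left). Each feed acts as a cardioid aimed at its speaker.
    """
    norm = math.sqrt(8.0)
    return {
        "LF": (2 * w + x + y) / norm,
        "LB": (2 * w - x + y) / norm,
        "RF": (2 * w + x - y) / norm,
        "RB": (2 * w - x - y) / norm,
    }


# A unit source at 45 degrees (front-left): W = 1/sqrt(2), X = Y = cos(45 deg).
feeds = decode_square(1 / math.sqrt(2), math.cos(math.radians(45)),
                      math.cos(math.radians(45)))
```

Here the front-left speaker gets the full signal, the two adjacent speakers get half, and the opposite speaker gets nothing. For headphone playback in VR, each virtual speaker feed could then be convolved with the HRIR pair for that speaker's direction and summed, as with the N-source case.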

- 33. Ambisonics – Omnitone • JavaScript-based first-order Ambisonic decoder [Google, https://github.com/GoogleChrome/omnitone]

- 34. References and Further Reading • Google’s take on spatial audio: https://developers.google.com/vr/concepts/spatial-audio HRTF: • Algazi, Duda, Thompson, Avendano, “The CIPIC HRTF Database,” Proc. 2001 IEEE Workshop on Applications of Signal Processing to Audio and Electroacoustics • Download the CIPIC HRTF database here: http://interface.cipic.ucdavis.edu/sound/hrtf.html Resources by Google: • https://github.com/GoogleChrome/omnitone • https://developers.google.com/vr/concepts/spatial-audio • https://opensource.googleblog.com/2016/07/omnitone-spatial-audio-on-web.html • http://googlechrome.github.io/omnitone/#home • https://github.com/google/spatial-media/

- 35. Demo