Building an SDN solution for the deployment of web application stacks in Docker

- 1. Building an SDN solution for the deployment of web application stacks in Docker: Technical Considerations
  - Jorge Juan Mendoza Pons, Cloud Software Engineering & Operations Direction, March 2016

- 2. Summary
  - Significant assumptions
  - Docker networking approach, modes and rules
  - Building a network driver plugin as a remote driver for Docker’s libnetwork CNM
  - The proposed solution: wSDN
  - Show me the code: Docker overlay sources (as a sample)
  - Why does Docker need a better networking/SDN solution? Use cases and requirements
  - Technical considerations of the proposed solution
  - How to (simply) test the solution’s performance
  - Appendixes

- 3. Significant assumptions
  - The SDN solution is based on a single container format and ecosystem (Docker).
  - The application scope is the deployment of web application "stacks" on such a system, including their delivery pipeline. Industrial, IoT, real-time video and trading scenarios are out of scope.

- 4. Docker networking approach and default rules
  - Whether a container can talk to the outside world is governed by two factors:
    - whether the host machine forwards IP packets;
    - whether the host’s iptables rules allow this particular connection.
  - Whether two containers can communicate is governed, at the operating-system level, by two factors:
    - Does the network topology connect the containers’ network interfaces? By default, Docker attaches all containers to a single docker0 bridge, which provides a path for packets to travel between them.
    - Do the iptables rules allow this particular connection? Unless the daemon is started with --iptables=false, Docker adds a rule to the FORWARD chain: a blanket ACCEPT if you keep the default --icc=true, or DROP if --icc=false.
  - Docker networks are meant to segregate services so that the only things on a network are things that need to talk to each other. We end up with many networks, each holding a changing number of containers. Networks are isolated from each other: if two containers share no network, they cannot talk.

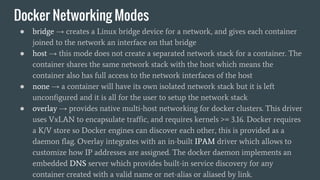
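
The reachability rules of the previous slide can be summarized as a small decision model. This is a minimal sketch, not Docker code: the `Container` type and the `canTalk` helper are hypothetical, and the model ignores custom iptables rules, published ports and per-network ICC options. The daemon-wide icc flag is modelled only for the default "bridge" network.

```go
package main

import "fmt"

// Container is a hypothetical, simplified view of a container:
// its name and the set of Docker networks it is attached to.
type Container struct {
	Name     string
	Networks map[string]bool
}

// canTalk models the two OS-level factors: a shared network (topology)
// and the FORWARD-chain policy Docker installs for the default bridge,
// which is ACCEPT when icc is true and DROP when icc is false.
func canTalk(a, b Container, icc bool) bool {
	for n := range a.Networks {
		if !b.Networks[n] {
			continue // not a shared network: no path between the interfaces
		}
		if n != "bridge" || icc {
			return true // shared network whose policy allows the traffic
		}
	}
	return false
}

func main() {
	web := Container{"web", map[string]bool{"frontend": true}}
	api := Container{"api", map[string]bool{"frontend": true, "backend": true}}
	db := Container{"db", map[string]bool{"backend": true}}
	fmt.Println(canTalk(web, api, true)) // true: both on "frontend"
	fmt.Println(canTalk(web, db, true))  // false: no shared network
}
```

Note how "api" acts as the only bridge between the two tiers: it is the one container attached to both networks.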

- 5. Docker networking modes
  - bridge → creates a Linux bridge device for a network and gives each container joined to the network an interface on that bridge.
  - host → does not create a separate network stack for the container. The container shares the host’s network stack, which means it also has full access to the host’s network interfaces.
  - none → the container gets its own isolated network stack, but it is left unconfigured; setting it up is entirely up to the user.
  - overlay → provides native multi-host networking for Docker clusters.
    - The driver uses VXLAN to encapsulate traffic and requires a kernel >= 3.16.
    - Docker engines discover each other through a key/value store, which is configured with a daemon flag (--cluster-store).
    - Overlay integrates with a built-in IPAM driver, which lets you customize how IP addresses are assigned.
    - The Docker daemon runs an embedded DNS server that provides built-in service discovery on user-defined networks for any container created with a valid name or --net-alias, or aliased by a link.
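
Because the overlay driver relies on VXLAN, it helps to see exactly what the encapsulation adds to each frame. Below is a sketch of the 8-byte VXLAN header as laid out in RFC 7348: a flags byte with the I bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI) and a final reserved byte. It is not taken from Docker’s overlay driver; `encodeVXLAN` and `decodeVNI` are illustrative names.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

const (
	vxlanHeaderLen = 8
	maxVNI         = 1<<24 - 1 // the VNI is a 24-bit field
)

// encodeVXLAN builds a VXLAN header carrying the given VNI.
func encodeVXLAN(vni uint32) ([]byte, error) {
	if vni > maxVNI {
		return nil, errors.New("VNI does not fit in 24 bits")
	}
	h := make([]byte, vxlanHeaderLen)
	h[0] = 0x08 // flags: only the I bit set, meaning the VNI field is valid
	// Bytes 4-6 carry the VNI and byte 7 is reserved. Writing vni<<8 as a
	// big-endian uint32 into bytes 4-7 places the VNI and leaves byte 7 zero.
	binary.BigEndian.PutUint32(h[4:], vni<<8)
	return h, nil
}

// decodeVNI extracts the VNI from a VXLAN header.
func decodeVNI(h []byte) (uint32, error) {
	if len(h) < vxlanHeaderLen || h[0]&0x08 == 0 {
		return 0, errors.New("not a valid VXLAN header")
	}
	return binary.BigEndian.Uint32(h[4:8]) >> 8, nil
}

func main() {
	h, _ := encodeVXLAN(256)
	fmt.Printf("% x\n", h) // 08 00 00 00 00 01 00 00
	vni, _ := decodeVNI(h)
	fmt.Println(vni) // 256
}
```

The 24-bit VNI is what lets an overlay keep roughly 16 million logical networks apart on the same physical underlay.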

- 6. Docker networking with libnetwork
  - libnetwork uses a driver/plugin model to hide the complexity of driver implementations behind a simple and consistent network model exposed to users (the Container Network Model, CNM).
  - CNM objects and attributes:
    - NetworkController → entry point that binds built-in or remote drivers to networks.
    - Driver → implementation that satisfies specific use cases and deployment scenarios.
    - Sandbox → contains the configuration of a container’s network stack, including its interfaces, routing table and DNS settings. Local (container) scope.
    - Endpoint → represents a service endpoint: it provides connectivity for the services a container exposes on a network. Global scope within a cluster.
    - Network → provides connectivity between a group of endpoints that belong to the same network (single or multiple hosts) and isolates them from the rest; the driver does the actual work of providing connectivity and isolation. Global scope within a cluster.
    - Cluster → group of Docker hosts participating in multi-host networking.
    - Attributes: options and labels.
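
To make the relationships between the CNM objects concrete, here is a toy model: a Sandbox holds several Endpoints, and each Endpoint belongs to exactly one Network. This is a hypothetical sketch; the types, fields and the `Join` method (including its "one endpoint per network" rule) are simplifications and do not reproduce libnetwork’s actual API.

```go
package main

import (
	"errors"
	"fmt"
)

// Network groups endpoints that can reach each other (global scope).
type Network struct {
	ID     string
	Driver string // e.g. "bridge", "overlay" or a remote driver such as wSDN
}

// Endpoint connects a sandbox to exactly one network.
type Endpoint struct {
	ID      string
	Network *Network
}

// Sandbox is a container's network stack (local scope):
// one interface per joined endpoint.
type Sandbox struct {
	ContainerID string
	Endpoints   []*Endpoint
}

// Join attaches an endpoint; in this toy model a sandbox may not
// join the same network twice.
func (s *Sandbox) Join(ep *Endpoint) error {
	for _, e := range s.Endpoints {
		if e.Network.ID == ep.Network.ID {
			return errors.New("sandbox already joined network " + ep.Network.ID)
		}
	}
	s.Endpoints = append(s.Endpoints, ep)
	return nil
}

func main() {
	front := &Network{ID: "frontend", Driver: "bridge"}
	back := &Network{ID: "backend", Driver: "overlay"}
	sb := &Sandbox{ContainerID: "api"}
	_ = sb.Join(&Endpoint{ID: "ep1", Network: front})
	_ = sb.Join(&Endpoint{ID: "ep2", Network: back})
	fmt.Println(len(sb.Endpoints)) // 2: one interface per joined network
}
```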

- 7. Proposed solution: wSDN
  - Develop a wSDN network plugin by implementing the Docker plugin API and the network plugin protocol (network driver plugins are activated the same way as other plugins and use the same kind of protocol).
  - The wSDN network driver plugin is supported through libnetwork, which includes a proxy that forwards driver operations to a remote process, called a remote driver.
  - Go interface philosophy → define small interfaces, ideally one per function, to encourage composability and to compose different solutions for different needs.
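
The "one interface per function" idea maps onto Go’s habit of small, single-method interfaces composed by embedding. The interface and type names below (`NetworkCreator`, `EndpointCreator`, `IPAllocator`, `NetworkDriver`, `wsdnDriver`) are hypothetical and are not libnetwork’s `driverapi` or `ipamapi` definitions.

```go
package main

import (
	"fmt"
	"net"
)

// Small, single-purpose interfaces (hypothetical names).
type NetworkCreator interface {
	CreateNetwork(id string) error
}

type EndpointCreator interface {
	CreateEndpoint(networkID, endpointID string) error
}

// IPAllocator stands alone: an IPAM-only component implements just this.
type IPAllocator interface {
	RequestAddress(poolID string) (net.IP, error)
}

// NetworkDriver is composed from the smaller interfaces by embedding.
type NetworkDriver interface {
	NetworkCreator
	EndpointCreator
}

// wsdnDriver is an in-memory stand-in for a real driver.
type wsdnDriver struct {
	networks map[string][]string // network ID -> endpoint IDs
}

func (d *wsdnDriver) CreateNetwork(id string) error {
	if _, ok := d.networks[id]; ok {
		return fmt.Errorf("network %q already exists", id)
	}
	d.networks[id] = nil
	return nil
}

func (d *wsdnDriver) CreateEndpoint(networkID, endpointID string) error {
	if _, ok := d.networks[networkID]; !ok {
		return fmt.Errorf("network %q not found", networkID)
	}
	d.networks[networkID] = append(d.networks[networkID], endpointID)
	return nil
}

// Compile-time check that wsdnDriver satisfies the composed interface.
var _ NetworkDriver = (*wsdnDriver)(nil)

func main() {
	var d NetworkDriver = &wsdnDriver{networks: map[string][]string{}}
	fmt.Println(d.CreateNetwork("app1"))         // <nil>
	fmt.Println(d.CreateEndpoint("app1", "ep1")) // <nil>
	fmt.Println(d.CreateEndpoint("app2", "ep2")) // network "app2" not found
}
```

Callers that only create networks can depend on `NetworkCreator` alone, which keeps test doubles and alternative implementations small.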

- 8. Proposed solution: wSDN
  - 1. wSDN implements both a libnetwork remote network driver and an IPAM driver.
  - 2. wSDN binds one end of a veth pair to a network interface on the host, e.g. a Linux bridge or an Open vSwitch datapath.
  - 3. libnetwork discovers wSDN through the plugin discovery mechanism before the first request is made.
    - a. During this process libnetwork sends an HTTP POST to /Plugin.Activate and examines the driver types the plugin reports, which for wSDN are "NetworkDriver" and "IpamDriver".
    - b. libnetwork also calls the following two API endpoints:
      - i. /NetworkDriver.GetCapabilities to obtain wSDN’s capability (scope), which defaults to "local";
      - ii. /IpamDriver.GetDefaultAddressSpaces to get the default address spaces used for IPAM.
  - 4. libnetwork registers wSDN as a remote driver.
  - 5. The user makes requests against libnetwork with the wSDN driver specifier (-d wSDN and --ipam-driver=wSDN from the Docker CLI).
  - 6. libnetwork stores the returned information in its key/value datastore backend (abstracted by libkv).
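
The handshake in steps 3 and 4 is plain JSON over HTTP POST. Below is a minimal sketch using only Go’s standard library, assuming wSDN answers just these three calls. The response field names (`Implements`, `Scope`, `LocalDefaultAddressSpace`, `GlobalDefaultAddressSpace`) follow the Docker plugin and libnetwork remote API documentation as we understand it; the address-space names are hypothetical. A real plugin would listen on a Unix socket in the plugin discovery directory (e.g. /run/docker/plugins/) and implement the remaining endpoints.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// reply writes v as a JSON response body.
func reply(w http.ResponseWriter, v interface{}) {
	w.Header().Set("Content-Type", "application/json")
	_ = json.NewEncoder(w).Encode(v)
}

// post wraps a responder so that only POST requests (as libnetwork sends) are served.
func post(fn func(w http.ResponseWriter)) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodPost {
			http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
			return
		}
		fn(w)
	}
}

// newHandler routes the three handshake calls described in the slide.
func newHandler() http.Handler {
	mux := http.NewServeMux()
	mux.Handle("/Plugin.Activate", post(func(w http.ResponseWriter) {
		reply(w, map[string][]string{"Implements": {"NetworkDriver", "IpamDriver"}})
	}))
	mux.Handle("/NetworkDriver.GetCapabilities", post(func(w http.ResponseWriter) {
		reply(w, map[string]string{"Scope": "local"})
	}))
	mux.Handle("/IpamDriver.GetDefaultAddressSpaces", post(func(w http.ResponseWriter) {
		// Hypothetical address-space names.
		reply(w, map[string]string{
			"LocalDefaultAddressSpace":  "wsdn-local",
			"GlobalDefaultAddressSpace": "wsdn-global",
		})
	}))
	return mux
}

// call simulates libnetwork POSTing to one plugin endpoint and decodes the reply.
func call(h http.Handler, path string) map[string]interface{} {
	req := httptest.NewRequest(http.MethodPost, path, strings.NewReader("{}"))
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	var out map[string]interface{}
	_ = json.Unmarshal(rec.Body.Bytes(), &out)
	return out
}

func main() {
	h := newHandler()
	fmt.Println(call(h, "/Plugin.Activate"))
	fmt.Println(call(h, "/NetworkDriver.GetCapabilities"))
	fmt.Println(call(h, "/IpamDriver.GetDefaultAddressSpaces"))
}
```

Using `httptest` keeps the sketch testable without root privileges or a running Docker daemon; swapping the recorder for a `net.Listen("unix", ...)` listener would be the step towards a real plugin.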

- 9. Show me the code
  - Netlink library
  - Driver interface type
  - Remote driver API
  - Ipam interface type
  - Simple DNS service
  - Overlay driver

- 10. What’s still missing in Docker networking (v1.10.2)
  - Secure endpoints (otherwise, make sure the channel being overlaid is secured)
  - Dynamically applied configuration (IPAM, subnet restrictions)
  - Support for larger MTUs (the default libnetwork driver currently doesn’t support them) and dynamic path MTU (PMTU) discovery
  - Multiple pluggable drivers participating in different network management functions (multi-host IPAM, SDN, DNS, etc.)
  - A DHCP / dynamic multi-host IPAM driver
  - Multicast networking support
  - Tighter integration with the main architectural approach of microservices: service discovery and load balancing (e.g. UCP clusters)
  - Critical runtime features for multi-tenant environments: multi-cloud and cross-DC operation, partition tolerance with eventual consistency, and partially connected network support (e.g. Docker Cloud and on-premises CaaS)

- 11. The impact of Docker on the SDN field
  - Ephemeral nature: Docker containers are ephemeral by design. This causes several potential issues, such as keeping firewall (or load balancer) configuration up to date when IP address management is difficult, or connecting to services that might disappear at any moment.
  - Density factor: Docker brings densities that will change how applications behave in a network environment, and the subscription ratio will change considerably. There may be 2,000 containers on a single physical server in an environment with 1,000+ hosts.
  - Data gravity: data should be treated as an object that attracts more objects to it; the more data, the more services and applications it attracts. As more data inevitably accumulates, compute resources will need to be faster and invoked by applications in many more ways. Compute will swarm or be streamed, as we are already seeing with AWS Lambda and Google Cloud Functions.
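
To put the density figures in perspective for IPAM: 2,000 containers per host across 1,000 hosts is 2,000,000 addresses, far more than a single /16 can hold. The helper below computes the smallest IPv4 subnet (longest prefix) for a given number of hosts, reserving the network and broadcast addresses as in a conventional subnet. It is an illustrative calculation, not part of wSDN; `prefixFor` is a hypothetical name.

```go
package main

import "fmt"

// prefixFor returns the longest IPv4 prefix length whose subnet can hold
// hosts usable addresses (network and broadcast addresses are reserved).
func prefixFor(hosts int) (int, error) {
	if hosts < 1 {
		return 0, fmt.Errorf("need at least one host, got %d", hosts)
	}
	needed := uint64(hosts) + 2
	for bits := 0; bits <= 32; bits++ {
		if uint64(1)<<uint(bits) >= needed {
			return 32 - bits, nil
		}
	}
	return 0, fmt.Errorf("%d hosts do not fit in a single IPv4 subnet", hosts)
}

func main() {
	endpoints := 2000 * 1000 // containers per host * hosts, from the slide
	p, _ := prefixFor(endpoints)
	fmt.Printf("%d endpoints need at least a /%d\n", endpoints, p) // /11
	q, _ := prefixFor(65534)
	fmt.Printf("65534 hosts fit in a /%d\n", q) // /16
}
```

A flat /11 per environment is impractical for a single broadcast domain, which is one reason the use case below splits addressing into per-application subnets.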

- 12. wSDN main use case: delivery pipeline of web app stacks in a multi-tenant clustered environment
  - One LB IP per application, one NAT subnet for the containers of each application, and one application router per application subnet
  - IPAM (both static and DHCP-based, for subnet/bridge/router/LB creation) across clusters and/or DCs. Autoscaling, downscaling and destroy events should trigger calls that reconfigure the application’s LB
  - L3 connectivity between the gateways of two clusters and/or between routers of the same tenant across DCs
  - Multi-tenant networking: tenant isolation and identification across DCs
  - Coordinated, synchronized subnet / LB IP (de)allocation across DCs
  - Near-flawless content delivery (e.g. Netflix’s intelligent edge networks, which cache rich content closer to the user)
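
The "one NAT subnet per application" requirement implies an allocator that hands out non-overlapping subnets from a pool and reclaims them when an application is destroyed. Below is a minimal single-process sketch, assuming an IPv4 pool carved into fixed-size subnets; cross-DC coordination (the synchronization bullet) is out of scope and would need a shared, consistent store. `SubnetPool`, `Allocate` and `Release` are hypothetical names.

```go
package main

import (
	"encoding/binary"
	"fmt"
	"net"
)

// SubnetPool hands out fixed-size IPv4 subnets from a base CIDR.
type SubnetPool struct {
	base     uint32         // first address of the pool
	poolBits int            // prefix length of the pool, e.g. 16
	subBits  int            // prefix length of each app subnet, e.g. 24
	byApp    map[string]int // app ID -> subnet index
	used     map[int]bool   // subnet index -> allocated
}

func NewSubnetPool(cidr string, subBits int) (*SubnetPool, error) {
	_, ipnet, err := net.ParseCIDR(cidr)
	if err != nil {
		return nil, err
	}
	ip4 := ipnet.IP.To4()
	if ip4 == nil {
		return nil, fmt.Errorf("%s is not an IPv4 CIDR", cidr)
	}
	poolBits, _ := ipnet.Mask.Size()
	if subBits < poolBits || subBits > 32 {
		return nil, fmt.Errorf("invalid subnet size /%d for pool %s", subBits, cidr)
	}
	return &SubnetPool{
		base: binary.BigEndian.Uint32(ip4), poolBits: poolBits, subBits: subBits,
		byApp: map[string]int{}, used: map[int]bool{},
	}, nil
}

// Allocate returns the app's subnet, reusing an existing allocation if any.
func (p *SubnetPool) Allocate(app string) (string, error) {
	if i, ok := p.byApp[app]; ok {
		return p.cidr(i), nil
	}
	count := 1 << uint(p.subBits-p.poolBits)
	for i := 0; i < count; i++ {
		if !p.used[i] {
			p.used[i] = true
			p.byApp[app] = i
			return p.cidr(i), nil
		}
	}
	return "", fmt.Errorf("pool exhausted")
}

// Release frees the app's subnet, e.g. when the application is destroyed.
func (p *SubnetPool) Release(app string) {
	if i, ok := p.byApp[app]; ok {
		delete(p.used, i)
		delete(p.byApp, app)
	}
}

// cidr renders subnet number i as "a.b.c.d/len".
func (p *SubnetPool) cidr(i int) string {
	start := p.base + uint32(i)<<uint(32-p.subBits)
	ip := make(net.IP, 4)
	binary.BigEndian.PutUint32(ip, start)
	return fmt.Sprintf("%s/%d", ip, p.subBits)
}

func main() {
	pool, _ := NewSubnetPool("10.10.0.0/16", 24)
	a, _ := pool.Allocate("shop")
	b, _ := pool.Allocate("blog")
	pool.Release("shop")
	c, _ := pool.Allocate("wiki") // reuses the released subnet
	fmt.Println(a, b, c)          // 10.10.0.0/24 10.10.1.0/24 10.10.0.0/24
}
```

In wSDN terms, `Allocate` would run on the "create application" event and `Release` on the "destroy" event, alongside the LB reconfiguration calls.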

- 13. General approach of an SDN solution based on Docker
  - Network model
  - Protocol support
  - Container / group isolation
  - Container discovery / name service
  - Distributed storage
  - Encrypted channel
  - Partially connected network support
  - Separate vNIC per container
  - IP overlap and container subnet overlap support
  - Advanced networking services (e.g. stateful L4-L7 services)

- 14. How to simply test (the performance of) our SDN solution
  - Test on Amazon Web Services with current instance types (e.g. m4), which support jumbo frames with an MTU of 9001 instead of the traditional default of 1500.
  - Use both iperf3 and qperf to measure performance. qperf is a very simple tool that runs short tests; I use iperf3 for a longer run with more parallel connections (a higher number of parallel connections adds overhead).
  - The default libnetwork driver currently doesn’t support larger MTU sizes.
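
The MTU point matters for an overlay because VXLAN encapsulation consumes part of every packet. Assuming an IPv4 underlay without IP options or VLAN tags, the underlay packet must carry the outer IPv4 header (20 bytes), UDP (8), VXLAN (8) and the encapsulated inner Ethernet header (14): 50 bytes in total, so a container interface on the overlay must use an MTU 50 bytes smaller than the underlay’s. The helper name `innerMTU` is illustrative.

```go
package main

import "fmt"

// Per-packet VXLAN encapsulation cost on an IPv4 underlay (no options, no VLAN tag).
const (
	outerIPv4     = 20
	outerUDP      = 8
	vxlanHdr      = 8
	innerEthernet = 14
	vxlanOverhead = outerIPv4 + outerUDP + vxlanHdr + innerEthernet // 50 bytes
)

// innerMTU returns the largest MTU a container interface on a VXLAN overlay
// can use without causing fragmentation on an underlay with the given MTU.
func innerMTU(underlayMTU int) int {
	return underlayMTU - vxlanOverhead
}

func main() {
	for _, mtu := range []int{1500, 9001} {
		fmt.Printf("underlay %d -> overlay %d\n", mtu, innerMTU(mtu))
	}
	// underlay 1500 -> overlay 1450
	// underlay 9001 -> overlay 8951
}
```

The fixed 50 bytes are about 3.3% of a 1500-byte packet but only about 0.6% of a 9001-byte one, which is why jumbo frames are worth testing with iperf3 and qperf.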

- 15. Appendix I. Linux kernel features underlying Docker
  - namespaces → put processes into separate namespaces, so that processes in one namespace have no visibility of processes in other namespaces. This provides a form of lightweight virtualization and resource isolation. Docker uses several namespace types to build isolated containers: pid, net, ipc, mnt and uts.
  - control groups (cgroups) → a Linux kernel feature that provides resource management and resource accounting for groups of processes. Docker uses cgroups to share available hardware resources fairly between containers and to limit them when necessary.
  - union file system (UnionFS) → a file system that works by stacking layers; it provides the building blocks for containers.
  - container format → a wrapper that combines all of the above technologies. libcontainer is the default container format; Docker also supported LXC (Linux Containers) through an exec driver that was deprecated in v1.8 and removed in v1.10.

- 16. Appendix II. Container ecosystem map

- 17. Appendix II. Docker ecosystem features

- 18. Appendix III. Current Docker limitations: containers promise rapid scalability, flexibility and ease of use, but they are not right for every workload.
  - 1. Unstable ecosystem (how much of your infrastructure are you going to virtualize?)
  - 2. Some applications simply need to be monolithic
  - 3. Dependencies (underlying OS kernel, libraries, binaries)
  - 4. Security and vulnerability management (compliance)
  - 5. Weaker isolation (dependencies plus a deep level of authorization)
  - 6. Potential for sprawl
  - 7. Complicated data management
  - 8. Repetitive troubleshooting
  - 9. Limited tools (monitoring, management)

- 19. Appendix IV. Common standard SDN use cases
  - 1. Automating network provisioning for an internal private cloud or DC
  - 2. Simplifying operations by centralizing policy and configuration management (e.g. support for network "slices")
  - 3. Increasing WAN utilization through traffic steering
  - 4. Detecting and mitigating security threats
  - 5. Aggregating data center network tap traffic
  - 6. Programmability: the ability to use open APIs to link applications to the network. Current networks lack a common set of APIs, which makes it very difficult to program applications directly against network resources

- 20. Appendix IV (cont.). Common standard SDN use cases
  - 1. Holistic network management: SDN lets you plan your network at the software level and make it as complex as you want without re-cabling at the hardware level.
  - 2. Security: SDN usually provides a more granular security model than traditional hardware/wired security (e.g. NIC validation).
  - 3. Centralized provisioning: it is almost trivial to set up completely isolated networks over existing copper.
  - 4. Efficiency: SDN can reduce downtime because everything is done at the software level; you never have to touch the cables.
  - 5. Cost reduction: automated SDN network provisioning helps lower operating expenses by reducing the required human effort.
