Building a Unified Data Pipeline in Spark / Unifying Big Data Pipelines with Apache Spark

- 1. Building a Unified Data Pipeline in Spark. Aaron Davidson. Slides adapted from Matei Zaharia. spark.apache.org

- 2. What is Apache Spark? A fast and general cluster computing system, interoperable with Hadoop. Improves efficiency through in-memory computing primitives and general computation graphs. Improves usability through rich APIs in Java, Scala, and Python, and an interactive shell. Up to 100× faster (2-10× on disk), with 2-5× less code.

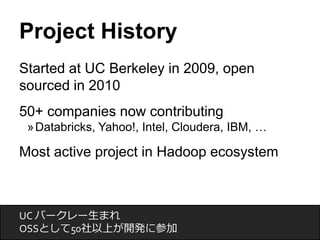

- 3. Project History. Started at UC Berkeley in 2009 and open sourced in 2010. 50+ companies now contributing, including Databricks, Yahoo!, Intel, Cloudera, IBM, … Most active project in the Hadoop ecosystem.

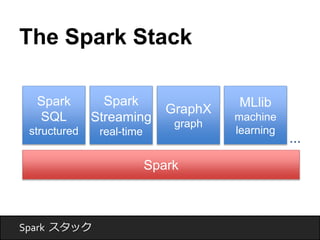

- 4. A General Stack. Spark at the core, with Spark Streaming (real-time), Spark SQL (structured), GraphX (graph), MLlib (machine learning), and more built on top.

- 5. This Talk. Spark introduction & use cases; modules built on Spark; the power of unification; demo. (Current section: Spark introduction and use cases.)

- 6. Why a New Programming Model? MapReduce greatly simplified big data analysis. But once started, users wanted more: more complex, multi-pass analytics (e.g. ML, graph); more interactive ad-hoc queries; more real-time stream processing. All 3 need faster data sharing in parallel apps.

- 7. Data Sharing in MapReduce. [Diagram: iterative jobs (iter. 1, iter. 2, ...) read from HDFS and write back to HDFS between iterations; interactive queries (query 1, query 2, query 3, ...) each read the input from HDFS to produce result 1, result 2, result 3.] Slow due to replication, serialization, and disk IO.

- 8. What We’d Like. [Diagram: iterations (iter. 1, iter. 2, ...) and queries (query 1, query 2, query 3, ...) share data through distributed memory after one-time processing of the input.] 10-100× faster than network and disk.

- 9. Spark Model. Write programs in terms of transformations on distributed datasets. Resilient Distributed Datasets (RDDs): collections of objects that can be stored in memory or on disk across a cluster; built via parallel transformations (map, filter, …); automatically rebuilt on failure.

- 10. Example: Log Mining. Load error messages from a log into memory, then interactively search for various patterns. Base RDD: `lines = spark.textFile("hdfs://...")`. Transformed RDD: `errors = lines.filter(lambda s: s.startswith("ERROR"))`, then `messages = errors.map(lambda s: s.split('\t')[2])` and `messages.cache()`. Actions: `messages.filter(lambda s: "foo" in s).count()`, `messages.filter(lambda s: "bar" in s).count()`, ... [Diagram: the driver sends tasks to three workers, each holding one block of the file (Block 1-3) and one cache (Cache 1-3), and receives results.] Result: full-text search of Wikipedia in <1 sec (vs 20 sec for on-disk data). Result: scaled to 1 TB of data in 5-7 sec (vs 170 sec for on-disk data).
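
To make the data flow on this slide concrete, here is a plain-Python sketch of the same filter, map, and search steps on a tiny in-memory list. It does not use Spark. The sample log lines, the tab-separated layout with the message in the third field, and the helper name `count_matching` are assumptions made for illustration only.

```python
# Plain-Python stand-in for the slide's RDD pipeline (no Spark involved).
# Assumed log layout: LEVEL<TAB>DATE<TAB>MESSAGE (hypothetical sample data).
lines = [
    "ERROR\t2014-09-08\tfoo failed to start",
    "INFO\t2014-09-08\tfoo started",
    "ERROR\t2014-09-08\tbar timed out",
    "ERROR\t2014-09-09\tfoo and bar both failed",
]

# filter: keep only the error lines (the slide's `errors`).
errors = [s for s in lines if s.startswith("ERROR")]

# map: extract the third tab-separated field (the slide's `messages`).
# Materializing the list once plays the role of messages.cache().
messages = [s.split("\t")[2] for s in errors]


def count_matching(pattern):
    """Count in-memory messages containing `pattern`, like filter(...).count()."""
    return sum(1 for m in messages if pattern in m)


foo_count = count_matching("foo")
bar_count = count_matching("bar")
print(foo_count, bar_count)
```

Each call to `count_matching` scans only the already-extracted messages, which is the point of caching on the slide: the expensive load and parse happen once, and every later query reuses the in-memory result.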

- 11. Fault Tolerance. RDDs track lineage info to rebuild lost data. `file.map(lambda rec: (rec.type, 1)).reduceByKey(lambda x, y: x + y).filter(lambda pair: pair[1] > 10)` [Diagram: input file -> map -> reduce -> filter.]
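
The idea of rebuilding lost data from lineage can be sketched in plain Python. The toy class below is not Spark's implementation: `LineageDataset` and its methods are hypothetical names, and everything runs in a single process. Each dataset records only its parent and the transformation that produced it, so a lost in-memory copy can be recomputed on demand.

```python
class LineageDataset:
    """Toy dataset that remembers how it was derived (hypothetical, not Spark)."""

    def __init__(self, source=None, parent=None, transform=None):
        self.source = source        # set only on the root (the "input file")
        self.parent = parent        # dataset this one was derived from
        self.transform = transform  # function applied to the parent's records
        self.cached = None          # in-memory copy; may be lost at any time

    def map(self, fn):
        return LineageDataset(parent=self,
                              transform=lambda recs: [fn(r) for r in recs])

    def filter(self, pred):
        return LineageDataset(parent=self,
                              transform=lambda recs: [r for r in recs if pred(r)])

    def reduce_by_key(self, fn):
        def reduce_all(recs):
            totals = {}
            for key, value in recs:
                totals[key] = fn(totals[key], value) if key in totals else value
            return sorted(totals.items())
        return LineageDataset(parent=self, transform=reduce_all)

    def collect(self):
        if self.cached is None:
            # Rebuild from lineage: get the parent's records, reapply the transform.
            if self.parent is None:
                self.cached = list(self.source)
            else:
                self.cached = self.transform(self.parent.collect())
        return self.cached


records = ["a", "b", "a", "a", "b", "c"]  # hypothetical input
counts = (LineageDataset(source=records)
          .map(lambda rec: (rec, 1))
          .reduce_by_key(lambda x, y: x + y)
          .filter(lambda pair: pair[1] > 1))

first = list(counts.collect())
counts.cached = None          # simulate losing the filtered data
counts.parent.cached = None   # ...and the reduced data it came from
rebuilt = counts.collect()    # recomputed from the recorded lineage
```

Nothing is replicated here: recovery costs recomputation instead of extra copies, which is the trade-off the slide describes.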

- 13. Example: Logistic Regression. [Chart: running time (s) against number of iterations (1, 5, 10, 20, 30) for Hadoop and Spark.] Hadoop: 110 s / iteration. Spark: 80 s for the first iteration, 1 s for further iterations.
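
The gap between the first and later iterations comes from the shape of the algorithm: every iteration rescans the same dataset. A minimal plain-Python sketch of logistic regression by gradient descent shows that loop. It is not the benchmark code; the toy points, the learning rate, and the iteration count are assumptions.

```python
import math

# Hypothetical toy data: (features, label in {0, 1}); the first feature is a bias term.
points = [([1.0, -2.0], 0), ([1.0, -1.0], 0), ([1.0, 1.0], 1), ([1.0, 2.0], 1)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def gradient(w, point):
    """Gradient of the logistic loss for one labelled point."""
    x, y = point
    error = sigmoid(dot(w, x)) - y
    return [error * xi for xi in x]


w = [0.0, 0.0]
learning_rate = 0.5  # assumed value
for _ in range(100):
    # Every iteration rescans the same points; this is the pass that
    # benefits from keeping the dataset in memory between iterations.
    grads = [gradient(w, p) for p in points]                    # "map"
    total = [sum(g[i] for g in grads) for i in range(len(w))]   # "reduce"
    w = [wi - learning_rate * gi for wi, gi in zip(w, total)]

predictions = [1 if sigmoid(dot(w, x)) > 0.5 else 0 for x, _ in points]
```

If `points` had to be reloaded and parsed from disk on every pass, each iteration would pay the load cost again, which is the behavior the Hadoop line in the chart reflects.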

- 14. Behavior with Less RAM. Iteration time (s) by % of working set in memory: cache disabled, 68.8; 25%, 58.1; 50%, 40.7; 75%, 29.7; fully cached, 11.5.

- 15. Spark in Scala and Java. Scala: `val lines = sc.textFile(...)` then `lines.filter(s => s.contains("ERROR")).count()`. Java: `JavaRDD<String> lines = sc.textFile(...);` then `lines.filter(new Function<String, Boolean>() { public Boolean call(String s) { return s.contains("ERROR"); } }).count();`

- 16. Spark in Scala and Java. Scala: `val lines = sc.textFile(...)` then `lines.filter(s => s.contains("ERROR")).count()`. Java 8: `JavaRDD<String> lines = sc.textFile(...);` then `lines.filter(s -> s.contains("ERROR")).count();`

- 17. Supported Operators: map, filter, groupBy, sort, union, join, leftOuterJoin, rightOuterJoin, reduce, count, fold, reduceByKey, groupByKey, cogroup, cross, zip, sample, take, first, partitionBy, mapWith, pipe, save, ...

- 18. Spark Community. 250+ developers and 50+ companies contributing. Most active open source project in big data. [Chart: commits in the past 6 months for MapReduce, YARN, HDFS, Storm, and Spark.]

- 19. Continuing Growth. [Chart: contributors per month to Spark; source: ohloh.net.] The number of contributors keeps growing.

- 20. Get Started. Visit spark.apache.org for docs & tutorials. Easy to run on just your laptop. Free training materials: spark-summit.org

- 21. This Talk. Spark introduction & use cases; modules built on Spark; the power of unification; demo. (Current section: modules built on Spark.)

- 22. The Spark Stack. Spark at the core, with Spark Streaming (real-time), Spark SQL (structured), GraphX (graph), MLlib (machine learning), and more built on top.

- 23. Spark SQL. Evolution of the Shark project. Allows querying structured data in Spark. From Hive: `c = HiveContext(sc)`, `rows = c.sql("select text, year from hivetable")`, `rows.filter(lambda r: r.year > 2013).collect()`. From JSON, where tweets.json holds records such as `{"text": "hi", "user": {"name": "matei", "id": 123}}`: `c.jsonFile("tweets.json").registerAsTable("tweets")`, `c.sql("select text, user.name from tweets")`.

- 24. Spark SQL. Integrates closely with Spark’s language APIs: `c.registerFunction("hasSpark", lambda text: "Spark" in text)`, `c.sql("select * from tweets where hasSpark(text)")`. Uniform interface for data access: Python, Scala, Java, and SQL on one side; Hive, Parquet, JSON, Cassandra, … on the other.
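
The pattern of calling a host-language function from SQL can be tried without a cluster. The sketch below uses Python's built-in `sqlite3` module as a stand-in; it is not Spark SQL, and the table layout and sample rows are made up for illustration. Only the function name `hasSpark` and the shape of the query come from the slide.

```python
import sqlite3

# In-memory SQLite database standing in for the slide's "tweets" table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tweets (text TEXT, user_name TEXT)")
conn.executemany(
    "INSERT INTO tweets VALUES (?, ?)",
    [  # hypothetical sample rows
        ("hi", "matei"),
        ("Spark is fast", "alice"),
        ("I like Spark SQL", "bob"),
    ],
)

# Register an ordinary Python function so SQL can call it by name,
# analogous in spirit to c.registerFunction("hasSpark", ...) on the slide.
conn.create_function("hasSpark", 1, lambda text: 1 if "Spark" in text else 0)

rows = conn.execute(
    "SELECT text FROM tweets WHERE hasSpark(text) ORDER BY text"
).fetchall()
print(rows)
```

The query engine evaluates the predicate by calling back into Python for each row, so filtering logic that is awkward in SQL can stay in the host language.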

- 25. Spark Streaming. Stateful, fault-tolerant stream processing with the same API as batch jobs: `sc.twitterStream(...).map(tweet => (tweet.language, 1)).reduceByWindow("5s", _ + _)` [Chart: throughput, Storm vs. Spark.]
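
A windowed reduce like the one on this slide can be pictured as combining the most recent small batches. The following plain-Python sketch shows that bookkeeping. It is not Spark Streaming: `windowed_counts` is a hypothetical helper, the window is measured in batches instead of seconds, and the input batches are invented.

```python
from collections import Counter, deque


def windowed_counts(batches, window_size):
    """Yield, after each batch, per-key counts over the last `window_size` batches."""
    window = deque(maxlen=window_size)  # the oldest batch drops out automatically
    for batch in batches:
        window.append(Counter(batch))   # count within one batch
        total = Counter()
        for counts in window:
            total += counts             # combine batches with +, like `_ + _`
        yield dict(total)


# Hypothetical stream: one list of tweet languages per batch interval.
batches = [["en", "ja"], ["en"], ["ja", "ja"], ["en"]]
results = list(windowed_counts(batches, window_size=3))
```

After the fourth batch the first one has slid out of the window, so the counts cover only batches two to four.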

- 26. MLlib. Built-in library of machine learning algorithms: K-means clustering; alternating least squares; generalized linear models (with L1 / L2 reg.); SVD and PCA; Naïve Bayes. `points = sc.textFile(...).map(parsePoint)`, `model = KMeans.train(points, 10)`
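
For readers unfamiliar with what a k-means training call computes, here is a small plain-Python sketch of Lloyd's algorithm. It is not MLlib's implementation: the initialization (first `k` points), the fixed iteration count, and the sample points are simplifying assumptions.

```python
def closest_center(point, centers):
    """Index of the center nearest to `point` (squared Euclidean distance)."""
    distances = [sum((p - c) ** 2 for p, c in zip(point, center))
                 for center in centers]
    return distances.index(min(distances))


def kmeans(points, k, iterations=10):
    """Lloyd's algorithm; the first k points serve as initial centers (assumed init)."""
    centers = [list(p) for p in points[:k]]
    for _ in range(iterations):
        # Assignment step: group points by their nearest center.
        clusters = [[] for _ in range(k)]
        for point in points:
            clusters[closest_center(point, centers)].append(point)
        # Update step: move each center to the mean of its members.
        for i, members in enumerate(clusters):
            if members:  # keep the old center if a cluster is empty
                centers[i] = [sum(dim) / len(members) for dim in zip(*members)]
    return centers


# Hypothetical 2-D points forming two well-separated groups.
points = [(0.0, 0.0), (10.0, 10.0), (0.0, 1.0),
          (10.0, 11.0), (1.0, 0.0), (11.0, 10.0)]
centers = kmeans(points, k=2)
labels = [closest_center(p, centers) for p in points]
```

The assignment step is a map over the points and the update step is a per-cluster reduce, repeated over the same data on every iteration, so the algorithm fits the cached, multi-pass pattern described earlier in the talk.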

- 27. This Talk. Spark introduction & use cases; modules built on Spark; the power of unification; demo. (Current section: the power of a unified stack.)

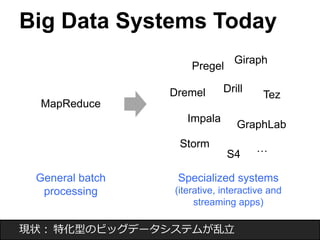

- 28. Big Data Systems Today. General batch processing: MapReduce. Specialized systems (iterative, interactive, and streaming apps): Pregel, Dremel, GraphLab, Storm, Giraph, Drill, Tez, Impala, S4, … The current state: a proliferation of specialized big data systems.

- 29. Spark’s Approach. Instead of specializing, generalize MapReduce to support new apps in the same engine. Two changes (general task DAG & data sharing) are enough to express previous models! Unification has big benefits for the engine and for users. [Diagram: Spark Streaming, GraphX, Shark, MLbase, … on top of Spark.]

- 30. What it Means for Users. Separate frameworks: each of ETL, train, and query does its own HDFS read and HDFS write. Spark: one HDFS read, then ETL, train, and query run in the same engine, plus interactive analysis.

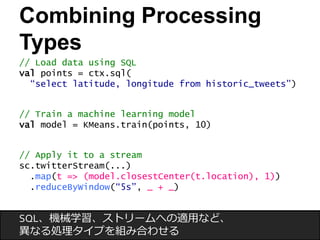

- 31. Combining Processing Types. Load data using SQL: `val points = ctx.sql("select latitude, longitude from historic_tweets")`. Train a machine learning model: `val model = KMeans.train(points, 10)`. Apply it to a stream: `sc.twitterStream(...).map(t => (model.closestCenter(t.location), 1)).reduceByWindow("5s", _ + _)`. SQL, machine learning, and streaming are combined as different processing types in one program.

- 32. This Talk. Spark introduction & use cases; modules built on Spark; the power of unification; demo. (Current section: demo.)

- 33. The Plan. Raw JSON tweets, SQL, machine learning, streaming: (1) read raw JSON from HDFS; (2) extract the tweet text with Spark SQL; (3) extract feature vectors and train a model with k-means; (4) cluster the tweet stream with the trained model.

- 34. Demo!

- 35. Summary: What We Did. Raw JSON, SQL, machine learning, streaming: (1) read raw JSON from HDFS; (2) extracted the tweet text with Spark SQL; (3) extracted feature vectors and trained a model with k-means; (4) clustered the tweet stream with the trained model.

- 36. Code from the demo.
  - Load the tweets and query them with Spark SQL: `import org.apache.spark.sql._` `val ctx = new org.apache.spark.sql.SQLContext(sc)` `val tweets = sc.textFile("hdfs:/twitter")` `val tweetTable = JsonTable.fromRDD(ctx, tweets, Some(0.1))` `tweetTable.registerAsTable("tweetTable")` `ctx.sql("SELECT text FROM tweetTable LIMIT 5").collect.foreach(println)` `ctx.sql("SELECT lang, COUNT(*) AS cnt FROM tweetTable GROUP BY lang ORDER BY cnt DESC LIMIT 10").collect.foreach(println)`
  - Featurize the text, train k-means, and save the model: `val texts = ctx.sql("SELECT text FROM tweetTable").map(_.head.toString)` `def featurize(str: String): Vector = { ... }` `val vectors = texts.map(featurize).cache()` `val model = KMeans.train(vectors, 10, 10)` `sc.makeRDD(model.clusterCenters, 10).saveAsObjectFile("hdfs:/model")`
  - Streaming application (a separate program; `modelFile` and `clusterNumber` are not defined on the slide): `val ssc = new StreamingContext(new SparkConf(), Seconds(1))` `val model = new KMeansModel(ssc.sparkContext.objectFile(modelFile).collect())` `val tweets = TwitterUtils.createStream(ssc, /* auth */)` `val statuses = tweets.map(_.getText)` `val filteredTweets = statuses.filter { t => model.predict(featurize(t)) == clusterNumber }` `filteredTweets.print()` `ssc.start()`

- 37. Conclusion. Big data analytics is evolving to include: more complex analytics (e.g. machine learning); more interactive ad-hoc queries; more real-time stream processing. Spark is a fast platform that unifies these apps. Learn more: spark.apache.org

Editor's Notes

- #4: TODO: Apache incubator logo

- #8: Each iteration is, for example, a MapReduce job

- #11: Add “variables” to the “functions” in functional programming

- #14: 100 GB of data on 50 m1.xlarge EC2 machines

- #19: Alibaba, Tencent. At Berkeley, we have been working on a solution since 2009. This solution consists of a software stack for data analytics, called the Berkeley Data Analytics Stack. The centerpiece of this stack is Spark. Spark has seen significant adoption, with hundreds of companies using it, of which around sixteen have contributed code back. In addition, Spark has been deployed on clusters that exceed 1,000 nodes.

- #20: Despite Hadoop having been around for 7 years, the Spark community is still growing; to us this shows that there’s still a huge gap in making big data easy to use, and that contributors are excited about Spark’s approach here.

![Slide 10: Example: Log Mining](https://image.slidesharecdn.com/a5-submatsuriunification-140912023220-phpapp02/85/Building-a-Unified-Data-Pipline-in-Spark-Apache-Spark-Big-Data-10-320.jpg)