CBlocks - Posix compliant files systems for HDFS

- 1. 1 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock – Posix Compliant File Systems for Hadoop Anu Engineer [email protected] Xiaoyu Yao [email protected]

- 2. 2 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Cblocks - What is CBlocks ⬢ CBlocks allows creation of HDFS backed volumes ⬢ It can be mounted like normal disk devices ⬢ Formatted with traditional file systems like Ext4 or XFS ⬢ The disks are persisted directly to an HDFS cluster. ⬢ Disks can be created dynamically and stored away for later use. ⬢ Allows non-HDFS aware programs to run smoothly on a Hadoop cluster via Docker. Apache Hadoop, Hadoop, Apache are either registered trademarks or trademarks of the Apache Software Foundation in the United States and other countries.

- 3. 3 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlocks – Why support a Posix compliant file system ? ⬢ Data Lake 3.0 attempts to run packaged applications via YARN + docker. ⬢ This creates huge flexibility in what kind of applications can be deployed on a Hadoop cluster. ⬢ Many of these new applications are not Hadoop aware, and they assume a Posix compliant file system ⬢ CBlock allows creation of persistent volumes that are backed by HDFS. ⬢ With CBlock + YARN + DOCKER, a completely new set of applications can be run on Hadoop clusters.

- 4. 4 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock – Contributors ⬢ CBlock relies on Storage Container Manager (SCM) and Container layer. We want to thank all code contributors who made this talk possible. ⬢ Chen Liang and Mukul Kumar Singh did extensive work on CBlock. ⬢ Along with Chen and Mukul, Weiwei Yang, Yuanbo Liu, Yiqun Lin, Nandakumar Vadivelu, Tsz Wo Nicholas Sze, Xiaoyu Yao and Anu Engineer are the core contributors to SCM. ⬢ Along with SCM contributors, Container layer was developed by Chris Nauroth, Arpit Agarwal and Jitendra Pandey. ⬢ A special call out to Sebastian Graf, one of the original authors of jSCSI. Without his support and work CBlock could not exist. A huge thank you to the jSCSI team for the work and support they provided us in making CBlock real.

- 5. 5 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock – Flow of this talk ⬢ This talk takes a user-centric point of view and leads the audience from how CBlock is used to the internals of CBlock. We refer to Storage container manager (SCM) and Containers in CBlock section, but rest assured that we will take you behind the scenes to see how it all works. ⬢ CBlock users start off by creating a simple CBlock Volume. ⬢ Once the volume is created the user can mount it like a normal iSCSI volume. ⬢ Format the volume with a file system they like. ⬢ Use the volume like normal file system.

- 6. 6 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock – Commands to use CBlock ⬢ Create a 4 TB volume owned by user bilbo – hdfs cblock -c bilbo volume1 4TB ⬢ Run a bunch of standard iSCSI commands to mount the volume. – iscsiadm -m node -o new -T bilbo:volume1 -p localhost:3260 – iscsiadm -m node -T bilbo:volume1 --login ⬢ Run format command on the volume to create new file system ⬢ Mount the newly formatted volume and you are ready to use CBlocks.

- 7. 7 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock – Current State ⬢ CBlock is running in the QE environment of Hortonworks. ⬢ It acts as the Log server, the machine that holds the logs of from thousands of hadoop test runs inside hortonworks. ⬢ Log Servers expose the CBlock volume for external access via NFS, Rsync and HTTP. ⬢ Many volumes are deployed in parallel and TBs of data being written regularly. ⬢ CBlock is still a work in progress – Ratis based replication work needs to complete for CBlock to be highly redundant and fault tolerant. ⬢CBlock Volume Sizes –We have created volumes from 1GB size to 6TB. –We have created 100s of Volumes and used them concurrently. ⬢ It is a stable, usable piece of software. This deployment allows us to test the HDFS- 7240 (Ozone branch) continuously.

- 8. 8 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock Architecture

- 9. 9 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock Components ⬢ Containers – Actual storage locations on Datanodes. –We acknowledge the term container is overloaded. No relation to YARN containers or LXC. –Assume container means a collection of blocks on a datanode for now. –Containers deep dive to follow. ⬢ CBlock ISCSI Server – Deployed on datanodes that act as iSCSI servers to which iscsi clients connect. ⬢ CBlock Server - Maps volumes to Containers. The metadata server for CBlock. Create/Mount operations are serviced by the CBlock Server. ⬢ Storage Container Manager (SCM) - Manages the container life cycle.

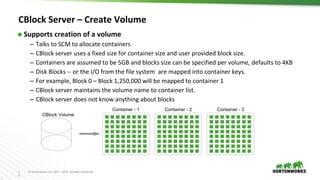

- 10. 1 0 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock Server – Create Volume ⬢ Supports creation of a volume – Talks to SCM to allocate containers – CBlock server uses a fixed size for container size and user provided block size. – Containers are assumed to be 5GB and blocks size can be specified per volume, defaults to 4KB – Disk Blocks -- or the I/O from the file system are mapped into container keys. – For example, Block 0 – Block 1,250,000 will be mapped to container 1 – CBlock server maintains the volume name to container list. – CBlock server does not know anything about blocks

- 11. 1 1 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock Server – Mount Volume ⬢ CBlock iSCSI server reads the container list from CBlock server. ⬢ Since each container is assumed to have a fixed size and blocks are also fixed size, no extra metadata is needed from CBlock server other than a ordered list of containers. ⬢ iSCSI server reads this list and replies to the client that is trying to mount the disk.

- 12. 1 2 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock Server – I/O path ⬢ File system sends I/O to the mounted device. ⬢ The iSCSI server takes the I/O and uses simple math to locate the container. ⬢ ContainerSize / BlockSize == 5GB/4KB == 1,250,000 blocks are in the first container. ⬢ iSCSI server writes to that container using putSmallFile API. ⬢ For example, if block0 is being written, it will write to volume0.container0.block0. ⬢ Since there are no lookups involved I/O path runs at the network speed.

- 13. 1 3 © Hortonworks Inc. 2011 – 2016. All Rights Reserved CBlock Server – I/O path - Caches ⬢ Replicated I/O can be expensive from the point of view for many applications. ⬢ Hence CBlock supports a local on disk write-back cache. ⬢ The presence of the cache not only makes I/O faster, but avoids lots of remote reads. ⬢ By default the cache is disabled, but enabling it makes CBlock as fast as local storage. ⬢ We intended to support Write-through caches in future. ⬢ When caches are enabled, a clean unmount is recommended.

- 14. 1 4 © Hortonworks Inc. 2011 – 2016. All Rights Reserved1 4 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Container Framework

- 15. 1 5 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Container Framework ⬢ A shareable generic block service that can be used by distributed storage services. ⬢ Make it easier to develop new storage services - BYO storage format and naming scheme. ⬢ Design Goals –No single points of failure. All services are replicated. –Avoid bottlenecks •Minimize central state •No verbose Block Reports ⬢ Lessons learned from large scale HDFS clusters. ⬢ Ideas from earlier proposals in HDFS community (Container Framework borrows heavily from ideas proposed in HDFS-5477)

- 16. 1 6 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Container Framework

- 17. 1 7 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Container Framework ⬢ Will offer the following services to build other storage services. ⬢ Replicated Log - This offers a replicated state machine as a service. The replicated log is the basis for all other abstractions offered by this framework. ⬢ Replicated Block - a key value pair. The key is assumed to be unique and value is a stream of bytes. ⬢ Replicated Container - A collection of replicated blocks is a replicated container. Replicated containers can be open or closed. ⬢ Replicated Map - A distributed map built using replicated log. This is the standard building block used when building name services like CBlock server or Ozone’s Namespace manager (KSM).

- 18. 1 8 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Services using Container Framework Service Namespace Block Services Data store HDFS Namenode SCM Containers CBlock CBlock Server SCM Containers Ozone Key space Manager(KSM) SCM Containers ⬢ Set of Storage services sharing a single storage cluster. ⬢ Ozone and CBlock shares a common backend. ⬢ Only name services and client protocol is different. – Ozone uses KSM and Ozone Rest Protocol to speak to the cluster. – CBlock uses CBlock Server and ISCSI protocol to communicate to the cluster.

- 19. 1 9 © Hortonworks Inc. 2011 – 2016. All Rights Reserved1 9 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Container Manager

- 20. 2 0 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Container Manager - Container ⬢ A container is the unit of replication. –Size bounded by how quickly it can be re-replicated after a loss. ⬢ Each container is an independent key-value store. –No requirements on the structure or format of keys/values. –Keys are unique only within a container. ⬢ E.g. key-value pair could be one of –An Ozone Key-Value pair –An HDFS block ID and block contents •Or part of a block, when a block spans containers. –A CBlock disk block and its contents

- 21. 2 1 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Containers (continued) ⬢ Each container has metadata – Metadata consistency maintained via the RAFT protocol – Metadata consists of keys and references to chunks. ⬢ Container metadata stored in LevelDB/RocksDB. – Exact choice of KV store unimportant. ⬢ A chunk is a piece of user data. – Chunks are replicated via a data pipeline. – Chunks can be of arbitrary sizes e.g. a few KB to GBs. – Each chunk reference is a (file, offset, length) triplet. ⬢ Containers may garbage collect unreferenced chunks. ⬢ Each container independently decides how to map chunks to files – Rewrites files for performance, compaction and overwrites.

- 22. 2 2 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Containers (continued)

- 23. 2 3 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Container Manager ⬢ A fault-tolerant replicated service. ⬢ Replicates its own state using RAFT protocol. ⬢ SCM is a highly available replicated block manager – Manages Blocks – Manages Containers – Manages Pipelines – Manages Pools – Manages Datanodes

- 24. 2 4 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Container Manager – Block Services ⬢ SCM acts like the traditional block manager of HDFS. – Providing service for HDFS or Ozone uses the block services. – APIs support allocateBlock, deleteBlock, infoBlock etc. ⬢With Block services Namespace services can deal with a higher level abstraction than one offered by container services. ⬢ CBlock currently does not use the block services, instead relies on pre-allocating the block space using Container Services.

- 25. 2 5 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Container Manager – Container Services ⬢ Containers are a collection of unrelated blocks. ⬢ Containers can be open or closed. ⬢ An Open container is mutable and a closed container is immutable. ⬢ A closed /immutable container makes it easier to support Erasure coding. ⬢ Caching is much easier in the presence of closed containers. ⬢ Deleting a key offers some interesting problems. ⬢ Deleting a key will be supported via a container rewrite, but that is a pretty large topic to cover here.

- 26. 2 6 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Containers (continued) ⬢ Containers can be Open or Closed. ⬢ Open Containers –Mutable –Replicated via Apache Ratis –Consistency is guaranteed by Raft Protocol. ⬢ Closed Containers –Immutable –Replicated via SCM –Consistency is guaranteed by replication protocols similar to the one used by HDFS block replication today.

- 27. 2 7 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Container Manager – Pipelines ⬢ Containers replicate data using RAFT protocol. ⬢ Apache Ratis provides a RAFT library used by SCM. ⬢ SCM creates a replica set of three machines as datanode pipeline. ⬢ Metadata is replicated via RAFT while data is written directly to blocks. ⬢ Open Containers exist on machines backed by pipelines. ⬢ SCM manages the lifecycle of these pipelines. ⬢ Closed containers are immutable and they can exist independent of pipelines.

- 28. 2 8 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Container Manager – Pools ⬢ Pools define replication domains -- That is it is a set of machines in which a container can exist. ⬢ Based on work done at Facebook and CopySets paper. ⬢ A closed container is replicated only to members of a pool. ⬢ By default, a pool consists of 24 machines. ⬢ This allows SCM to scale to very large clusters, because replica processing can be constrained to a pool at a time. ⬢ Reduces the chance of data loss in the event of loss of more than 2 machines.

- 29. 2 9 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Container Manager – Datanodes management ⬢ Similar to what Namenodes does, SCM listens to heartbeats from Datanodes. ⬢ Similar to Namenode uses HB response to deliver commands to Datanodes. ⬢ Datanodes expose the container protocol and shares the HTTP endpoint with WebHDFS for Ozone.

- 30. 3 0 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Replication Pipeline – Loss of a single node ⬢ Raft healing kicks in. ⬢ SCM will recruit a new datanode to heal this pipeline. ⬢ One important thing to note with CBlock and Ozone, we can control how many replication pipelines or Open containers should be active in the cluster. ⬢ Defaults to creating 30% of Maximum pipeline capacity of the cluster. Generally, no need for active user intervention, but can be optimized by user for specific workloads.

- 31. 3 1 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Replication Pipeline – Loss of two nodes ⬢ If and when you lose two nodes that form a RAFT quorum, SCM cannot automatically heal that ring. ⬢Once a pipeline is lost, we can still read the data from the single node’s RAFT state machine. That is user data is not lost. We simply don’t know if we are reading the latest data. ⬢ Manual intervention required if users decide to heal this ring. ⬢ Probability of losing 2 nodes in RAFT ring becomes lower as number of datanodes increase.

- 32. 3 2 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Replication Pipeline – Loss of two nodes ⬢ SCM is planning to deal with using three different techniques. –These are ideas under consideration and not something that is working now. ⬢SCM actively tries not to get into this situation, when a single node is lost, SCM closes all open containers and re-routes all I/O to healthy pipelines. ⬢One approach is to support MVCC, so that we can read data and if the other 2 nodes come back -- we are able to resolve which version is latest. ⬢Another simpler approach is to write checkpoint entries and we are guaranteed that entries up to the checkpoint are consistent, that is replicated consistently. ⬢All these approaches suffer from the issue that we can not guarantee the liveness of the data, it is possible that we might be reading older data.

- 33. 3 3 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Storage Container Manager - Scale ⬢ Designed to handle trillions of objects. Container reports and heartbeats are controlled by SCM. Since replication reconciliation is done pool by pool, SCM scales to any very large clusters. ⬢ The average size of a container is completely independent of the individual file sizes. That is small files is not a problem with container architecture. ⬢ Ratis offers fairly fast replication performance and can maintain pretty high throughputs. ⬢ SCM assumes the presence of SSDs, since SCM assumes partial namespace in memory. So IOPS matter. ⬢ GC is not an issue, since metadata is cached off-heap which does not cause GC pressures. SCM, KSM and CBlock server uses native memory to avoid GC pressure.

- 34. 3 4 © Hortonworks Inc. 2011 – 2016. All Rights Reserved High Availability for Storage Container Manager and CBlock server ⬢ Both CBlock Server and SCM has critical metadata that is needed for the operation of CBlock. ⬢ This data will be replicated using RAFT protocol. ⬢ So both CBlock server and SCM will always deployed in HA mode. ⬢ This is a work in progress.

- 35. 3 5 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Acknowledgements ⬢ Ozone is being actively developed by Jitendra Pandey, Chris Nauroth, Tsz Wo (Nicholas) Sze, Jing Zhao, Weiwei Yang, Yuanbo Liu, Mukul Kumar Singh, Chen Liang, Nandakumar Vadivelu, Yiqun Lin, Suresh Srinivas, Sanjay Radia, Xiaoyu Yao, Enis Soztutar, Devaraj Das, Arpit Agarwal and Anu Engineer. ⬢ The Apache community has been very helpful and we were supported by comments and contributions from khanderao, Kanaka Kumar Avvaru, Edward Bortnikov, Thomas Demoor, Nick Dimiduk, Chris Douglas, Jian Fang, Lars Francke, Gautam Hegde, Lars Hofhansl, Jakob Homan, Virajith Jalaparti, Charles Lamb, Steve Loughran, Haohui Mai, Colin Patrick McCabe, Aaron Myers, Owen O’Malley, Liam Slusser, Jeff Sogolov, Andrew Wang, Fengdong Yu, Zhe Zhang & Kai Zheng.

- 36. 3 6 © Hortonworks Inc. 2011 – 2016. All Rights Reserved3 6 © Hortonworks Inc. 2011 – 2016. All Rights Reserved Thank You