Classification by backpropagation

- 1. By B. Kohila, MSc (IT), Nadar Saraswathi College of Arts and Science

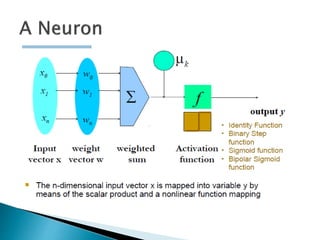

- 2. A neural network:
  - A set of connected input/output units in which each connection has an associated weight
  - Computer programs
  - Pattern detection and machine learning algorithms
  - Build predictive models from large databases
  - Modeled on the human nervous system
  - Offshoot of AI
  - McCulloch and Pitts
  - Originally targeted image understanding, human learning, and computer speech

- 3. Highly accurate predictive models for a large number of different types of problems; ease of use and deployment: poor; connection between nodes; number of units; training level; learning capability; model is built one record at a time

- 5. Model: specified by weights; training modifies weights; complex models; fully interconnected; multiple hidden layers

- 6. OR gate functionality:

  | Input 1 | Input 2 | Weighted sum | Output |
  |---------|---------|--------------|--------|
  | 0       | 0       | 0            | 0      |
  | 0       | 1       | 1            | 1      |
  | 1       | 0       | 1            | 1      |
  | 1       | 1       | 2            | 1      |
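The OR gate values above can be reproduced with a single threshold unit. This is a minimal sketch: the weights of 1 on each input and the threshold of 1 are assumptions read off the weighted-sum column, not values stated on the slide, and `or_gate` is a hypothetical helper name.

```python
def or_gate(x1, x2, w1=1, w2=1, threshold=1):
    """Return (weighted_sum, output) for one threshold unit.

    The weights and threshold are assumed values chosen so that the
    weighted sum matches the slide's table.
    """
    weighted_sum = w1 * x1 + w2 * x2
    output = 1 if weighted_sum >= threshold else 0
    return weighted_sum, output


# Rebuild the four rows of the table: (input 1, input 2, weighted sum, output).
rows = [(x1, x2, *or_gate(x1, x2)) for x1 in (0, 1) for x2 in (0, 1)]
for row in rows:
    print(row)
```

Any positive threshold up to 1 would give the same outputs for these four rows; 1 is simply a convenient choice.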

- 7. Backpropagation:
  - A neural network learning algorithm
  - Started by psychologists and neurobiologists to develop and test computational analogues of neurons
  - During the learning phase, the network learns by adjusting the weights so that it can predict the correct class label of the input tuples
  - Also referred to as connectionist learning because of the connections between units

- 8. Weaknesses:
  - Long training time
  - Requires a number of parameters that are typically best determined empirically, e.g., the network topology or "structure"
  - Poor interpretability: it is difficult to interpret the symbolic meaning behind the learned weights and the "hidden units" in the network

  Strengths:
  - High tolerance to noisy data
  - Ability to classify untrained patterns
  - Well suited for continuous-valued inputs and outputs
  - Successful on a wide array of real-world data
  - Algorithms are inherently parallel
  - Techniques have recently been developed for extracting rules from trained neural networks

- 10. The inputs to the network correspond to the attributes measured for each training tuple. Inputs are fed simultaneously into the units making up the input layer. They are then weighted and fed simultaneously to a hidden layer. The number of hidden layers is arbitrary, although usually only one is used. The weighted outputs of the last hidden layer are input to the units making up the output layer, which emits the network's prediction. The network is feed-forward in that none of the weights cycles back to an input unit or to an output unit of a previous layer.
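The flow from input layer to hidden layer to output layer can be sketched as a forward pass. This is an illustration only: the slide does not name an activation function, so the sigmoid here is an assumption, and the 3-2-1 topology and all weight and bias values are made up for the example.

```python
import math


def sigmoid(z):
    # Assumed activation function; the slide does not specify one.
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute one layer's outputs; weights[j][i] links input i to unit j."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed inputs through each (weights, biases) pair in order.

    Values only move forward: no layer's output is fed back to an
    earlier layer.
    """
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 3 input units, 2 hidden units, 1 output unit.
hidden_layer = ([[0.2, -0.3, 0.4], [0.1, 0.5, -0.2]], [-0.4, 0.2])
output_layer = ([[-0.3, -0.2]], [0.1])
prediction = forward([1.0, 0.0, 1.0], [hidden_layer, output_layer])
print(prediction)
```

Adding another hidden layer only means adding another `(weights, biases)` pair to the list passed to `forward`.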

- 11. First decide the network topology: the number of units in the input layer, the number of hidden layers (if more than one), the number of units in each hidden layer, and the number of units in the output layer. Normalize the input values for each attribute measured in the training tuples to the range [0.0, 1.0]. For discrete-valued attributes, use one input unit per domain value, each initialized to 0. For classification with more than two classes, use one output unit per class. If a trained network's accuracy is unacceptable, repeat the training process with a different network topology or a different set of initial weights.
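The two input-preparation steps can be sketched as follows. This assumes min-max scaling as the normalization method (the slide only gives the target range) and uses hypothetical helper names `min_max_normalize` and `one_hot`; the attribute values are invented for illustration.

```python
def min_max_normalize(values):
    """Scale one attribute's values to the range [0.0, 1.0].

    Min-max scaling is an assumed choice of normalization method.
    """
    lowest, highest = min(values), max(values)
    if highest == lowest:
        # A constant attribute carries no information; map it to 0.0.
        return [0.0 for _ in values]
    return [(v - lowest) / (highest - lowest) for v in values]


def one_hot(value, domain):
    """One unit per domain value: all 0 except the unit for `value`."""
    return [1 if value == candidate else 0 for candidate in domain]


ages = [10, 20, 30]                    # hypothetical continuous attribute
colours = ["red", "green", "blue"]     # hypothetical discrete domain

print(min_max_normalize(ages))
print(one_hot("green", colours))
```

The same `one_hot` encoding works for the output layer when there are more than two classes: pass the class label and the list of classes.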

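- 12. Updating of weights and biases:
  - Case updating: weights and biases are updated after each training tuple is presented
  - Epoch updating: the changes are accumulated and applied after all training tuples have been presented

  Terminating conditions:
  - Weight changes are below a threshold
  - Error rate / misclassification rate is small
  - A set number of epochs has been reached

  Efficiency of backpropagation:
  - O(|D| × w) for each epoch, where |D| is the number of training tuples and w is the number of weights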
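A training loop with case updating and the three terminating conditions might look like the sketch below. It is an illustration under assumptions, not the presenter's implementation: the sigmoid activation, the single hidden layer with one output unit, the learning rate, the thresholds, and the OR training data are all chosen for the example. Each epoch visits every tuple once and touches every weight a constant number of times, which is where the O(|D| × w) cost per epoch comes from.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))  # assumed activation function


def forward(x, net):
    """Return (hidden activations, output) for one tuple."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(net["w_h"], net["b_h"])]
    out = sigmoid(sum(w * h for w, h in zip(net["w_o"], hidden)) + net["b_o"])
    return hidden, out


def error_rate(data, net):
    """Fraction of tuples whose thresholded output misses the class label."""
    wrong = sum((forward(x, net)[1] >= 0.5) != bool(t) for x, t in data)
    return wrong / len(data)


def train(data, n_hidden=2, rate=0.5, max_epochs=5000,
          min_change=1e-4, max_error=0.0, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    net = {  # small random initial weights and biases
        "w_h": [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                for _ in range(n_hidden)],
        "b_h": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],
        "w_o": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],
        "b_o": rng.uniform(-0.5, 0.5),
    }
    reason = "maximum number of epochs reached"
    for epoch in range(1, max_epochs + 1):
        largest = 0.0
        for x, target in data:
            hidden, out = forward(x, net)
            # Error terms, propagated backward from the output unit.
            err_o = out * (1 - out) * (target - out)
            err_h = [h * (1 - h) * err_o * w
                     for h, w in zip(hidden, net["w_o"])]
            # Case updating: apply the changes right after this tuple.
            for j in range(n_hidden):
                change = rate * err_o * hidden[j]
                net["w_o"][j] += change
                largest = max(largest, abs(change))
                for i in range(n_in):
                    change = rate * err_h[j] * x[i]
                    net["w_h"][j][i] += change
                    largest = max(largest, abs(change))
                net["b_h"][j] += rate * err_h[j]
            net["b_o"] += rate * err_o
        if largest < min_change:
            reason = "weight changes below threshold"
            break
        if error_rate(data, net) <= max_error:
            reason = "misclassification rate is small"
            break
    return net, epoch, reason


or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
net, epochs, reason = train(or_data)
print(epochs, reason)
```

Epoch updating would differ only in accumulating the `change` values across all tuples and applying them once at the end of each epoch.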

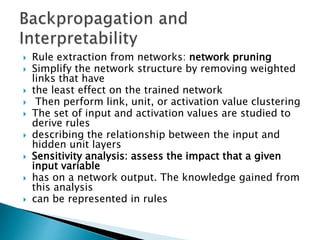

- 13. Rule extraction from networks:
  - Network pruning: simplify the network structure by removing weighted links that have the least effect on the trained network
  - Then perform link, unit, or activation value clustering
  - The sets of input and activation values are studied to derive rules describing the relationship between the input and hidden unit layers
  - Sensitivity analysis: assess the impact that a given input variable has on a network output. The knowledge gained from this analysis can be represented in rules
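Sensitivity analysis can be sketched by nudging one input and watching how the output moves. Everything here is assumed for illustration: `toy_network` is a hypothetical stand-in for a trained network with made-up weights, the finite-difference step is one simple way to measure impact, and the rule wording is only an example of how the result could be expressed.

```python
import math


def toy_network(x):
    """Hypothetical stand-in for a trained network (made-up weights)."""
    z = 2.0 * x[0] - 1.0 * x[1] + 0.0 * x[2]
    return 1.0 / (1.0 + math.exp(-z))


def sensitivity(model, x, index, delta=1e-3):
    """Approximate change in output per unit change in x[index]."""
    bumped = list(x)
    bumped[index] += delta
    return (model(bumped) - model(x)) / delta


def describe(model, x, names, tolerance=1e-6):
    """Turn each input's sensitivity into a simple rule-like statement."""
    rules = []
    for i, name in enumerate(names):
        s = sensitivity(model, x, i)
        if s > tolerance:
            rules.append(f"IF {name} increases THEN output increases")
        elif s < -tolerance:
            rules.append(f"IF {name} increases THEN output decreases")
        else:
            rules.append(f"{name} has little effect on the output")
    return rules


for rule in describe(toy_network, [0.5, 0.5, 0.5], ["a", "b", "c"]):
    print(rule)
```

An input whose sensitivity stays near zero across many tuples is also a natural candidate for pruning, since its links have little effect on the output.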
