Classsourcing: Crowd-Based Validation of Question-Answer Learning Objects @ ICCCI 2013

- 1. Classsourcing: Crowd-Based Validation of Question-Answer Learning Objects Jakub Šimko, Marián Šimko, Mária Bieliková, Jakub Ševcech, Roman Burger 12.9.2013 ICCCI ’13

- 2. This talk • How can we use a crowd of students to reinforce the learning process? • What are the upsides and downsides of using a student crowd? • What are the tricky parts? • The case of a specific method: an interactive exercise featuring validation of text answer correctness

- 3. Using students as a crowd • Cheap (free) • Students can be motivated – The process must benefit them – Secondarily reinforced by the teacher’s points • Heterogeneous (in skill, in attitude) • Tricky behavior

- 4. Example 1: Duolingo • Learning a language by translating real web content • Translations and ratings also support the learning itself

- 5. Example 2: ALEF • Adaptive LEarning Framework • Students crowdsourced for highlights, tags, external resources

- 6. Our method: motivation • Students like online interactive exercises – Some as a preferred form of learning – Most as a self-testing tool (used prior to exams) • … but these are limited – They require manually created content – Automated evaluation is limited for certain answer types • OK with (multi)choice questions, numeric results, … • BAD with free-text answers, visuals, processes, … • … limited to certain domains of learning content

- 7. Method goal • Bring in an interactive online exercise that: 1. Provides instant feedback to the student 2. Goes beyond the limits of knowledge types 3. Is less dependent on manual content creation

- 8. Method idea: Instead of answering a question with free text, the student evaluates an existing answer… The question-answer combination is our learning object.

- 9. … like this:

- 10. This form of exercise • Uses answers of student origin – Difficult and tricky to evaluate, thus challenging • Enables re-use of existing answers – Plenty of past exam questions and answers – Plenty of additional exercises done by students • Feedback may be provided – By existing teacher evaluations – By aggregated evaluations of other students (average)
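A minimal sketch of how the instant feedback could be computed, assuming a student's evaluation is a value in [0, 1] and that a teacher's evaluation, when one exists, takes precedence over the average of other students. The helper names and the distance-based feedback value are hypothetical, not taken from ALEF.

```python
from statistics import mean
from typing import Optional, Sequence


def reference_value(teacher_label: Optional[bool],
                    crowd_evaluations: Sequence[float]) -> Optional[float]:
    """Value the student's evaluation is compared with (hypothetical helper).

    Assumption: a teacher's evaluation wins over the crowd; otherwise the
    average of other students' evaluations is used.
    """
    if teacher_label is not None:
        return 1.0 if teacher_label else 0.0
    if crowd_evaluations:
        return mean(crowd_evaluations)
    return None  # nobody has evaluated this answer yet


def feedback(student_value: float,
             teacher_label: Optional[bool],
             crowd_evaluations: Sequence[float]) -> Optional[float]:
    """Distance between the student's evaluation and the reference (0 = full agreement)."""
    ref = reference_value(teacher_label, crowd_evaluations)
    if ref is None:
        return None
    return abs(student_value - ref)


# Usage: no teacher evaluation, two peers rated the answer 0.5 and 1.0.
print(feedback(0.8, None, [0.5, 1.0]))
```

Falling back to the crowd average is what lets the exercise give feedback on answers no teacher has graded yet.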

- 11. Deployment • Integrated into the ALEF learning framework • 2 weeks, 200 questions (20 answers each) • 142 students • 10 000 collected evaluations • Greedy task assignment – We wanted 16 evaluations for each question-answer pair (in the end, 465 pairs reached this). – Counter-requirement: a student can’t be assigned the same question again for some time.

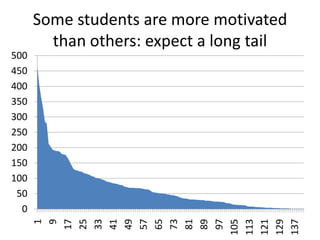
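The greedy task assignment can be sketched as follows, assuming each question-answer pair is a (question, answer) tuple, the evaluation count is incremented at assignment time, and "for some time" is modelled as the student's last few assignments. `COOLDOWN` and the helper names are hypothetical; only the target of 16 evaluations comes from the slide.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Pair = Tuple[str, str]  # (question_id, answer_id)

TARGET = 16   # wanted evaluations per question-answer pair (from the slide)
COOLDOWN = 3  # hypothetical: a question is blocked for this many subsequent assignments


def assign(student: str,
           pairs: Sequence[Pair],
           counts: Dict[Pair, int],
           history: Dict[str, List[str]],
           target: int = TARGET,
           cooldown: int = COOLDOWN) -> Optional[Pair]:
    """Greedily pick the least-evaluated pair the student may currently see."""
    past = history.get(student, [])
    # Questions this student was given in the last `cooldown` assignments.
    recent = set(past[-cooldown:]) if cooldown > 0 else set()
    candidates = [p for p in pairs
                  if counts[p] < target and p[0] not in recent]
    if not candidates:
        return None  # every pair is saturated or blocked for this student
    chosen = min(candidates, key=lambda p: counts[p])  # fewest evaluations first
    counts[chosen] += 1
    history.setdefault(student, []).append(chosen[0])
    return chosen
```

Always taking the least-evaluated pair spreads evaluations evenly towards the target, while the cooldown check trades some of that evenness for variety per student.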

- 12. Some students are more motivated than others: expect a long tail [Chart: activity per student, y-axis from 0 to 500]

- 13. Crowd evaluation: is the answer correct or wrong? • Our first approach (given a set of individual evaluations, each a value between 0 and 1): – Compute the average – Split the interval in half – Discretize accordingly • … didn’t work well – the “trustful student effect”
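The first, naive aggregation can be written as below. Evaluations are assumed to be values in [0, 1], and treating an average of exactly 0.5 as "correct" is an arbitrary choice. The usage line uses hypothetical ratings to show how a lenient crowd pushes a wrong answer over the midpoint.

```python
from statistics import mean
from typing import Sequence


def naive_crowd_label(evaluations: Sequence[float]) -> bool:
    """Average the evaluations and split [0, 1] in half: True means 'correct'."""
    return mean(evaluations) >= 0.5


# Hypothetical ratings of a wrong answer by lenient ("trustful") students:
# the average lands above 0.5, so the wrong answer is labelled correct.
print(naive_crowd_label([0.6, 0.7, 0.5, 0.8]))
```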

- 14. Example of a trustful student [Chart: estimated correctness (intervals from 0 to 1), y-axis from 0 to 120] • True ratio of correct and wrong answers in the data set was 2:1

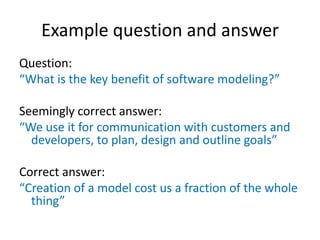

- 15. Example question and answer Question: “What is the key benefit of software modeling?” Seemingly correct answer: “We use it for communication with customers and developers, to plan, design and outline goals” Correct answer: “Creation of a model cost us a fraction of the whole thing”

- 16. Interpretation of the crowd • Wrong answer [chart: evaluations on a 0 to 1 scale] • Correct answer [chart: evaluations on a 0 to 1 scale] • Correctness computation – Average – Threshold – Uncertainty interval around the threshold
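A sketch of the correctness computation with a threshold `t` and an uncertainty interval `eps` around it. Treating the interval boundaries as decided (not unknown) is an assumption; with `eps = 0` no answer is ever left unknown.

```python
from statistics import mean
from typing import Sequence


def crowd_correctness(evaluations: Sequence[float], t: float, eps: float) -> str:
    """Label a question-answer pair from its evaluations (each in [0, 1])."""
    avg = mean(evaluations)
    if avg >= t + eps:
        return "correct"
    if avg <= t - eps:
        return "wrong"
    return "unknown"  # average falls inside the uncertainty interval around t


print(crowd_correctness([0.9, 0.8, 1.0], t=0.65, eps=0.05))
```

Widening `eps` leaves more pairs undecided in exchange for more reliable verdicts on the rest.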

- 17. Evaluation: crowd correctness • We trained the threshold (t) and the uncertainty interval (ε) • Resulting precision (%), with the ratio of “unknown cases” (%) in parentheses:

  | t | ε = 0.00 | ε = 0.05 | ε = 0.10 |
  |------|-------------|---------------|---------------|
  | 0.55 | 79.60 (0.0) | 83.52 (12.44) | 86.88 (20.40) |
  | 0.60 | 82.59 (0.0) | 86.44 (11.94) | 88.97 (27.86) |
  | 0.65 | 84.58 (0.0) | 87.06 (15.42) | 91.55 (29.35) |
  | 0.70 | 80.10 (0.0) | 88.55 (17.41) | 88.89 (37.31) |
  | 0.75 | 79.10 (0.0) | 79.62 (21.89) | 86.92 (46.77) |
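Training t and ε can be sketched as a grid search over labelled question-answer pairs, reporting for each combination the precision over decided pairs and the share of unknown pairs, both in percent. Reading "precision" as the share of decided pairs whose crowd label matches the true label, and the `pick()` rule that caps the unknown ratio, are assumptions; the slides do not say how the final parameters were chosen.

```python
from itertools import product
from statistics import mean
from typing import Dict, List, Optional, Sequence, Tuple

# One labelled item: (student evaluations in [0, 1], True if the answer is correct)
Item = Tuple[Sequence[float], bool]


def score(items: List[Item], t: float, eps: float) -> Tuple[float, float]:
    """Return (precision %, unknown %) for threshold t and uncertainty interval eps."""
    decided = hits = unknown = 0
    for evaluations, truth in items:
        avg = mean(evaluations)
        if avg >= t + eps:
            label = True
        elif avg <= t - eps:
            label = False
        else:
            unknown += 1  # inside the uncertainty interval: no verdict
            continue
        decided += 1
        hits += int(label == truth)
    precision = 100 * hits / decided if decided else 0.0
    return precision, 100 * unknown / len(items)


def grid(items: List[Item], ts: Sequence[float],
         epss: Sequence[float]) -> Dict[Tuple[float, float], Tuple[float, float]]:
    """Score every (t, eps) combination: one entry per table cell."""
    return {(t, e): score(items, t, e) for t, e in product(ts, epss)}


def pick(results: Dict[Tuple[float, float], Tuple[float, float]],
         max_unknown: float) -> Optional[Tuple[float, float]]:
    """Hypothetical selection rule: best precision with at most max_unknown % unknown."""
    allowed = {k: v for k, v in results.items() if v[1] <= max_unknown}
    if not allowed:
        return None
    return max(allowed, key=lambda k: allowed[k][0])
```

Capping the unknown ratio makes the trade-off visible in the table explicit: higher ε buys precision at the cost of more undecided pairs.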

- 18. Aggregate distribution of student evaluations across correctness intervals
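An aggregate distribution like this can be reproduced by binning evaluations into correctness intervals, separately for correct and wrong answers. Ten equal-width intervals on [0, 1] are an assumption, as are the helper names.

```python
from typing import Iterable, List, Sequence, Tuple


def interval_histogram(values: Iterable[float], bins: int = 10) -> List[int]:
    """Count evaluations in `bins` equal-width intervals of [0, 1]."""
    counts = [0] * bins
    for v in values:
        if not 0.0 <= v <= 1.0:
            raise ValueError(f"evaluation out of range: {v}")
        # 1.0 would index one past the end, so it joins the last interval.
        counts[min(int(v * bins), bins - 1)] += 1
    return counts


def distributions(records: Sequence[Tuple[float, bool]]) -> Tuple[List[int], List[int]]:
    """Split (evaluation, answer_is_correct) records into (wrong, correct) histograms."""
    wrong = interval_histogram(v for v, ok in records if not ok)
    correct = interval_histogram(v for v, ok in records if ok)
    return wrong, correct
```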

- 19. Conclusion • Students can work as a cheap crowd, but – They need to feel the benefits of their work – They abuse/spam the system if this benefits them – Be more careful with their results (“trustful student”) – Expect a long-tailed distribution of student activity • Interactive exercise with immediate feedback, bootstrapped from the crowd • Future work: – Moving towards learning-support CQA – Expertise detection (spam detection)