Cloud Cost Management and Apache Spark with Xuan Wang

- 1. Cloud Cost Management and Apache Spark (Xuan Wang, Databricks) #DSSAIS13

- 3. Introduction
  - Goal of this talk
    - share our experience in managing cloud costs
    - tools and technologies
    - lessons learnt and good practices
    - go wide rather than go deep
  - Why do we care about cloud cost?
    - year-over-year growth in cloud revenue in Q1 2018: Amazon 49%, Microsoft 58%

- 4. Databricks’ Unified Analytics Platform
  - Collaborative notebooks for data engineers and data scientists
  - Databricks Runtime (Delta, SQL, Streaming, XGBoost, ...), powered by Apache Spark
  - Cloud-native service
  - Unifies data engineers and data scientists; unifies data and AI technologies; eliminates infrastructure complexity

- 6. Three paths toward cost control
  - Native reporting from cloud providers
    - good general information and support
    - limited options; does not scale as the environment grows
  - Commercial tools
    - more detail and flexibility, connectors to raw data
    - not enough customization, additional charges
  - In-house solutions
    - most flexible, deeper understanding of the costs
    - opportunity cost of the engineering time spent building them

- 7. Challenges in cloud cost control
  - overwhelming and complex usage details
    - need to convert data into insights/actions
  - gaps between “hands” and “wallets”
    - developers consume resources without realizing the charges
  - evolving cloud landscape
    - external: new services, new discounts, ...
    - internal: new use cases, new architecture, ...

- 9. Our solutions
  - Raw data: cost and usage, S3 access logs, S3 inventory, EC2/RDS snapshots, reserved instances, ...
  - Data lake: Databricks Delta
  - Analytics: Databricks Notebooks, monitors and alerts, BI tools (Superset, Tableau, ...)
  - The data problem: ETL and cost attribution
  - The process problem: prioritize, optimize, monitor, automate

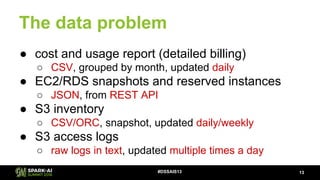

- 12. The data problem
  - cost and usage report (detailed billing)
    - CSV, grouped by month, updated daily
  - EC2/RDS snapshots and reserved instances
    - JSON, from REST API
  - S3 inventory
    - CSV/ORC, snapshot, updated daily/weekly
  - S3 access logs
    - raw text logs, updated multiple times a day

- 14. Data pipelines with Spark
  - Raw data → ETL → data lake → analytics → insight
  - Challenges
    - data corruption
    - multiple jobs/staging tables
    - reliability and consistency

- 15. Databricks Delta: analytics-ready data
  - Input: lots of new data (customer data, click streams, sensor data (IoT), video/speech, ...)
  - 1. Data reliability: ACID-compliant transactions; schema enforcement & evolution
  - 2. Query performance: very fast at scale; indexing & caching (10-100x faster)
  - 3. Simplified architecture: unify batch & streaming; early data availability for analytics
  - Output: reporting, machine learning, alerting, dashboards

- 16. ETL: AWS cost and usage
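In the talk this ETL runs on Spark and lands in Delta. Below is a minimal plain-Python sketch of the core transformation: summing unblended cost per usage day and product from Cost and Usage Report CSV text. It assumes the legacy CSV column names `lineItem/UsageStartDate`, `lineItem/ProductCode`, and `lineItem/UnblendedCost`. The function name and sample rows are made up. In Spark the same step would be a `groupBy` over the CSV DataFrame.

```python
import csv
import io
from collections import defaultdict

# Assumed column names from the legacy CSV Cost and Usage Report;
# check them against the header of your own report.
DATE_COL = "lineItem/UsageStartDate"
PRODUCT_COL = "lineItem/ProductCode"
COST_COL = "lineItem/UnblendedCost"


def daily_cost_by_product(csv_text):
    """Sum unblended cost per (usage day, product code)."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Usage start is an ISO 8601 timestamp such as 2018-05-01T00:00:00Z;
        # its first 10 characters are the calendar day.
        day = row[DATE_COL][:10]
        cost = float(row[COST_COL] or 0.0)  # treat empty cost cells as zero
        totals[(day, row[PRODUCT_COL])] += cost
    return dict(totals)


# Made-up sample rows for illustration.
sample = (
    "lineItem/UsageStartDate,lineItem/ProductCode,lineItem/UnblendedCost\n"
    "2018-05-01T00:00:00Z,AmazonEC2,1.5\n"
    "2018-05-01T01:00:00Z,AmazonEC2,2.0\n"
    "2018-05-01T00:00:00Z,AmazonS3,0.25\n"
)
print(daily_cost_by_product(sample))
# {('2018-05-01', 'AmazonEC2'): 3.5, ('2018-05-01', 'AmazonS3'): 0.25}
```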

- 18. ETL: AWS S3 access logs
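Below is a minimal sketch of the parsing step for S3 server access logs. It assumes the standard space-delimited record format, where the timestamp is wrapped in `[...]` and the request URI, referrer, and user agent are double-quoted. Only the first 17 fields are mapped, and any newer trailing fields are ignored. `parse_access_log_line` and the field names are illustrative. They are not an AWS or Databricks API.

```python
import re

# A token is a [bracketed timestamp], a "quoted string", or a bare word.
_TOKEN = re.compile(r'\[[^\]]*\]|"[^"]*"|\S+')

# Leading fields of an S3 server access log record, in order.
FIELDS = [
    "bucket_owner", "bucket", "time", "remote_ip", "requester",
    "request_id", "operation", "key", "request_uri", "http_status",
    "error_code", "bytes_sent", "object_size", "total_time",
    "turn_around_time", "referer", "user_agent",
]
INT_FIELDS = ("http_status", "bytes_sent", "object_size", "total_time", "turn_around_time")


def parse_access_log_line(line):
    """Parse one access log record into a dict; '-' becomes None."""
    tokens = []
    for tok in _TOKEN.findall(line):
        if len(tok) >= 2 and (tok[0], tok[-1]) in (("[", "]"), ('"', '"')):
            tok = tok[1:-1]  # drop the surrounding brackets or quotes
        tokens.append(None if tok == "-" else tok)
    if len(tokens) < len(FIELDS):
        raise ValueError("unexpected log format: %r" % line[:80])
    record = dict(zip(FIELDS, tokens))  # extra trailing fields are ignored
    for name in INT_FIELDS:
        if record[name] is not None:
            record[name] = int(record[name])
    return record


# Made-up example record.
line = ('owner123 my-bucket [06/Feb/2019:00:00:38 +0000] 192.0.2.3 requester-1 '
        '3E57427F3EXAMPLE REST.GET.OBJECT images/spark.tar '
        '"GET /my-bucket/images/spark.tar HTTP/1.1" 200 - 1024 2048 7 5 "-" "aws-cli/1.16"')
record = parse_access_log_line(line)
print(record["bucket"], record["http_status"])  # my-bucket 200
```

Each parsed dict maps onto one row of the `s3_access_logs` table. The `bucket` column is the one used for Z-ordering and filtering.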

- 20. Manage Databricks Delta tables
  - Create table: `CREATE TABLE s3_access_logs USING delta LOCATION '$path'`
  - Optimize table: `OPTIMIZE s3_access_logs ZORDER BY bucket`
  - Query table: `SELECT * FROM s3_access_logs WHERE bucket = 'my-bucket'`
  - File layout & statistics: the Delta log records the min/max value of `bucket` for each file
    - File1: min = 'a', max = 'g'
    - File2: min = 'g', max = 'n'
    - File3: min = 'o', max = 'z'
    - 'my-bucket' falls only within File2's range, so the query reads File2 and skips File1 and File3
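Per-file min/max statistics are what make Z-ordering pay off. A filter on `bucket` can skip every file whose range excludes the value. The plain-Python sketch below mimics that pruning decision using the three files from the slide. `FileStats`, `build_stats`, and `files_to_scan` are hypothetical names. Delta itself reads these statistics from its transaction log.

```python
from dataclasses import dataclass


@dataclass
class FileStats:
    """Per-file column statistics, similar in spirit to what the Delta log stores."""
    path: str
    min_value: str
    max_value: str


def build_stats(files):
    """files: mapping of file path -> list of values of the column in that file."""
    return [FileStats(path, min(values), max(values)) for path, values in files.items()]


def files_to_scan(stats, value):
    """Return only the files whose [min, max] range could contain `value`."""
    return [s.path for s in stats if s.min_value <= value <= s.max_value]


# The three files from the slide.
stats = [
    FileStats("File1", "a", "g"),
    FileStats("File2", "g", "n"),
    FileStats("File3", "o", "z"),
]
print(files_to_scan(stats, "my-bucket"))  # ['File2']
```

Clustering with `ZORDER BY` keeps these ranges narrow. If bucket values were scattered across files, each range would span most of the alphabet and almost nothing could be skipped.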

- 21. Attribution
  - Rule-based attribution
    - accounts
      - dedicated accounts for different teams / use cases
    - tagging
      - tag resources with budget groups
    - manual rules
      - avoid these as much as possible
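The three attribution rules can be sketched as an ordered lookup. The precedence is an assumption of this sketch: dedicated account first, then a resource tag, then manual rules, falling back to "unattributed". The tag key `budget-group`, the account IDs, and the rule in the example are all hypothetical.

```python
UNATTRIBUTED = "unattributed"


def attribute(item, account_groups, tag_key="budget-group", manual_rules=()):
    """Return the budget group for one cost line item.

    item: dict with "account_id", an optional "tags" dict, and any other fields.
    account_groups: accounts dedicated to a single team or use case.
    manual_rules: (predicate, group) pairs, tried in order as a last resort.
    """
    # 1. Dedicated accounts: every cost in the account belongs to one group.
    group = account_groups.get(item.get("account_id"))
    if group:
        return group
    # 2. Resource tags, for costs in shared accounts.
    group = (item.get("tags") or {}).get(tag_key)
    if group:
        return group
    # 3. Manual rules: keep this list as short as possible.
    for predicate, rule_group in manual_rules:
        if predicate(item):
            return rule_group
    return UNATTRIBUTED


accounts = {"111111111111": "data-platform"}  # hypothetical dedicated account
print(attribute({"account_id": "111111111111"}, accounts))  # data-platform
print(attribute({"account_id": "999999999999",
                 "tags": {"budget-group": "ml-research"}}, accounts))  # ml-research
```

The share of cost that ends up in "unattributed" is a useful measure of how much tagging work remains.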

- 22. The process problem
  - Prioritize
    - high data transfer cost
  - Optimize
    - reserved instance purchases
  - Monitor
    - predictions and alerts
  - Automate
    - auto-shutdown of unused resources

- 24. Story: high data transfer cost
  - Observation
    - cross-region data transfers are expensive
    - two buckets cost about $1k/day
  - Root cause
    - downloading Spark images across regions

- 26. Story: high data transfer cost
  - Actions
    - distribute images to multiple regions
    - monitor cross-region cost
  - Results
    - significantly reduced cost
    - faster cluster creation
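A cross-region cost monitor can be sketched as a filter over usage types followed by a daily threshold. The sketch assumes that inter-region transfer appears in the cost data with usage types shaped like `USW2-USE1-AWS-Out-Bytes`. Verify that pattern against your own reports. It also assumes line items have already been reduced to dicts with `day`, `resource_id`, `usage_type`, and `cost`. The bucket names and dollar amounts are made up.

```python
import re
from collections import defaultdict

# Assumption: inter-region transfer usage types look like
# "<source>-<destination>-AWS-Out-Bytes", e.g. "USW2-USE1-AWS-Out-Bytes".
_INTER_REGION = re.compile(r"^[A-Z0-9]+-[A-Z0-9]+-AWS-(In|Out)-Bytes$")


def cross_region_cost(line_items):
    """Sum cross-region transfer cost per (day, resource)."""
    totals = defaultdict(float)
    for item in line_items:
        if _INTER_REGION.match(item["usage_type"]):
            totals[(item["day"], item["resource_id"])] += item["cost"]
    return dict(totals)


def over_budget(totals, daily_limit):
    """(day, resource) pairs whose cross-region cost exceeds daily_limit."""
    return sorted(key for key, cost in totals.items() if cost > daily_limit)


# Made-up line items for illustration.
items = [
    {"day": "2018-05-01", "resource_id": "spark-images-a", "usage_type": "USW2-USE1-AWS-Out-Bytes", "cost": 400.0},
    {"day": "2018-05-01", "resource_id": "spark-images-a", "usage_type": "USW2-EUC1-AWS-Out-Bytes", "cost": 100.0},
    {"day": "2018-05-01", "resource_id": "spark-images-b", "usage_type": "USW2-DataTransfer-Out-Bytes", "cost": 50.0},
]
print(over_budget(cross_region_cost(items), daily_limit=300.0))
```

The third item is transfer out to the internet, not to another region, so the pattern excludes it.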

- 28. Optimization: reserved instances
  - Reserved instances (RI)
    - 1-year/3-year commitment in exchange for discounts
    - cons: risk of underutilized instances, upfront cost
    - pros: significant discounts, availability
  - Challenges
    - non-trivial to decide how many RIs to purchase
    - requires predicting future usage

- 29. Optimization: reserved instances
  - Assign budgets to teams
  - Provide a tool to compute the optimal number of RIs to buy
  - Define a process for RI purchase requests and approvals
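One way to frame "compute the optimal RIs to buy" is as cost minimization over hourly usage. Here past usage stands in for the forecast the previous slide calls for. This is an illustration under simplifying assumptions, not the tool described in the talk. It assumes a single instance type in one region and an effective hourly RI rate with the upfront fee amortized over the term. The prices are hypothetical.

```python
def total_cost(usage, k, ri_hourly, od_hourly):
    """Cost of a period given hourly instance counts and k reserved instances.

    ri_hourly: effective hourly RI rate (upfront fee amortized over the term),
    paid for every hour whether or not the reservation is used.
    od_hourly: on-demand rate, paid only for instances beyond the k reserved.
    """
    reserved = k * ri_hourly * len(usage)
    on_demand = od_hourly * sum(max(0, u - k) for u in usage)
    return reserved + on_demand


def optimal_ri_count(usage, ri_hourly, od_hourly):
    """Brute-force the number of RIs that minimizes total cost (ties: fewer RIs)."""
    return min(
        range(max(usage) + 1),
        key=lambda k: (total_cost(usage, k, ri_hourly, od_hourly), k),
    )


# Hypothetical hourly counts of running instances and hypothetical prices.
usage = [2, 2, 2, 2, 4, 4, 0, 0]
print(optimal_ri_count(usage, ri_hourly=0.6, od_hourly=1.0))  # 2
```

The marginal view gives the same answer. The (k+1)-th RI pays off only when the fraction of hours with more than k instances running exceeds `ri_hourly / od_hourly`. Here that fraction is 6/8 for k = 1 but only 2/8 for k = 2, against a ratio of 0.6.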

- 30. Monitor and alerts
  - Why
    - catch cost regressions
    - be proactive about “bill shock”
  - Challenges
    - different patterns for different use cases
    - changing baselines

- 31. Monitor and alerts
  - (Figure from Sharma, S., Swayne, D.A. & Obimbo, C. Energ. Ecol. Environ. (2016) 1: 123. https://doi.org/10.1007/s40974-016-0011-1)

- 32. Monitor and alerts
  - adaptive prediction with change point detection
  - alerts for each budget group
  - scheduled jobs and dashboards
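The deck combines change point detection with a basic linear model but does not spell out the algorithm. As a stand-in, the sketch below does three things:

- it finds a single change in mean with a simple penalized least-squares detector;
- it fits a least-squares line to the data after that change;
- it alerts when today's cost exceeds the forecast by a tolerance.

`penalty`, `tolerance`, and the function names are hypothetical. A real monitor would run this per budget group as a scheduled job.

```python
def _sse(xs):
    """Sum of squared deviations from the mean."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs)


def find_change_point(series, penalty, min_size=3):
    """Best single change-in-mean split by penalized least squares, or None."""
    best, best_cost = None, _sse(series)  # cost of "no change point"
    for i in range(min_size, len(series) - min_size + 1):
        cost = _sse(series[:i]) + _sse(series[i:]) + penalty
        if cost < best_cost:
            best, best_cost = i, cost
    return best


def linear_forecast(ys):
    """Fit y = a + b*x by least squares on x = 0..n-1 and predict x = n."""
    n = len(ys)
    if n < 2:
        return float(ys[0])
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    return y_mean + (sxy / sxx) * (n - x_mean)


def should_alert(history, today, penalty, tolerance=0.2):
    """Alert if today's cost exceeds the forecast by more than `tolerance`."""
    cp = find_change_point(history, penalty)
    baseline = history[cp:] if cp is not None else history
    return today > linear_forecast(baseline) * (1 + tolerance)


# Made-up daily costs with a level shift after day 10.
history = [100.0] * 10 + [200.0] * 10
print(find_change_point(history, penalty=1000.0))  # 10
print(should_alert(history, 260.0, penalty=1000.0))  # True
```

Fitting only the data after the last detected shift is what makes the baseline adaptive. A fit over the whole history would be skewed by the old level.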

- 33. Auto-shutdown with Custodian
  - https://github.com/capitalone/cloud-custodian
    - rule-based cloud infrastructure management tool
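Cloud Custodian is configured with YAML policies, each naming a resource type, a list of filters, and actions. The plain-Python sketch below only illustrates that rule-based idea and does not reproduce Custodian's API. It evaluates a "stop idle instances" policy in which `Instance`, `Policy`, `run_policy`, the 24-hour idle threshold, and the `keep-alive` tag are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Instance:
    instance_id: str
    tags: Dict[str, str]
    idle_hours: float
    state: str = "running"


@dataclass
class Policy:
    name: str
    filters: List[Callable[[Instance], bool]]  # all must match
    action: Callable[[Instance], None]


def stop(instance):
    """Stand-in for a real stop call against the cloud provider."""
    instance.state = "stopped"


def run_policy(policy, instances):
    """Apply the action to every instance matching all filters; return their ids."""
    matched = [i for i in instances if all(f(i) for f in policy.filters)]
    for inst in matched:
        policy.action(inst)
    return [i.instance_id for i in matched]


# Hypothetical policy: stop running instances idle for over 24 hours
# unless they carry a keep-alive=true tag.
idle_policy = Policy(
    name="stop-idle-instances",
    filters=[
        lambda i: i.state == "running",
        lambda i: i.idle_hours > 24,
        lambda i: i.tags.get("keep-alive") != "true",
    ],
    action=stop,
)

instances = [
    Instance("i-1", {}, 30.0),
    Instance("i-2", {"keep-alive": "true"}, 30.0),
    Instance("i-3", {}, 2.0),
]
print(run_policy(idle_policy, instances))  # ['i-1']
```

Keeping each rule a small, declarative filter is what makes such policies easy to review and to run on a schedule.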

- 37. Summary
  - In-house solutions, because of
    - flexibility and a deeper understanding of cost and usage
  - Cost attribution is a data problem
    - ETL: Databricks Delta for analytics-ready data
    - explore: Databricks Notebooks and BI tools via JDBC
    - attribute: rule-based; tagging is important
  - Cost control is a process problem
    - prioritize: get the work done!
    - optimize: distributed ownership and centralized tools
    - monitor: change points + a basic linear model
    - automate: Custodian for managing cloud infrastructure

- 39. Backup slides

- 40. New: Databricks Delta extends Apache Spark to simplify data reliability and performance (data reliability + fast analytics)

- 41. The data problem
  - cost and usage report
    - detailed usage and billing information, by the hour
    - CSV, delivered at least once a day
  - S3 inventory
    - list of all objects in S3 and their metadata
    - CSV/ORC, delivered daily/weekly

- 42. The data problem
  - S3 access logs
    - requests/API calls that access S3 objects
    - raw logs, delivered frequently
  - EC2/RDS snapshots and reserved instances
    - JSON from REST API