Cluj Big Data Meetup - Big Data in Practice

- 1. Big Data in Practice: The TrustYou Tech Stack ● Cluj Big Data Meetup, Nov 18th ● Steffen Wenz, CTO

- 2. Goals of today’s talk ● Relate first-hand experiences with a big data tech stack ● Introduce a few essential technologies beyond Hadoop: ○ Hortonworks HDP ○ Apache Pig ○ Luigi

- 4. Who are we? ● For each hotel on the planet, provide a summary of all reviews ● Expertise: ○ NLP ○ Machine Learning ○ Big Data ● Clients: …

- 6. TrustYou Tech Stack ● Batch layer (Hadoop cluster, 100 nodes): ○ Hadoop (HDP 2.1) ○ Python ○ Pig ○ Luigi ● Service layer (application machines): ○ PostgreSQL ○ MongoDB ○ Redis ○ Cassandra ● (Diagram: Hadoop cluster and application machines, connected by data and query flows)

- 7. Hadoop cluster (includes all live and development machines)

- 8. Python ♥ Big Data. Hadoop is Java-first, but: ● Hadoop Streaming: `cat input | ./map.py | sort | ./reduce.py > output` ○ MRJob, Luigi ○ VirtualEnv ● Pig: Python UDFs ● Real-time processing: PySpark, PyStorm ● Data processing: ○ NumPy, SciPy ○ Pandas ● NLP: ○ NLTK ● Machine learning: ○ Scikit-learn ○ Gensim (word2vec)
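Hadoop Streaming only asks the mapper and reducer to read lines on stdin and write tab-separated key/value lines to stdout. In the local pipeline above, `sort` plays the role of Hadoop's shuffle: it guarantees that all lines sharing a key reach the reducer back to back. A minimal word-count sketch of that contract, simulated in-process; `map_lines`, `reduce_lines` and `run_pipeline` are hypothetical names, and in a real streaming job the two stages would live in separate `map.py` and `reduce.py` scripts:

```python
from itertools import groupby
from typing import Iterable, Iterator


def map_lines(lines: Iterable[str]) -> Iterator[str]:
    """Mapper: emit one 'word<TAB>1' line per word (what map.py would print)."""
    for line in lines:
        for word in line.split():
            yield f"{word.lower()}\t1"


def reduce_lines(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Reducer: sum the counts per key; relies on the input being sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


def run_pipeline(lines: Iterable[str]) -> list[str]:
    """Simulate: cat input | ./map.py | sort | ./reduce.py"""
    return list(reduce_lines(sorted(map_lines(lines))))
```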

- 9. Use case: Semantic analysis ● “Nice room” ● “Room wasn’t so great” ● “The air-conditioning was so powerful that we were cold in the room even when it was off.” ● “อาหารรสชาติดี” (Thai: “The food tastes good”) ● “خدمة جيدة” (Arabic: “Good service”) ● 20 languages ● Linguistic system (morphology, taggers, grammars, parsers …) ● Hadoop: scale out CPU-bound work ● Python for ML & NLP libraries

- 11. Hortonworks Distribution ● Hortonworks Data Platform: Enterprise architecture out of the box ● Try out in VM: Hortonworks Sandbox ● Alternatives: Cloudera CDH, MapR ● (Image: TrustYou & Hortonworks @ BITKOM Big Data Summit)

- 12. Apache Pig ● Define & execute parallel data flows on Hadoop ○ Engine + Language (“Pig Latin”) + Shell (“Grunt”) ● “SQL of big data” (bad comparison; many differences) ● Goal: Make Pig Latin the native language of parallel data processing ● Native support for: Projection, filtering, sort, group, join

- 13. Why not just MapReduce? ● Projection SELECT a, b ... ● Filter WHERE ... ● Sort ● Distinct ● Group ● Join. Source: Hadoop: The Definitive Guide

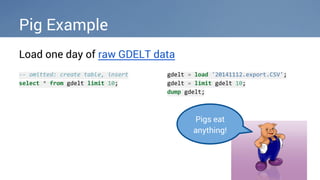

- 14. Pig Example: Load one day of raw GDELT data

```sql
-- omitted: create table, insert
select * from gdelt limit 10;
```

```pig
gdelt = load '20141112.export.CSV';
gdelt = limit gdelt 10;
dump gdelt;
```

Pigs eat anything!

- 15. Specifying a schema

```pig
gdelt = load '20141112.export.CSV' as (
    event_id: chararray,
    sql_date: chararray,
    month_year: chararray,
    year: chararray,
    fraction_date: chararray,
    actor1: chararray,
    actor1_name: chararray,
    -- ... 59 columns in total ...
    event: int,
    goldstein_scale: float,
    date_added: chararray,
    source_url: chararray
);
```
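Without a schema, Pig treats fields as untyped and addresses them by position (`$0`, `$1`, ...); with one, each field is cast to its declared type, and as far as I understand a value that fails to cast becomes null instead of aborting the job. A rough Python analogue of loading one tab-delimited row against a typed schema. The column list below is a hypothetical, heavily truncated stand-in for the real 59-column layout, and `load_row` is an illustrative helper, not Pig's loader:

```python
from typing import Callable, Optional

# Hypothetical, truncated schema: (column name, cast function).
# The real GDELT export has many more columns (59 per the slide).
SCHEMA: list[tuple[str, Callable[[str], object]]] = [
    ("event_id", str),
    ("sql_date", str),
    ("event", int),
    ("goldstein_scale", float),
]


def load_row(line: str, schema=SCHEMA) -> dict[str, Optional[object]]:
    """Split a tab-delimited line and cast each field according to the schema.

    Approximates Pig's lenient behaviour: missing trailing fields and
    failed casts become None (null) instead of raising.
    """
    fields = line.rstrip("\n").split("\t")
    row: dict[str, Optional[object]] = {}
    for i, (name, cast) in enumerate(schema):
        if i >= len(fields):
            row[name] = None  # short row: missing trailing field
            continue
        try:
            row[name] = cast(fields[i])
        except ValueError:
            row[name] = None  # failed cast becomes null
    return row
```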

- 16. Pig Example: Look at all non-empty actor countries

```sql
select actor1_country from gdelt where actor1_country != '';
```

```pig
-- where:
gdelt = filter gdelt by actor1_country != '';
-- select:
country = foreach gdelt generate actor1_country;
dump country;
```

- 17. Pig Example: Get histogram of actor countries

```sql
select actor1_country, count(*) from gdelt group by actor1_country;
```

```pig
gdelt_grp = group gdelt by actor1_country;
gdelt_cnt = foreach gdelt_grp generate group as country, COUNT(gdelt) as count;
dump gdelt_cnt;
```
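For intuition, the filter, projection and group/count steps of the last two examples correspond to plain Python over an in-memory list of rows. This sketch uses made-up sample rows and runs on a single machine; it only shows what each operator computes, not how Pig distributes the work:

```python
from collections import Counter

rows = [  # hypothetical sample rows; only the field used here is kept
    {"actor1_country": "USA"},
    {"actor1_country": ""},
    {"actor1_country": "RUS"},
    {"actor1_country": "USA"},
]

# gdelt = filter gdelt by actor1_country != '';
non_empty = [r for r in rows if r["actor1_country"] != ""]

# country = foreach gdelt generate actor1_country;   (projection)
countries = [r["actor1_country"] for r in non_empty]

# group gdelt by actor1_country; foreach ... generate group, COUNT(gdelt)
histogram = Counter(countries)
```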

- 18. Pig Example: Count total rows, count distinct `event` values

```sql
select count(*) from gdelt;
select count(distinct event) from gdelt;
```

```pig
gdelt_grp = group gdelt all;
gdelt_cnt = foreach gdelt_grp generate COUNT(gdelt);
dump gdelt_cnt;
-- 180793

event = foreach gdelt generate event;
event_dis = distinct event;
event_grp = group event_dis all;
event_cnt = foreach event_grp generate COUNT(event_dis);
dump event_cnt;
-- 215
```
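In Pig, counting a whole relation means grouping it first. `group ... all` is simply a group by a constant key, so the entire relation ends up in one bag whose key is the string `all` (consistent with the `group#66:chararray` field in the explain output on slide 21). A Python sketch of both counts; `group_by` is a hypothetical helper and the rows are made up:

```python
from collections import defaultdict


def group_by(rows, key):
    """Collect rows into bags keyed by key(row), like Pig's GROUP ... BY."""
    groups = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row)
    return dict(groups)


rows = [{"event": 42}, {"event": 190}, {"event": 42}]  # hypothetical sample

# group gdelt all: every row lands in a single bag under the constant key 'all'
gdelt_grp = group_by(rows, key=lambda row: "all")
gdelt_cnt = [len(bag) for bag in gdelt_grp.values()]  # COUNT(gdelt) per group

# foreach ... generate event; distinct; group all; COUNT
event_dis = set(row["event"] for row in rows)
event_cnt = len(event_dis)
```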

- 19. Things you can’t do in Pig

```pig
i = 2;
```

Top-level variables are bags (sort of like tables).

```pig
if (x#a == 2) dump xs;
```

None of the usual control structures. You define data flows.

For everything else: UDFs (user-defined functions), i.e. custom operators implemented in Java or Python. Also: directly call Java static methods.
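A Python UDF is an ordinary function; Pig's Jython integration reads its return type from an `outputSchema` decorator. The sketch below assumes the file is registered with `REGISTER 'country_udfs.py' USING jython AS country_udfs;` (file name, alias and function are hypothetical). It sticks to Python 2 syntax because Jython runs it, and it falls back to a no-op decorator so the same file can be unit-tested in plain CPython:

```python
# country_udfs.py: hypothetical Pig UDF module (Jython-compatible syntax).
try:
    outputSchema  # expected to be provided by Pig's Jython script engine
except NameError:
    def outputSchema(schema):
        """No-op stand-in so the module can be tested outside Pig."""
        def decorator(func):
            return func
        return decorator


@outputSchema("sign:int")
def goldstein_sign(score):
    """Return -1, 0 or 1 for a Goldstein score; Pig nulls arrive as None."""
    if score is None:
        return None
    if score > 0:
        return 1
    if score < 0:
        return -1
    return 0
```

From Pig, the function would then be called like a built-in, e.g. `foreach gdelt generate country_udfs.goldstein_sign(goldstein_scale);`.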

- 20. Cool, but where’s the parallelism?

```pig
event = foreach gdelt generate event;                    -- map
event_dis = distinct event parallel 50;                  -- reduce!
event_grp = group event_dis all parallel 50;             -- reduce!
event_cnt = foreach event_grp generate COUNT(event_dis); -- map
dump event_cnt;
```
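The `parallel 50` clause sets the number of reduce tasks. `distinct` can be spread across reducers because every row is routed to a reducer by hashing its key, so duplicates always meet in the same reducer and no cross-reducer coordination is needed. `group ... all`, by contrast, has a single key, so all of its rows end up in one reducer whatever the `parallel` setting. A toy in-memory simulation of that routing; `partition` and `parallel_distinct_count` are illustrative names, not Pig internals:

```python
from collections import defaultdict
from typing import Hashable, Iterable


def partition(values: Iterable[Hashable], n_reducers: int) -> dict[int, list]:
    """Route each value to a reducer by hash, like a MapReduce shuffle."""
    buckets: dict[int, list] = defaultdict(list)
    for v in values:
        buckets[hash(v) % n_reducers].append(v)
    return buckets


def parallel_distinct_count(values: Iterable[Hashable], n_reducers: int) -> int:
    """Each reducer deduplicates only its own bucket; buckets never share a key,
    so summing the per-reducer counts gives the global distinct count."""
    return sum(len(set(bucket)) for bucket in partition(values, n_reducers).values())
```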

- 21. Pig’s execution engine

```
$ pig -x local -e "explain -script gdelt.pig"
#-----------------------------------------------
# New Logical Plan:
#-----------------------------------------------
event_cnt: (Name: LOStore Schema: #131:long)
ColumnPrune:InputUids=[69]ColumnPrune:OutputUids=[69]
|
|---event_cnt: (Name: LOForEach Schema: #131:long)
| |
| (Name: LOGenerate[false] Schema: #131:long)
| | |
| | (Name: UserFunc(org.apache.pig.builtin.COUNT) Type: long Uid: 131)
| | |
| | |---event_dis:(Name: Project Type: bag Uid: 67 Input: 0 Column: (*))
| |
| |---event_dis: (Name: LOInnerLoad[1] Schema: event#27:int)
|
|---event_grp: (Name: LOCogroup Schema: group#66:chararray,event_dis#67:bag{#129:tuple(event#27:int)})

$ pig -x local -e "explain -script gdelt.pig -dot -out gdelt.dot"
$ dot -Tpng gdelt.dot > gdelt.png
```

- 22. Pig advanced: Asymmetric country relations

```pig
-- we're only interested in countries
gdelt = filter (
    foreach gdelt generate actor1_country, actor2_country, goldstein_scale
) by actor1_country != '' and actor2_country != '';

gdelt_grp = group gdelt by (actor1_country, actor2_country);

-- it's not necessary to aggregate twice - except that Pig doesn't allow self joins
gold_1 = foreach gdelt_grp generate
    group.actor1_country as actor1_country,
    group.actor2_country as actor2_country,
    SUM(gdelt.goldstein_scale) as goldstein_scale;
gold_2 = foreach gdelt_grp generate
    group.actor1_country as actor1_country,
    group.actor2_country as actor2_country,
    SUM(gdelt.goldstein_scale) as goldstein_scale;

-- join both sums together, to get the Goldstein values for both directions in one row
gold = join gold_1 by (actor1_country, actor2_country), gold_2 by (actor2_country, actor1_country);
```

- 23. Pig advanced: Asymmetric country relations

```pig
-- compute the difference in Goldstein score
gold = foreach gold generate
    gold_1::actor1_country as actor1_country,
    gold_1::actor2_country as actor2_country,
    gold_1::goldstein_scale as gold_1,
    gold_2::goldstein_scale as gold_2,
    ABS(gold_1::goldstein_scale - gold_2::goldstein_scale) as diff;

-- keep only the values where one direction is positive, the other negative
-- also, keep only one of the two mirrored rows (A,B) and (B,A) per country pair
gold = filter gold by gold_1 * gold_2 < 0 and actor1_country < actor2_country;
gold = order gold by diff desc;
dump gold;
```
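The same computation on a handful of made-up events, as a single-machine sanity check of the logic rather than a model of how Pig executes it: sum the Goldstein scores per directed pair, look up the reverse direction (the join), keep pairs whose two directions disagree in sign, keep one orientation per pair, and sort by the absolute difference. `asymmetric_relations` is a hypothetical name:

```python
from collections import defaultdict


def asymmetric_relations(events):
    """events: iterable of (actor1_country, actor2_country, goldstein_scale)."""
    # filter out empty countries, group by (actor1, actor2), SUM(goldstein_scale)
    sums = defaultdict(float)
    for a1, a2, score in events:
        if a1 and a2:
            sums[(a1, a2)] += score

    rows = []
    for (a1, a2), g1 in sums.items():
        g2 = sums.get((a2, a1))  # the "join" with the reversed direction
        if g2 is None:
            continue  # inner join: no reverse direction, no row
        # opposite signs only, and a single orientation of each pair
        if g1 * g2 < 0 and a1 < a2:
            rows.append((a1, a2, g1, g2, abs(g1 - g2)))

    # order gold by diff desc
    return sorted(rows, key=lambda r: r[4], reverse=True)
```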

- 24. Pig advanced: Asymmetric country relations

Columns: actor1_country, actor2_country, gold_1, gold_2, diff

```
(PSE,USA,93.49999961256981,-76.30000001192093,169.79999962449074)
(NGA,USA,15.900000423192978,-143.5999995470047,159.49999997019768)
(ISR,JOR,143.89999967813492,-12.700000494718552,156.60000017285347)
(IRN,SYR,103.50000095367432,-50.50000023841858,154.0000011920929)
(IRN,ISR,16.60000056028366,-112.40000087022781,129.00000143051147)
(GBR,RUS,73.09999999403954,-41.99999952316284,115.09999951720238)
(EGY,SYR,-87.60000020265579,12.0,99.60000020265579)
(USA,YEM,-78.30000007152557,15.700000047683716,94.00000011920929)
(ISR,TUR,2.4000001549720764,-90.60000002384186,93.00000017881393)
(MYS,UKR,35.10000038146973,-52.0,87.10000038146973)
(GRC,TUR,-47.60000029206276,36.5,84.10000029206276)
(HTI,USA,34.99999976158142,-45.40000009536743,80.39999985694885)
```

- 25. Apache Pig @ TrustYou ● Before: ○ Usage of Unix utilities (sort, cut, awk etc.) and custom tools (map_filter.py, reduce_agg.py) to transform data with Hadoop Streaming ● Now: ○ Data loading & transformation expressed in Pig ○ PigUnit for testing ○ Core algorithms still implemented in Python

- 26. Further Reading on Pig ● O’Reilly book (free online version) ● Code samples on the TrustYou GitHub account: https://github.com/trustyou/meetups/tree/master/big-data

- 27. Luigi ● Build complex pipelines of batch jobs ○ Dependency resolution ○ Parallelism ○ Resume failed jobs ● Pythonic replacement for Apache Oozie ● Not a replacement for Pig, Cascading, Hive

- 28. Anatomy of a Luigi task

```python
import luigi


class MyTask(luigi.Task):
    # Parameters which control the behavior of the task.
    # Same parameters = the task only needs to run once!
    param1 = luigi.Parameter()

    # These dependencies need to be done before this task can start.
    # Can also be a list or dict.
    def requires(self):
        return DependentTask(self.param1)  # another luigi.Task, defined elsewhere

    # Path to output file (local or HDFS). If this file is present,
    # Luigi considers this task to be done.
    def output(self):
        return luigi.LocalTarget("data/my_task_output_{}".format(self.param1))

    def run(self):
        # To make task execution atomic, Luigi writes all output to a temporary
        # file, and only renames it when you close the target.
        with self.output().open("w") as out:
            out.write("foo")
```
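The comment in `run()` describes a write-then-rename pattern: output goes to a temporary file and only appears under its final name once the target is closed, so a crashed task never leaves a half-written file that would wrongly mark it as done. A stdlib-only sketch of the idea; `atomic_write` is a hypothetical helper, not Luigi's own implementation:

```python
import os
import tempfile
from contextlib import contextmanager


@contextmanager
def atomic_write(path: str):
    """Write to a temp file in the same directory; rename into place on success."""
    directory = os.path.dirname(path) or "."
    os.makedirs(directory, exist_ok=True)
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "w") as tmp:
            yield tmp
        os.replace(tmp_path, path)  # atomic on POSIX within one filesystem
    except BaseException:
        os.remove(tmp_path)  # discard partial output; `path` is never created
        raise
```

Keeping the temporary file in the target's own directory matters: a rename is only atomic when source and destination are on the same filesystem.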

- 29. Luigi tasks vs. Makefiles

```python
class MyTask(luigi.Task):
    def requires(self):
        return DependentTask()

    def output(self):
        return luigi.LocalTarget("data/my_task_output")

    def run(self):
        with self.output().open("w") as out:
            out.write("foo")
```

```make
data/my_task_output: DependentTask
	run
	run
	run
	...
```
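Like `make`, Luigi decides what to run by checking outputs: a task whose output already exists is skipped, otherwise its requirements are resolved first. A toy, single-process re-implementation of just that rule; `Task`, `build`, `Fetch`, `Count` and the in-memory `store` dict are all hypothetical, and real Luigi adds a central scheduler, workers, parameters and file-based targets:

```python
class Task:
    """Minimal task: subclasses define requires(), output() and run()."""

    def requires(self):
        return []

    def output(self) -> str:
        raise NotImplementedError

    def run(self, store: dict) -> None:
        raise NotImplementedError


def build(task: Task, store: dict, log: list) -> None:
    """Run `task` after its dependencies, skipping anything already complete."""
    if task.output() in store:  # output present => task counts as done
        return
    for dep in task.requires():
        build(dep, store, log)
    task.run(store)
    log.append(type(task).__name__)


class Fetch(Task):
    def output(self):
        return "raw"

    def run(self, store):
        store["raw"] = "a b c"


class Count(Task):
    def requires(self):
        return [Fetch()]

    def output(self):
        return "count"

    def run(self, store):
        store["count"] = len(store["raw"].split())
```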

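- 30. Luigi Hadoop integration

```python
# The slide used luigi.hadoop; current Luigi releases ship it as luigi.contrib.
import luigi.contrib.hadoop
import luigi.contrib.hdfs


class HadoopTask(luigi.contrib.hadoop.JobTask):
    def output(self):
        return luigi.contrib.hdfs.HdfsTarget("output_in_hdfs")

    def requires(self):
        # SomeTask / SomeOtherTask: other Luigi tasks, defined elsewhere
        return {
            "some_task": SomeTask(),
            "some_other_task": SomeOtherTask(),
        }

    def mapper(self, line):
        key, value = line.rstrip().split("\t")
        yield key, value

    def reducer(self, key, values):
        yield key, ", ".join(values)
```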
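To reason about what `mapper` and `reducer` produce without a cluster, one can reproduce Hadoop's contract in memory: apply the mapper to every input line, sort the emitted pairs by key (the shuffle), and call the reducer once per key with that key's values. A sketch using the slide's mapper/reducer logic as plain functions; `run_local` is a hypothetical helper, not part of Luigi:

```python
from itertools import groupby
from operator import itemgetter


def mapper(line):
    key, value = line.rstrip().split("\t")
    yield key, value


def reducer(key, values):
    yield key, ", ".join(values)


def run_local(lines, mapper, reducer):
    """Map every line, sort by key (the shuffle), reduce once per key."""
    pairs = [kv for line in lines for kv in mapper(line)]
    # Stable sort keeps input order of values within a key; real Hadoop
    # makes no such ordering guarantee.
    pairs.sort(key=itemgetter(0))
    out = []
    for key, group in groupby(pairs, key=itemgetter(0)):
        out.extend(reducer(key, (v for _, v in group)))
    return out
```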

- 31. Luigi example: Crawl a URL, then extract all links from it! (Diagram: CrawlTask(url) → ExtractTask(url))

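- 32. Luigi example: CrawlTask

```python
import hashlib
import os

import luigi
import requests


class CrawlTask(luigi.Task):
    url = luigi.Parameter()

    def output(self):
        # One output file per URL, named after the URL's MD5 hash
        url_hash = hashlib.md5(self.url.encode("utf-8")).hexdigest()
        return luigi.LocalTarget(os.path.join("data", "crawl_" + url_hash))

    def run(self):
        req = requests.get(self.url)
        req.raise_for_status()  # fail the task instead of saving an error page
        with self.output().open("w") as out:
            out.write(req.text)
```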

- 33. Luigi example: ExtractTask

```python
import hashlib
import os

import bs4
import luigi


class ExtractTask(luigi.Task):
    url = luigi.Parameter()

    def requires(self):
        return CrawlTask(self.url)  # CrawlTask from the previous slide

    def output(self):
        url_hash = hashlib.md5(self.url.encode("utf-8")).hexdigest()
        return luigi.LocalTarget(os.path.join("data", "extract_" + url_hash))

    def run(self):
        with self.input().open() as f:
            soup = bs4.BeautifulSoup(f.read(), "html.parser")
        with self.output().open("w") as out:
            for link in soup.find_all("a"):
                out.write(str(link.get("href")) + "\n")
```
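If BeautifulSoup is not available, the link-extraction step can be sketched with the standard library's `html.parser` instead. This is a swapped-in technique, not what the slide uses, and `LinkCollector` / `extract_links` are hypothetical names. Like `link.get("href")` above, it yields `None` for anchors without an `href`:

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href attribute of every <a> tag, in document order."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag and attribute names; attrs is a list
        # of (name, value) pairs
        if tag == "a":
            self.links.append(dict(attrs).get("href"))


def extract_links(html: str) -> list:
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links
```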

- 34. Luigi example: Running it locally

```
$ python luigi_demo.py --local-scheduler ExtractTask --url http://www.trustyou.com
DEBUG: Checking if ExtractTask(url=http://www.trustyou.com) is complete
INFO: Scheduled ExtractTask(url=http://www.trustyou.com) (PENDING)
DEBUG: Checking if CrawlTask(url=http://www.trustyou.com) is complete
INFO: Scheduled CrawlTask(url=http://www.trustyou.com) (PENDING)
INFO: Done scheduling tasks
INFO: Running Worker with 1 processes
DEBUG: Asking scheduler for work...
DEBUG: Pending tasks: 2
INFO: [pid 2279] Worker Worker(salt=083397955, host=steffen-thinkpad, username=steffen, pid=2279) running CrawlTask(url=http://www.trustyou.com)
INFO: [pid 2279] Worker Worker(salt=083397955, host=steffen-thinkpad, username=steffen, pid=2279) done CrawlTask(url=http://www.trustyou.com)
DEBUG: 1 running tasks, waiting for next task to finish
DEBUG: Asking scheduler for work...
DEBUG: Pending tasks: 1
INFO: [pid 2279] Worker Worker(salt=083397955, host=steffen-thinkpad, username=steffen, pid=2279) running ExtractTask(url=http://www.trustyou.com)
INFO: [pid 2279] Worker Worker(salt=083397955, host=steffen-thinkpad, username=steffen, pid=2279) done ExtractTask(url=http://www.trustyou.com)
DEBUG: 1 running tasks, waiting for next task to finish
DEBUG: Asking scheduler for work...
```

- 35. Luigi @ TrustYou ● Before: ○ Bash scripts + cron ○ Manual cleanup after failures due to network issues etc. ● Now: ○ Complex nested Luigi job graphs ○ Failed jobs usually repair themselves

- 36. TrustYou wants you! We offer positions in Cluj & Munich: ● Data engineer ● Application developer ● Crawling engineer. Write me an email, check out our website, or see you at the next meetup!

- 37. Backup
