Comparative Analysis of Text Summarization Techniques

- 1. Comparative Analysis of Different Text Summarization Techniques UNDER THE GUIDANCE OF PRESENTED BY Dr. B. LAVANYA,ME, U.RAMESH(20331A04H4) ASSOCIATE PROFESSOR, Y.SOWJANYA(213315A0419) DEPARTMENT OF ECE SK.MEERA VALI(20331A04G3) Ph.D

- 2. CONTENTS 1. ABSTRACT 2. LITERATURE REVIEW 3. WHY TEXT SUMMARIZATION 4. EXTRACTIVE SUMMARIZATION METHODS 5. ABSTRACTIVE SUMMARIZATION METHODS 6. DATASET AND EVALUATION METRICS 7. BART MODEL 8. RESULTS 9. REFERENCES

- 3. ABSTRACT Text Summarization is the process of generating a concise and meaningful summary of a text. To better help identify relevant information and consume relevant information faster, automatic text summarization methods are needed to address the growing amount of text data available online. Text Summarization techniques are classified into Abstractive, Extractive and Hybrid summarization. Extractive text summarization techniques select entire sentences from documents according to some criteria to form a summary. Abstractive Text Summarization is the task of generating a short and concise summary that captures the salient ideas of the source text. The generated summaries potentially contain new phrases and sentences that may not appear in the source text. In this project, we review and compare the performance of few summarization techniques. The study of various text summarization methods helps in understanding the considerations, applicability, and accuracy of these methods and suggest the need for an improved text summarization model.

- 4. Why Text Summarization ? If you are looking for specific information from an online news article, you may have to dig through its content and spend a lot of time weeding out the unnecessary stuff before getting the information you want. Everyone in today's technology-driven society has to produce some kind of Document online, whether it's a presentation, documentation, or even email. We often see that students need to produce large pdf files in front of their universities or colleges. During the period of Revision before exams for students the skimmed and highlight points are important for content based approach of the subject. Researchers may want to distill the main findings and contributions of academic papers. This can be especially needed when conducting literature reviews. In healthcare it is easy if extract essential information from patient records, for healthcare professionals to review patient histories.

- 5. Need of Text Summarization To comfort the above situations we require a solution for all of them The objective of this project is to develop a text summarization algorithm that can produce coherent and concise summaries while preserving the most important information. So, for solving the above problems we need an approach where highlight of the documents, newspapers, textbooks, research papers will be there and rest of the chunks will get eliminated. Only the important points or sentences will be present. There are three types of techniques, they are a. Extractive Summarization b. Abstractive Summarization

- 6. LITERATURE SURVEY Author Year Techniques/Methods Outcomes Kasarapu Ramani 2023 TextRank, LexRank, Latent Semantic Analysis (LSA) TextRank Algorithm gives a better result than other two algorithms Prof. Kavyashree 2023 Natural language processing (NLP) and Deep Learning(DL) Customized algorithm using NLP and DL to achieve a more accurate summary. Chetana Varagantham 2022 Text summarization using NLP The Project has carried its purpose thereby decreasing the input textual data to a more compact reduced summarized result Kamal Deep Garg 2021 Fine-Tuned PEGASUS: Exploring the Performance of the Transformer-Based Model on a Diverse Text Summarization Datase These methods are efficient for automatic evaluation of single document summary. Dipti Bartakke 2020 Text Summarization and Dimensionality Reduction using Learning Approach To distinguish sentences including important information, a set of suitable highlights are extracted from the text. The result shows the proposed system is more efficient and reliable as compared to the existing system. Ming Zhong 2020 Extractive Summarization as Text Matching Experimental results show MATCHSUM outperforms the current state-of-the-art extractive model , which demonstrates the effectiveness of our method Mike Lewis 2019 ART: Denoising Sequence-to-Sequence Pre- training for Natural Language Generation, Translation, and Comprehension Enhancing the summary generation procedure to significantly reduce redundancy in the generated summary.

- 7. Anish Jadhav 2019 Text Summarization using Neural Networks Using Machine Learning and Deep Learning algorithms NLP can understand human language and analyze the language. J.N.Madhuri 2019 Sentence Ranking It enables the extraction of key information while discarding redundant or less relevant content. Sheikh Abujar 2019 An Approach for Bengali Text Summarization using Word2Vector Word2vec is important when working with text such as text summarization. It keeps all similar word in a numeric value which helps when LSTM cell working with important or non-important value. Santosh Kumar Bharti 2017 Text mining Approach Text mining is the process of extracting large quantities of text to derive high-quality information Narendra Andhale 2016 Various Methods Discussed for both Extractive and Abstractive Summarization The reviewed literature opens up the challenging area for hybridization of these methods to produce informative, well compressed and readable summaries.

- 8. PROBLEM STATEMENT As lot of information available and it takes lot of and efforts for getting a precise information from the sources in this project we come with effectively summarized information (data)

- 9. The general architecture of an Text Summarization system Preprocessing in text summarization techniques refers to a set of preliminary steps and tasks that are performed on the input text before the actual summarization process begins. The purpose of preprocessing is to clean and prepare the text data. Some common examples are: Spell checking and correction, part-of-speech tagging, stop word removal, text cleaning, tokenization. Feature weight extraction is typically refers to the process of determining the importance or significance of various features within the text when generating a summary. These features can include words, phrases, sentences, or other textual elements. Sentence weighing and classification in text summarization refer to the techniques and processes used to assess the importance or relevance of individual sentences in the source text and classify them as either included or excluded in the summary.

- 10. EXTRACTIVE SUMMARIZATION Extractive summarization is a technique that involves selecting the most relevant sentences from a text document and presenting them in a shortened version. The advantage of this technique is that it produces a summary that is accurate and faithful to the original text. One example of extractive summarization is the use of bullet points to summarize a long report. Common techniques used in extractive summarization: TF-IDF, TextRank Algorithm,

- 11. EXTRACTIVE METHODOLIGIES TF-IDF (Term Frequency-Inverse Document Frequency): TF-IDF calculates the importance of each word in a document relative to its frequency in the entire corpus. Sentences containing important words (based on TF-IDF scores) are selected for the summary. Sentence Scoring with Machine Learning Models: Supervised learning models can be trained to assign scores to sentences based on various features such as word frequency, sentence length, position, and more. Examples of models include Support Vector Machines (SVM), Random Forest, or neural networks. Fig: Sentence Scoring Approach for Extractive summarization

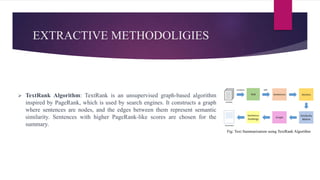

- 12. EXTRACTIVE METHODOLIGIES TextRank Algorithm: TextRank is an unsupervised graph-based algorithm inspired by PageRank, which is used by search engines. It constructs a graph where sentences are nodes, and the edges between them represent semantic similarity. Sentences with higher PageRank-like scores are chosen for the summary. Fig: Text Summarization using TextRank Algorithm

- 13. ABSTRACTIVE SUMMARIZATION Abstractive summarization is a technique that involves generating a summary of a text by understanding its meaning and producing new sentences that convey the same information. The advantages of abstractive summarization include the ability to generate more concise and fluent summaries that better capture the main ideas of the text. Common techniques used in abstractive summarization: PEGASUS, Bidirectional and Auto-Regressive Transformers (BART),T5

- 14. ABSTRACTIVE TECHNIQUES PEGASUS: It is a transformer-based model designed for abstractive text summarization. It was introduced by researchers at Google in the paper titled "PEGASUS: Pre-training with Extracted Gap-sentences for Abstractive Summarization." The model is pre-trained using a denoising autoencoder objective, where it learns to reconstruct original documents from documents with randomly masked sentences. BART: It which stands for "Bidirectional and Auto-Regressive Transformers," is a transformer-based model designed for sequence-to-sequence tasks, particularly for tasks like text summarization and text generation. BART was introduced by Facebook Fig: PEGASUS Architecture Fig: BART Architecture

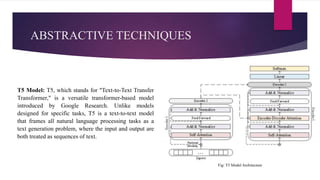

- 15. ABSTRACTIVE TECHNIQUES T5 Model: T5, which stands for "Text-to-Text Transfer Transformer," is a versatile transformer-based model introduced by Google Research. Unlike models designed for specific tasks, T5 is a text-to-text model that frames all natural language processing tasks as a text generation problem, where the input and output are both treated as sequences of text. Fig: T5 Model Architecture

- 16. CNN/Daily Mail Dataset CNN/Daily Mail is a dataset for text summarization. Human generated abstractive summary bullets were generated from news stories in CNN and Daily Mail websites as questions (with one of the entities hidden), and stories as the corresponding passages from which the system is expected to answer the fill-in the-blank question. The authors released the scripts that crawl, extract and generate pairs of passages and questions from these websites. The CNN/DailyMail dataset has 3 splits: train, validation, and test. Below are the statistics for Version 3.0.0 of the dataset. Data Split Number of Instance in Split Train 287,113 Validation 13,368 Test 11,490 Table 1: CNN/DailyMail Datset

- 17. SAM-SUM Dataset

- 18. Evaluation Metric ROUGE, or Recall-Oriented Understudy for Gisting Evaluation, is a set of metrics and a software package used for evaluating automatic summarization and machine translation software in natural language processing. The metrics compare an automatically produced summary or translation against a reference or a set of references (human- produced) summary or translation. ROUGE metrics range between 0 and 1, with higher scores indicating higher similarity between the automatically produced summary and the reference. The following three evaluation metrics are used. • ROUGE-N: Overlap of n-grams between the system and reference summaries. o ROUGE-1 refers to the overlap of unigrams (each word) between the system and reference summaries. o ROUGE-2 refers to the overlap of bigrams between the system and reference summaries. • ROUGE-L: Longest Common Subsequence (LCS) based statistics. Longest common subsequence problem takes into account sentence-level structure similarity naturally and identifies longest co-occurring in sequence n-grams automatically.

- 19. BLEU SCORE BLEU (bilingual evaluation understudy) is an algorithm for evaluating the quality of text which has been machine- translated from one natural language to another. Quality is considered to be the correspondence between a machine's output and that of a human: "the closer a machine translation is to a professional human translation, the better it is" – this is the central idea behind BLEU. Invented at IBM in 2001, BLEU was one of the first to claim a high correlation with human judgements of quality, and remains one of the most popular automated and inexpensive metrics. Scores are calculated for individual translated segments—generally sentences—by comparing them with a set of good quality reference translations. Those scores are then averaged over the whole corpus to reach an estimate of the translation's overall quality. Intelligibility or grammatical correctness are not taken into account. BLEU's output is always a number between 0 and 1. This value indicates how similar the candidate text is to the reference texts, with values closer to 1 representing more similar texts. Few human translations will attain a score of 1, since this would indicate that the candidate is identical to one of the reference translations. For this reason, it is not necessary to attain a score of 1. Because there are more opportunities to match, adding additional reference translations will increase the BLEU score.

- 20. Bidirectional and Auto-Regressive Transformers (BART) BART is the model developed by Facebook. It is sort of a combination of Google’s BERT and OpenAI’s GPT. BERT’s bidirectional and autoencoder nature helps in downstream tasks that require information about the whole input sequence. But it is not good for sequence generation tasks. GPT models are good at text generation but not good at downstream tasks that require knowledge of whole sequence. This is due to its unidirectional and autoregressive nature. BART combines the approaches of both models and thus is the best of both worlds. However the metric values can be increased with the help of fine tuning BART Model thereby increasing the familiarity between Ground truth summary and Generated Summary. Fig: BART architecture

- 21. What is Fine tuning BART Model ? Fine-tuning a BART (Bidirectional and Auto-Regressive Transformers) model for text summarization involves adjusting the pre-trained BART model on a specific summarization dataset to improve its performance on that task. This process allows the model to adapt its knowledge from a general context to a more specific one, improving its ability to generate summaries that are coherent, concise, and relevant to the input text. Fine Tuning involves major steps as follow: 1.Loading Datasets 2.Loaading and Tokenizing data 3.Load Model 4.Train pre-trained Model on data and evaluating 5.Testing the fine tuned model 6.Evaluating the metric values of fine tuned model

- 22. METHOD ROUGE-1 Score ROUGE-2 Score ROUGE-L Score PEGASUS Precision: 0.311367 Recall: 0.32856 F Score: 0.318262 Precision: 0.142305 Recall: 0.164585 F Score: 0.151692 Precision: 0.301453 Recall: 0.317272 F Score: 0.307743 T5(Text-To-Text Transfer Transformer) Precision: 0.44129 Recall: 0.24195 F Score: 0.31254 Precision: 0.21212 Recall: 0.11480 F Score: 0.14890 Precision: 0.3529 Recall: 0.1935 F Score: 0.25000 BART(OPENLLMs) Precision: 0.41176 Recall: 0.24561 F Score: 0.30769 Precision: 0.21212 Recall: 0.12500 F Score: 0.15730 Precision: 0.35294 Recall: 0.21052 F Score: 0.26373 Recurrent Neural Network Precision: 0.34184 Recall: 0.23568 F Score: 0. 29869 Precision: 0.19853 Recall: 0.11587 F Score: 0.14826 Precision: 0.32594 Recall: 0.14561 F Score: 0.20743 RESULTS BEFORE FINE TUNING THE MODEL Table 1: Evaluation Metric Values for Different Summarization Methods on CNN/Daily Mail dataset(before fine tuning BART Model) TF-IDF Precision: 0.22105 Recall: 0.39623 F Score: 0.28378 Precision: 0.02128 Recall: 0.03846 F Score: 0.02740 Precision: 0.09474 Recall: 0.16981 F Score: 0.12162 LSA Precision: 0.28947 Recall: 0.41509 F Score: 0.34109 Precision: 0.02667 Recall: 0.03846 F Score: 0.03150 Precision: 0.22105 Recall: 0.18868 F Score: 0.15504 TextRank Precision: 0.23404 Recall: 0.41509 F Score: 0.29932 Precision: 0.06452 Recall: 0.11538 F Score: 0.29932 Precision: 0.09574 Recall: 0.16981 F Score: 0.12245 Transformers Precision: 0.43400 Recall: 0.38333 F Score: 0.5000 Precision: 0.61672 Recall: 0.24561 F Score: 0.30769 Precision: 0.41176 Recall: 0.24561 F Score: 0.30769 Evaluation Metrics for Abstractive Summarization Evaluation Metrics for Extractive Summarization

- 23. RESULTS AFTER FINE TUNING THE BART MODEL Extractive Model R1 R2 RL RLSum B1 B2 SB LexRank 0.3333 0.1282 0.3333 0.2627 0.2267 0.1335 0.0803 TextRank 0.2340 0.2150 0.2993 0.2828 0.2267 0.1426 0.0880 Position Rank 0.3272 0.1851 0.2545 0.2179 0.2179 0.1407 0.1092 Transformers 0.3541 0.2340 0.2916 0.2932 0.2615 0.1691 0.1314 Topic Rank 0.3235 0.1463 0.3823 0.2578 0.2489 0.1458 0.0986 TF-IDF 0.2210 0.02128 0.0947 0.1332 0.2727 0.2292 0.1939 LSA 0.2894 0.0266 0.1315 0.0926 0.1659 0.1360 0.1145 Abstractive PEGASUS 0.3113 0.1423 0.3014 0.2849 0.2307 0.1519 0.0821 mT5-multilingual 0.4412 0.2121 0.3014 0.3824 0.0214 0.0143 0.0043 PEGASUS-XSum 0.1000 0.0560 0.5000 0.0079 0.0689 0.0156 0.0101 BART-large-CNN 0.3250 0.1025 0.1999 0.3028 0.2173 0.1203 0.0709 Table 2: Experimental Evaluation Results on CNN/Dailly Mail(After fine tuning BART Model)

- 24. Result before fine tuning BART Model Evaluation Metric value for Bidirectional and Auto- Regressive Transformers (BART)

- 25. Result after fine tuning BART Model Evaluation Metric value for Bidirectional and Auto-Regressive Transformers (BART)

- 26. RESULT Original Article Ground Truth Summary Generated Summary Ever noticed how plane seats appear to be getting smaller and smaller? With increasing numbers of people taking to the skies, some experts are questioning if having such packed-out planes is putting passengers at risk. They say that the shrinking space on airplanes is not only uncomfortable - it's putting our health and safety in danger……… Experts question if packed out planes are putting passengers at risk . U.S consumer advisory group says minimum space must be stipulated. Safety tests conducted on planes with more leg room than airlines offer . U.S consumer advisory group set up by Department of Transportation said that while the government is happy to set standards for animals flying on planes, it doesn't stipulate a minimum amount of space for humans. Tests conducted by the FAA use planes with a 31-inch pitch, a standard which on some airlines has decreased. A drunk teenage boy had to be rescued by security after jumping into a lions' enclosure at a zoo in western India. Rahul Kumar, 17, clambered over the enclosure fence at the Kamla Nehru Zoological Park in Ahmedabad, and began running towards the animals, shouting he would 'kill them……… Drunk teenage boy climbed into lion enclosure at zoo in west India . Rahul Kumar, 17, ran towards animals shouting 'Today I kill a lion!' Fortunately, he fell into a moat before reaching lions and was rescued . Rahul Kumar, 17, climbed into the lions' enclosure at a zoo in Ahmedabad. He ran towards the animals shouting: 'Today I kill a lion or a lion kills me!' Fortunately, he fell into a moat and was rescued by zoo security staff. Dougie Freedman is on the verge of agreeing a new two- year deal to remain at Nottingham Forest. Freedman has stabilized Forest since he replaced cult hero Stuart Pearce and the club's owners are pleased with the job, he has done at the City Ground…….. Nottingham Forest is close to extending Dougie Freedman's contract . The Forest boss took over from former manager Stuart Pearce in February . Freedman has since led the club to ninth in the Championship . Dougie Freedman is on the verge of agreeing a new two- year deal at Nottingham Forest. Freedman has stabilized Forest since he replaced cult hero Stuart Pearce and the club's owners are pleased with his work. Table 3: Example summaries from the BART model on CNN/Daily Mail Dataset.

- 27. APPLICATIONS Text summarization has various applications in different fields, such as news, research, and social media. In the news industry, text summarization is used to provide a brief summary of articles, making it easier for readers to get an overview of the news without having to read the entire article. In research, text summarization is used to analyze large volumes of data and extract important information quickly. Social media platforms also use text summarization to generate summaries of long posts or articles shared on their platforms. Summarizing educational materials such as textbooks, research papers, or lecture notes to aid students in understanding complex topics. Summarizing legal contracts, case law, or other legal documents to assist lawyers and legal professionals in quickly reviewing and understanding their content.

- 28. WORK DONE TILL NOW Analyzed different Techniques such as Pegasus, Textrank, LSA, etc., on CNN/DAILYMAIL dataset and obtained ROUGE metric values. In those techniques it is observed that BART model is best suitable to our problem.

- 29. REFERENCES [1] Mike Lewis and Yinhan Liu ,” BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension” , Submitted on: 29 Oct 2019 , DOI: https://doi.org/10.48550/arXiv:1910.13461v1 [cs.CL] [2] M. Jain and H. Rastogi, "Automatic Text Summarization using Soft-Cosine Similarity and Centrality Measures," 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 2020, pp. 1021-1028, DOI: 10.1109/ICECA49313.2020.9297583. [3] Saiela Bilal and Pravneet Kaur, “Summarization of Text Based on Deep Neural Network”, International Journal for Research in Applied Science and Engineering Technology (IJRASET), Vol 9,Issue XII Dec 2021,924- 933. DOI: https://doi.org/10.22214/ijraset.2021.39419 [4] Hardy, Miguel Ballesteros, Faisal Ladhak, Muhammad Khalifa, Vittorio Castelli and, Kathleen McKeown, “Novel Chapter Abstractive Summarization using Spinal Tree Aware Sub-Sentential Content Selection”, Nov 2022, DOI: https://doi.org/10.48550/arXiv.2211.04903 [5] S Hima Bindu Sri, Sushma Rani Dutta, “A Survey on Automatic Text Summarization Techniques”, International Conference on Physics and Energy, Journal of Physics, 2021, DOI:10.1088/1742- 6596/2040/1/012044. [6] P Keerthana, “Automatic Text Summarization Using Deep Learning”, EPRA International Journal of Multidisciplinary Research (IJMR), Vol 7, Issue 4, April 2021, Journal DOI: 10.36713/epra2013 [7] Varun Deokar and, Kanishk Shah, “Automated Text Summarization of News Articles”, International Research Journal of Engineering and Technology(IRJET) publication, Vol 08, issue 09, Sep 2021, 2395-0072, DOI: https://www.irjet.net/archives/V8/i9/IRJET-V8I9304

- 30. REFERENCES [8] Mansoora Majeed and Kala M T ” Comparative Study on Extractive Summarization Using Sentence Ranking Algorithm and Text Ranking Algorithm.” Proceedings of the 2004 conference on empirical methods in natural language processing. 2023. [9] Prof. Kavyashree S , Sumukha R and Tejaswini S V . ” Survey on Automatic Text Summarization using NLP and Deep Learning .” 2023 DOI: 10.1109/ICAECIS58353.2023.1017066. [10] Mohammed Alsuhaibani ” Fine-Tuned PEGASUS: Exploring the Performance of the Transformer-Based Model on a Diverse Text Summarization Dataset” Proceedings of the 9th World Congress on Electrical Engineering and Computer Systems and Sciences (EECSS’23) [11] Jingqing Zhang, Yao Zhao, and Mohammad Saleh. ” PEGASUS: Pre-training with Extracted Gap-sentences for Abstractive Summarizatio.” IEEE Transactions on Computational Social Systems 9.3 (2021): 879-890.. [12] Hritvik Gupta and Mayank Patel . ” METHOD OF TEXT SUMMARIZATION USING LSAAND SENTENCE BASED TOPIC MODELLING WITH BERT ” 2021 international conference on data science and communication (IconDSC). | DOI: 10.1109/ICAIS50930.2021.9395976 [13] Anish Jadhav, Rajat Jain and Steve Fernandes . ” Text Summarization using Neural Networks.” arXiv preprint arXiv:2004.08795 (2020).

- 31. THANK YOU

![REFERENCES

[1] Mike Lewis and Yinhan Liu ,” BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and

Comprehension” , Submitted on: 29 Oct 2019 , DOI: https://doi.org/10.48550/arXiv:1910.13461v1 [cs.CL]

[2] M. Jain and H. Rastogi, "Automatic Text Summarization using Soft-Cosine Similarity and Centrality Measures," 2020 4th International

Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 2020, pp. 1021-1028,

DOI: 10.1109/ICECA49313.2020.9297583.

[3] Saiela Bilal and Pravneet Kaur, “Summarization of Text Based on Deep Neural Network”, International Journal for Research in Applied

Science and Engineering Technology (IJRASET), Vol 9,Issue XII Dec 2021,924- 933. DOI: https://doi.org/10.22214/ijraset.2021.39419

[4] Hardy, Miguel Ballesteros, Faisal Ladhak, Muhammad Khalifa, Vittorio Castelli and, Kathleen McKeown, “Novel Chapter Abstractive

Summarization using Spinal Tree Aware Sub-Sentential Content Selection”, Nov 2022, DOI: https://doi.org/10.48550/arXiv.2211.04903

[5] S Hima Bindu Sri, Sushma Rani Dutta, “A Survey on Automatic Text Summarization Techniques”, International Conference on Physics

and Energy, Journal of Physics, 2021, DOI:10.1088/1742- 6596/2040/1/012044.

[6] P Keerthana, “Automatic Text Summarization Using Deep Learning”, EPRA International Journal of Multidisciplinary Research (IJMR),

Vol 7, Issue 4, April 2021, Journal DOI: 10.36713/epra2013

[7] Varun Deokar and, Kanishk Shah, “Automated Text Summarization of News Articles”, International Research Journal of Engineering and

Technology(IRJET) publication, Vol 08, issue 09, Sep 2021, 2395-0072, DOI: https://www.irjet.net/archives/V8/i9/IRJET-V8I9304](https://image.slidesharecdn.com/seccbatch12review-11-240420034211-e21c4a0e/85/Comparative-Analysis-of-Text-Summarization-Techniques-29-320.jpg)

![REFERENCES

[8] Mansoora Majeed and Kala M T ” Comparative Study on Extractive Summarization Using Sentence Ranking Algorithm and

Text Ranking Algorithm.” Proceedings of the 2004 conference on empirical methods in natural language processing. 2023.

[9] Prof. Kavyashree S , Sumukha R and Tejaswini S V . ” Survey on Automatic Text Summarization using NLP and Deep

Learning .” 2023 DOI: 10.1109/ICAECIS58353.2023.1017066.

[10] Mohammed Alsuhaibani ” Fine-Tuned PEGASUS: Exploring the Performance of the Transformer-Based Model on a Diverse

Text Summarization Dataset” Proceedings of the 9th World Congress on Electrical Engineering and Computer Systems and

Sciences (EECSS’23)

[11] Jingqing Zhang, Yao Zhao, and Mohammad Saleh. ” PEGASUS: Pre-training with Extracted Gap-sentences for Abstractive

Summarizatio.” IEEE Transactions on Computational Social Systems 9.3 (2021): 879-890..

[12] Hritvik Gupta and Mayank Patel . ” METHOD OF TEXT SUMMARIZATION USING LSAAND SENTENCE BASED

TOPIC MODELLING WITH BERT ” 2021 international conference on data science and communication (IconDSC). | DOI:

10.1109/ICAIS50930.2021.9395976

[13] Anish Jadhav, Rajat Jain and Steve Fernandes . ” Text Summarization using Neural Networks.” arXiv preprint

arXiv:2004.08795 (2020).](https://image.slidesharecdn.com/seccbatch12review-11-240420034211-e21c4a0e/85/Comparative-Analysis-of-Text-Summarization-Techniques-30-320.jpg)