Consistency and Completeness: Rethinking Distributed Stream Processing in Apache Kafka

- 1. Consistency and Completeness: Rethinking Distributed Stream Processing in Apache Kafka. Guozhang Wang, Lei Chen, Ayusman Dikshit, Jason Gustafson, Boyang Chen, Matthias J. Sax, John Roesler, Sophie Blee-Goldman, Bruno Cadonna, Apurva Mehta, Varun Madan, Jun Rao

- 2. Outline • Stream processing (with Kafka): correctness challenges • Exactly-once consistency with failures • Completeness with out-of-order data • Use case and conclusion

- 3. Stream Processing • A different programming paradigm • .. that brings computation to unbounded data • .. but not necessarily transient, approximate, or lossy

- 4. Kafka: Streaming Platform • Persistent buffering • Logical ordering • Scalable “source-of-truth”

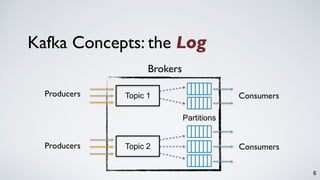

- 5. Kafka Concepts: the Log. [Figure: a producer appends messages to the end of the log, while Consumer1 reads at offset 7 and Consumer2 reads at offset 10.]
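The log abstraction on this slide can be modeled in a few lines of plain Java. This is a minimal in-memory sketch, not Kafka's implementation. The class names `AppendOnlyLog` and `LogReader` are hypothetical, and the log starts at offset 0. Each append receives the next sequential offset, and every reader keeps its own position, so two consumers can read the same log at different offsets without affecting each other or the log.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory model of one log: records are only ever appended,
// and each record is identified by its position (offset).
final class AppendOnlyLog {
    private final List<String> records = new ArrayList<>();

    /** Appends a record and returns the offset it was assigned. */
    long append(String record) {
        records.add(record);
        return records.size() - 1;
    }

    /** Returns the record stored at the given offset. */
    String read(long offset) {
        return records.get((int) offset);
    }

    /** Offset that the next appended record will receive. */
    long endOffset() {
        return records.size();
    }
}

// Hypothetical reader: each consumer tracks its own position, and reading
// never modifies the log itself.
final class LogReader {
    private final AppendOnlyLog log;
    private long position;

    LogReader(AppendOnlyLog log, long startOffset) {
        this.log = log;
        this.position = startOffset;
    }

    /** Returns the next record, or null if the reader has caught up. */
    String poll() {
        if (position >= log.endOffset()) {
            return null;
        }
        return log.read(position++);
    }

    long position() {
        return position;
    }
}
```

In this model, Consumer1 and Consumer2 on the slide are two `LogReader`s created at offsets 7 and 10 over the same `AppendOnlyLog`.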

- 6. Kafka Concepts: the Log. [Figure: producers write to, and consumers read from, Topic 1 and Topic 2; each topic is divided into partitions hosted on brokers.]
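One way a keyed record can be routed to a partition is to hash its key modulo the partition count. With this scheme, all records with the same key land in the same partition's log and stay ordered relative to one another. The sketch below is illustrative only. Kafka's default partitioner hashes the serialized key bytes with murmur2, whereas this sketch uses `String.hashCode()`, and the `KeyPartitioner` class is hypothetical.

```java
// Hypothetical partitioner (not Kafka's murmur2-based default): the same key
// always maps to the same partition, so per-key ordering is preserved there.
final class KeyPartitioner {
    private final int numPartitions;

    KeyPartitioner(int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        this.numPartitions = numPartitions;
    }

    int partitionFor(String key) {
        // Math.floorMod keeps the result non-negative even for negative hash codes.
        return Math.floorMod(key.hashCode(), numPartitions);
    }
}
```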

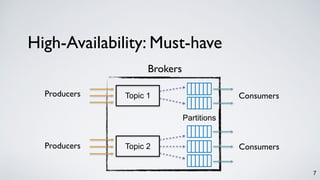

- 7. High-Availability: Must-have [VLDB 2015]. [Figure: the same layout of Topic 1 and Topic 2 partitions on brokers, with producers and consumers.]

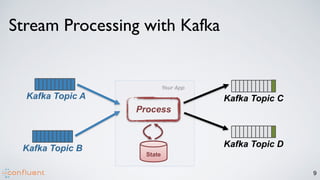

- 10. Stream Processing with Kafka. [Figure: your app reads from Kafka topics A and B, processes each record against its local state, writes the results to topics C and D (each write acked by the brokers), and then commits its consumed offsets.]

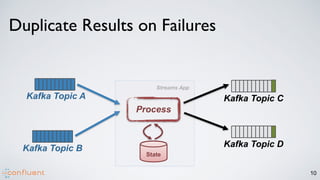

- 17. Duplicate Results on Failures. [Figure: a Streams app processes records from topics A and B, and its writes to topics C and D are acked; the app then fails before committing its offsets.]

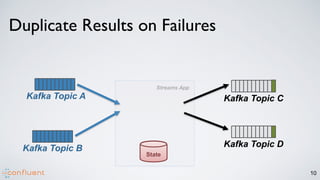

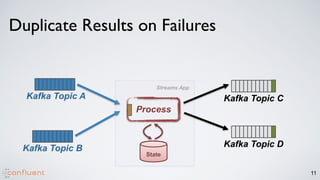

- 20. Duplicate Results on Failures (continued). [Figure: a restarted instance restores its state and reprocesses the same input records from the last committed offsets.]

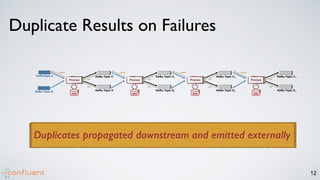

- 26. Duplicate Results on Failures: duplicates are propagated downstream and emitted externally. [Figure: output records 2, 3, and 4 each appear twice.]
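The failure scenario above can be reproduced with a small simulation under simplifying assumptions. Topics are plain lists, and `AtLeastOnceTask` is a hypothetical class. Its "process" step is the identity. It writes each result (treated as acked) before committing the consumed offsets in a separate step. A crash is injected between the two steps. Because the restarted task resumes from the last committed offset, every record of the batch is processed and emitted a second time.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical at-least-once task: output writes are acked first, and the
// consumed offsets are committed afterwards as a separate step.
final class AtLeastOnceTask {
    private final List<Integer> input;                 // source topic partition
    final List<Integer> output = new ArrayList<>();    // sink topic partition
    int committedOffset = 0;                           // last committed position

    AtLeastOnceTask(List<Integer> input) {
        this.input = input;
    }

    /** Processes everything after the committed offset, then commits. */
    boolean runBatch(boolean crashBeforeCommit) {
        for (int off = committedOffset; off < input.size(); off++) {
            output.add(input.get(off));   // "process" is the identity; the write is acked
        }
        if (crashBeforeCommit) {
            return false;                 // failure: outputs are durable, offsets are not
        }
        committedOffset = input.size();   // commit the consumed offsets
        return true;
    }
}
```

Running one batch over `[2, 3, 4]` with `crashBeforeCommit = true`, then a normal batch, leaves `[2, 3, 4, 2, 3, 4]` in the output. Every result is duplicated downstream.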

- 29. Challenge #1: Consistency (a.k.a. exactly-once). A correctness guarantee for stream processing: for each received record, its processing results are reflected exactly once, even under failures.

- 30. It’s all about Time • Event-time (when a record is created) • Processing-time (when a record is processed)

- 31. Out-of-Order. [Figure: the Star Wars films by event-time (episode number) versus processing-time (release year): A New Hope (4, 1977), The Empire Strikes Back (5, 1980), Return of the Jedi (6, 1983), The Phantom Menace (1, 1999), Attack of the Clones (2, 2002), Revenge of the Sith (3, 2005), The Force Awakens (7, 2015), The Last Jedi (8, 2017).] Incomplete results are produced due to time skew.
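Out-of-order arrival can be detected by tracking the largest event time seen so far. Kafka Streams calls a similar notion stream time. Any record whose event time is below that maximum arrived late. The `OutOfOrderDetector` class below is a hypothetical minimal sketch. Fed the episode numbers in release order, it flags episodes 1, 2, and 3. Any result over that part of event time computed before 1999 would therefore have been incomplete.

```java
// Hypothetical tracker: "stream time" is the largest event time observed so far;
// a record whose event time is below it arrived out of order.
final class OutOfOrderDetector {
    private long streamTime = Long.MIN_VALUE;

    /** Returns true if the record is late relative to records already seen. */
    boolean observe(long eventTime) {
        boolean late = eventTime < streamTime;
        streamTime = Math.max(streamTime, eventTime);
        return late;
    }

    long streamTime() {
        return streamTime;
    }
}
```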

- 34. Challenge #2: Completeness (with out-of-order data). A correctness guarantee for stream processing: even with out-of-order data streams, incomplete results are not delivered.

- 35. Blocking + Checkpointing: one stone to kill all birds? • Block processing and result emission until results are complete • Hard trade-offs between latency and correctness • Depends on global blocking markers
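The blocking trade-off can be illustrated with a tumbling-window count that emits a window only once stream time has passed the window end plus a grace period. This is a hypothetical sketch, not Kafka Streams code. `BlockingWindowCount`, `windowSize`, and `grace` are illustrative names, and records that arrive after their window has closed are simply dropped. A larger grace period catches more out-of-order data but delays every result by that amount.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical blocking operator: counts per tumbling window, but emits a
// window's final count only after stream time passes windowEnd + grace.
final class BlockingWindowCount {
    private final long windowSize;
    private final long grace;
    private final TreeMap<Long, Integer> openWindows = new TreeMap<>(); // windowStart -> count
    private long streamTime = Long.MIN_VALUE;
    final List<String> emitted = new ArrayList<>();
    int dropped = 0;

    BlockingWindowCount(long windowSize, long grace) {
        this.windowSize = windowSize;
        this.grace = grace;
    }

    void process(long eventTime) {
        streamTime = Math.max(streamTime, eventTime);
        long start = eventTime - Math.floorMod(eventTime, windowSize);
        if (start + windowSize + grace <= streamTime) {
            dropped++;                                   // too late: window already closed
        } else {
            openWindows.merge(start, 1, Integer::sum);
        }
        // Emit (and forget) every window whose end + grace has passed.
        while (!openWindows.isEmpty()) {
            Map.Entry<Long, Integer> first = openWindows.firstEntry();
            if (first.getKey() + windowSize + grace > streamTime) {
                break;
            }
            emitted.add("[" + first.getKey() + "," + (first.getKey() + windowSize) + ")=" + first.getValue());
            openWindows.pollFirstEntry();
        }
    }
}
```

With windows of size 3 over the episode numbers in release order and `grace = 0`, window `[3,6)` is emitted with count 2 as soon as episode 6 arrives, and episodes 1, 2, and 3 are dropped. With `grace = 10`, no episode is lost, but no window is emitted until a record with event time 20 arrives.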

- 36. A Log-based Approach • Leverage persistent, immutable, ordered logs • Decouple consistency and completeness handling

- 37. Kafka Streams • New client library beyond producer and consumer • Powerful yet easy-to-use • Event time, stateful processing • Out-of-order handling • Highly scalable, distributed, fault tolerant • and more

- 38. Anywhere, anytime Ok. Ok. Ok. Ok.

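- 39. Streams DSL and KSQL [EDBT 2019]

```sql
CREATE STREAM fraudulent_payments AS
  SELECT * FROM payments
  WHERE fraudProbability > 0.8;
```

```scala
import org.apache.kafka.streams.scala.ImplicitConversions._
import org.apache.kafka.streams.scala.StreamsBuilder
import org.apache.kafka.streams.scala.kstream.KStream

val builder = new StreamsBuilder() // assumes implicit Serdes for String and Payment are in scope
val fraudulentPayments: KStream[String, Payment] = builder
  .stream[String, Payment]("payments-kafka-topic")
  .filter((_, payment) => payment.fraudProbability > 0.8)
fraudulentPayments.to("fraudulent-payments-topic")
```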

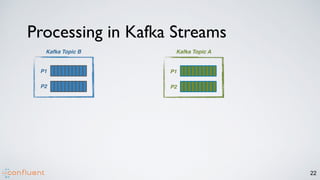

- 40. Processing in Kafka Streams. [Figure: Kafka topics A and B, each with partitions P1 and P2, connected by a processor topology.]

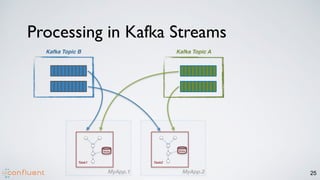

- 44. Processing in Kafka Streams. [Figure: the topology is split into tasks, one per input partition (P1, P2); Task1 and Task2 each keep their own local state and are distributed across application instances MyApp.1 and MyApp.2.]

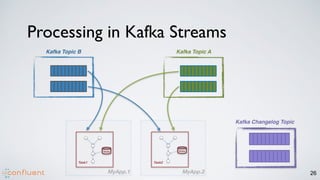

- 46. Processing in Kafka Streams. [Figure: as above, with each task's local state backed by a Kafka changelog topic.]
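A changelog-backed state store can be sketched as a map whose every update is also appended to a log. After a failure, a fresh instance rebuilds the map by replaying that log. This is a simplification under stated assumptions. The changelog is an in-memory list, `ChangelogBackedStore` is a hypothetical name, and there is no compaction. In Kafka, key-value changelog topics are typically log-compacted, so only the latest value per key needs to be replayed.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical state store: a local map for fast reads, plus a changelog
// (standing in for the Kafka changelog topic) for durability.
final class ChangelogBackedStore {
    /** One changelog entry: a key and its new value (null means deleted). */
    static final class Change {
        final String key;
        final Integer value;

        Change(String key, Integer value) {
            this.key = key;
            this.value = value;
        }
    }

    private final Map<String, Integer> local = new HashMap<>();
    private final List<Change> changelog;

    ChangelogBackedStore(List<Change> changelog) {
        this.changelog = changelog;
        // Restore: replay the changelog from the beginning; the last write per key wins.
        for (Change c : changelog) {
            apply(c);
        }
    }

    void put(String key, Integer value) {
        Change c = new Change(key, value);
        changelog.add(c);   // record the update in the changelog
        apply(c);           // and apply it to the local map
    }

    Integer get(String key) {
        return local.get(key);
    }

    private void apply(Change c) {
        if (c.value == null) {
            local.remove(c.key);
        } else {
            local.put(c.key, c.value);
        }
    }
}
```

A second store constructed over the same changelog list plays the role of a restarted task. It recovers `alice = 20` and no entry for `bob` without reprocessing any input.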

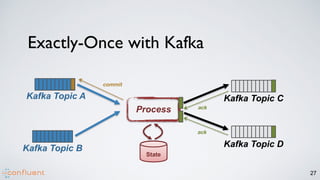

- 49. Exactly-Once with Kafka. [Figure: the app reads from topics A and B, processes records against its state, writes to topics C and D (acked), and commits its offsets.]

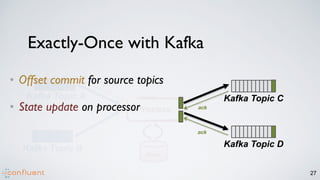

- 50. Exactly-Once with Kafka: three actions must happen all or nothing • Acked produce to sink topics • Offset commit for source topics • State update on processor

- 56. Exactly-Once with Kafka Streams: each of the three actions becomes a batch of records appended to Kafka, and the three batches are written all or nothing • A batch of records sent to sink topics (the acked produce) • A batch of records sent to the offset topic (the offset commit) • A batch of records sent to changelog topics (the state update)

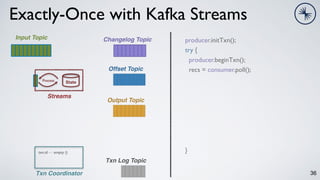

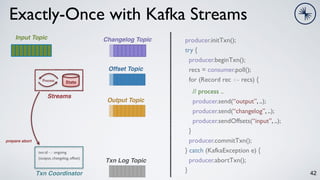

- 62. Exactly-Once with Kafka Streams. [Figure: a Streams app with local state reads from the input topic and writes to the output, changelog, and offset topics; a transaction coordinator keeps each transaction's status in the transaction log topic.] `producer.initTxn()` registers the producer's `txn.id` with the transaction coordinator, which records `txn.id -> empty ()`. The app then starts a transaction with `producer.beginTxn()`, fetches input with `recs = consumer.poll()`, and processes each record.

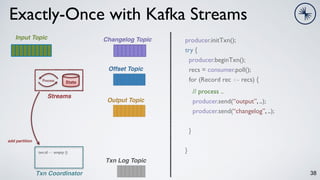

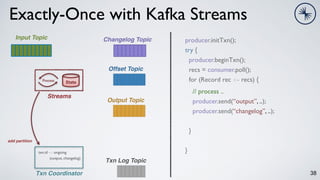

- 70. For each processed record, the app calls `producer.send("output", ..)` and `producer.send("changelog", ..)`. Before the first write to a partition within a transaction, the producer asks the coordinator to add that partition (add partition), and the coordinator records `txn.id -> ongoing (output, changelog)`.

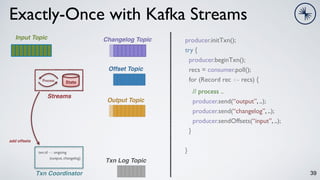

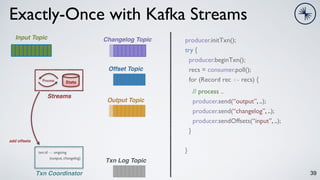

- 75. Exactly-Once with Kafka Streams (continued). `producer.sendOffsets("input", ..)` adds the consumed input offsets to the same transaction (add offsets), so the coordinator records `txn.id -> ongoing (output, changelog, offset)`. On `producer.commitTxn()`, the coordinator first logs `txn.id -> prepare-commit (output, changelog, offset)`, then writes commit markers to the output, changelog, and offset partitions, and finally logs `complete-commit`. If any step throws a `KafkaException`, `producer.abortTxn()` passes through `prepare-abort` to `complete-abort` in the same way, and `read_committed` consumers never see the aborted writes. The slides abbreviate the Kafka producer methods as `initTxn()`, `beginTxn()`, `sendOffsets()`, `commitTxn()`, and `abortTxn()`. With the full method names, the loop reads:

```java
import java.time.Duration;
import java.util.HashMap;
import java.util.Map;
import org.apache.kafka.clients.consumer.*;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.common.KafkaException;
import org.apache.kafka.common.TopicPartition;

// `producer` (with a transactional.id) and `consumer` are assumed to be configured.
producer.initTransactions();                      // register txn.id with the coordinator
try {
    producer.beginTransaction();
    ConsumerRecords<String, String> recs = consumer.poll(Duration.ofMillis(100));
    Map<TopicPartition, OffsetAndMetadata> offsets = new HashMap<>();
    for (ConsumerRecord<String, String> rec : recs) {
        // process .. (update state; the value sent below stands in for the result)
        producer.send(new ProducerRecord<>("output", rec.key(), rec.value()));
        producer.send(new ProducerRecord<>("changelog", rec.key(), rec.value()));
        offsets.put(new TopicPartition(rec.topic(), rec.partition()),
                    new OffsetAndMetadata(rec.offset() + 1));   // next offset to read
    }
    producer.sendOffsetsToTransaction(offsets, consumer.groupMetadata()); // input offsets
    producer.commitTransaction();                 // prepare-commit -> markers -> complete-commit
} catch (KafkaException e) {
    producer.abortTransaction();                  // prepare-abort -> markers -> complete-abort
}
```
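The coordinator-side bookkeeping in this walkthrough can be summarized as a small state machine. The sketch below is a simplification, not the broker's implementation. It tracks a single transaction in memory, and `TxnCoordinator` and its methods are hypothetical. The per-partition transaction markers are modeled as strings. Fencing, timeouts, and the transaction log itself are omitted. The prepare state is the point where the decision becomes final, and markers are written only after it.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical single-producer model of the transaction states on the slides:
// EMPTY -> ONGOING -> PREPARE_COMMIT -> COMPLETE_COMMIT
//                  -> PREPARE_ABORT  -> COMPLETE_ABORT
final class TxnCoordinator {
    enum State { EMPTY, ONGOING, PREPARE_COMMIT, COMPLETE_COMMIT, PREPARE_ABORT, COMPLETE_ABORT }

    State state = State.EMPTY;
    final Set<String> partitions = new LinkedHashSet<>();  // e.g. output, changelog, offset

    /** "add partition" / "add offsets": register a partition in the ongoing transaction. */
    void addPartition(String partition) {
        if (state != State.ONGOING) {
            if (state == State.PREPARE_COMMIT || state == State.PREPARE_ABORT) {
                throw new IllegalStateException("transaction is being completed");
            }
            partitions.clear();          // EMPTY or a completed txn: begin a new one
            state = State.ONGOING;
        }
        partitions.add(partition);
    }

    /** commitTxn() / abortTxn(): decide (prepare), write markers, then complete. */
    List<String> end(boolean commit) {
        if (state != State.ONGOING) {
            throw new IllegalStateException("no ongoing transaction: " + state);
        }
        state = commit ? State.PREPARE_COMMIT : State.PREPARE_ABORT;   // decision is final here
        List<String> markers = new ArrayList<>();
        for (String p : partitions) {
            markers.add(p + ":" + (commit ? "COMMIT" : "ABORT"));      // one marker per partition
        }
        state = commit ? State.COMPLETE_COMMIT : State.COMPLETE_ABORT;
        return markers;
    }
}
```

Adding `output`, `changelog`, and `offset` and then calling `end(true)` yields one `COMMIT` marker per partition and leaves the coordinator in `COMPLETE_COMMIT`, mirroring the commit path above.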

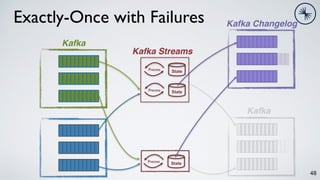

- 100. Exactly-Once with Failures. [Figure: a chain of Kafka Streams processors, each with local state backed by a Kafka changelog topic and connected to the next through Kafka topics; when one processor fails, a new instance restores its state from the changelog and resumes processing.]
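How transactions remove the duplicates from the earlier failure scenario can be simulated as follows. Each transaction buffers its output writes, its state update, and its offset advance, and applies all of them together only at commit. This is a hypothetical model, and `TransactionalTask` is not a Kafka class. A crash discards the buffer, much as `read_committed` consumers never see records from an aborted transaction. The rerun starts from the last committed offset and the last committed state.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical exactly-once task: output, state (changelog), and offset are
// buffered per transaction and become visible together, or not at all.
final class TransactionalTask {
    private final List<Integer> input;
    final List<Integer> committedOutput = new ArrayList<>(); // what read_committed readers see
    int committedSum = 0;                                     // state as restored from the changelog
    int committedOffset = 0;

    TransactionalTask(List<Integer> input) {
        this.input = input;
    }

    /** Runs one transaction over the remaining input; returns false on a crash. */
    boolean runTxn(boolean crashBeforeCommit) {
        List<Integer> pendingOutput = new ArrayList<>();
        int sum = committedSum;          // start from the last committed state
        int off = committedOffset;       // resume from the last committed offset
        for (; off < input.size(); off++) {
            sum += input.get(off);       // state update (a changelog write in the txn)
            pendingOutput.add(sum);      // output write in the txn
        }
        if (crashBeforeCommit) {
            return false;                // aborted: nothing pending becomes visible
        }
        // Commit: output, state, and offset advance atomically.
        committedOutput.addAll(pendingOutput);
        committedSum = sum;
        committedOffset = off;
        return true;
    }
}
```

Crashing the first transaction over `[2, 3, 4]` and rerunning leaves `[2, 5, 9]` in the committed output, so each running sum appears exactly once. The writes of the crashed attempt are never exposed.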

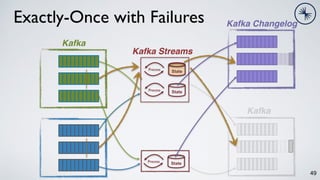

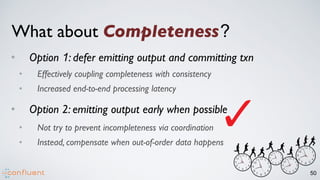

- 111. What about Completeness? • Option 1: defer emitting output and committing the transaction • Effectively couples completeness with consistency • Increases end-to-end processing latency • Option 2: emit output early when possible • Do not try to prevent incompleteness via coordination • Instead, compensate when out-of-order data arrives

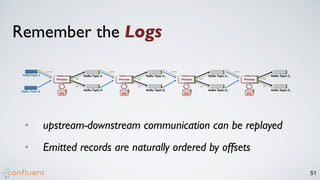

- 113. Remember the Logs • Upstream-downstream communication can be replayed • Emitted records are naturally ordered by offsets

- 114. Ordering and Monotonicity • Stateless (filter, mapValues) • Order-agnostic: no need to block on emitting • Stateful (join, aggregate) • Order-sensitive: current results depend on history • Whether to block emitting results depends on the output type
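The difference between the two operator classes can be checked directly by applying a stateless filter and a stateful "latest value per key" operator to the same records in two arrival orders. In this hypothetical `OrderSensitivity` helper, the filter decides each record on its own, so it emits the same set of results in any order and can emit immediately. The stateful result depends on which update happened to arrive last.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helpers contrasting a stateless and a stateful operator.
final class OrderSensitivity {
    static final class Rec {
        final String key;
        final int value;

        Rec(String key, int value) {
            this.key = key;
            this.value = value;
        }
    }

    /** Stateless filter: each output depends only on its own input record. */
    static List<Integer> filterPositive(List<Rec> records) {
        List<Integer> out = new ArrayList<>();
        for (Rec r : records) {
            if (r.value > 0) {
                out.add(r.value);
            }
        }
        return out;
    }

    /** Stateful "latest value per key": the result depends on arrival order. */
    static Map<String, Integer> latestPerKey(List<Rec> records) {
        Map<String, Integer> table = new HashMap<>();
        for (Rec r : records) {
            table.put(r.key, r.value);
        }
        return table;
    }
}
```

For `alice` with values 1, -2, and 3 in two different orders, the filter emits {1, 3} either way. The latest value, however, is 3 in one order and -2 in the other.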

- 115. KStream = data interpreted as a record stream (think “append-only”) • KTable = data interpreted as a changelog stream (a continuously updated materialized view)

- 116. KStream vs. KTable. [Figure: the user purchase history (KStream) holds alice→eggs, bob→bread, alice→milk; the user employment profile (KTable) holds alice→lnkd, bob→googl, alice→msft.] After the first records: “Alice bought eggs.” and “Alice is now at LinkedIn.” After the later records: “Alice bought eggs and milk.” (the stream keeps both facts) and “Alice is now at Microsoft.” (the table update replaces LinkedIn).
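The two interpretations of keyed records can be sketched in a few lines. A stream keeps every record as an independent fact, while a table keeps only the latest value per key, so a later record replaces an earlier one. The names `StreamTableDuality`, `asStream`, and `asTable` are hypothetical. They model only the semantics, not the Kafka Streams API.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of the stream/table interpretations of {key, value} records.
final class StreamTableDuality {
    /** KStream view: every record for a key is kept ("Alice bought eggs and milk"). */
    static Map<String, List<String>> asStream(String[][] records) {
        Map<String, List<String>> facts = new LinkedHashMap<>();
        for (String[] r : records) {
            facts.computeIfAbsent(r[0], k -> new ArrayList<>()).add(r[1]);
        }
        return facts;
    }

    /** KTable view: a later record is an update that replaces the earlier value. */
    static Map<String, String> asTable(String[][] records) {
        Map<String, String> latest = new LinkedHashMap<>();
        for (String[] r : records) {
            latest.put(r[0], r[1]);
        }
        return latest;
    }
}
```

With the slide's records, `asStream` yields `[eggs, milk]` for alice, and `asTable` yields `msft`.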

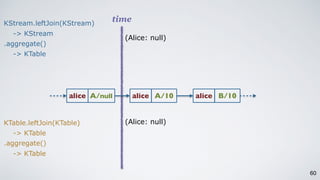

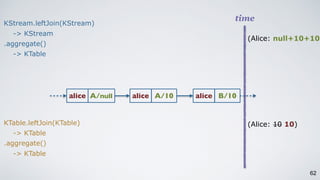

- 119. Left joins over time: KStream.leftJoin(KStream) -> KStream vs. KTable.leftJoin(KTable) -> KTable. [Figure: alice→A, alice→10, and alice→B arrive over time, producing the join results (Alice: A/null), (Alice: A/10), and (Alice: B/10). The KStream join marks its early (Alice: A/null) as “do not emit”, while the KTable join emits it and later revises it.]
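The emission policy can be sketched with a hypothetical `LeftJoinEmitter`, which is not the Kafka Streams join operator. With table semantics, an unmatched left value is emitted at once as `A/null` and corrected later, because each new output for the key replaces the previous one. With stream semantics, an emitted record cannot be retracted, so the unmatched result is withheld. This sketch simply never emits it, whereas a windowed stream-stream left join would typically emit it only after the join window closes without a match. The sketch keeps only the latest value per key on each side. That is exact for tables but simplifies a windowed stream join, which buffers every record in the window.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical left join that keeps the latest value per key on each side.
final class LeftJoinEmitter {
    private final boolean tableSemantics;   // true: KTable-style, false: KStream-style
    private final Map<String, String> left = new HashMap<>();
    private final Map<String, String> right = new HashMap<>();
    final List<String> emitted = new ArrayList<>();

    LeftJoinEmitter(boolean tableSemantics) {
        this.tableSemantics = tableSemantics;
    }

    void onLeft(String key, String value) {
        left.put(key, value);
        String match = right.get(key);
        if (match != null) {
            emitted.add(key + ": " + value + "/" + match);
        } else if (tableSemantics) {
            emitted.add(key + ": " + value + "/null");   // early result, revised later
        }
        // Stream semantics: "do not emit" the unmatched result.
    }

    void onRight(String key, String value) {
        right.put(key, value);
        String l = left.get(key);
        if (l != null) {
            emitted.add(key + ": " + l + "/" + value);  // for a table, this revises A/null
        }
    }
}
```

Feeding `A` (left), `10` (right), then `B` (left) for `alice` gives `A/null, A/10, B/10` with table semantics and `A/10, B/10` with stream semantics.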

- 122. Aggregating join results: KStream.leftJoin(KStream) -> KStream .aggregate() -> KTable vs. KTable.leftJoin(KTable) -> KTable .aggregate() -> KTable [BIRTE 2015]. [Figure: the join results A/null, A/10, and B/10 for alice feed each aggregation over time; the final aggregates shown are (Alice: 10 10) and (Alice: null+10+10).]
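The effect of the output type on a downstream aggregate can be sketched by collecting the right-side values of the join results for `alice`. In the hypothetical `DownstreamAggregates` helper, the stream version appends every record it receives, so an eagerly emitted `A/null` leaves a permanent `null` entry. The table version treats each result as an update for its join key. It retracts the previous value (as a subtractor would) before adding the new one, so an early result is harmless.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical downstream aggregates over join results of the form "left/right".
final class DownstreamAggregates {
    /** Append-only (KStream) input: every record is a new fact and is added. */
    static List<String> collectStream(List<String> joinResults) {
        List<String> agg = new ArrayList<>();
        for (String r : joinResults) {
            agg.add(r.substring(r.indexOf('/') + 1));   // keep the right-side value
        }
        return agg;
    }

    /**
     * Changelog (KTable) input: each {joinKey, result} pair is an update, so the
     * key's previous contribution is retracted before the new one is added.
     */
    static List<String> collectTable(List<String[]> keyedResults) {
        Map<String, String> current = new HashMap<>();  // join key -> latest right value
        List<String> agg = new ArrayList<>();
        for (String[] kv : keyedResults) {
            String value = kv[1].substring(kv[1].indexOf('/') + 1);
            String old = current.put(kv[0], value);
            if (old != null) {
                agg.remove(old);                          // subtractor: retract the old value
            }
            agg.add(value);                               // adder: apply the new value
        }
        return agg;
    }
}
```

Fed `A/null, A/10, B/10`, the stream aggregate ends as `[null, 10, 10]`. With the unmatched result withheld, it ends as `[10, 10]`. The table aggregate ends as `[10]`, the current value for `alice`, because both earlier results were retracted.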

- 126. Use Case: Bloomberg Real-time Pricing • More than one billion market events per day • 160 cores / 2 TB RAM deployed on k8s • Exactly-once for market data stateful stream processing

- 130. Take-aways • Apache Kafka: persistent logs to achieve correctness • Transactional log appends for exactly-once • Non-blocking output with revisions to handle out-of-order data. THANKS! Guozhang Wang | @guozhangwang | Read the full paper at: https://cnfl.io/sigmod

- 135. BACKUP SLIDES

- 136. Ongoing Work (3.0+) • Scalability improvements • Consistent state query serving • Further reduce end-to-end latency • … and more
