Contrastive Learning with Adversarial Perturbations for Conditional Text Generation

- 1. Contrastive Learning with Adversarial Perturbations for Conditional Text Generation. Seanie Lee¹*, Dong Bok Lee¹*, Sung Ju Hwang¹,². ¹KAIST, Daejeon, South Korea; ²AITRICS, Seoul, South Korea.

- 2. Pretrained Language Model: Pretraining a language model on a large corpus and then fine-tuning it for a target task requires a large amount of labeled data.

- 3. Conditional Text Generation: Conditional text generation produces a target sequence from a given source sequence, typically with an encoder-decoder architecture. [Figure: an encoder-decoder that embeds and encodes "the blue house" and decodes "la maison bleu <eos>".]

- 4. Exposure Bias: Seq2seq models trained with teacher forcing often suffer from exposure bias, which hurts generalization to unseen inputs. [Figure: the same encoder-decoder with the decoder fed "<bos>" and the ground-truth tokens; prediction "le maison bleu <eos>" versus ground truth "la maison bleu <eos>".]

- 5. Contrastive Learning Framework: We propose to contrast a ground-truth (GT) pair with negative pairs to learn a better representation of the target sentence. However, randomly sampled negative examples are easily discriminated by the pretrained language model, so mining meaningful negatives requires a large batch size. [Figure: a source sentence passed through the encoder-decoder with the GT target sentence "He wasn’t in great shape" and a randomly sampled negative "I cannot do that."]

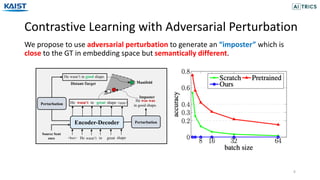

- 6. Contrastive Learning with Adversarial Perturbation: We propose to use adversarial perturbation to generate an “imposter”, which is close to the GT in embedding space but semantically different. [Figure: the source sentence and the GT target "He wasn’t in great shape" passed through the encoder-decoder; an imposter and a distant target are produced by perturbation and shown relative to the manifold. Example perturbed sentences: "He wasn’t in good shape." and "He was was in good shape."]

- 7. Contrastive Learning with Adversarial Perturbation: Conversely, we generate a “distant target”, which is far away from the source sentence in embedding space but semantically similar to the target. [Figure: same diagram as on the previous slide.]

- 8. Contrastive Learning with Adversarial Perturbation: We push the imposter and the other negative examples away from the source, and pull the target and the distant target toward the source. [Figure: source, target, dist-target and imposter in embedding space with push and pull arrows, labelled Max and Min.]

- 9. Contrastive Learning Objective: Given a source-target sentence pair $(x^{(i)}, y^{(i)})$, we randomly sample $y^{(j)}$ with $i \neq j$ and use them as the set of negative examples $S$. As in SimCLR (Chen et al., 2020), we maximize the cosine similarity between source and target and minimize it between the source and the negative examples. Example: source $x^{(i)}$ "Nu era într-o formă prea bună."; target $y^{(i)}$ "He wasn’t in great shape."; negatives $y^{(j)}$ "But I cannot do it anymore." and $y^{(k)}$ "By mid-July, it was 40 percent." Reference: Chen et al., "A Simple Framework for Contrastive Learning of Visual Representations," ICML 2020.
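
The objective on this slide can be illustrated with a small, self-contained sketch. It assumes an InfoNCE-style loss in the spirit of SimCLR, with a temperature `tau` and toy 3-dimensional sentence embeddings; the function names, the temperature value and the vectors are all hypothetical and are not taken from the slides.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def contrastive_loss(z_src, z_tgt, z_negs, tau=0.1):
    """-log of the softmax weight the positive pair gets among all candidates.

    tau is an assumed temperature; the slides do not give its value.
    """
    logits = [cosine(z_src, z_tgt) / tau]
    logits += [cosine(z_src, z) / tau for z in z_negs]
    m = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denom - logits[0]

# Toy embeddings (made up): z_y is close to z_x, the negatives are not.
z_x = [1.0, 0.0, 0.5]
z_y = [0.9, 0.1, 0.4]
negatives = [[-1.0, 0.3, 0.0], [0.0, 1.0, -0.2]]

loss_aligned = contrastive_loss(z_x, z_y, negatives)
# Treating a dissimilar sentence as the positive should give a larger loss.
loss_swapped = contrastive_loss(z_x, negatives[0], [z_y, negatives[1]])
```

Minimizing this loss raises the source-target similarity relative to the source-negative similarities, which is the behaviour the slide describes.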

- 10. Generation of Imposter: We add a small perturbation to the hidden representation of the target sentence to generate the imposter, using a linear approximation as in Goodfellow et al. (2015). [Figure: the source sentence "Nu era într-o formă prea bună." and the target sentence "<bos> He wasn’t in great shape." pass through the encoder-decoder and pooling; the perturbed target decodes to "He was was in great shape."; labels: Min, Objective, Linear Approximation.] Reference: Goodfellow et al., "Explaining and Harnessing Adversarial Examples," ICLR 2015.
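
A minimal sketch of the linear-approximation idea, under strong simplifying assumptions: a single hidden vector `h` stands in for the target's hidden representation, and a toy linear-softmax layer `W` stands in for the decoder. The perturbation is a step of length `eps` against the normalised gradient of the log-likelihood, in the style of the fast gradient method. All names and values are hypothetical, and this is not the authors' implementation.

```python
import math

def log_softmax(logits):
    m = max(logits)
    lse = m + math.log(sum(math.exp(l - m) for l in logits))
    return [l - lse for l in logits]

def log_likelihood(h, W, y):
    """log p(y | h) for a toy linear-softmax decoder with weight rows W[k]."""
    logits = [sum(w * x for w, x in zip(row, h)) for row in W]
    return log_softmax(logits)[y]

def grad_log_likelihood(h, W, y):
    """Analytic gradient of log p(y | h) w.r.t. h: W[y] - sum_k p_k * W[k]."""
    logits = [sum(w * x for w, x in zip(row, h)) for row in W]
    probs = [math.exp(lp) for lp in log_softmax(logits)]
    return [W[y][d] - sum(p * row[d] for p, row in zip(probs, W))
            for d in range(len(h))]

def make_imposter(h, W, y, eps=0.5):
    """Move h by eps in the direction that lowers log p(y | h) fastest."""
    g = grad_log_likelihood(h, W, y)
    g_norm = math.sqrt(sum(x * x for x in g)) or 1.0  # guard against a zero gradient
    return [h_d - eps * g_d / g_norm for h_d, g_d in zip(h, g)]

# Toy decoder over a 3-token vocabulary and a 2-dimensional hidden state.
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
h = [0.8, 0.1]
y = 0  # index of the ground-truth token

h_imposter = make_imposter(h, W, y)
```

The perturbed vector stays within distance `eps` of the original, yet the ground-truth token becomes less likely under it, which is the sense in which an imposter is "close but semantically different".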

- 11. Generation of Distant Target: We add a large perturbation to the target embedding so that it moves far away from the source sentence while preserving the semantics of the target sentence. [Figure: the source sentence "Nu era într-o formă prea bună." and the target sentence "<bos> He wasn’t in great shape." pass through the encoder, decoder and pooling; the distant target reads "He wasn’t in good shape."; labels: Max, Maximize Distance, Semantic Preservation.]
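
The "maximize distance" half of this slide can be sketched as one normalised gradient step that lowers the cosine similarity between a pooled source vector and a pooled target vector. This is only an illustration: the step size `eta`, the toy vectors and the function names are hypothetical, and the semantic-preservation half (which needs the decoder's output distribution) is deliberately left out.

```python
import math

def norm(v):
    return math.sqrt(sum(a * a for a in v))

def cosine(u, v):
    return sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))

def grad_cosine_wrt_v(u, v):
    """Gradient of cos(u, v) with respect to v."""
    c = cosine(u, v)
    nu, nv = norm(u), norm(v)
    return [u_d / (nu * nv) - c * v_d / (nv * nv) for u_d, v_d in zip(u, v)]

def push_away(z_src, z_tgt, eta=1.0):
    """One step of length eta that moves z_tgt away from z_src in cosine terms."""
    g = grad_cosine_wrt_v(z_src, z_tgt)
    g_norm = norm(g) or 1.0  # guard against a zero gradient
    return [t - eta * g_d / g_norm for t, g_d in zip(z_tgt, g)]

# Toy pooled representations (made up) of the source and target sentences.
z_x = [1.0, 0.2]
z_y = [0.9, 0.4]

z_far = push_away(z_x, z_y)
```

In a full system this step would be followed by a correction that keeps the decoder's predictions for the perturbed target close to those for the original, so that the result remains a valid positive example.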

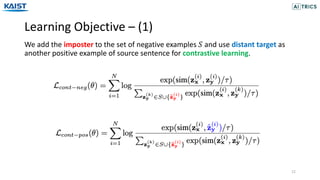

- 12. Learning Objective – (1): We add the imposter to the set of negative examples $S$ and use the distant target as an additional positive example for the source sentence in contrastive learning.
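
One way to write the two contrastive terms this slide describes, offered as a sketch rather than the authors' exact formulation. The symbols are assumptions: $z_x$, $z_y$, $\tilde{z}_y$ and $\hat{z}_y$ denote pooled representations of the source, target, imposter and distant target, $S$ is read as the set of negative representations, $\mathrm{sim}$ is cosine similarity, and $\tau$ is a temperature.

```latex
\mathcal{L}_{\text{neg}}
  = -\log \frac{\exp\!\big(\mathrm{sim}(z_x, z_y)/\tau\big)}
               {\sum_{z \in \{z_y\} \cup S \cup \{\tilde{z}_y\}}
                \exp\!\big(\mathrm{sim}(z_x, z)/\tau\big)},
\qquad
\mathcal{L}_{\text{pos}}
  = -\log \frac{\exp\!\big(\mathrm{sim}(z_x, \hat{z}_y)/\tau\big)}
               {\sum_{z \in \{\hat{z}_y\} \cup S \cup \{\tilde{z}_y\}}
                \exp\!\big(\mathrm{sim}(z_x, z)/\tau\big)}
```

In the first term the imposter $\tilde{z}_y$ only appears in the denominator, so it acts as an extra hard negative. In the second term the distant target $\hat{z}_y$ takes the place of the target in the numerator, so it acts as an extra hard positive. Both are written as losses; minimizing them is equivalent to maximizing their negatives.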

- 13. Learning Objective: We jointly maximize the following objectives with stochastic gradient ascent.

- 14. Experimental Setup – (1)
  - 1) Tasks and Evaluation Metrics
    - Neural Machine Translation: BLEU score
    - Question Generation: BLEU score, F1/EM
    - Text Summarization: ROUGE score
  - 2) Data
    - WMT’16 RO-EN
    - SQuAD
    - XSum
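
The slides do not say how F1 and EM are computed. As a rough illustration only, the sketch below assumes SQuAD-style string comparison between a predicted and a reference answer: exact match after lowercasing, and F1 over whitespace tokens. The function names are hypothetical, and the normalisation is simpler than in the official SQuAD script.

```python
from collections import Counter

def exact_match(prediction, reference):
    """1.0 if the two strings match after trimming and lowercasing, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())

def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall (whitespace tokens)."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

em = exact_match("the blue house", "The blue house")
f1 = token_f1("the blue house", "the old blue house")
```

Here the second prediction misses one reference token, so its precision is 1 and its recall is 3/4.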

- 15. Experimental Setup – (2)
  - 3) Baselines
    - T5-MLE: The T5 model trained with maximum likelihood estimation (MLE).
    - T5-𝛼-MLE: The T5 model trained with MLE, but decoding the target sequence with temperature scaling 𝛼 in the softmax.
    - T5-MLE-contrastive: Naïve contrastive learning combined with MLE.
  - [Caccia 2020] Caccia et al., "Language GANs Falling Short," ICLR 2020.
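
Temperature scaling in the softmax, as used by the T5-𝛼-MLE baseline, can be sketched as follows. The sketch assumes that the logits are divided by `alpha` before normalisation; the slides do not state the exact convention, and the function name and logit values are hypothetical.

```python
import math

def softmax_with_temperature(logits, alpha=1.0):
    """Softmax over logits / alpha (assumed convention: alpha < 1 sharpens)."""
    scaled = [l / alpha for l in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # made-up next-token scores
p_default = softmax_with_temperature(logits, alpha=1.0)
p_sharp = softmax_with_temperature(logits, alpha=0.5)
```

Under this convention a smaller `alpha` concentrates probability on the highest-scoring token, while a larger one flattens the distribution.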

- 16. Experimental Setup – (2)
  - 3) Baselines
    - T5-SSMBA [Ng 2020]: Generates additional examples by denoising and reconstructing target sentences with a masked language model.
    - T5-WordDropout Contrastive [Yang 2019]: Generates negative examples by removing the most frequent word from the target sentence.
    - T5-R3f [Aghajanyan 2021]: Adds Gaussian noise and enforces a consistency loss.
  - [Ng 2020] Ng et al., "SSMBA: Self-Supervised Manifold Based Data Augmentation for Improving Out-of-Domain Robustness," EMNLP 2020.
  - [Yang 2019] Yang et al., "Reducing Word Omission Errors in Neural Machine Translation: A Contrastive Learning Approach," ACL 2019.
  - [Aghajanyan 2021] Aghajanyan et al., "Better Fine-Tuning by Reducing Representational Collapse," ICLR 2021.

- 17. Experimental Result – (1): Machine Translation – WMT’16 RO-EN

| Method | BLEU |
| --- | --- |
| T5-MLE | 32.43 |
| T5-𝛼-MLE | 32.14 |
| T5-MLE-contrastive | 32.03 |
| T5-SSMBA | 32.81 |
| T5-WordDropout Contrastive | 32.44 |
| T5-CLAPS (Ours) | 33.96 |

- 18. Experimental Result – (2): Question Generation – SQuAD

| Method | BLEU | F1 | EM |
| --- | --- | --- | --- |
| T5-MLE | 21.00 | 67.64 | 55.91 |
| T5-𝛼-MLE | 20.50 | 68.04 | 56.30 |
| T5-MLE-contrastive | 20.91 | 67.32 | 55.25 |
| T5-SSMBA | 21.07 | 68.47 | 56.37 |
| T5-WordDropout Contrastive | 21.19 | 68.16 | 56.41 |
| T5-CLAPS (Ours) | 21.55 | 69.01 | 57.06 |

- 19. Experimental Result – (3): Text Summarization – XSum

| Method | ROUGE-1 | ROUGE-2 | ROUGE-L |
| --- | --- | --- | --- |
| T5-MLE | 36.10 | 14.72 | 29.16 |
| T5-𝛼-MLE | 36.68 | 15.10 | 29.72 |
| T5-MLE-contrastive | 36.34 | 14.81 | 29.41 |
| T5-SSMBA | 36.58 | 14.81 | 29.79 |
| T5-WordDropout Contrastive | 36.88 | 15.11 | 29.68 |
| T5-CLAPS (Ours) | 37.89 | 15.78 | 30.59 |

- 20. Visualization of Sentence Embedding: The model learns to push the imposter away from the target sentence and to pull the distant target toward the source sentence.

- 21. Conclusion
  - We propose a contrastive learning framework for conditional sequence generation to mitigate the exposure bias problem.
  - With adversarial perturbation, we generate negative and positive pairs that are more difficult for the model to distinguish from the GT pair.
  - Results show that our method outperforms the T5 baselines across machine translation, question generation and summarization tasks.

- 22. Future Work
  - We will improve the quality of the imposter, which currently contains many grammatical errors.
  - Generating the imposter and the distant target still requires a large amount of labeled data, so we need to improve sample efficiency.

- 23. Thank you

