Cooperative VM Migration for a virtualized HPC Cluster with VMM-bypass I/O devices

- 1. Cooperative VM Migration for a virtualized HPC Cluster with VMM-bypass I/O devices
  - Ryousei Takano, Hidemoto Nakada, Takahiro Hirofuchi, Yoshio Tanaka, and Tomohiro Kudoh
  - Information Technology Research Institute, National Institute of Advanced Industrial Science and Technology (AIST), Japan
  - IEEE eScience 2012, Oct. 11, 2012, Chicago

- 2. Background
  - HPC cloud is a promising e-Science platform.
    - HPC users have begun to take an interest in cloud computing, e.g., Amazon EC2 Cluster Compute Instances.
  - Virtualization is a key technology.
    - Pro: It makes migration of computing elements easy.
      - VM migration is useful for achieving fault tolerance, server consolidation, etc.
    - Con: It introduces a large overhead, degrading I/O performance.
      - VMM-bypass I/O technologies, e.g., PCI passthrough and SR-IOV, can significantly mitigate the overhead.
  - However, VMM-bypass I/O makes it impossible to migrate a VM.

- 3. Contribution
  - Goal:
    - To realize VM migration and checkpoint/restart on a virtualized cluster with VMM-bypass I/O devices, e.g., VM migration on an InfiniBand cluster.
  - Contributions:
    - We propose cooperative VM migration based on the Symbiotic Virtualization (SymVirt) mechanism.
    - We demonstrate reactive/proactive fault-tolerant (FT) systems.
    - We show that postcopy migration helps to reduce the service downtime in the proactive FT system.

- 4. Agenda
  - Background and Motivation
  - SymVirt: Symbiotic Virtualization Mechanism
  - Experiment
  - Related Work
  - Conclusion and Future Work

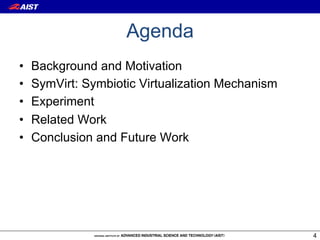

- 5. Motivating Observation
  - Performance evaluation of HPC cloud
    - (Para-)virtualized I/O incurs a large overhead.
    - PCI passthrough significantly mitigates the overhead.
  - [Figure: Execution time (seconds) of the NAS Parallel Benchmarks 3.3.1 (BT, CG, EP, FT, LU), class C, 64 processes, on BMM (IB), BMM (10GbE), KVM (IB), and KVM (virtio). Insets contrast KVM (IB), where the guest OS drives an IB QDR HCA with its physical driver, with KVM (virtio), where a guest driver goes through the VMM and its physical driver to a 10GbE NIC. Caption: The overhead of I/O virtualization on the NAS Parallel Benchmarks. BMM: Bare Metal Machine.]

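- 6. [Figure: A para-virtualized device (virtio_net) compared with two VMM-bypass I/O technologies, PCI passthrough and SR-IOV. With virtio_net, guest drivers in VM1 and VM2 reach the NIC through a vSwitch and the physical driver in the VMM. With PCI passthrough, the physical driver runs in the guest OS. With SR-IOV, physical drivers in VM1 and VM2 share one NIC through its embedded switch (VEB). A table compares the three by performance, device sharing, and VM migration, with the callout "We address this issue!" on VM migration.]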

- 7. Problem: VMM-bypass I/O technologies make VM migration and checkpoint/restart impossible.
  1. The VMM does not know when VMM-bypass I/O devices can be detached safely.
     - To perform such a migration without losing in-flight data, packet transmission to/from the VM must be stopped before the device is detached.
     - From the VMM, it is hard to know the communication status of an application inside the VM, especially when VMM-bypass I/O devices are used.
  2. The VMM cannot migrate the state of VMM-bypass I/O devices from the source to the destination.
     - With InfiniBand, this state includes Local IDs (LIDs), Queue Pair Numbers (QPNs), etc.

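- 8. Goal
  - [Figure: Two InfiniBand clusters, Cluster 1 and Cluster 2, hosting VM1 to VM4 and connected by Ethernet. A VM's InfiniBand device is detached, the VM is migrated to the other cluster over Ethernet, and a device is re-attached there.]
  - We need a mechanism that combines VM migration with PCI device hot-plugging.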

- 10. Cooperative VM Migration
  - Existing VM migration (black-box approach). Pro: portability.
    - The VMM alone handles global coordination, device setup, and migration.
  - Cooperative VM migration (gray-box approach). Pro: performance.
    - Global coordination and device setup are handled inside the guest OS alongside the application, which cooperates with the VMM; the VMM performs the migration, and the NIC is accessed through VMM-bypass I/O.

- 11. SymVirt: Symbiotic Virtualization
  - We focus on MPI programs.
  - We design and implement a symbiotic virtualization (SymVirt) mechanism.
    - It is a cross-layer mechanism between a VMM and an MPI runtime system.
  - [Figure: Inside the guest OS, global coordination and device setup run alongside the application and cooperate with the VMM through SymVirt; the VMM performs the migration, while the NIC is accessed through VMM-bypass I/O.]

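- 12. SymVirt: Overview
  - [Figure: Nodes #0 to #n each run a guest OS containing the MPI application, the MPI library, and a SymVirt coordinator, on top of a VMM that hosts a SymVirt agent. A SymVirt controller works with the cloud scheduler.]
  - A SymVirt round proceeds in five steps:
    1) The cloud scheduler triggers an event.
    2) The SymVirt coordinators perform global coordination.
    3) Each coordinator issues a SymVirt wait call.
    4) The SymVirt controller and agents do something with the VMs, e.g., migration or checkpointing.
    5) A SymVirt signal call resumes the guests.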
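
The five steps above can be sketched as a single-process driver that makes the ordering explicit: the controller acts only after every guest has entered SymVirt wait. This is a minimal sketch under stated assumptions; `Node`, `run_symvirt_round`, and the state strings are hypothetical names, not the SymVirt API, and the real system spreads these steps across the cloud scheduler, the guests, and the VMMs on separate hosts.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    """Hypothetical stand-in for one VM (guest coordinator plus VMM agent)."""
    name: str
    state: str = "running"   # running -> coordinated -> waiting -> running
    log: List[str] = field(default_factory=list)


def run_symvirt_round(nodes: List[Node], action: Callable[[Node], None]) -> List[str]:
    """Drive one trigger/coordinate/wait/act/signal round (hypothetical model)."""
    events: List[str] = []
    # 1) The cloud scheduler triggers an event, e.g., a failure prediction.
    events.append("trigger")
    # 2) Coordinators agree on a consistent global state (no messages in flight).
    for n in nodes:
        n.state = "coordinated"
    events.append("coordinated")
    # 3) Every guest issues a SymVirt wait call and blocks.
    for n in nodes:
        n.state = "waiting"
    events.append("all waiting")
    # 4) The controller acts only once all guests are blocked.
    if not all(n.state == "waiting" for n in nodes):
        raise RuntimeError("some guest has not entered SymVirt wait")
    for n in nodes:
        action(n)
    events.append("action done")
    # 5) SymVirt signal resumes every guest.
    for n in nodes:
        n.state = "running"
    events.append("signaled")
    return events
```

For example, `run_symvirt_round([Node("vm0"), Node("vm1")], lambda n: n.log.append("checkpoint"))` records a checkpoint action on both nodes between the wait and the signal.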

- 13. SymVirt wait and signal calls
  - SymVirt provides a simple guest OS-to-VMM communication mechanism.
  - When the SymVirt coordinator issues a SymVirt wait call, the guest OS is blocked until a SymVirt signal call is issued.
  - In the meantime, the SymVirt agent controls the VM via the VMM monitor interface.
  - [Figure: Timeline of a SymVirt round. In guest OS mode, the SymVirt coordinator confirms the application's state and issues SymVirt wait. In VMM mode, the SymVirt controller/agent detaches the device, migrates the VM, re-attaches the device, and then issues SymVirt signal. Back in guest OS mode, link-up is performed and confirmed.]
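
The blocking semantics of the wait and signal calls can be modeled with two `threading.Event` objects. This is only a sketch: in SymVirt the wait is a VMCALL that traps into the VMM and the signal is a monitor command, whereas here the guest and the VMM-side agent are two Python threads in one process, and `SymVirtChannel` and `demo` are hypothetical names.

```python
import threading
from typing import List


class SymVirtChannel:
    """Hypothetical model of one guest's SymVirt wait/signal pair."""

    def __init__(self) -> None:
        self._waiting = threading.Event()  # set once the guest is blocked in wait()
        self._resume = threading.Event()   # set by signal()

    def wait(self) -> None:
        """Guest side: announce the wait, then block until signaled."""
        self._resume.clear()
        self._waiting.set()
        self._resume.wait()
        self._waiting.clear()

    def wait_until_guest_blocked(self, timeout: float = 5.0) -> bool:
        """VMM side: True once the guest has entered wait()."""
        return self._waiting.wait(timeout)

    def signal(self) -> None:
        """VMM side: release the blocked guest."""
        self._resume.set()


def demo() -> List[str]:
    log: List[str] = []
    ch = SymVirtChannel()

    def guest() -> None:
        log.append("guest: confirm, SymVirt wait")
        ch.wait()
        log.append("guest: resumed, link-up")

    t = threading.Thread(target=guest)
    t.start()
    if not ch.wait_until_guest_blocked():
        raise RuntimeError("guest never entered SymVirt wait")
    # VMM mode: the guest is blocked, so the agent may touch its devices.
    for step in ("detach", "migration", "re-attach"):
        log.append("agent: " + step)
    ch.signal()
    t.join()
    return log
```

Because the agent starts only after the guest has announced its wait, and the guest resumes only after the signal, `demo()` always logs the guest's confirm first, then detach, migration, and re-attach, and finally the guest's link-up.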

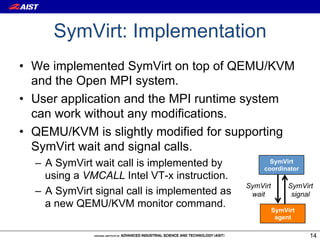

- 14. SymVirt: Implementation
  - We implemented SymVirt on top of QEMU/KVM and the Open MPI system.
  - The user application and the MPI runtime system can work without any modifications.
  - QEMU/KVM is slightly modified to support the SymVirt wait and signal calls.
    - A SymVirt wait call is implemented by the SymVirt coordinator using the Intel VT-x VMCALL instruction.
    - A SymVirt signal call is implemented as a new QEMU/KVM monitor command, issued by the SymVirt agent.
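
The agent side of a signal can be sketched as a client of QEMU's monitor. The sketch assumes the command is reachable through QMP, the JSON monitor protocol, under the hypothetical name `symvirt-signal`; the slide does not say whether the new command is a QMP or a human-monitor (HMP) command, nor what it is called. The QMP exchange itself (read the greeting, send `qmp_capabilities`, then `execute` commands and skip asynchronous `event` messages) is the standard protocol. Separate reader and writer file objects are used because a single read/write text wrapper can discard already-buffered input when it is written to.

```python
import json
import socket
from typing import Any, Dict, Optional


class QMPClient:
    """Minimal QMP client over a connected stream socket (sketch)."""

    def __init__(self, sock: socket.socket) -> None:
        self._r = sock.makefile("r", encoding="utf-8", newline="\n")
        self._w = sock.makefile("w", encoding="utf-8", newline="\n")
        greeting = self._read()
        if "QMP" not in greeting:
            raise RuntimeError("not a QMP greeting: %r" % greeting)
        self.execute("qmp_capabilities")  # leave capabilities-negotiation mode

    def _read(self) -> Dict[str, Any]:
        line = self._r.readline()
        if not line:
            raise ConnectionError("monitor closed the connection")
        return json.loads(line)

    def execute(self, command: str, arguments: Optional[Dict[str, Any]] = None) -> Any:
        msg: Dict[str, Any] = {"execute": command}
        if arguments:
            msg["arguments"] = arguments
        self._w.write(json.dumps(msg) + "\n")
        self._w.flush()
        while True:
            reply = self._read()
            if "event" in reply:  # asynchronous events may precede the reply
                continue
            if "error" in reply:
                raise RuntimeError(reply["error"].get("desc", "QMP error"))
            return reply.get("return")


def symvirt_signal(client: QMPClient) -> None:
    # "symvirt-signal" is a HYPOTHETICAL command name used for illustration.
    client.execute("symvirt-signal")
```

Against a real QEMU started with a QMP socket (e.g., `-qmp unix:/tmp/vm0-qmp.sock,server,nowait`, with a hypothetical path), the agent would connect an `AF_UNIX` socket to that path and pass it to `QMPClient`.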

- 15. SymVirt: Implementation (cont’d)
  - The SymVirt coordinator relies heavily on the Open MPI checkpoint/restart (C/R) framework.
    - The global coordination of SymVirt is the same as the framework's coordination protocol for MPI programs.
    - SymVirt executes VM-level migration or C/R instead of process-level C/R using the BLCR system.
    - SymVirt does not need to handle the changes in LIDs and QPNs after a migration, because Open MPI's BTL modules are reconstructed and connections are re-established in the continue or restart phase.
  - BTL: Point-to-Point Byte Transfer Layer
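
Why stale LIDs and QPNs do no harm can be illustrated with a toy connection table. In this sketch all names are hypothetical and it is not Open MPI's BTL code: each process caches its peers' (LID, QPN) addresses, a migration tears every table down, and the continue phase re-exchanges current addresses before any message is sent, so no process ever uses a pre-migration address.

```python
from typing import Dict, List, Tuple

Address = Tuple[int, int]  # (LID, QPN) of an InfiniBand endpoint


class ToyBTL:
    """Hypothetical per-process connection table (not Open MPI's BTL code)."""

    def __init__(self, rank: int, address: Address) -> None:
        self.rank = rank
        self.address = address
        self.peers: Dict[int, Address] = {}

    def teardown(self) -> None:
        # Drop all connections before the HCA is detached.
        self.peers.clear()

    def exchange(self, all_btls: List["ToyBTL"]) -> None:
        # Continue/restart phase: learn every peer's *current* address.
        self.peers = {b.rank: b.address for b in all_btls if b.rank != self.rank}

    def send_target(self, peer: int) -> Address:
        if peer not in self.peers:
            raise RuntimeError("connection to rank %d not re-established" % peer)
        return self.peers[peer]


def migrate(btls: List[ToyBTL], rank: int, new_address: Address) -> None:
    """Move one rank (btls is indexed by rank); the new HCA has a new LID and QPN."""
    for b in btls:
        b.teardown()
    btls[rank].address = new_address
    for b in btls:  # the continue phase rebuilds every table
        b.exchange(btls)
```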

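- 16. SymVirt: Implementation (cont’d)
  - The SymVirt controller and agent are written in Python. The controller script on this slide, reassembled from its two columns, detaches a passthrough device (tag `vf0`), migrates the VM with postcopy from `migrate_from` to `migrate_to`, and re-attaches the device (PCI ID `04:00.0`) at the destination.

```python
import symvirt

# migrate_from, migrate_to, and migrate_port are defined elsewhere in the
# original script; migrate_to[0] is used as the destination host name.
agent_list = [migrate_from]
ctl = symvirt.Controller(agent_list)

# device detach: wait for the guests to enter SymVirt wait, hot-remove, resume
ctl.wait_all()
kwargs = {'tag': 'vf0'}
ctl.device_detach(**kwargs)
ctl.signal()

# vm migration: block the guests again and migrate (postcopy) to the destination
ctl.wait_all()
kwargs = {'postcopy': True,
          'uri': 'tcp:%s:%d' % (migrate_to[0], migrate_port)}
ctl.migrate(**kwargs)
ctl.remove_agent(migrate_from)

# device attach: switch to the agent on the destination host and hot-add
ctl.append_agent(migrate_to)
ctl.wait_all()
kwargs = {'pci_id': '04:00.0', 'tag': 'vf0'}
ctl.device_attach(**kwargs)
ctl.signal()

ctl.close()
```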
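
Because the `symvirt` module is not generally available, the controller script's call sequence can be rehearsed against a stand-in. `DryRunController` below is hypothetical: it mirrors only the method names used on the slide, records each call, and enforces one assumed invariant, namely that device and migration operations are issued only between `wait_all()` and the matching `signal()`. The agent addresses in the rehearsal are placeholders.

```python
from typing import Any, Dict, List, Tuple


class DryRunController:
    """Hypothetical stand-in for symvirt.Controller that only records calls."""

    def __init__(self, agents: List[Any]) -> None:
        self.agents = list(agents)
        self.calls: List[Tuple[str, Dict[str, Any]]] = []
        self._guests_waiting = False

    def _record(self, name: str, needs_wait: bool = False, **kwargs: Any) -> None:
        if needs_wait and not self._guests_waiting:
            raise RuntimeError("%s issued while guests are running" % name)
        self.calls.append((name, kwargs))

    def append_agent(self, agent: Any) -> None:
        self.agents.append(agent)
        self._record("append_agent")

    def remove_agent(self, agent: Any) -> None:
        self.agents.remove(agent)
        self._record("remove_agent")

    def wait_all(self) -> None:
        self._guests_waiting = True  # assume every guest reached SymVirt wait
        self._record("wait_all")

    def signal(self) -> None:
        self._record("signal", needs_wait=True)
        self._guests_waiting = False

    def device_detach(self, **kwargs: Any) -> None:
        self._record("device_detach", needs_wait=True, **kwargs)

    def device_attach(self, **kwargs: Any) -> None:
        self._record("device_attach", needs_wait=True, **kwargs)

    def migrate(self, **kwargs: Any) -> None:
        self._record("migrate", needs_wait=True, **kwargs)

    def close(self) -> None:
        self._record("close")


def rehearse(migrate_from: Any, migrate_to: Any, migrate_port: int) -> DryRunController:
    """Replay the slide's sequence against the stand-in."""
    ctl = DryRunController([migrate_from])
    ctl.wait_all()
    ctl.device_detach(tag="vf0")
    ctl.signal()
    ctl.wait_all()
    ctl.migrate(postcopy=True, uri="tcp:%s:%d" % (migrate_to[0], migrate_port))
    ctl.remove_agent(migrate_from)
    ctl.append_agent(migrate_to)
    ctl.wait_all()
    ctl.device_attach(pci_id="04:00.0", tag="vf0")
    ctl.signal()
    ctl.close()
    return ctl
```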

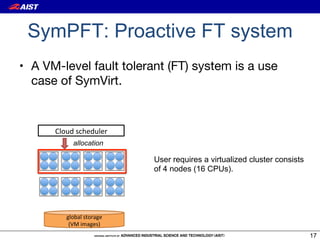

- 17. SymPFT: Proactive FT system
  - A VM-level fault-tolerant (FT) system is a use case of SymVirt.
  - [Figure: A user requests a virtualized cluster consisting of 4 nodes (16 CPUs); the cloud scheduler allocates the VMs, whose images are kept in global storage.]

- 18. SymPFT: Proactive FT system
  - A VM-level fault-tolerant (FT) system is a use case of SymVirt.
  - A VM is migrated from an "unhealthy" node to a "healthy" node before the node crashes.
  - [Figure: On failure prediction, the cloud scheduler re-allocates resources and the VM is migrated away from the failing node; VM images are kept in global storage.]
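
The scheduler-side decision in SymPFT can be sketched as choosing a healthy destination for each VM on a node predicted to fail. The failure-prediction input (`predicted_failures`) and the first-free-spare policy below are assumptions for illustration; the slides do not specify how prediction or placement is done. The one-VM-per-host constraint follows the experimental setting.

```python
from typing import Dict, List, Set, Tuple


def plan_migrations(
    placement: Dict[str, str],      # VM name -> host name
    hosts: List[str],
    predicted_failures: Set[str],   # hosts flagged "unhealthy" (hypothetical input)
) -> List[Tuple[str, str, str]]:
    """Return (vm, source, destination) moves off unhealthy hosts.

    Assumes one VM per host, so only idle, healthy hosts are valid targets.
    """
    busy = set(placement.values())
    spares = [h for h in hosts if h not in busy and h not in predicted_failures]
    moves: List[Tuple[str, str, str]] = []
    for vm, src in sorted(placement.items()):
        if src in predicted_failures:
            if not spares:
                raise RuntimeError("no healthy spare host for %s" % vm)
            moves.append((vm, src, spares.pop(0)))
    return moves
```

Each returned move would then drive one detach, migrate, and re-attach sequence like the controller script for slide 16.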

- 19. Experiment

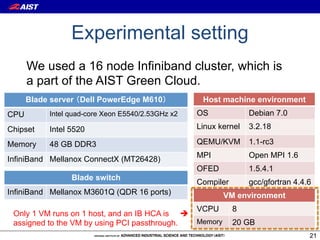

- 20. Experiment
  - The overhead of SymPFT
    - We used 8 VMs on an InfiniBand cluster.
    - We migrated a VM once during each benchmark run.
  - Two benchmark programs written in MPI
    - memtest: a simple memory-intensive benchmark
    - NAS Parallel Benchmarks (NPB) version 3.3.1
  - Overhead reduction using postcopy migration

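- 21. Experimental setting
  - We used a 16-node InfiniBand cluster, which is part of the AIST Green Cloud.
  - Only one VM runs on each host, and an IB HCA is assigned to the VM using PCI passthrough.

| Category | Item | Specification |
|---|---|---|
| Blade server | Model | Dell PowerEdge M610 |
| Blade server | CPU | Intel quad-core Xeon E5540/2.53GHz x2 |
| Blade server | Chipset | Intel 5520 |
| Blade server | Memory | 48 GB DDR3 |
| Blade server | InfiniBand | Mellanox ConnectX (MT26428) |
| Blade switch | InfiniBand | Mellanox M3601Q (QDR 16 ports) |
| Host machine environment | OS | Debian 7.0 |
| Host machine environment | Linux kernel | 3.2.18 |
| Host machine environment | QEMU/KVM | 1.1-rc3 |
| Host machine environment | MPI | Open MPI 1.6 |
| Host machine environment | OFED | 1.5.4.1 |
| Host machine environment | Compiler | gcc/gfortran 4.4.6 |
| VM environment | VCPU | 8 |
| VM environment | Memory | 20 GB |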

- 22. Result: memtest
  - The migration time depends on the memory footprint.
    - The migration throughput is less than 3 Gbps.
  - Both the hotplug and link-up times are approximately constant.
    - The link-up time is a non-negligible overhead (cf. Ethernet).
  - [Figure: Stacked time (seconds) of migration, hotplug, and link-up for memory footprints of 2, 4, 8, and 16 GB. Link-up takes about 28.5 s in every case, hotplug between 11.3 and 14.6 s, and migration between 35.9 and 53.7 s, growing with the footprint.]
  - This result is not included in our proceedings paper.

- 23. Result: NAS Parallel Benchmarks
  - There is no overhead during normal operation.
  - The overhead is proportional to the memory footprint.
  - [Figure: Execution time (seconds) of BT, CG, FT, and LU for the baseline, precopy, and postcopy cases, broken down into application, hotplug, link-up, and precopy or postcopy migration time. The chart annotates migration overheads of +97 s, +103 s, +105 s, and +299 s.]

| Benchmark | BT | CG | FT | LU |
|---|---|---|---|---|
| Transferred memory size during VM migration [MB] | 4417 | 3394 | 15678 | 2348 |
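
A back-of-the-envelope check relates the transferred memory sizes to migration time. Assuming that the sub-3 Gbps migration throughput observed with memtest also applies here and that MB means 10^6 bytes (both are assumptions), each benchmark's transfer needs at least the time computed below. These are lower bounds derived from the slide's numbers, not measured values; FT, with by far the largest transfer, needs the longest.

```python
# Transferred memory sizes during VM migration [MB], from the slide.
TRANSFERRED_MB = {"BT": 4417, "CG": 3394, "FT": 15678, "LU": 2348}


def min_transfer_seconds(megabytes: float, gbps: float = 3.0) -> float:
    """Lower bound on transfer time given a throughput ceiling of `gbps` (10^9 bit/s)."""
    bits = megabytes * 1e6 * 8
    return bits / (gbps * 1e9)


for name, mb in TRANSFERRED_MB.items():
    print("%s: >= %.1f s" % (name, min_transfer_seconds(mb)))
# BT: >= 11.8 s
# CG: >= 9.1 s
# FT: >= 41.8 s
# LU: >= 6.3 s
```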

- 24. Integration with postcopy migration
  - In contrast to precopy migration, postcopy migration transfers memory pages on demand after execution has been switched to the destination node.
  - Postcopy migration can hide the hot-add and link-up times by overlapping them with the migration.
  - We used Yabusame, our postcopy migration implementation for QEMU/KVM.
  - [Figure: With precopy, SymPFT runs a) hot-del, b) migration, c) hot-add, and d) link-up one after another; with postcopy, these phases overlap, mitigating the SymPFT overhead.]
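
A simple timeline model shows why the overlap helps. It is an idealized sketch, not a measurement: it assumes that with postcopy the hot-add and link-up run entirely concurrently with the page transfer, ignores the stop-and-copy switch time and the page-fault slowdown during postcopy, and uses illustrative phase durations that are not taken from the slides.

```python
from dataclasses import dataclass


@dataclass
class Phases:
    """Durations (seconds) of the SymPFT phases."""
    hot_del: float
    migration: float
    hot_add: float
    link_up: float


def precopy_overhead(p: Phases) -> float:
    # a) hot-del, b) migration, c) hot-add, d) link-up run back to back.
    return p.hot_del + p.migration + p.hot_add + p.link_up


def postcopy_overhead(p: Phases) -> float:
    # Idealized: once execution moves to the destination, hot-add and link-up
    # proceed while the remaining pages are still being transferred.
    return p.hot_del + max(p.migration, p.hot_add + p.link_up)


example = Phases(hot_del=5.0, migration=40.0, hot_add=8.0, link_up=28.0)  # illustrative only
print(precopy_overhead(example), postcopy_overhead(example))  # 81.0 45.0
```

In this model the saving is the shorter of the migration time and the combined hot-add and link-up time, which is why a long link-up is exactly the cost that postcopy can hide.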

- 25. Result: Effect of postcopy migration
  - Postcopy migration can hide the overhead of hotplug and link-up by overlapping them with migration.
  - [Figure: Execution time (seconds) of BT, CG, FT, and LU for the baseline, precopy, and postcopy cases, broken down into application, hotplug, link-up, and precopy or postcopy migration time. The chart annotates the postcopy results with -15 %, -13 s, -14 %, and -53 %. The transferred memory sizes are the same as in the NAS Parallel Benchmarks result (BT 4417 MB, CG 3394 MB, FT 15678 MB, LU 2348 MB).]

- 26. Related Work
  - Several VM-level reactive and proactive FT systems have been proposed for HPC systems.
    - E.g., VNsnap: distributed snapshots of VMs
      - Coordination is performed by snooping the traffic of a software switch outside the VMs.
    - They do not support VMM-bypass I/O devices.
  - Mercury: a self-virtualization technique
    - An OS can turn virtualization on and off on demand.
    - It lacks a coordination mechanism among distributed VMMs.
  - SymCall: an upcall mechanism from a VMM to the guest OS, using a nested VM exit
    - In contrast, SymVirt is a simple hypercall mechanism from a guest OS to the VMM, designed to work in cooperation with a cloud scheduler.

- 27. Conclusion and Future Work

- 28. Conclusion
  - We have proposed a cooperative VM migration mechanism that enables us to migrate VMs with VMM-bypass I/O devices, using a simple guest OS-to-VMM communication mechanism called SymVirt.
  - Using the proposed mechanism, we demonstrated a proactive FT system on a virtualized InfiniBand cluster.
  - We also confirmed that postcopy migration helps to reduce the downtime in the proactive FT system.
  - SymVirt can be useful not only for fault tolerance but also for load balancing and server consolidation.

- 29. Future Work
  - Interconnect-transparent migration, called “Ninja migration”
    - We have submitted another conference paper on it.
  - Overhead mitigation of SymVirt
    - The very long link-up time
    - Better integration with postcopy migration
  - A generic communication layer supporting cooperative VM migration
    - It is independent of any particular MPI runtime system.

- 30. Thanks for your attention!
  - This work was partly supported by JSPS KAKENHI 24700040 and ARGO GRAPHICS, Inc.

![Slide 5: Motivating Observation](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/85/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-5-320.jpg)

![Slide 16: SymVirt: Implementation (cont’d)](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/85/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-16-320.jpg)

![Slide 22: Result: memtest](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/85/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-22-320.jpg)

![Slide 23: Result: NAS Parallel Benchmarks](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/85/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-23-320.jpg)

![Slide 25: Result: Effect of postcopy migration](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/85/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-25-320.jpg)