Crowdsourcing Linked Data Quality Assessment

- 1. Crowdsourcing Linked Data Quality Assessment Maribel Acosta, Amrapali Zaveri, Elena Simperl, Dimitris Kontokostas, Sören Auer and Jens Lehmann @ISWC2013 KIT – University of the State of Baden-Wuerttemberg and National Research Center of the Helmholtz Association www.kit.edu

- 2. Motivation: Varying quality of Linked Data sources. Some quality issues require a certain interpretation that humans can easily perform, e.g. dbpedia:Dave_Dobbyn dbprop:dateOfBirth “3”. Solution: include human verification in the process of LD quality assessment. Direct application: detecting patterns in errors may help identify (and correct) the extraction mechanisms. 28.10.2013 Acosta et al. – Crowdsourcing Linked Data Quality Assessment Institut für Angewandte Informatik und Formale Beschreibungsverfahren (AIFB)

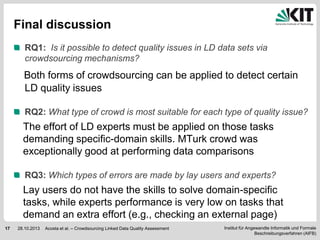

- 3. Research questions: RQ1: Is it possible to detect quality issues in LD data sets via crowdsourcing mechanisms? RQ2: What type of crowd is most suitable for each type of quality issue? RQ3: Which types of errors are made by lay users and experts when assessing RDF triples?

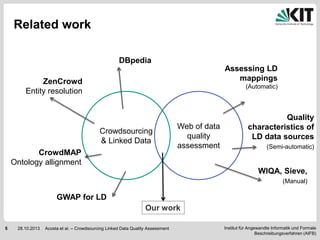

- 4. Related work: DBpedia: assessing LD mappings; ZenCrowd: entity resolution; (Automatic) Crowdsourcing & Linked Data; CrowdMAP: ontology alignment; Web of data quality assessment; quality characteristics of LD data sources; (Semi-automatic) WIQA, Sieve; (Manual) GWAP for LD; Our work

- 5. OUR APPROACH

- 6. Methodology: each triple {s p o .} of the dataset is classified either as correct or as incorrect together with its quality issue. Steps to implement the methodology: (1) selecting LD quality issues to crowdsource; (2) selecting the appropriate crowdsourcing approaches; (3) designing and generating the interfaces to present the data to the crowd.

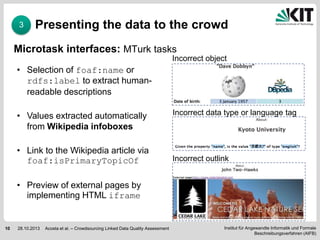

- 7. Step 1: Selecting LD quality issues to crowdsource. Three categories of quality problems occur in DBpedia [Zaveri2013] and can be crowdsourced: • Incorrect object. Example: dbpedia:Dave_Dobbyn dbprop:dateOfBirth “3”. • Incorrect data type or language tags. Example: dbpedia:Torishima_Izu_Islands foaf:name “ ”@en. • Incorrect link to “external Web pages”. Example: dbpedia:John-Two-Hawks dbpedia-owl:wikiPageExternalLink <http://cedarlakedvd.com/>
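The outcome of assessing a triple (correct, or incorrect together with one of the three issue categories above) can be captured in a small data model. The following is a minimal Python sketch; the names `QualityIssue`, `Triple` and `Assessment` are hypothetical and are not taken from TripleCheckMate or the authors' code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class QualityIssue(Enum):
    """The three crowdsourced issue categories (names are hypothetical)."""
    INCORRECT_OBJECT = "incorrect object"
    INCORRECT_DATATYPE_OR_LANGUAGE_TAG = "incorrect data type or language tag"
    INCORRECT_EXTERNAL_LINK = "incorrect link to external Web pages"


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str  # object serialized as a string (literal or IRI)


@dataclass(frozen=True)
class Assessment:
    """A triple judged correct, or incorrect plus exactly one quality issue."""
    triple: Triple
    correct: bool
    issue: Optional[QualityIssue] = None

    def __post_init__(self) -> None:
        # An incorrect triple must name its issue; a correct one must not.
        if self.correct == (self.issue is not None):
            raise ValueError("issue must be set exactly when correct is False")


dobbyn = Triple("dbpedia:Dave_Dobbyn", "dbprop:dateOfBirth", '"3"')
flagged = Assessment(dobbyn, correct=False, issue=QualityIssue.INCORRECT_OBJECT)
print(flagged.issue.value)  # incorrect object
```

The validation in `__post_init__` enforces the two-way split of the methodology: there is no such thing as an "incorrect" verdict without a stated issue.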

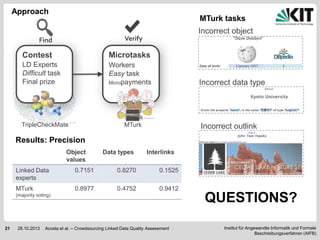

- 8. Step 2: Selecting appropriate crowdsourcing approaches (1). Find stage: contest • LD experts • difficult task • final prize • TripleCheckMate [Kontoskostas2013]. Verify stage: microtasks • workers • easy task • micropayments • MTurk http://mturk.com. Adapted from [Bernstein2010].
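The division of labor between the two stages can be sketched as follows: triples flagged by LD experts in the Find stage are each sent to several workers in the Verify stage, and the workers' answers are aggregated. This minimal Python sketch assumes binary labels ("correct"/"incorrect") and simple majority voting over five assignments per triple, matching the n=5 setting used in the experiments; the function names and triple IDs are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set


def majority_vote(votes: List[str]) -> str:
    """Return the label given by a strict majority of the votes."""
    label, count = Counter(votes).most_common(1)[0]
    if 2 * count <= len(votes):
        raise ValueError(f"no strict majority in {votes}")
    return label


def verify_stage(
    flagged_in_find: Set[str],
    worker_votes: Dict[str, List[str]],
    n: int = 5,
) -> Dict[str, str]:
    """Aggregate n worker judgments for every triple flagged in the Find stage."""
    verdicts: Dict[str, str] = {}
    for triple_id in sorted(flagged_in_find):
        votes = worker_votes[triple_id]
        if len(votes) != n:
            raise ValueError(f"{triple_id}: expected {n} votes, got {len(votes)}")
        verdicts[triple_id] = majority_vote(votes)
    return verdicts


# Hypothetical example: two triples flagged by experts, five workers each.
flagged = {"t1", "t2"}
votes = {
    "t1": ["incorrect", "incorrect", "correct", "incorrect", "correct"],
    "t2": ["correct", "correct", "correct", "incorrect", "correct"],
}
print(verify_stage(flagged, votes))  # {'t1': 'incorrect', 't2': 'correct'}
```

With two labels and an odd number of assignments such as 5, a strict majority always exists, so `majority_vote` never raises in that setting.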

- 9. Step 3: Presenting the data to the crowd. Microtask interfaces (MTurk tasks): Incorrect object: • selection of foaf:name or rdfs:label to extract human-readable descriptions • values extracted automatically from Wikipedia infoboxes • link to the Wikipedia article via foaf:isPrimaryTopicOf. Incorrect data type or language tag. Incorrect outlink: • preview of external pages embedded in an HTML iframe.
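A minimal Python sketch of how such task content might be assembled from a resource's properties. Assumptions: properties arrive as a dict from prefixed property names to value lists, foaf:name is preferred over rdfs:label when both exist, the iframe dimensions are arbitrary, and the example values are hypothetical; none of this is taken from the authors' MTurk templates.

```python
from html import escape
from typing import Dict, List, Optional

# Property names as used on the slide; prefixes are kept unexpanded for brevity.
LABEL_PROPERTIES = ("foaf:name", "rdfs:label")
PRIMARY_TOPIC_OF = "foaf:isPrimaryTopicOf"


def human_readable_label(props: Dict[str, List[str]], fallback: str) -> str:
    """Pick a display label, trying foaf:name first and then rdfs:label."""
    for prop in LABEL_PROPERTIES:
        values = props.get(prop)
        if values:
            return values[0]
    return fallback


def wikipedia_article(props: Dict[str, List[str]]) -> Optional[str]:
    """Return the Wikipedia article linked via foaf:isPrimaryTopicOf, if any."""
    values = props.get(PRIMARY_TOPIC_OF)
    return values[0] if values else None


def outlink_preview(url: str, width: int = 800, height: int = 600) -> str:
    """Render an HTML iframe previewing an external page; the URL is escaped."""
    src = escape(url, quote=True)
    return f'<iframe src="{src}" width="{width}" height="{height}"></iframe>'


# Hypothetical property values for a resource shown in an incorrect-object task.
props = {
    "rdfs:label": ["Dave Dobbyn"],
    "foaf:isPrimaryTopicOf": ["http://en.wikipedia.org/wiki/Dave_Dobbyn"],
}
print(human_readable_label(props, fallback="dbpedia:Dave_Dobbyn"))  # Dave Dobbyn
print(wikipedia_article(props))
print(outlink_preview("http://example.org/page"))
```

Pages that send an `X-Frame-Options` header or a `frame-ancestors` Content-Security-Policy directive refuse to render inside an iframe, so a real interface would likely also need a plain link as a fallback.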

- 10. EXPERIMENTAL STUDY

- 11. Experimental design • Crowdsourcing approaches: • Find stage: contest with LD experts • Verify stage: microtasks (5 assignments) • Creation of a gold standard: • Two of the authors of this paper (MA, AZ) generated the gold standard for all the triples obtained from the contest • Each author evaluated the triples independently • Conflicts were resolved by mutual agreement • Metric: precision
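The gold-standard procedure (two independent annotations, conflicts settled by mutual agreement) can be sketched as below. This is a minimal Python illustration; the triple IDs, labels and function names are hypothetical, and the resolution step is simply passed in as a dict because the agreement itself was reached by the two annotators.

```python
from typing import Dict, List, Tuple


def merge_independent_annotations(
    first: Dict[str, str], second: Dict[str, str]
) -> Tuple[Dict[str, str], List[str]]:
    """Split two independent annotations into agreed labels and conflicts."""
    if first.keys() != second.keys():
        raise ValueError("both annotators must label the same triples")
    agreed: Dict[str, str] = {}
    conflicts: List[str] = []
    for triple_id in sorted(first):
        if first[triple_id] == second[triple_id]:
            agreed[triple_id] = first[triple_id]
        else:
            conflicts.append(triple_id)
    return agreed, conflicts


def build_gold_standard(
    agreed: Dict[str, str], resolved: Dict[str, str], conflicts: List[str]
) -> Dict[str, str]:
    """Add the labels settled by mutual agreement for every conflicting triple."""
    missing = [t for t in conflicts if t not in resolved]
    if missing:
        raise ValueError(f"unresolved conflicts: {missing}")
    gold = dict(agreed)
    gold.update({t: resolved[t] for t in conflicts})
    return gold


# Hypothetical labels from two annotators for the same three triples.
annotator_1 = {"t1": "incorrect", "t2": "correct", "t3": "incorrect"}
annotator_2 = {"t1": "incorrect", "t2": "incorrect", "t3": "incorrect"}
agreed, conflicts = merge_independent_annotations(annotator_1, annotator_2)
print(conflicts)  # ['t2']
gold = build_gold_standard(agreed, {"t2": "correct"}, conflicts)
print(gold)  # {'t1': 'incorrect', 't3': 'incorrect', 't2': 'correct'}
```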

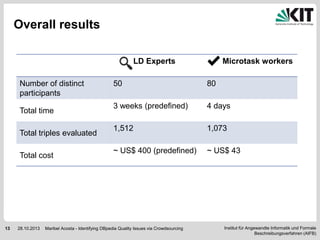

- 12. Overall results

| | LD Experts | Microtask workers |
|---|---|---|
| Number of distinct participants | 50 | 80 |
| Total time | 3 weeks (predefined) | 4 days |
| Total triples evaluated | 1,512 | 1,073 |
| Total cost | ~US$ 400 (predefined) | ~US$ 43 |

Maribel Acosta - Identifying DBpedia Quality Issues via Crowdsourcing
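As a rough back-of-the-envelope comparison derived from the overall results, the cost per evaluated triple is the total cost divided by the number of triples evaluated. This is only indicative: both costs are approximate, the expert cost was a predefined prize, and the two crowds worked on different stages (Find vs. Verify). The function name is hypothetical.

```python
# Approximate totals from the overall-results table: (cost in US$, triples evaluated).
RESULTS = {
    "LD experts": (400, 1512),
    "MTurk workers": (43, 1073),
}


def cost_per_triple(total_cost_usd: float, triples_evaluated: int) -> float:
    """Average cost of one triple judgment for a crowd."""
    return total_cost_usd / triples_evaluated


for crowd, (cost, triples) in RESULTS.items():
    print(f"{crowd}: ~US$ {cost_per_triple(cost, triples):.3f} per triple")
# LD experts: ~US$ 0.265 per triple
# MTurk workers: ~US$ 0.040 per triple
```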

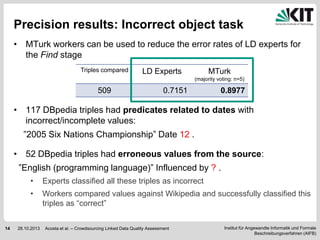

- 13. Precision results: Incorrect object task • MTurk workers can be used to reduce the error rate of the LD experts in the Find stage.

| Triples compared | LD Experts | MTurk (majority voting: n=5) |
|---|---|---|
| 509 | 0.7151 | 0.8977 |

• 117 DBpedia triples had predicates related to dates with incorrect/incomplete values, e.g. “2005 Six Nations Championship” Date 12 . • 52 DBpedia triples had erroneous values from the source, e.g. “English (programming language)” Influenced by ? . • Experts classified all these triples as incorrect; workers compared the values against Wikipedia and successfully classified these triples as “correct”.

- 14. Precision results: Incorrect data type task

| Triples compared | LD Experts | MTurk (majority voting: n=5) |
|---|---|---|
| 341 | 0.8270 | 0.4752 |

[Figure: number of triples per data type (Date, English, Millimetre, Nanometre, Number, Number with decimals, Second, Volt, Year, Not specified / URI), broken down into Experts TP, Experts FP, Crowd TP and Crowd FP]

- 15. Precision results: Incorrect link task

| Triples compared | Baseline | LD Experts | MTurk (majority voting: n=5) |
|---|---|---|---|
| 223 | 0.2598 | 0.1525 | 0.9412 |

• We analyzed the 189 misclassifications by the experts. [Chart: breakdown of these misclassifications into Freebase links, Wikipedia images and external links, with shares of 11%, 39% and 50%] • The 6% of triples misclassified by the workers correspond to pages in languages other than English.

- 16. Final discussion. RQ1: Is it possible to detect quality issues in LD data sets via crowdsourcing mechanisms? Both forms of crowdsourcing can be applied to detect certain LD quality issues. RQ2: What type of crowd is most suitable for each type of quality issue? The effort of LD experts should be devoted to tasks demanding domain-specific skills; the MTurk crowd was exceptionally good at performing data comparisons. RQ3: Which types of errors are made by lay users and experts? Lay users do not have the skills to solve domain-specific tasks, while the experts' performance is very low on tasks that demand extra effort (e.g., checking an external page).

- 17. CONCLUSIONS & FUTURE WORK

- 18. Conclusions & Future Work. A crowdsourcing methodology for LD quality assessment: • Find stage: LD experts • Verify stage: MTurk workers. Crowdsourcing approaches are feasible for detecting the studied quality issues. Application: detecting patterns in errors to fix the extraction mechanisms. Future work: • conducting new experiments (other quality issues and domains) • integrating the crowd into curation processes and tools.

- 19. References & Acknowledgements. [Bernstein2010] M. S. Bernstein, G. Little, R. C. Miller, B. Hartmann, M. S. Ackerman, D. R. Karger, D. Crowell, and K. Panovich. Soylent: a word processor with a crowd inside. In Proceedings of the 23rd annual ACM symposium on User interface software and technology, UIST ’10, pages 313–322, New York, NY, USA, 2010. ACM. [Kontoskostas2013] D. Kontokostas, A. Zaveri, S. Auer, and J. Lehmann. TripleCheckMate: A Tool for Crowdsourcing the Quality Assessment of Linked Data. Knowledge Engineering and the Semantic Web, 2013. [Zaveri2013] A. Zaveri, A. Rula, A. Maurino, R. Pietrobon, J. Lehmann, and S. Auer. Quality assessment methodologies for linked open data. Under review, http://www.semantic-web-journal.net/content/quality-assessmentmethodologies-linked-open-data.

- 20. Approach and results. Find stage: contest • LD experts • difficult task • final prize • TripleCheckMate. Verify stage: microtasks • workers • easy task • micropayments • MTurk tasks (incorrect object, incorrect data type, incorrect outlink).

| Precision | Object values | Data types | Interlinks |
|---|---|---|---|
| Linked Data experts | 0.7151 | 0.8270 | 0.1525 |
| MTurk (majority voting) | 0.8977 | 0.4752 | 0.9412 |

QUESTIONS?

Editor's Notes

- #4: As we know, the Linking Open Data cloud is a great source of data. However, the varying quality of Linked Data sets often poses serious problems for developers aiming to consume and integrate LD in their applications. Setting aside the factual flaws of the original sources, several quality issues are introduced during the RDFication process. Solution: include human verification in the process of LD quality assessment in order to detect the quality issues that cannot easily be detected by other means. Direct application: detecting patterns in errors may help identify (and correct) the extraction mechanisms in order

- #13: TP = a triple identified as “incorrect” by the crowd that is indeed incorrect. FP = a triple identified as “incorrect” by the crowd that was actually correct in the data set.
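With these definitions, precision is the share of triples flagged as “incorrect” that are indeed incorrect. As a consistency check against the incorrect link task, assuming the experts flagged all 223 compared triples and 189 of them were misclassifications (so TP = 34 and FP = 189), the formula reproduces the reported expert precision:

$$
\mathrm{precision} = \frac{TP}{TP + FP}, \qquad \frac{34}{34 + 189} = \frac{34}{223} \approx 0.1525
$$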
