Data mining with caret package

- 1. Data mining with caret package. Kai Xiao and Vivian Zhang @Supstat Inc.

- 2. Outline
  - Introduction of data mining and caret
  - Before model training: visualization, pre-processing, data splitting
  - Building model: model training and tuning, model performance, variable importance
  - Advanced topics: feature selection, parallel processing
  - Exercise

- 4. Introduction of caret

  The caret package (short for Classification And REgression Training) is a set of functions that attempt to streamline the process for creating predictive models. The package contains tools for:
  - data splitting
  - pre-processing
  - feature selection
  - model tuning using resampling
  - variable importance estimation

- 7. Visualizations

  The featurePlot function is a wrapper for different lattice plots to visualize the data. Two examples are a scatterplot matrix and a box plot:

      # Scatterplot matrix, with a key at the top
      featurePlot(x = iris[, 1:4],
                  y = iris$Species,
                  plot = "pairs",
                  auto.key = list(columns = 3))

      # Box plot, with a key at the top
      featurePlot(x = iris[, 1:4],
                  y = iris$Species,
                  plot = "box",
                  auto.key = list(columns = 3))

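- 9. Pre-processing: zero- and near zero-variance predictors

      data <- data.frame(x1 = rnorm(100),
                         x2 = runif(100),
                         x3 = rep(c(0, 1), times = c(2, 98)),
                         x4 = rep(3, length.out = 100))

      # Per-predictor metrics, including the zero-variance and near zero-variance flags
      nzv <- nearZeroVar(data, saveMetrics = TRUE)
      nzv

      # Column indices of the flagged predictors, which are then dropped
      nzv <- nearZeroVar(data)
      dataFilted <- data[, -nzv]
      head(dataFilted)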

- 11. Pre-processing: identifying linear dependencies

      set.seed(1)
      x1 <- rnorm(100)
      x2 <- x1 + rnorm(100, 0.1, 0.1)
      x3 <- x1 + rnorm(100, 1, 1)
      x4 <- x2 + x3   # exact linear combination of x2 and x3
      data <- data.frame(x1, x2, x3, x4)

      # Find the linear combinations and drop the columns suggested for removal
      comboInfo <- findLinearCombos(data)
      dataFilted <- data[, -comboInfo$remove]
      head(dataFilted)

- 15. Data splitting

  createDataPartition creates balanced splits of the data:

      set.seed(1)
      trainIndex <- createDataPartition(iris$Species, p = 0.8, list = FALSE, times = 1)
      head(trainIndex)
      irisTrain <- iris[trainIndex, ]
      irisTest <- iris[-trainIndex, ]
      summary(irisTest$Species)

  - createResample can be used to make simple bootstrap samples.
  - createFolds can be used to generate balanced cross-validation groupings from a set of data.
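The two helper functions named on this slide can be tried out on the same iris data. This is a minimal sketch, not part of the original slides; the object names `folds` and `boots` and the choices `k = 5` and `times = 3` are illustrative assumptions.

```r
library(caret)

set.seed(1)

# Five cross-validation folds, balanced by species; each list element
# holds the row indices that are held out in that fold
folds <- createFolds(iris$Species, k = 5, list = TRUE, returnTrain = FALSE)
sapply(folds, length)

# Three bootstrap samples (drawn with replacement), one per matrix column
boots <- createResample(iris$Species, times = 3, list = FALSE)
dim(boots)
```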

- 16. Model Training and Parameter Tuning

  The train function can be used to:
  - evaluate, using resampling, the effect of model tuning parameters on performance
  - choose the "optimal" model across these parameters
  - estimate model performance from a training set
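A minimal sketch of those three uses, assuming the built-in iris data and an rpart classification tree as stand-ins (the object name `fit`, the 5-fold setting and the `cp` grid are illustrative choices, not taken from this slide):

```r
library(caret)

set.seed(1)

# Assumption: iris stands in for the training set, rpart for the model
fit <- train(x = iris[, 1:4],
             y = iris$Species,
             method = "rpart",
             trControl = trainControl(method = "cv", number = 5),
             tuneGrid = data.frame(cp = seq(0.001, 0.2, length.out = 10)))

fit$results    # resampled performance for every candidate cp value
fit$bestTune   # the cp value chosen as "optimal"
```

The `results` table covers the first and third points (the effect of the tuning parameter and the resampled performance estimate), while `bestTune` reports the selected parameter value.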

- 19. Model Training and Parameter Tuning

  Define sets of model parameter values to evaluate:

      tunedf <- data.frame(.cp = seq(0.001, 0.2, length.out = 10))

- 20. Model Training and Parameter Tuning

  Define the type of resampling method:
  - k-fold cross-validation (once or repeated)
  - leave-one-out cross-validation
  - bootstrap (simple estimation or the 632 rule)

  For example, 10-fold cross-validation repeated 3 times:

      fitControl <- trainControl(method = "repeatedcv",
                                 number = 10,   # 10-fold cross-validation
                                 repeats = 3)   # repeated 3 times
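The other resampling schemes in the list are selected through the same `method` argument. The sketch below is an illustration only; the object names and the number of bootstrap resamples (25) are assumptions.

```r
library(caret)

# Single (non-repeated) 10-fold cross-validation
ctrlCv <- trainControl(method = "cv", number = 10)

# Leave-one-out cross-validation
ctrlLoo <- trainControl(method = "LOOCV")

# Simple bootstrap estimation with 25 resamples
ctrlBoot <- trainControl(method = "boot", number = 25)

# Bootstrap with the 632 rule
ctrlBoot632 <- trainControl(method = "boot632", number = 25)
```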

- 22. Model Training and Parameter Tuning

  Look at the final result:

      treemodel
      plot(treemodel)

- 23. The trainControl Function
  - method: the resampling method.
  - number and repeats: number controls the number of folds in K-fold cross-validation or the number of resampling iterations for bootstrapping and leave-group-out cross-validation; repeats applies only to repeated K-fold cross-validation and sets how many complete sets of folds to compute.
  - verboseIter: a logical for printing a training log.
  - returnData: a logical for saving the data into a slot called trainingData.
  - classProbs: a logical value determining whether class probabilities should be computed for held-out samples during resampling.
  - summaryFunction: a function to compute alternate performance summaries.
  - selectionFunction: a function to choose the optimal tuning parameters.
  - returnResamp: a character string containing one of the following values: "all", "final" or "none". This specifies how much of the resampled performance measures to save.

- 24. Alternate Performance Metrics

  Default performance metrics:
  - regression: RMSE and R²
  - classification: accuracy and Kappa

  Another built-in function, twoClassSummary, will compute the sensitivity, specificity and area under the ROC curve:

      fitControl <- trainControl(method = "repeatedcv",
                                 number = 10,
                                 repeats = 3,
                                 classProbs = TRUE,
                                 summaryFunction = twoClassSummary)

      treemodel <- train(x = trainData,
                         y = trainClass,
                         method = "rpart",
                         trControl = fitControl,
                         tuneGrid = tunedf,
                         metric = "ROC")
      treemodel

- 25. Extracting Predictions

  Predictions can be made from these objects as usual:

      # Predicted classes
      pre <- predict(treemodel, testData)

      # Predicted class probabilities
      pre <- predict(treemodel, testData, type = "prob")

- 26. Evaluating Test Sets

  caret also contains several functions that can be used to describe the performance of classification models:

      testPred <- predict(treemodel, testData)
      testPred.prob <- predict(treemodel, testData, type = "prob")
      postResample(testPred, testClass)
      confusionMatrix(testPred, testClass)
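To see what these two functions report without fitting a model first, they can be called on hand-written factors. The ten predicted and observed labels below are hypothetical values made up for illustration, not results from the slides.

```r
library(caret)

# Hypothetical observed and predicted classes for ten test samples
observed <- factor(c("pos", "pos", "pos", "pos", "neg",
                     "neg", "neg", "neg", "neg", "neg"),
                   levels = c("pos", "neg"))
predicted <- factor(c("pos", "pos", "pos", "neg", "neg",
                      "neg", "neg", "neg", "pos", "neg"),
                    levels = c("pos", "neg"))

# Accuracy and Kappa
postResample(pred = predicted, obs = observed)

# Cross-tabulation plus sensitivity, specificity and related statistics
cm <- confusionMatrix(data = predicted, reference = observed)
cm$table
cm$overall["Accuracy"]
```

Two of the ten hypothetical predictions disagree with the observed class, so the reported accuracy is 0.8.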

- 28. Exploring and Comparing Resampling Distributions

  Between-model comparison: let's build a neural network model (avNNet) and compare the performance of the two models.

      tunedf <- expand.grid(.decay = 0.1, .size = 1:8, .bag = TRUE)
      nnetmodel <- train(x = trainData,
                         y = trainClass,
                         method = "avNNet",
                         trControl = fitControl,
                         trace = FALSE,
                         linout = FALSE,
                         metric = "ROC",
                         tuneGrid = tunedf)
      nnetmodel

- 29. Exploring and Comparing Resampling Distributions

  Given these models, can we make statistical statements about their performance differences? To do this, we first collect the resampling results using resamples. We can compute the differences, then use a simple t-test to evaluate the null hypothesis that there is no difference between models.

      resamps <- resamples(list(tree = treemodel, nnet = nnetmodel))
      bwplot(resamps)
      densityplot(resamps, metric = "ROC")
      difValues <- diff(resamps)
      summary(difValues)
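The idea behind the t-test on differences can be illustrated in base R. The sketch below uses simulated ROC values (not real results) for two models evaluated on the same 30 resamples; the names `rocTree`, `rocNnet` and `difRoc` are hypothetical, and the sketch only shows the principle rather than reproducing caret's own output.

```r
set.seed(1)

# Simulated (not real) ROC values for two models on the same 30 resamples
rocTree <- runif(30, min = 0.70, max = 0.80)
rocNnet <- rocTree + rnorm(30, mean = 0.03, sd = 0.02)

# Per-resample differences, then a one-sample t-test of
# H0: the mean difference between the models is zero
difRoc <- rocTree - rocNnet
tt <- t.test(difRoc)
tt$estimate
tt$p.value
```

Because both models are scored on identical resamples, the differences are paired, which is why the test is applied to `difRoc` rather than to the two vectors separately.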

- 30. Variable importance evaluation

  Variable importance evaluation functions can be separated into two groups:
  - model-based approach
  - model-independent approach
    - For classification, ROC curve analysis is conducted on each predictor.
    - For regression, the relationship between each predictor and the outcome is evaluated.

  Both approaches applied to the tree model:

      # model-based approach
      treeimp <- varImp(treemodel)
      plot(treeimp)

      # model-independent approach
      RocImp <- varImp(treemodel, useModel = FALSE)
      plot(RocImp)

      # or
      RocImp <- filterVarImp(x = trainData, y = trainClass)
      plot(RocImp)
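The model-independent approach needs no fitted model, so it can be tried directly on built-in data. This is a sketch under assumptions: iris stands in for a classification problem and mtcars (with mpg as the outcome) for a regression problem; neither choice comes from the slides.

```r
library(caret)

# Classification: ROC-based importance of each predictor, one column per class
clsImp <- filterVarImp(x = iris[, 1:4], y = iris$Species)
clsImp

# Regression (assumption: mtcars as stand-in data, mpg as the outcome)
regImp <- filterVarImp(x = mtcars[, -1], y = mtcars$mpg)
regImp
```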

- 31. Feature selection
  - Many models do not necessarily use all the predictors.
  - Feature selection using search algorithms ("wrapper" approach)
  - Feature selection using univariate filters ("filter" approach)

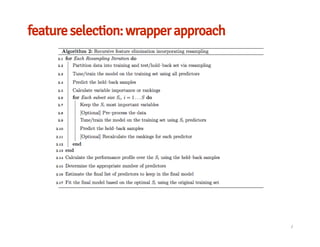

- 33. Feature selection: wrapper approach

  Feature selection based on a random forest model. Pre-defined sets of functions: linear regression (lmFuncs), random forests (rfFuncs), naive Bayes (nbFuncs), bagged trees (treebagFuncs).

      ctrl <- rfeControl(functions = rfFuncs,
                         method = "repeatedcv",
                         number = 10,
                         repeats = 3,
                         verbose = FALSE,
                         returnResamp = "final")

      Profile <- rfe(x = trainData,
                     y = trainClass,
                     sizes = 1:8,
                     rfeControl = ctrl)
      Profile

- 34. Feature selection: wrapper approach

  Feature selection based on a custom model:

      tunedf <- data.frame(.cp = seq(0.001, 0.2, length.out = 5))

      fitControl <- trainControl(method = "repeatedcv",
                                 number = 10,
                                 repeats = 3,
                                 classProbs = TRUE,
                                 summaryFunction = twoClassSummary)

      customFuncs <- caretFuncs
      customFuncs$summary <- twoClassSummary

      ctrl <- rfeControl(functions = customFuncs,
                         method = "repeatedcv",
                         number = 10,
                         repeats = 3,
                         verbose = FALSE,
                         returnResamp = "final")

      Profile <- rfe(x = trainData,
                     y = trainClass,
                     sizes = 1:8,
                     method = "rpart",
                     rfeControl = ctrl,

- 36. Exercise 1

  Use the knn method to train a model:

      library(caret)

      fitControl <- trainControl(method = "repeatedcv",
                                 number = 10,
                                 repeats = 3)
      tunedf <- data.frame(.k = seq(3, 20, by = 2))

      knnmodel <- train(x = trainData,
                        y = trainClass,
                        method = "knn",
                        trControl = fitControl,
                        tuneGrid = tunedf)
      plot(knnmodel)

- 5. A very simple example

      library(caret)
      str(iris)
      set.seed(1)

      # preprocess: center and scale the predictors
      process <- preProcess(iris[, -5], method = c("center", "scale"))
      dataScaled <- predict(process, iris[, -5])

      # data splitting
      inTrain <- createDataPartition(iris$Species, p = 0.75)[[1]]
      length(inTrain)
      trainData <- dataScaled[inTrain, ]
      trainClass <- iris[inTrain, 5]
      testData <- dataScaled[-inTrain, ]
      testClass <- iris[-inTrain, 5]



- 10. Pre-processing: identifying correlated predictors

      set.seed(1)
      x1 <- rnorm(100)
      x2 <- x1 + rnorm(100, 0.1, 0.1)
      x3 <- x1 + rnorm(100, 1, 1)
      data <- data.frame(x1, x2, x3)

      # Flag predictors whose pairwise correlation exceeds the cutoff, then drop them
      corrmatrix <- cor(data)
      highlyCor <- findCorrelation(corrmatrix, cutoff = 0.75)
      dataFilted <- data[, -highlyCor]
      head(dataFilted)


- 13. Pre-processing: imputation (bagImpute / knnImpute)

      data <- iris[, -5]

      # Introduce two missing values
      data[1, 2] <- NA
      data[2, 1] <- NA

      # Fill them in using k-nearest-neighbour imputation
      impu <- preProcess(data, method = "knnImpute")
      dataProced <- predict(impu, data)

- 14. Pre-processing: transformation (BoxCox / PCA)

      data <- iris[, -5]
      pcaProc <- preProcess(data, method = "pca")
      dataProced <- predict(pcaProc, data)
      head(dataProced)
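The slide title also names the Box-Cox transformation, which is requested through the same preProcess interface. A minimal sketch, assuming the iris predictors (all strictly positive, as Box-Cox requires); the object names `bcProc` and `dataBc` are illustrative.

```r
library(caret)

data <- iris[, -5]   # Box-Cox needs strictly positive predictors

# Estimate a Box-Cox transformation per predictor, then apply it
bcProc <- preProcess(data, method = "BoxCox")
dataBc <- predict(bcProc, data)
head(dataBc)
```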


- 17. Model Training and Parameter Tuning

  Prepare data:

      data(PimaIndiansDiabetes2, package = "mlbench")
      data <- PimaIndiansDiabetes2
      library(caret)

      # scale and center
      preProcValues <- preProcess(data[, -9], method = c("center", "scale"))
      scaleddata <- predict(preProcValues, data[, -9])

      # Yeo-Johnson transformation
      preProcbox <- preProcess(scaleddata, method = c("YeoJohnson"))
      boxdata <- predict(preProcbox, scaleddata)

- 18. Model Training and Parameter Tuning

  Prepare data:

      # bag impute
      preProcimp <- preProcess(boxdata, method = "bagImpute")
      procdata <- predict(preProcimp, boxdata)
      procdata$class <- data[, 9]

      # data splitting
      inTrain <- createDataPartition(procdata$class, p = 0.75)[[1]]
      length(inTrain)
      trainData <- procdata[inTrain, 1:8]
      trainClass <- procdata[inTrain, 9]
      testData <- procdata[-inTrain, 1:8]
      testClass <- procdata[-inTrain, 9]