Data science unit2

- 2. Handling Large Data on a Single System

- 3. Problems while handling large data: A large volume of data poses new challenges, such as overloaded memory and algorithms that never stop running. It forces you to adapt and expand your repertoire of techniques. But even when you can perform your analysis, you should take care of issues such as I/O (input/output) and CPU starvation, because these can cause speed issues.

- 4. General Techniques for handling large data: Never-ending algorithms, out-of-memory errors, and speed issues are the most common challenges you face when working with large data. In this section, we’ll investigate solutions to overcome or alleviate these problems.

- 5. Choosing the right algorithm: Choosing the right algorithm can solve more problems than adding more or better hardware. An algorithm that's well suited for handling large data doesn't need to load the entire data set into memory to make predictions. Ideally, the algorithm also supports parallelized calculations. Three such types of algorithms are online algorithms, block matrix algorithms, and MapReduce.
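A minimal sketch of an online algorithm follows, assuming a plain perceptron as the learner (our choice for illustration; the slide names no specific model). The model is updated one observation at a time, so the data can arrive from a stream instead of being loaded into memory as a whole. `OnlinePerceptron` and `stream_observations` are hypothetical names, and the six data points are toy values.

```python
from typing import Iterator, List, Tuple

Observation = Tuple[List[float], int]  # (features, label in {-1, +1})


class OnlinePerceptron:
    """Linear classifier that is updated one observation at a time."""

    def __init__(self, n_features: int, learning_rate: float = 1.0) -> None:
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: List[float]) -> int:
        activation = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        return 1 if activation >= 0 else -1

    def partial_fit(self, x: List[float], y: int) -> None:
        # Classic perceptron rule: only a misclassified observation moves the model.
        if self.predict(x) != y:
            self.weights = [w + self.learning_rate * y * xi for w, xi in zip(self.weights, x)]
            self.bias += self.learning_rate * y


def stream_observations() -> Iterator[Observation]:
    """Hypothetical data source; in practice this would read records from disk."""
    rows = [([3, 1], 1), ([1, 3], -1), ([4, 0], 1), ([0, 4], -1), ([2, 1], 1), ([1, 2], -1)]
    for features, label in rows:
        yield [float(v) for v in features], label


model = OnlinePerceptron(n_features=2)
for epoch in range(3):  # each pass re-reads the stream; the model sees one observation at a time
    for x, y in stream_observations():
        model.partial_fit(x, y)

print(model.weights, model.bias)  # [3.0, -3.0] 0.0
```

The model's memory footprint depends only on the number of features, not on the number of observations, which is what makes this family of algorithms attractive for large data.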

- 6. Choosing the right data structure: Algorithms can make or break your program, but the way you store your data is of equal importance. Data structures have different storage requirements, but also influence the performance of CRUD (create, read, update, and delete) and other operations on the data set.
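To make the storage point concrete, here is a minimal sketch of a sparse matrix stored as nested hash tables (Python dicts): only non-zero cells take up space, and reads are hash lookups. The function names and the toy matrix are hypothetical; in practice a library such as `scipy.sparse` provides tuned sparse formats.

```python
from typing import Dict, List

SparseMatrix = Dict[int, Dict[int, float]]


def to_sparse(dense_rows: List[List[float]]) -> SparseMatrix:
    """Keep only the non-zero cells as {row_index: {column_index: value}}."""
    sparse: SparseMatrix = {}
    for r, row in enumerate(dense_rows):
        non_zero = {c: v for c, v in enumerate(row) if v != 0}
        if non_zero:  # all-zero rows take no space at all
            sparse[r] = non_zero
    return sparse


def get_cell(sparse: SparseMatrix, row: int, col: int) -> float:
    # Two hash-table lookups (average O(1)); missing cells are implicitly zero.
    return sparse.get(row, {}).get(col, 0.0)


dense = [
    [0.0, 0.0, 1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [2.0, 0.0, 0.0, 0.0, 0.0, 3.0],
]
sparse = to_sparse(dense)
stored = sum(len(cols) for cols in sparse.values())
print(f"{stored} of {len(dense) * len(dense[0])} cells stored")  # 3 of 18 cells stored
```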

- 8. Selecting the right tools: With the right class of algorithms and data structures in place, it’s time to choose the right tool for the job. The right tool can be a Python library or at least a tool that’s controlled from Python. The number of helpful tools available is enormous, so we’ll look at only a handful of them.
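As one illustrative tool choice (an assumption, since the slide names no specific library), pandas can read a CSV file in fixed-size chunks so that only one chunk is in memory at a time. The in-memory CSV with an `amount` column is a hypothetical stand-in for a file too large to load at once.

```python
import io

import pandas as pd

# Hypothetical CSV; in practice this would be a path to a very large file.
csv_data = io.StringIO("amount\n" + "\n".join(str(i) for i in range(1, 101)))

total = 0
row_count = 0
# With chunksize, read_csv returns an iterator of DataFrames of at most 25 rows each.
for chunk in pd.read_csv(csv_data, chunksize=25):
    total += chunk["amount"].sum()
    row_count += len(chunk)

print(row_count, total)  # 100 5050
```

The aggregation has to be expressible chunk by chunk (sums and counts are; exact medians are not), which ties the tool choice back to the choice of algorithm.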

- 9. General programming tips for dealing with large data sets: The tricks that work in a general programming context still apply for data science. Several might be worded slightly differently, but the principles are essentially the same for all programmers. This section recapitulates the tricks that are important in a data science context, grouped under three headings: don't reinvent the wheel, get the most out of your hardware, and reduce your computing needs.
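The "reduce your computing needs" tip can be sketched with Python generators: each stage handles one record at a time, so memory use stays flat however long the input is. The `user,amount` record layout and the function names are hypothetical, and the list of lines stands in for an open file handle.

```python
from typing import Iterable, Iterator, Tuple

Record = Tuple[str, float]


def parse(lines: Iterable[str]) -> Iterator[Record]:
    """Turn 'user,amount' lines into records, one at a time."""
    for line in lines:
        user, amount = line.strip().split(",")
        yield user, float(amount)


def at_least(records: Iterable[Record], threshold: float) -> Iterator[Record]:
    """Lazily drop records below the threshold."""
    return (record for record in records if record[1] >= threshold)


# Stand-in for an open file handle, which Python also iterates line by line.
raw_lines = ["alice,12.5\n", "bob,250.0\n", "carol,99.9\n", "dave,300.0\n"]

# Nothing is parsed or filtered until sum() pulls records through the pipeline.
total = sum(amount for _, amount in at_least(parse(raw_lines), 100.0))
print(total)  # 550.0
```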

- 10. Case study 1: Predicting malicious URLs: The internet is probably one of the greatest inventions of modern times. It has boosted humanity's development, but not everyone uses this great invention with honorable intentions. Many companies (Google, for one) try to protect us from fraud by detecting malicious websites for us. Doing so is no easy task, because the internet has billions of web pages to scan. In this case study we'll show how to work with a data set that no longer fits in memory. The case study covers Step 1: Defining the research goal; Step 2: Acquiring the URL data; Step 4: Data exploration; and Step 5: Model building.
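A minimal sketch of out-of-core model building, assuming scikit-learn is available: `SGDClassifier.partial_fit` learns from one batch at a time, so the full data set never has to be in memory. The synthetic batches in sparse (CSR) format and the toy labeling rule are assumptions standing in for the URL data.

```python
import numpy as np
from scipy.sparse import csr_matrix
from sklearn.linear_model import SGDClassifier

rng = np.random.default_rng(0)


def make_batch(n_rows: int = 500, n_features: int = 20):
    """Hypothetical stand-in for one chunk of the URL data set."""
    X = rng.normal(size=(n_rows, n_features))
    y = (X[:, 0] + X[:, 1] > 0).astype(int)  # 1 = "malicious" under this toy rule
    return csr_matrix(X), y


classifier = SGDClassifier(random_state=0)
for _ in range(10):
    X_batch, y_batch = make_batch()
    # classes is required on the first call so the model knows every possible label.
    classifier.partial_fit(X_batch, y_batch, classes=np.array([0, 1]))

X_test, y_test = make_batch()
accuracy = classifier.score(X_test, y_test)
print(f"accuracy: {accuracy:.3f}")
```

In a real pipeline, each batch would be read from disk (for example, one file at a time) and discarded after the `partial_fit` call.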

- 11. Case study 2: Building a recommender system inside a database: In reality, most of the data you work with is stored in a relational database, but most databases aren't suitable for data mining. As this example shows, however, it's possible to adapt our techniques so that you can do a large part of the analysis inside the database itself, thereby profiting from the database's query optimizer, which will optimize the code for you. In this example we'll go into how to use the hash table data structure and how to use Python to control other tools. The case study covers the tools and techniques needed; Step 1: Research question; Step 3: Data preparation; Step 5: Model building; and Step 6: Presentation and automation.
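A minimal sketch of the idea, assuming SQLite through Python's built-in `sqlite3` module (our choice, so the example is self-contained). Each customer's rentals are stored as a bitmask. A bit-sampling hash, a simple form of locality-sensitive hashing for Hamming distance, puts customers into buckets, and the database then ranks candidates in the same bucket by Hamming distance. The table layout, the sampled bits, and all names are hypothetical.

```python
import sqlite3

# Hypothetical rentals: bit i set = customer rented movie i.
customers = {
    1: 0b1011001,
    2: 0b1011000,
    3: 0b0100110,
    4: 0b1111001,
}


def bucket(mask: int, bits=(0, 3, 6)) -> int:
    """Hash: concatenate a few sampled movie bits into a bucket id."""
    return sum(((mask >> b) & 1) << i for i, b in enumerate(bits))


conn = sqlite3.connect(":memory:")
# Register a Python function so SQL queries can compute Hamming distance.
conn.create_function("hamming", 2, lambda a, b: bin(a ^ b).count("1"))
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, movies INTEGER, bucket INTEGER)")
conn.execute("CREATE INDEX idx_bucket ON customers (bucket)")  # lets the optimizer find a bucket fast
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(cid, mask, bucket(mask)) for cid, mask in customers.items()],
)


def most_similar(customer_id: int, limit: int = 1):
    movies, bkt = conn.execute(
        "SELECT movies, bucket FROM customers WHERE id = ?", (customer_id,)
    ).fetchone()
    # Only customers in the same hash bucket are compared, closest first.
    return conn.execute(
        """SELECT id, hamming(movies, ?) AS dist FROM customers
           WHERE bucket = ? AND id != ?
           ORDER BY dist, id LIMIT ?""",
        (movies, bkt, customer_id, limit),
    ).fetchall()


print(most_similar(1))  # [(4, 1)]
```

Customer 2 is just as close to customer 1 but lands in a different bucket under this single hash. This is why locality-sensitive hashing schemes typically combine several hash functions.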

- 12. First steps in big data

- 13. Distributing data storage and processing with frameworks: New big data technologies such as Hadoop and Spark make it much easier to work with and control a cluster of computers. Hadoop can scale up to thousands of computers, creating a cluster with petabytes of storage. This enables businesses to grasp the value of the massive amount of data available. Hadoop: a framework for storing and processing large data sets. Apache Hadoop is a framework that simplifies working with a cluster of computers. It aims to be all of the following things and more: reliable, fault tolerant, scalable, and portable.

- 14. An example of a MapReduce flow for counting the colors in an input text:

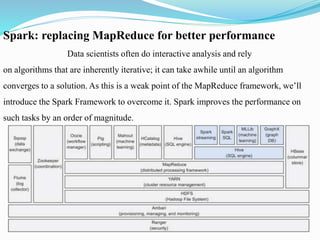
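The map, shuffle, and reduce phases can be simulated in a few lines of plain Python. This single-process sketch only mirrors the data flow that a framework such as Hadoop distributes over many machines. The input lines are hypothetical and not taken from the figure.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, List, Tuple


def map_phase(line: str) -> List[Tuple[str, int]]:
    # Emit a (color, 1) pair for every word in the line.
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Group all values by key and sort by key, as the framework does between map and reduce.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    # Combine all values for one key into a single count.
    return key, sum(values)


input_lines = ["Green Blue Red", "Blue Red Green", "Red Orange Blue"]  # hypothetical input
mapped = chain.from_iterable(map_phase(line) for line in input_lines)
counts = dict(reduce_phase(k, v) for k, v in shuffle_phase(mapped).items())
print(counts)  # {'blue': 3, 'green': 2, 'orange': 1, 'red': 3}
```

Because each `map_phase` call depends only on its own line and each `reduce_phase` call only on its own key, both phases can run in parallel on different machines.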

- 15. Spark: replacing MapReduce for better performance. Data scientists often do interactive analysis and rely on algorithms that are inherently iterative; it can take a while until an algorithm converges to a solution. This is a weak point of the MapReduce framework, which writes intermediate results to disk between jobs, so every iteration pays the cost of disk I/O. We'll introduce the Spark framework to overcome it: Spark keeps such data in memory across iterations, which improves the performance on these tasks by an order of magnitude.

- 16. Case study: Assessing risk when loaning money. Enriched with a basic understanding of Hadoop and Spark, we're now ready to get our hands dirty on big data. The goal of this case study is to get a first experience with the technologies introduced earlier in this chapter, and to see that for a large part you can (but don't have to) work much as you would with other technologies. The case study covers Step 1: The research goal; Step 2: Data retrieval; Step 3: Data preparation; and Steps 4 and 6: Exploration and report creation.

- 17. Join the NoSQL movement

- 18. Introduction to NoSQL: As you've read, the goal of NoSQL databases isn't only to offer a way to partition databases successfully over multiple nodes, but also to present fundamentally different ways to model the data at hand, fitting its structure to its use case rather than to how a relational database requires it to be modeled. Topics: ACID, the core principle of relational databases; the CAP theorem, the problem with DBs on many nodes; the BASE principles of NoSQL databases; and NoSQL database types.

- 19. ACID: the core principle of relational databases: The main aspects of a traditional relational database can be summarized by the concept ACID: Atomicity, Consistency, Isolation, and Durability.
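Atomicity and consistency can be seen in a minimal sketch using Python's built-in `sqlite3` module (SQLite is our choice here, for a self-contained example). A transfer that would violate a constraint is rolled back as a whole, so no half-finished update survives. The `accounts` table and the amounts are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(amount: int) -> None:
    """Move money from alice to bob inside one transaction."""
    # "with conn" commits if the block succeeds and rolls back if it raises.
    with conn:
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = 'bob'", (amount,))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = 'alice'", (amount,))


try:
    transfer(500)  # alice can't afford this: the CHECK constraint fails on the second update
except sqlite3.IntegrityError:
    print("transfer rolled back")  # bob's credit from the first update is undone too

transfer(30)  # a valid transfer commits both updates together
balances = dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))
print(balances)  # {'alice': 70, 'bob': 80}
```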

- 20. CAP Theorem: the problem with DBs on many nodes. Once a database is spread out over different servers, it's difficult to follow the ACID principle because of the consistency ACID promises; the CAP theorem points out why this becomes problematic. The CAP theorem states that a database can be any two of the following things but never all three: partition tolerant, available, and consistent. When the network between nodes fails (a partition), a node must either refuse requests, giving up availability, or answer with possibly outdated data, giving up consistency.


- 22. The BASE principles of NoSQL databases. RDBMSs follow the ACID principles; NoSQL databases that don't follow ACID, such as document stores and key-value stores, follow BASE. BASE is a set of much softer database promises: basically available, soft state, and eventually consistent.
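A toy simulation of "soft state" and "eventual consistency" follows. Two replicas accept writes independently and disagree for a while, then a background synchronization step makes them converge. The last-writer-wins rule, the integer timestamps (assumed unique), and all names are illustrative assumptions, not how any particular NoSQL product works.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class Replica:
    """Toy key-value replica; each value carries the timestamp of its write."""

    data: Dict[str, Tuple[int, str]] = field(default_factory=dict)

    def write(self, key: str, value: str, timestamp: int) -> None:
        self.data[key] = (timestamp, value)

    def read(self, key: str) -> Optional[str]:
        entry = self.data.get(key)
        return entry[1] if entry else None

    def merge_from(self, other: "Replica") -> None:
        # Last-writer-wins: keep whichever version has the newer timestamp.
        for key, (ts, value) in other.data.items():
            if key not in self.data or ts > self.data[key][0]:
                self.data[key] = (ts, value)


a, b = Replica(), Replica()
a.write("cart:42", "book", timestamp=1)      # accepted by replica a
b.write("cart:42", "book,pen", timestamp=2)  # later write accepted by replica b
print(a.read("cart:42"), b.read("cart:42"))  # book book,pen  (replicas disagree)

# Background synchronization in both directions.
a.merge_from(b)
b.merge_from(a)
print(a.read("cart:42"), b.read("cart:42"))  # book,pen book,pen  (replicas converged)
```

Both replicas stayed available the whole time; the price is that a read between the writes and the synchronization can return an older value.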

- 24. NoSQL database types. As you saw earlier, there are four big NoSQL types: key-value store, document store, column-oriented database, and graph database. Each type solves a problem that can't be solved with relational databases. Actual implementations are often combinations of these. OrientDB, for example, is a multi-model database, combining NoSQL types: it's a graph database where each node is a document. Related concepts: normalization and many-to-many relationships.
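Normalization and many-to-many relationships can be contrasted in a small sketch. A relational model keeps the relationship in a separate link table and joins at query time, while a document model denormalizes by embedding related data in each document. The students, courses, and field names are hypothetical, and plain Python structures stand in for real tables and documents.

```python
from typing import Dict, List, Tuple

# Normalized (relational style): the many-to-many relationship lives in a link table.
students: Dict[int, str] = {1: "Ann", 2: "Bob"}
courses: Dict[int, str] = {10: "Statistics", 20: "Databases"}
enrollments: List[Tuple[int, int]] = [(1, 10), (1, 20), (2, 20)]  # (student_id, course_id)


def courses_of(student_id: int) -> List[str]:
    """Answering the question requires joining three structures."""
    return sorted(courses[c] for s, c in enrollments if s == student_id)


def to_documents() -> List[dict]:
    """Denormalized (document style): each student embeds its course names."""
    return [
        {"_id": sid, "name": name, "courses": courses_of(sid)}
        for sid, name in sorted(students.items())
    ]


docs = to_documents()
print(docs[0])  # {'_id': 1, 'name': 'Ann', 'courses': ['Databases', 'Statistics']}
```

Here is the trade-off: reading a student with all their courses becomes a single lookup, but renaming a course now means updating every document that embeds it.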

- 25. Case Study: What disease is that? Step-1: Setting the research goal, Step-2 & 3: Data Retrieval and Preparation, Step-4: Data Exploration, Step-3 revisited: Data Preparation for disease profiling, Step-4 revisited: Data Exploration for disease profiling, Step-6: Presentation and Automation

- 26. Step 1: Setting the research goal. Steps 2 and 3: Data retrieval and preparation. Data retrieval and data preparation are two distinct steps in the data science process, and even though this remains true for the case study, we'll explore both in the same section. This way you can avoid setting up local intermediate storage and immediately do data preparation while the data is being retrieved.

- 28. Step 4: Data exploration It’s not lupus. It’s never lupus! Step 3 revisited: Data preparation for disease profiling

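- 29. Step 4 revisited: Data exploration for disease profiling

    from elasticsearch import Elasticsearch

    # Syntax for older Elasticsearch versions: the "filtered" query was removed in 5.0
    # and document types (doc_type) were removed in 8.0.
    client = Elasticsearch()  # older clients default to localhost:9200
    # indexName and docType identify the index built earlier in the case study (not shown here).
    searchBody = {
        "fields": ["name"],
        "query": {
            "filtered": {
                "filter": {
                    "term": {"name": "diabetes"}
                }
            }
        },
        "aggregations": {
            "DiseaseKeywords": {
                "significant_terms": {"field": "fulltext", "size": 30}
            },
            "DiseaseBigrams": {
                "significant_terms": {"field": "fulltext.shingles", "size": 30}
            }
        }
    }
    client.search(index=indexName, doc_type=docType, body=searchBody, from_=0, size=3)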
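The slide's query no longer runs as written on Elasticsearch 5.0 and later. Below is a minimal sketch of an equivalent request body for newer versions. It assumes a `bool` query with a `filter` clause in place of `filtered`, and `_source` in place of `fields`. The helper names and the index name `"medical"` are hypothetical. On recent versions, `significant_terms` on an analyzed `text` field also needs `fielddata` enabled, or you can use the `significant_text` aggregation instead. Whether `body=` is accepted depends on your Python client version, so treat that call as an assumption.

```python
from typing import Any, Dict


def disease_profile_query(disease: str, size: int = 30) -> Dict[str, Any]:
    """Request body: filter on the disease name, then find unusually frequent terms."""
    return {
        "_source": ["name"],
        # "bool" + "filter" replaces the removed "filtered" query; filters are not scored.
        "query": {"bool": {"filter": {"term": {"name": disease}}}},
        "aggregations": {
            # Keywords that are significantly more common in the matching documents.
            "DiseaseKeywords": {"significant_terms": {"field": "fulltext", "size": size}},
            # Same idea for word pairs, assuming a "shingles" sub-field exists in the mapping.
            "DiseaseBigrams": {"significant_terms": {"field": "fulltext.shingles", "size": size}},
        },
    }


def search_disease(client, disease: str, index: str = "medical"):
    """`client` is an elasticsearch.Elasticsearch instance created elsewhere (hypothetical index name)."""
    return client.search(index=index, body=disease_profile_query(disease), from_=0, size=3)


query = disease_profile_query("diabetes")
print(query["query"])  # {'bool': {'filter': {'term': {'name': 'diabetes'}}}}
```

Building the body in a function keeps the query testable without a running cluster and makes it easy to profile other diseases by changing one argument.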

- 30. Thank you

![Step 4 revisited: Data exploration for disease profiling](https://image.slidesharecdn.com/datascienceunit2-200227174411/85/Data-science-unit2-29-320.jpg)