Decision Tree Concepts and Problems Machine Learning

- 2. Decision Tree Classifier: It is inspired by how humans make decisions in day-to-day life.

- 4. Decision Tree Classifier – Important Terminologies

- 5. How Does a Decision Tree Classifier Work?

- 6. Decision Tree Classifier: A decision tree is a popular supervised machine learning algorithm used for both classification and regression tasks. At each internal node, the algorithm partitions the training data into subsets based on a feature (attribute). The goal is to predict the value of the target variable at the leaf nodes.

- 7. Decision Tree Classifier: It models decisions with a tree-like structure:
  - Each internal node represents a "test" on an attribute (feature).
  - Each branch represents an outcome of that test.
  - Each leaf node represents a class label (in classification) or a numerical value (in regression).

  A node is pure when all the data points it holds belong to the same class or share the same target value. Splitting such a node further on any attribute cannot improve classification or regression accuracy. In practice, a question (split) that produces pure subsets separates the dataset into homogeneous groups, which supports accurate predictions. The goal in constructing a decision tree is therefore to find a sequence of questions that split the dataset into subsets that are as pure as possible. This yields a tree that makes accurate predictions.

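- 8. How Does a Decision Tree Classifier Work? Prediction using a decision tree: given a test sample with feature vector f, traverse the tree starting at the root. At each internal node, follow the branch that matches the sample's value for the tested feature until a leaf node is reached. Then assign the value stored in that leaf to the test sample.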

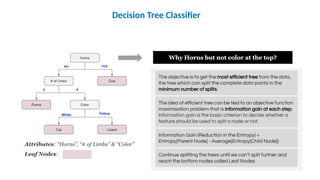
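The root-to-leaf traversal can be sketched in a few lines of Python. The `Node` class, the `predict` function and the small tree below are illustrative assumptions, not part of the original slides. The features reuse the Color/Horns attributes from the next slide, and the class labels A, B and C are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Node:
    """Internal nodes test one feature; leaves store a label."""
    feature: Optional[str] = None                                # feature tested here
    children: Dict[str, "Node"] = field(default_factory=dict)    # one branch per outcome
    label: Optional[str] = None                                  # set only on leaves

    def is_leaf(self) -> bool:
        return self.label is not None


def predict(node: Node, sample: Dict[str, str]) -> str:
    """Follow the branch matching the sample's value until a leaf is reached."""
    while not node.is_leaf():
        node = node.children[sample[node.feature]]
    return node.label


# Hypothetical tree: test Horns first, then Color when there are no horns.
tree = Node(feature="Horns", children={
    "Yes": Node(label="A"),
    "No": Node(feature="Color", children={
        "Brown": Node(label="B"),
        "White": Node(label="C"),
    }),
})

print(predict(tree, {"Horns": "No", "Color": "White"}))
```

Only the features tested along the path are read, so a sample whose Horns value is "Yes" never needs a Color value.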

- 9. Decision Tree Classifier: Consider the following training data, where the attributes (features) are Color, Horns and Number of Limbs. The question is: from which attribute should the decision tree start growing? One possibility, shown here, uses Horns as the root node. Is there a criterion for selecting which attribute becomes a decision node of the tree?

- 11. Decision Tree - Example

- 14. Decision Tree - Example: Should we start with Gender or Age?

- 23. Start with a feature in the data and make a split based on the feature.

- 24. Start with a feature in the data and make a split based on the feature. Continue splitting based on features.

- 27. High Impurity vs. Low Impurity: A homogeneous set is less diverse and has low impurity. A heterogeneous set is highly diverse and has high impurity. Thus, we need numerical measures that quantify the diversity, or impurity, at each node of the tree.

- 28. What is the purity of a split? The purity of a split refers to how homogeneous the subsets are after a node is split on an attribute. A pure subset contains instances of a single class, whereas an impure subset contains instances from multiple classes. The purity of a split can be measured with the following metrics: entropy and information gain, and the Gini index.

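- 29. Entropy: Consider a dataset S with c classes, where $p_i$ is the proportion of examples belonging to class i. Its entropy is $\mathrm{Entropy}(S) = -\sum_{i=1}^{c} p_i \log_2 p_i$. If the dataset is perfectly pure (i.e., contains only one class), the entropy is 0. If the examples are evenly distributed across all classes, the entropy reaches its maximum, $\log_2 c$ (1 bit for two classes).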
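The entropy formula can be checked with a short Python sketch. `entropy` is a hypothetical helper that takes a list of class labels. It computes $\sum_i p_i \log_2(1/p_i)$, which is algebraically equal to $-\sum_i p_i \log_2 p_i$ but avoids printing `-0.0` for a pure set.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    """Entropy(S) = sum_i p_i * log2(1 / p_i), where p_i is the share of class i."""
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


print(entropy(["Yes"] * 4))                  # pure set
print(entropy(["Yes", "No"] * 3))            # two classes, evenly split
print(entropy(["Yes"] * 9 + ["No"] * 5))     # mixed set
```

The pure set gives 0.0 and the evenly split two-class set gives 1.0, matching the two extremes described above.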

- 30. Information Gain: $\mathrm{Gain}(S, A) = \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|}\,\mathrm{Entropy}(S_v)$. Here Values(A) is the set of possible values of attribute A, and $|S_v|$ is the number of examples in subset $S_v$ (the examples of S with A = v) after splitting on A. Information gain is the reduction in entropy achieved by splitting the dataset S on attribute A. Thus, at the current node of the decision tree, choose the attribute A that maximizes the information gain to split the data.
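A minimal Python sketch of the information-gain formula follows. It assumes each training example is a dict mapping attribute names to values. The `information_gain` function and the four toy rows are hypothetical, chosen so that one attribute separates the classes perfectly and the other carries no information.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


def information_gain(rows: List[Dict[str, str]], attribute: str, target: str) -> float:
    """Gain(S, A) = Entropy(S) - sum_v |S_v| / |S| * Entropy(S_v)."""
    subsets: Dict[str, List[str]] = defaultdict(list)
    for row in rows:
        subsets[row[attribute]].append(row[target])    # labels of S_v, where A = v
    n = len(rows)
    remainder = sum(len(s) / n * entropy(s) for s in subsets.values())
    return entropy([row[target] for row in rows]) - remainder


# Hypothetical toy data: Wind determines Play exactly, Temp is uninformative.
rows = [
    {"Wind": "Weak", "Temp": "Hot", "Play": "Yes"},
    {"Wind": "Weak", "Temp": "Mild", "Play": "Yes"},
    {"Wind": "Strong", "Temp": "Hot", "Play": "No"},
    {"Wind": "Strong", "Temp": "Mild", "Play": "No"},
]
print(information_gain(rows, "Wind", "Play"), information_gain(rows, "Temp", "Play"))
```

Splitting on Wind removes all uncertainty (gain 1.0). Splitting on Temp leaves every subset as mixed as the parent (gain 0.0).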

- 31. Decision Tree Construction Using Entropy and Information Gain The construction of decision trees using entropy and information gain is popularly known as the ID3 (Iterative Dichotomiser 3) algorithm.

- 32. Decision Tree Construction – ID3 Algorithm: From the given training data, use a decision tree to predict the class label of the new instance D15.

- 33. Determine the Root Node: Among Humidity, Outlook and Wind, which attribute should be selected as the root?

- 36. Determine the Root Node: Among Humidity, Outlook and Wind, Outlook maximizes the information gain, so we select Outlook as the root node of the decision tree.
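The root-node comparison can be reproduced in Python. The slides' training table is not reproduced in this text, so the data below is an assumption. It uses the classic 14-example PlayTennis table from Mitchell's *Machine Learning* (1997), restricted to Outlook, Humidity and Wind; the original table also has a Temperature column. Substitute the slide's rows if they differ.

```python
import math
from collections import Counter, defaultdict

# Assumed stand-in data (PlayTennis, three attributes). Columns: Outlook, Humidity, Wind, label.
DATA = [
    ("Sunny", "High", "Weak", "No"), ("Sunny", "High", "Strong", "No"),
    ("Overcast", "High", "Weak", "Yes"), ("Rain", "High", "Weak", "Yes"),
    ("Rain", "Normal", "Weak", "Yes"), ("Rain", "Normal", "Strong", "No"),
    ("Overcast", "Normal", "Strong", "Yes"), ("Sunny", "High", "Weak", "No"),
    ("Sunny", "Normal", "Weak", "Yes"), ("Rain", "Normal", "Weak", "Yes"),
    ("Sunny", "Normal", "Strong", "Yes"), ("Overcast", "High", "Strong", "Yes"),
    ("Overcast", "Normal", "Weak", "Yes"), ("Rain", "High", "Strong", "No"),
]
ATTRIBUTES = {"Outlook": 0, "Humidity": 1, "Wind": 2}   # attribute -> column index


def entropy(labels):
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


def gain(rows, col):
    """Information gain of splitting rows on column col (label in the last column)."""
    groups = defaultdict(list)
    for r in rows:
        groups[r[col]].append(r[-1])
    n = len(rows)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy([r[-1] for r in rows]) - remainder


gains = {name: gain(DATA, col) for name, col in ATTRIBUTES.items()}
for name, g in sorted(gains.items(), key=lambda kv: -kv[1]):
    print(f"Gain(S, {name}) = {g:.3f}")
print("Root:", max(gains, key=gains.get))
```

On this stand-in data the gains are roughly 0.247 (Outlook), 0.152 (Humidity) and 0.048 (Wind). Outlook is therefore chosen, consistent with the slide.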

- 37. Which Attribute to Select Next?

- 42. Decision Tree Construction – ID3 Algorithm
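The full ID3 recursion can be sketched as follows:

1. Pick the highest-gain attribute.
2. Split on it.
3. Recurse on each child with the remaining attributes, stopping when a node is pure or no attributes remain.

The data is the same assumed PlayTennis stand-in restricted to three attributes. `id3`, `classify` and the nested-dict tree representation are illustrative choices. The query passed to `classify` is hypothetical and is not claimed to be the slide's D15.

```python
import math
from collections import Counter, defaultdict

ATTRIBUTES = ["Outlook", "Humidity", "Wind"]   # row[i] holds ATTRIBUTES[i]; row[-1] is the label
DATA = [  # assumed stand-in: PlayTennis restricted to three attributes
    ("Sunny", "High", "Weak", "No"), ("Sunny", "High", "Strong", "No"),
    ("Overcast", "High", "Weak", "Yes"), ("Rain", "High", "Weak", "Yes"),
    ("Rain", "Normal", "Weak", "Yes"), ("Rain", "Normal", "Strong", "No"),
    ("Overcast", "Normal", "Strong", "Yes"), ("Sunny", "High", "Weak", "No"),
    ("Sunny", "Normal", "Weak", "Yes"), ("Rain", "Normal", "Weak", "Yes"),
    ("Sunny", "Normal", "Strong", "Yes"), ("Overcast", "High", "Strong", "Yes"),
    ("Overcast", "Normal", "Weak", "Yes"), ("Rain", "High", "Strong", "No"),
]


def entropy(labels):
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


def gain(rows, col):
    """Information gain of splitting rows on column index col."""
    groups = defaultdict(list)
    for r in rows:
        groups[r[col]].append(r[-1])
    n = len(rows)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy([r[-1] for r in rows]) - remainder


def id3(rows, attrs):
    """Return a class label (leaf) or a nested dict {attribute: {value: subtree}}."""
    labels = [r[-1] for r in rows]
    if len(set(labels)) == 1:                       # pure node: stop with a leaf
        return labels[0]
    if not attrs:                                   # no attributes left: majority vote
        return Counter(labels).most_common(1)[0][0]
    best = max(attrs, key=lambda a: gain(rows, ATTRIBUTES.index(a)))
    col = ATTRIBUTES.index(best)
    remaining = [a for a in attrs if a != best]     # each attribute used once per path
    return {best: {value: id3([r for r in rows if r[col] == value], remaining)
                   for value in sorted({r[col] for r in rows})}}


def classify(tree, sample):
    """Follow branches from the root until a leaf (a plain label) is reached."""
    while isinstance(tree, dict):
        attribute, branches = next(iter(tree.items()))
        tree = branches[sample[attribute]]          # KeyError if the value was never seen
    return tree


tree = id3(DATA, ATTRIBUTES)
print(tree)
print(classify(tree, {"Outlook": "Rain", "Humidity": "High", "Wind": "Weak"}))
```

On this data the learned tree behaves as follows:
- Under Sunny, it tests Humidity.
- Under Overcast, it stops with Yes.
- Under Rain, it tests Wind.

This answers the "which attribute next" question for each branch. A practical implementation would also need a fallback for attribute values never seen during training.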

- 44. The Gini index is a measure of impurity (disorder) used in decision tree algorithms for classification tasks. For a dataset D with K classes, let $p_i$ be the probability of randomly selecting an element of the ith class from D. The Gini index of D is then $\mathrm{Gini}(D) = 1 - \sum_{i=1}^{K} p_i^2$. A Gini index of 0 indicates that the dataset is perfectly pure (all elements belong to the same class). For two classes, the maximum value is 0.5, reached when the elements are evenly split between them. In general, the maximum for K classes is $1 - 1/K$. How is the Gini index used to construct a decision tree classifier?
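The Gini formula translates directly into Python. `gini` is a hypothetical helper that estimates each $p_i$ as the fraction of labels in class i. It returns 0.0 for an empty list by convention; that choice is an assumption, not something the slides specify.

```python
from collections import Counter
from typing import Hashable, Sequence


def gini(labels: Sequence[Hashable]) -> float:
    """Gini(D) = 1 - sum_i p_i^2, where p_i is the fraction of D in class i."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


print(gini(["A"] * 5))            # pure
print(gini(["A", "B"] * 2))       # two classes, evenly split
print(gini(["A", "B", "C"]))      # three classes, evenly split
```

The three calls return 0, 0.5 and approximately 2/3, illustrating the $1 - 1/K$ maximum for K evenly represented classes.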

- 45. How is the Gini index used to construct a decision tree classifier? Suppose the dataset D is split on an attribute A into k subsets $D_1, D_2, \ldots, D_k$. The Gini index of the split is $\mathrm{Gini}(D, A) = \sum_{j=1}^{k} \frac{|D_j|}{|D|}\,\mathrm{Gini}(D_j)$. The attribute A that yields the lowest Gini index for the split is chosen as the splitting attribute.
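A sketch of the weighted split criterion and the "lowest Gini wins" rule follows. The functions `gini_split` and `best_attribute`, and the two toy attributes A and B, are hypothetical. A separates the classes perfectly, while B mixes them in every subset.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence


def gini(labels: Sequence[Hashable]) -> float:
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def gini_split(values: Sequence[str], labels: Sequence[Hashable]) -> float:
    """Gini(D, A) = sum_j |D_j| / |D| * Gini(D_j); values[i] is row i's value of A."""
    groups: Dict[str, List[Hashable]] = defaultdict(list)
    for v, y in zip(values, labels):
        groups[v].append(y)
    n = len(labels)
    return sum(len(g) / n * gini(g) for g in groups.values())


def best_attribute(columns: Dict[str, Sequence[str]], labels: Sequence[Hashable]) -> str:
    """Pick the attribute whose split has the lowest weighted Gini index."""
    return min(columns, key=lambda a: gini_split(columns[a], labels))


labels = ["C0", "C0", "C1", "C1"]
columns = {"A": ["x", "x", "y", "y"], "B": ["x", "y", "x", "y"]}
print({a: gini_split(v, labels) for a, v in columns.items()}, best_attribute(columns, labels))
```

Splitting on A gives a weighted Gini of 0.0, versus 0.5 for B, so A is selected.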

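- 46. How is the Gini index used to construct a decision tree classifier? The procedure for generating a decision tree classifier based on the Gini index is generally known as the CART (Classification and Regression Trees) algorithm. Strictly speaking, CART considers only binary splits, so a multiway split such as the three-way split on Car Type in this example is a simplification.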

- 47. How is the Gini index used to construct a decision tree classifier? From the given training data, construct a decision tree classifier using the Gini index.

- 49. Gini Index(D, Gender)

- 50. Gini Index(D, Car Type)

- 51. Gini Index(D, Shirt Size)

- 52. Thus, the initial split is based on the attribute Car Type, since it yields the lowest Gini index for the split. The process is then repeated for the remaining attributes (Gender and Shirt Size) in each of the three child nodes produced by the Car Type split, until the complete decision tree is obtained.
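The Gini comparison for Gender, Car Type and Shirt Size can be reproduced with the sketch below. The slide's table is not reproduced in this text, so the per-value class counts (C0, C1) in `COUNTS` are hypothetical and chosen only for illustration. Replace them with the counts read from the slide's training data. Each attribute gets a multiway split (one child per value), matching the three child nodes described above.

```python
# Hypothetical (C0, C1) class counts per attribute value; not taken from the slides.
COUNTS = {
    "Gender": {"M": (6, 4), "F": (4, 6)},
    "Car Type": {"Family": (1, 3), "Sports": (8, 0), "Luxury": (1, 7)},
    "Shirt Size": {"Small": (3, 2), "Medium": (3, 4), "Large": (2, 2), "Extra Large": (2, 2)},
}


def gini(counts):
    """Gini index of a node given its per-class counts."""
    n = sum(counts)
    return 1.0 - sum((c / n) ** 2 for c in counts)


def gini_split(partition):
    """Weighted Gini index of a multiway split: value -> per-class counts."""
    total = sum(sum(c) for c in partition.values())
    return sum(sum(c) / total * gini(c) for c in partition.values())


scores = {attr: gini_split(part) for attr, part in COUNTS.items()}
for attr, score in scores.items():
    print(f"Gini(D, {attr}) = {score:.4f}")
print("Split on:", min(scores, key=scores.get))
```

With these counts the weighted Gini indices are:
- Car Type: about 0.1625
- Gender: 0.48
- Shirt Size: about 0.491

Car Type has the lowest value, so it would be selected for the first split.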