Deep dive into stateful stream processing in structured streaming by Tathagata Das

- 1. Deep Dive into Stateful Stream Processing in Structured Streaming Spark Summit Europe 2017 25th October, Dublin Tathagata “TD” Das @tathadas

- 2. Structured Streaming: stream processing on the Spark SQL engine (fast, scalable, fault-tolerant); rich, unified, high-level APIs (deal with complex data and complex workloads); rich ecosystem of data sources (integrate with many storage systems)

- 3. you should not have to reason about streaming

- 4. you should write simple queries & Spark should continuously update the answer

- 5. Anatomy of a Streaming Word Count spark.readStream .format("kafka") .option("subscribe", "input") .load() .groupBy($"value".cast("string")) .count() .writeStream .format("kafka") .option("topic", "output") .trigger("1 minute") .outputMode(OutputMode.Complete()) .option("checkpointLocation", "…") .start() Source Specify one or more locations to read data from Built in support for Files/Kafka/Socket, pluggable. Generates a DataFrame

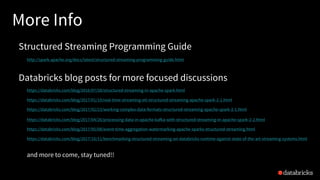

- 6. Anatomy of a Streaming Word Count spark.readStream .format("kafka") .option("subscribe", "input") .load() .groupBy('value.cast("string") as 'key) .agg(count("*") as 'value) .writeStream .format("kafka") .option("topic", "output") .trigger("1 minute") .outputMode(OutputMode.Complete()) .option("checkpointLocation", "…") .start() Transformation DataFrame, Dataset operations and/or SQL queries Spark SQL figures out how to execute it incrementally Internal processing always exactly-once

- 7. Anatomy of a Streaming Word Count spark.readStream .format("kafka") .option("subscribe", "input") .load() .groupBy('value.cast("string") as 'key) .agg(count("*") as 'value) .writeStream .format("kafka") .option("topic", "output") .trigger("1 minute") .outputMode(OutputMode.Complete()) .option("checkpointLocation", "…") .start() Sink Accepts the output of each batch When supported sinks are transactional and exactly once (e.g. files) Use foreach to execute arbitrary code

- 8. Anatomy of a Streaming Word Count spark.readStream .format("kafka") .option("subscribe", "input") .load() .groupBy('value.cast("string") as 'key) .agg(count("*") as 'value) .writeStream .format("kafka") .option("topic", "output") .trigger("1 minute") .outputMode("update") .option("checkpointLocation", "…") .start() Output mode – What's output Complete – Output the whole answer every time Update – Output changed rows Append – Output new rows only Trigger – When to output Specified as a time, eventually supports data size No trigger means as fast as possible

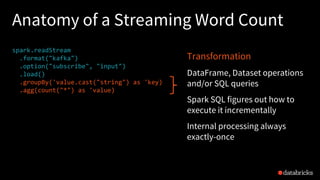

- 9. Anatomy of a Streaming Word Count spark.readStream .format("kafka") .option("subscribe", "input") .load() .groupBy('value.cast("string") as 'key) .agg(count("*") as 'value) .writeStream .format("kafka") .option("topic", "output") .trigger("1 minute") .outputMode("update") .option("checkpointLocation", "/cp/") .start() Checkpoint Tracks the progress of a query in persistent storage Can be used to restart the query if there is a failure

- 10. DataFrames, Datasets, SQL spark.readStream .format("kafka") .option("subscribe", "input") .load() .groupBy( 'value.cast("string") as 'key) .agg(count("*") as 'value) .writeStream .format("kafka") .option("topic", "output") .trigger("1 minute") .outputMode("update") .option("checkpointLocation", "/cp/") .start() Logical Plan Read from Kafka Project device, signal Filter signal > 15 Write to Kafka Spark automatically streamifies! Spark SQL converts a batch-like query to a series of incremental execution plans operating on new batches of data Series of Incremental Execution Plans Kafka Source Optimized Operator codegen, off-heap, etc. Kafka Sink Optimized Physical Plan process new data t = 1 t = 2 t = 3 process new data process new data

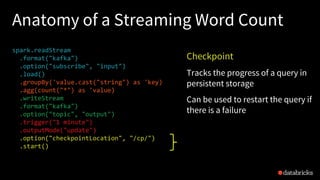

- 11. Spark automatically streamifies! process new data t = 1 t = 2 t = 3 process new data process new data state state state Partial counts carry across triggers as distributed state Each execution reads previous state and writes out updated state State stored in executor memory (hashmap in Apache, RocksDB in Databricks Runtime), backed by checkpoints in HDFS/S3 Fault-tolerant, exactly-once guarantee! checkpoint
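The idea on this slide, that each trigger reads the previous state and writes out updated state, can be sketched in plain Scala without Spark. This is only an illustration of the pattern: the names `IncrementalCount` and `processBatch` are hypothetical, and the real engine keeps this state distributed across executors and checkpointed.

```scala
object IncrementalCount {
  // State carried between triggers: the running count per word.
  type State = Map[String, Long]

  // One trigger: read the previous state, merge the new batch, return the updated state.
  def processBatch(prev: State, batch: Seq[String]): State =
    batch.foldLeft(prev) { (state, word) =>
      state.updated(word, state.getOrElse(word, 0L) + 1L)
    }

  def main(args: Array[String]): Unit = {
    // Three triggers (t = 1, 2, 3), each seeing only the new data.
    val batches = Seq(Seq("a", "b"), Seq("a"), Seq("b", "b", "c"))
    val finalState = batches.foldLeft(Map.empty[String, Long])(processBatch)
    println(finalState)
  }
}
```

Only the new batch is scanned at each step; everything the query needs from the past lives in the state map.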

- 12. This Talk Explore built-in stateful operations How to use watermarks to control state size How to build arbitrary stateful operations How to monitor and debug stateful queries [For a general overview of SS, see my earlier talks]

- 14. Aggregation by key and/or time windows Aggregation by key only Aggregation by event time windows Aggregation by both Supports multiple aggregations, user-defined functions (UDAFs)! events .groupBy("key") .count() events .groupBy(window("timestamp","10 mins")) .avg("value") events .groupBy( 'key, window("timestamp","10 mins")) .agg(avg("value"), corr("value"))
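To make the event-time window grouping concrete, here is a small plain-Scala sketch of how an event could be assigned to a tumbling window and counted. It assumes event times expressed as minutes and windows aligned to minute 0; `TumblingWindow`, `windowStart` and `countByWindow` are illustrative names, not Spark APIs.

```scala
object TumblingWindow {
  // Start of the tumbling window that contains tsMin, for windows of sizeMin minutes.
  def windowStart(tsMin: Long, sizeMin: Long): Long =
    tsMin - java.lang.Math.floorMod(tsMin, sizeMin)

  // Count events per window, keyed by window start.
  def countByWindow(eventTimes: Seq[Long], sizeMin: Long): Map[Long, Int] =
    eventTimes
      .groupBy(windowStart(_, sizeMin))
      .map { case (start, events) => start -> events.size }
}
```

For example, with 10-minute windows an event at minute 727 (12:07) falls into the window starting at minute 720 (12:00).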

- 15. Automatically handles Late Data 12:00 - 13:00 1 12:00 - 13:00 3 13:00 - 14:00 1 12:00 - 13:00 3 13:00 - 14:00 2 14:00 - 15:00 5 12:00 - 13:00 5 13:00 - 14:00 2 14:00 - 15:00 5 15:00 - 16:00 4 12:00 - 13:00 3 13:00 - 14:00 2 14:00 - 15:00 6 15:00 - 16:00 4 16:00 - 17:00 3 13:00 14:00 15:00 16:00 17:00 Keeping state allows late data to update counts of old windows (red = state updated with late data) But the size of the state increases indefinitely if old windows are not dropped

- 16. Watermarking Watermark - moving threshold of how late data is expected to be and when to drop old state Trails behind max event time seen by the engine Watermark delay = trailing gap event time max event time watermark data older than watermark not expected 12:30 PM 12:20 PM trailing gap of 10 mins

- 17. Watermarking Data newer than watermark may be late, but allowed to aggregate Data older than watermark is "too late" and dropped Windows older than watermark automatically deleted to limit the amount of intermediate state max event time event time watermark late data allowed to aggregate data too late, dropped watermark delay of 10 mins

- 18. Watermarking max event time event time watermark parsedData .withWatermark("timestamp", "10 minutes") .groupBy(window("timestamp","5 minutes")) .count() late data allowed to aggregate data too late, dropped Used only in stateful operations Ignored in non-stateful streaming queries and batch queries watermark delay of 10 mins

- 19. Watermarking data too late, ignored in counts, state dropped Processing Time 12:00 12:05 12:10 12:15 12:10 12:15 12:20 12:07 12:13 12:08 Event Time 12:15 12:18 12:04 watermark updated to 12:14 - 10m = 12:04 for next trigger, state < 12:04 deleted data is late, but considered in counts system tracks max observed event time 12:08 wm = 12:04 10min 12:14 More details in my blog post parsedData .withWatermark("timestamp", "10 minutes") .groupBy(window("timestamp","5 minutes")) .count()
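The watermark arithmetic in this example (max observed event time 12:14, delay 10 minutes, watermark 12:04 for the next trigger) can be sketched in plain Scala. This is a simplified model under stated assumptions: times are minutes since midnight, lateness is checked per record rather than per window, and `WatermarkSketch`, `Progress` and `trigger` are hypothetical names rather than Spark APIs.

```scala
object WatermarkSketch {
  // All times are minutes since midnight (12:04 is 724, 12:14 is 734).
  final case class Progress(watermark: Int, maxEventTime: Int)

  // Process one trigger. Returns (accepted, dropped, progress for the next trigger).
  def trigger(p: Progress, batch: Seq[Int], delay: Int): (Seq[Int], Seq[Int], Progress) = {
    // Records older than the current watermark are "too late" and dropped.
    val (accepted, dropped) = batch.partition(_ >= p.watermark)
    // The engine tracks the max event time seen so far.
    val maxSeen = (p.maxEventTime +: batch).max
    // The watermark trails the max event time by the delay and never moves backwards.
    val next = Progress(math.max(p.watermark, maxSeen - delay), maxSeen)
    (accepted, dropped, next)
  }
}
```

With a 10-minute delay, a first batch containing 12:08, 12:14 and 12:07 moves the watermark to 12:04. In the next trigger a record at 12:13 is late but still counted, while a record at 12:03 is dropped.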

- 20. Watermarking Trade off between lateness tolerance and state size: lower lateness tolerance means less late data processed and less memory consumed; higher lateness tolerance means more late data processed and more memory consumed

- 22. Streaming Deduplication Drop duplicate records in a stream Specify columns which uniquely identify a record Spark SQL will store past unique column values as state and drop any record that matches the state userActions .dropDuplicates("uniqueRecordId")

- 23. Streaming Deduplication with Watermark Timestamp as a unique column along with watermark allows old values in state to be dropped Records older than the watermark delay are not going to get any further duplicates Timestamp must be the same for duplicated records userActions .withWatermark("timestamp") .dropDuplicates( "uniqueRecordId", "timestamp")
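A plain-Scala sketch of deduplication with watermark-based state cleanup follows. It assumes, as the slide requires, that duplicates carry the same timestamp, so state is keyed by the record id and stores that timestamp. `DedupSketch`, `Record` and `dedup` are illustrative names, and the logic is a simplification rather than Spark's implementation.

```scala
object DedupSketch {
  final case class Record(id: String, eventTime: Int)

  // seen: ids kept as state, each with its event time.
  // Returns (records emitted from this batch, state to carry to the next trigger).
  def dedup(seen: Map[String, Int], batch: Seq[Record], watermark: Int): (Seq[Record], Map[String, Int]) = {
    val (emitted, state) = batch.foldLeft((Vector.empty[Record], seen)) {
      case ((out, st), r) =>
        // Drop records that are too late or whose id is already in state.
        if (r.eventTime < watermark || st.contains(r.id)) (out, st)
        else (out :+ r, st + (r.id -> r.eventTime))
    }
    // Entries older than the watermark cannot receive further duplicates, so evict them.
    (emitted, state.filter { case (_, t) => t >= watermark })
  }
}
```

Without the eviction step the `seen` map would grow with every distinct id ever observed, which is the unbounded-state problem the watermark addresses.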

- 24. Streaming Joins

- 25. Streaming Joins Spark 2.0+ supports joins between streams and static datasets Spark 2.3+ will support joins between multiple streams Join (ad, impression) (ad, click) (ad, impression, click) Join stream of ad impressions with another stream of their corresponding user clicks Example: Ad Monetization

- 26. Streaming Joins Most of the time click events arrive after their impressions Sometimes, due to delays, impressions can arrive after clicks Each stream in a join needs to buffer past events as state for matching with future events of the other stream Join (ad, impression) (ad, click) (ad, impression, click) state state

- 27. Join (ad, impression) (ad, click) (ad, impression, click) Simple Inner Join Inner join by ad ID column Need to buffer all past events as state, a match can come on the other stream any time in the future To allow buffered events to be dropped, query needs to provide more time constraints impressions.join( clicks, expr("clickAdId = impressionAdId") ) state state ∞ ∞

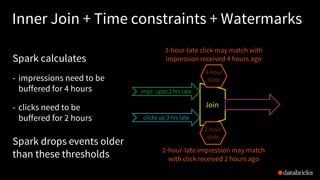

- 28. Inner Join + Time constraints + Watermarks Time constraints - Impressions can be 2 hours late - Clicks can be 3 hours late - A click can occur within 1 hour after the corresponding impression val impressionsWithWatermark = impressions .withWatermark("impressionTime", "2 hours") val clicksWithWatermark = clicks .withWatermark("clickTime", "3 hours") impressionsWithWatermark.join( clicksWithWatermark, expr(""" clickAdId = impressionAdId AND clickTime >= impressionTime AND clickTime <= impressionTime + interval 1 hour """ )) Join Range Join

- 29. impressionsWithWatermark.join( clicksWithWatermark, expr(""" clickAdId = impressionAdId AND clickTime >= impressionTime AND clickTime <= impressionTime + interval 1 hour """ )) Inner Join + Time constraints + Watermarks Spark calculates - impressions need to be buffered for 4 hours - clicks need to be buffered for 2 hours Join impressions up to 2 hrs late clicks up to 3 hrs late 4-hour state 2-hour state 3-hour-late click may match with impression received 4 hours ago 2-hour-late impression may match with click received 2 hours ago Spark drops events older than these thresholds
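The 4-hour and 2-hour figures can be reproduced with simple arithmetic. The sketch below is not how Spark's planner is written; it only assumes a join condition of the form `clickTime >= impressionTime + lower AND clickTime <= impressionTime + upper` (in hours) and derives the buffering times from the two watermark delays. `JoinStateBounds` and `bufferHours` are hypothetical names.

```scala
object JoinStateBounds {
  // Returns (hours to buffer impressions, hours to buffer clicks).
  def bufferHours(impressionDelay: Int, clickDelay: Int, lower: Int, upper: Int): (Int, Int) = {
    // An impression at time t can match clicks up to t + upper, and those clicks
    // may themselves arrive up to clickDelay late.
    val impressionBuffer = math.max(0, clickDelay + upper)
    // A click at time c can match impressions up to c - lower, and those impressions
    // may arrive up to impressionDelay late.
    val clickBuffer = math.max(0, impressionDelay - lower)
    (impressionBuffer, clickBuffer)
  }
}
```

With the values from the slides (impressions 2 hours late, clicks 3 hours late, clicks within 0 to 1 hour after the impression), `bufferHours(2, 3, 0, 1)` gives 4 hours for impressions and 2 hours for clicks.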

- 30. Join Outer Join + Time constraints + Watermarks Left and right outer joins are allowed only with time constraints and watermarks Needed for correctness, Spark must output nulls when an event cannot get any future match impressionsWithWatermark.join( clicksWithWatermark, expr(""" clickAdId = impressionAdId AND clickTime >= impressionTime AND clickTime <= impressionTime + interval 1 hour """ ), joinType = "leftOuter" ) Can be "inner" (default) /"leftOuter"/ "rightOuter"

- 32. Arbitrary Stateful Operations Many use cases require more complicated logic than SQL ops Example: Tracking user activity on your product Input: User actions (login, clicks, logout, …) Output: Latest user status (online, active, inactive, …) Solution: MapGroupsWithState / FlatMapGroupsWithState General API for per-key user-defined stateful processing More powerful + efficient than DStream's mapWithState/updateStateByKey Since Spark 2.2, for Scala and Java only MapGroupsWithState

- 33. MapGroupsWithState - How to use? 1. Define the data structures - Input event: UserAction - State data: UserStatus - Output event: UserStatus (can be different from state) case class UserAction( userId: String, action: String) case class UserStatus( userId: String, active: Boolean) MapGroupsWithState

- 34. MapGroupsWithState - How to use? 2. Define function to update state of each grouping key using the new data - Input - Grouping key: userId - New data: new user actions - Previous state: previous status of this user case class UserAction( userId: String, action: String) case class UserStatus( userId: String, active: Boolean) def updateState( userId: String, actions: Iterator[UserAction], state: GroupState[UserStatus]):UserStatus = { }

- 35. MapGroupsWithState - How to use? 2. Define function to update state of each grouping key using the new data - Body - Get previous user status - Update user status with actions - Update state with latest user status - Return the status def updateState( userId: String, actions: Iterator[UserAction], state: GroupState[UserStatus]):UserStatus = { val prevStatus = state.getOption.getOrElse { new UserStatus() } actions.foreach { action => prevStatus.updateWith(action) } state.update(prevStatus) return prevStatus }

- 36. MapGroupsWithState - How to use? 3. Use the user-defined function on a grouped Dataset Works with both batch and streaming queries In batch query, the function is called only once per group with no prior state def updateState( userId: String, actions: Iterator[UserAction], state: GroupState[UserStatus]):UserStatus = { /* process actions, update and return status */ } userActions .groupByKey(_.userId) .mapGroupsWithState(updateState)
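The three steps above can be tied together with a Spark-free sketch of the core update logic. The case classes come from the slides; the rule that a `"logout"` action marks the user inactive and any other action marks the user active is an assumption made for illustration, as are the names `UserStatusLogic` and `nextStatus`.

```scala
object UserStatusLogic {
  final case class UserAction(userId: String, action: String)
  final case class UserStatus(userId: String, active: Boolean)

  // Pure core of the update function:
  // previous status (if any) + new actions => latest status.
  def nextStatus(userId: String, prev: Option[UserStatus], actions: Iterator[UserAction]): UserStatus = {
    val start = prev.getOrElse(UserStatus(userId, active = false))
    // Assumed rule: "logout" makes the user inactive, any other action makes them active.
    actions.foldLeft(start) { (status, a) => status.copy(active = a.action != "logout") }
  }

  // Inside the function passed to mapGroupsWithState, this would be wired up roughly as:
  //   val status = nextStatus(userId, state.getOption, actions)
  //   state.update(status)
  //   status
}
```

Keeping the logic as a pure function of the previous state and the new actions makes it straightforward to unit test without starting a streaming query.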

- 37. Timeouts Example: Mark a user as inactive when there are no actions in 1 hour Timeouts: When a group does not get any event for a while, then the function is called for that group with an empty iterator Must specify a global timeout type, and set per-group timeout timestamp/duration Ignored in batch queries userActions.withWatermark("timestamp") .groupByKey(_.userId) .mapGroupsWithState (timeoutConf)(updateState) EventTime Timeout ProcessingTime Timeout NoTimeout (default)

- 38. userActions .withWatermark("timestamp") .groupByKey(_.userId) .mapGroupsWithState ( timeoutConf )(updateState) Event-time Timeout - How to use? 1. Enable EventTimeTimeout in mapGroupsWithState 2. Enable watermarking 3. Update the mapping function - Every time function is called, set the timeout timestamp using the max seen event timestamp + timeout duration - Update state when timeout occurs def updateState(...): UserStatus = { if (!state.hasTimedOut) { // track maxActionTimestamp while // processing actions and updating state state.setTimeoutTimestamp( maxActionTimestamp, "1 hour") } else { // handle timeout userStatus.handleTimeout() state.remove() } // return user status }

- 39. Event-time Timeout - When? Watermark is calculated with max event time across all groups For a specific group, if there is no event till watermark exceeds the timeout timestamp, Then Function is called with an empty iterator, and hasTimedOut = true Else Function is called with new data, and timeout is disabled Needs to explicitly set timeout timestamp every time
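The decision described on this slide can be written down as a small plain-Scala function. It is a sketch of the rule only, with hypothetical names (`TimeoutSketch`, `decide`, `Call`), and it assumes that a group with neither new data nor an expired timeout is simply not invoked in that trigger.

```scala
object TimeoutSketch {
  sealed trait Call
  case object WithNewData extends Call // function called with data, hasTimedOut = false
  case object TimedOut extends Call    // function called with empty iterator, hasTimedOut = true
  case object NotCalled extends Call   // nothing happens for this group in this trigger

  // timeoutTimestamp is the per-group timestamp set by the last invocation, if any.
  def decide(hasNewData: Boolean, timeoutTimestamp: Option[Long], watermark: Long): Call =
    if (hasNewData) WithNewData // new data disables the pending timeout
    else if (timeoutTimestamp.exists(watermark > _)) TimedOut
    else NotCalled
}
```

Because new data disables the pending timeout, the function has to set the timeout timestamp again on every invocation, as the last line of the slide notes.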

- 40. Processing-time Timeout Instead of setting timeout timestamp, function sets timeout duration (in terms of wall-clock-time) to wait before timing out Independent of watermarks Note, query downtimes will cause lots of timeouts after recovery def updateState(...): UserStatus = { if (!state.hasTimedOut) { // handle new data state.setTimeoutDuration("1 hour") } else { // handle timeout } return userStatus } userActions .groupByKey(_.userId) .mapGroupsWithState (ProcessingTimeTimeout)(updateState)

- 41. FlatMapGroupsWithState More general version where the function can return any number of events, possibly none at all Example: instead of returning user status, want to return specific actions that are significant based on the history def updateState( userId: String, actions: Iterator[UserAction], state: GroupState[UserStatus]): Iterator[SpecialUserAction] = { } userActions .groupByKey(_.userId) .flatMapGroupsWithState (outputMode, timeoutConf) (updateState)

- 42. userActions .groupByKey(_.userId) .flatMapGroupsWithState (outputMode, timeoutConf) (updateState) Function Output Mode Function output mode* gives Spark insights into the output from this opaque function Update Mode - Output events are key-value pairs, each output is updating the value of a key in the result table Append Mode - Output events are independent rows that are being appended to the result table Allows Spark SQL planner to correctly compose flatMapGroupsWithState with other operations *Not to be confused with output mode of the query Update Mode Append Mode

- 44. Monitor State Memory Consumption Get current state metrics using the last progress of the query - Total number of rows in state - Total memory consumed (approx.) Get it asynchronously through StreamingQueryListener API val progress = query.lastProgress print(progress.json) { ... "stateOperators" : [ { "numRowsTotal" : 660000, "memoryUsedBytes" : 120571087 ... } ], } new StreamingQueryListener { ... def onQueryProgress( event: QueryProgressEvent) }

- 45. Debug Stateful Operations SQL metrics in the Spark UI (SQL tab, DAG view) expose more operator-specific stats Answer questions like - Is the memory usage skewed? - Is removing rows slow? - Is writing checkpoints slow?

- 46. More Info Structured Streaming Programming Guide http://spark.apache.org/docs/latest/structured-streaming-programming-guide.html Databricks blog posts for more focused discussions https://databricks.com/blog/2016/07/28/structured-streaming-in-apache-spark.html https://databricks.com/blog/2017/01/19/real-time-streaming-etl-structured-streaming-apache-spark-2-1.html https://databricks.com/blog/2017/02/23/working-complex-data-formats-structured-streaming-apache-spark-2-1.html https://databricks.com/blog/2017/04/26/processing-data-in-apache-kafka-with-structured-streaming-in-apache-spark-2-2.html https://databricks.com/blog/2017/05/08/event-time-aggregation-watermarking-apache-sparks-structured-streaming.html https://databricks.com/blog/2017/10/11/benchmarking-structured-streaming-on-databricks-runtime-against-state-of-the-art-streaming-systems.html and more to come, stay tuned!!

