Deep Learning and Watson Studio

- 1. Deep Learning and Watson Studio Sasha Lazarevic, IBM Switzerland https://www.linkedin.com/in/lzrvc/ LZRVC.com

- 2. Two Reasons why we want to speak to you about Deep Learning

- 3. It is used for planet discoveries Source: NASA.gov Traditional techniques like: - Robovetter, - Random Forests, - Probabilistic methods, - PCA. Since December 2017: - CNN

- 4. .. for CERN experiments
  - 2012: Discovery of the Higgs boson. Higgs boson identification involves the use of jet pull features, a class of features used to characterize the superstructure of a particle decay event. The scientists were using Fisher Discriminant Analysis (FDA) for classification, gradient boosting, random forests and many other ML methods.
  - 2014: Kaggle challenge on tau-tau decays of Higgs bosons. The winning solution (out of 1700 teams) used an ensemble of 70 dropout neural networks, each with three hidden layers of 600 neurons: https://github.com/melisgl/higgsml
  - 2016: Distributed Deep Learning: CERN created Dist-Keras and several new DDL optimization algorithms.
  - 2018: “Deep Learning could potentially help in data reconstruction in the upcoming runs of LHC where particles will generate a huge amount of hits in the detector where it would be infeasible to reconstruct the particle tracks using traditional techniques (combination of a Kalman filter and Runge–Kutta methods).”

- 5. .. and for many other amazing achievements

- 6. Deep Learning Scientific Publications Source: ResearchGate and ScienceDirect database For only 6 months

- 7. Our second reason: DLaaS in IBM Watson Studio was launched in March 2018 Read the full story on: https://medium.com/ibm-watson/deep-learning-now-in-ibm-watson-studio-d8ba311e4ff1 https://medium.com/ibm-watson/introducing-ibm-watson-studio-e93638f0bb47

- 8. Source: NVIDIA Deep Learning training Let’s start from the beginning

- 9. Traditional AI algorithms Search Breadth-first, A*, Greedy, Beam Monte-Carlo Tree-search Heuristics Planning PDDL, Planning Graph Multi-agent planning Still extensively used in optimizations, logistics, manufacturing, robotics https://www.youtube.com/watch?time_continue=32&v=vjSohj-Iclc https://www.researchgate.net/publication/282477851_Optimization-based_locomotion_planning_estimation_and_control_design_for_the_atlas_humanoid_robot?_sg=aJI6L_jzBn6V7qHUl8Xdt_kQ2GLcN-huoBfUD_jybf7SiPBTKvoSLfrS77CR-iOmknpIJXlUiA

- 10. Traditional ML algorithms Least Squares Linear Regression Logistic Regression Support Vector Machines (Boosted) Decision Tree Random Forest Naive Bayes Classification PCA, ICA K-means and other clustering algorithms … Still extensively used in Data Science for various applications SVM K-means Clustering Random Forest

- 11. ML vs DL Source: Stanford Deep Learning and NLP training In traditional ML, the data scientist designs the features important for the problem and encodes them by hand; the machine then only performs numerical optimization. Deep Learning learns the representations automatically and directly from data with deep neural networks, on multiple levels (hierarchically): the network transforms the features at one level into more abstract features at a higher level. The model therefore carries much more of the intelligence than in traditional ML algorithms. AI developers can develop models with less knowledge of the data and with no manual feature engineering.

- 12. But DL was actually triggered by GPU and innovations in ML training

- 13. Brain Artificial Neural Network

- 14. Deep Neural Networks Multi-Layer Perceptrons - MLPs Convolutional Neural Networks – CNNs Recurrent Neural Networks – RNNs Memory-augmented (LSTM/ GRU, Attention) RNNs Tree-Recursive NNs Generative Adversarial Networks – GANs Deep Reinforcement Learning – RLs Other, rarely used: Autoencoder, RBM, DBN, Liquid state machines.. We will focus in this presentation on CNNs and Memory-augmented RNNs, and discuss the derived hybrid and complex architectures

- 15. Image is represented as matrix of numbers

- 16. Word Representations as Numerical Vectors
  - Inputs to an RNN are numerical vector representations of words.
  - Earlier, words were represented by one-hot vectors. Example:
    - motel = [0 0 0 0 0 0 0 0 0 0 1 0 0 0 0]
    - hotel = [0 0 0 0 0 0 0 1 0 0 0 0 0 0 0]
  - But motel^T hotel = 0 (the dot product of two different one-hot vectors is always zero), so a search for one word will not return the other.
  - Nowadays, words are expressed numerically in a way that represents their context. These representations are trained with frameworks like:
    - Word2vec (Google), pre-trained on 100B words,
    - GloVe (Stanford), pre-trained on Wikipedia with 6B words
  - Word2vec goes through each word of the whole corpus and learns, for each unique word, a vector that predicts the surrounding words. Vector dimensionality is between 100 and 1000.
  - Much simpler: import the gensim library and load pre-trained word2vec or fastText vectors.
  - Figure: Word2vec representation of the word “good”
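
The one-hot problem above can be checked in a few lines. This is only a sketch: the one-hot vectors are the ones from the slide, while the 3-dimensional dense vectors are made-up numbers for illustration, not real Word2vec or GloVe values.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def cosine(u, v):
    """Cosine similarity: dot product normalized by the vector lengths."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

# One-hot vectors from the slide
motel = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0]
hotel = [0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0]
print(dot(motel, hotel))  # one-hot vectors of different words never overlap

# Hypothetical dense embeddings (illustrative numbers only)
motel_dense = [0.8, 0.1, 0.3]
hotel_dense = [0.7, 0.2, 0.4]
print(cosine(motel_dense, hotel_dense))  # close to 1 for similar contexts
```

With gensim, pre-trained vectors in word2vec format are typically loaded through `KeyedVectors.load_word2vec_format`; the file to load depends on which pre-trained model you download.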

- 17. The CNN training process Source: https://shafeentejani.github.io/2016-12-20/convolutional-neural-nets/
  Training process:
  1. Initialize filters and import the image
  2. Apply convolution + ReLU + pooling
  3. Chain several layers
  4. Flatten the cubes (tensors)
  5. Softmax classifier
  6. Did we get the lion? Calculate the error
  7. Backprop to tune the filters
  8. Do this for all examples
  9. Iterate to minimize the error
  10. Test accuracy on separate data set
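
Steps 5 to 9 of the training process can be sketched without any framework. The toy below is an assumption-laden stand-in: it applies gradient descent directly to three class scores (logits) instead of backpropagating into convolution filters, and the function names, starting scores and learning rate are all illustrative.

```python
import math

def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target_index):
    """Error for one example: low when the true class gets high probability."""
    return -math.log(probs[target_index])

def fit_logits(logits, target_index, lr=0.5, steps=20):
    """Toy replacement for backprop: gradient descent on the logits themselves."""
    logits = list(logits)
    history = []
    for _ in range(steps):
        probs = softmax(logits)
        history.append(cross_entropy(probs, target_index))
        # Gradient of the cross-entropy w.r.t. logit k is p_k - 1[k == target]
        grads = [p - (1.0 if k == target_index else 0.0)
                 for k, p in enumerate(probs)]
        logits = [z - lr * g for z, g in zip(logits, grads)]
    return logits, history

# Classes: 0 = cat, 1 = dog, 2 = lion; the true label is "lion"
final_logits, errors = fit_logits([2.0, 1.0, 0.1], target_index=2)
print(errors[0], errors[-1])  # the error shrinks as we iterate
```

In a real CNN the same gradient is propagated further back through the pooling and convolution layers to update the filters.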

- 18. The process of convolution and pooling Image source: http://deeplearning.stanford.edu/wiki/index.php/Feature_extraction_using_convolution http://cs231n.github.io/convolutional-networks/
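
A minimal pure-Python sketch of convolution, ReLU and max pooling follows. The 5x5 binary image and 3x3 filter are small illustrative inputs; the helper names are not from any library. As in most deep learning frameworks, the filter is slid over the image without being flipped (strictly a cross-correlation).

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Replace negative activations with zero."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    rows = range(0, len(feature_map) - size + 1, size)
    cols = range(0, len(feature_map[0]) - size + 1, size)
    return [
        [max(feature_map[i + a][j + b]
             for a in range(size) for b in range(size))
         for j in cols]
        for i in rows
    ]

image = [
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
]
kernel = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]

features = relu(conv2d(image, kernel))
print(features)            # [[4, 3, 4], [2, 4, 3], [2, 3, 4]]
print(max_pool(features))  # [[4]]
```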

- 19. Convolutional Neural Networks More complex features are captured in deeper layers Sources: NVIDIA Deep Learning training, H. Lee, R. Grosse, R. Ranganath, and A. Y. Ng. “Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations.”

- 20. CNNs Use Cases – Cancer Diagnostic Example of an architecture that takes as input 4 different scales of input images. Source: DL for Magnification Independent Breast Cancer Image Classification http://www.ee.oulu.fi/~nyalcinb/images/pub/icpr2016/presentation.pdf

- 21. CNNs Examples
  - Object Detection. Example: YOLOv2 https://www.youtube.com/watch?v=VOC3huqHrss
    - Uses one CNN for classification and localization
    - Splits the input image into a grid of cells
    - Output is a tensor with predictions of box positions and object classes
  - Neural Style Transfer. Example: https://deepdreamgenerator.com/
    - New image = Content image + Style image
    - Generating the new image means maximizing similarity (content image, new image) and maximizing similarity (style matrix, new image)
    - Style matrix is the correlation across channels of the style image
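
The style matrix mentioned above is usually computed as a Gram matrix of the feature maps. The sketch below assumes each channel has already been flattened to a plain list of activations; the function names and the tiny inputs are illustrative, and maximizing style similarity is expressed as minimizing a squared difference.

```python
def gram_matrix(features):
    """features: list of C channels, each a flat list of activations.
    Entry (i, j) is the correlation (dot product) between channels i and j."""
    return [
        [sum(a * b for a, b in zip(ci, cj)) for cj in features]
        for ci in features
    ]

def style_loss(style_features, new_features):
    """Mean squared difference between the two style matrices.
    A lower loss means a higher style similarity."""
    gs = gram_matrix(style_features)
    gn = gram_matrix(new_features)
    c = len(gs)
    return sum((gs[i][j] - gn[i][j]) ** 2
               for i in range(c) for j in range(c)) / (c * c)

style = [[1, 2], [3, 4]]       # 2 channels, 2 positions each
candidate = [[1, 0], [0, 1]]   # features of the image being generated

print(gram_matrix(style))              # [[5, 11], [11, 25]]
print(style_loss(style, candidate))    # 208.5
print(style_loss(style, style))        # 0.0
```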

- 22. Memory-Augmented Recurrent Neural Networks
  - Basic RNN model:
    - Can model unbounded text (sentences or entire documents)
    - Inputs (time steps) x_{t-1}, x_t, x_{t+1} are words (vectors)
    - U, V and W are trained matrices shared across all time steps (the layers of the unrolled network)
    - They reflect the number of features that we want to extract
    - In most cases we care only about the last output o
  - The RNN is augmented with mechanisms like LSTM, GRU or Attention to memorize certain parts of the text
  - LSTM can add or remove information to the cell using gates:
    - Input gate (i) determines how much we care about the current vector at all
    - Forget gate (f) says how much of the past we keep. If it is 0, we forget the past
    - Output gate (o) says how much of the cell is exposed vs kept for a longer time
  - GRU is simpler, but has similar performance:
    - Reset gate (r): if it is 0, the unit ignores the past and stores only the new word information
    - Update gate (z): if it is 1, the state of the cell is copied from the previous time step
  - Sources: “Deep Learning”, article in Nature by Yann LeCun, 2015; research paper arXiv:1412.3555, 2014
  - Figures: Simple RNN; LSTM and GRU
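
The effect of the gates can be seen with scalar toy cells. In this sketch the gate values are passed in by hand, whereas a real network computes them with sigmoid layers; the function names are illustrative. It follows the standard LSTM update, in which a forget gate of 0 erases the previous cell state, and the GRU convention used on the slide, in which z = 1 copies the previous state (some papers swap the roles of z and 1 - z).

```python
import math

def lstm_cell_update(c_prev, candidate, i, f, o):
    """One LSTM update with explicit gate values in [0, 1]."""
    c = f * c_prev + i * candidate   # forget part of the past, add new info
    h = o * math.tanh(c)             # expose part of the cell as output
    return h, c

def gru_candidate(x, h_prev, r, w=1.0, u=1.0):
    """GRU candidate state; the reset gate r scales the influence of the past."""
    return math.tanh(w * x + r * u * h_prev)

def gru_update(h_prev, candidate, z):
    """GRU state update; z = 1 keeps the previous state unchanged."""
    return z * h_prev + (1.0 - z) * candidate

# f = 1, i = 0: the cell keeps its memory and ignores the new input
print(lstm_cell_update(0.7, -0.2, i=0.0, f=1.0, o=1.0)[1])
# f = 0, i = 1: the past is forgotten and replaced by the new input
print(lstm_cell_update(0.7, -0.2, i=1.0, f=0.0, o=1.0)[1])
# z = 1: the GRU copies the previous state
print(gru_update(0.5, 0.9, z=1.0))
```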

- 23. RNNs Use Cases - Neural Machine Translation Source: University of Edinburgh
  - Statistical Machine Translation:
    - Learn the probabilistic model from texts
    - Use Bayes classifier
    - Use parallel training data from both languages
    - But also need to learn word alignments
  - Neural Machine Translation:
    - Novel techniques: Multilingual machine translation (One-to-many, Many-to-one, Many-to-many)
    - Try IBM Watson NMT on: https://console.bluemix.net/catalog/services/language-translator with flag -H "X-Watson-Technology-Preview:2017-07-01"

- 24. RNNs Use Cases - Sentiment Analysis Use of character-based multiplicative LSTM-RNN for sentiment analysis – “Sentiment Neuron” Source: https://arxiv.org/pdf/1704.01444.pdf

- 25. Hybrid and complex models - NER Source: Research paper in 2016 “Named Entity Recognition with Bidirectional LSTM-CNNs” Example of a hybrid model for Named Entity Recognition:
  - Input is tokenized text and word embeddings
  - CNN extracts a feature vector from characters (useful for capturing prefixes and suffixes)
  - Features of each word are fed to a Bi-LSTM-RNN
  - Trained and tested on the CoNLL-2003 dataset
  - The best performance was obtained with 50d GloVe word embeddings + lexicon
  - Lexicon is based on DBpedia and is looked up during tag scoring

- 26. Hybrid and complex models – music generation Music representation with one-hot encodings Source: Music Generation by Deep Learning, https://www.arxiv-vanity.com/papers/1712.04371/
  - Example of a hybrid model for Music Generation:
    - Note RNN is trained on a dataset of melodies encoded as one-hot vectors
    - Reward RNN is used to generate the new music
    - Q Network (CNN-type) will learn to choose the next action (musical note)
    - Target Q Network (CNN-type) will learn to generate the target Q-value (gain) for that note using the Reinforcement Learning mechanism of reward
    - Q and Target Q are decoupled to avoid gain overestimation
    - Q-value (gain) function = similarity (new note, learned note) + similarity (new note, user-defined rules)
  - An amazing implementation of a similar architecture: IBM Watson Beat
    - Watch and listen: https://www.youtube.com/watch?v=Z5ymVzTUU6Y
    - And compose the AI-generated music yourself: https://github.com/cognitive-catalyst/watson-beat
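
One common way to decouple the Q and Target Q networks is the Double Q-learning target, sketched below. This is an assumption about the mechanism rather than the exact method of the cited work: the online network picks the next note, the target network scores it, and the discount factor `gamma` and all numbers are illustrative.

```python
def double_q_target(reward, next_q_online, next_q_target, gamma=0.9):
    """Target value for one step.
    next_q_online: Q-values of each next note from the online Q network.
    next_q_target: Q-values of the same notes from the Target Q network."""
    # The online network chooses the action (the next musical note) ...
    best_note = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    # ... and the target network evaluates it, which limits overestimation
    return reward + gamma * next_q_target[best_note]

online_scores = [0.2, 0.9, 0.4]   # note 1 looks best to the online network
target_scores = [0.5, 0.3, 0.8]   # the target network rates note 1 lower
print(double_q_target(1.0, online_scores, target_scores, gamma=0.5))
```

Taking the maximum of a single network's own estimates would instead give 1.0 + 0.5 * 0.9 with the online scores, which is the optimistic value that decoupling is meant to avoid.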

- 27. Hybrid and complex models – Blockchain AI Source: Matrix.io ; https://www.matrix.io/html/MATRIXTechnicalWhitePaper.pdf
  - Two-phase approach for security hardening of Smart Contracts
    1. Static verification based on CNN-extracted features
       - These features will help identify syntax or structural patterns
       - The system is trained on manually labeled open-source smart contracts
    2. Use of GANs for the dynamic security testing and enhancement:
       - One RNN will update the smart contract (Generator network)
       - One RNN will learn to generate hacks (Discriminator network)
       - The updated smart contract will be deployed in a sandbox where it will be exposed to the generated hacks
       - The result will be taken by the Generator to improve the smart contract
       - Repeat this training cycle until a stable state is achieved
  - CNN + RNN for the automatic generation of smart contract code
    1. CNN for the feature extraction
       - Uses natural language text as input (legal agreements, SOW, LOI etc)
       - CNN needs to be trained on a set of similar contracts
    2. These extracted features are fed to an RNN for the code generation
       - Different code libraries will be deployed depending on the identified design pattern of smart contracts

- 28. Hybrid and complex models – Lip Reading Source: Research paper Lip Reading Sentences in the Wild, 2017 (https://arxiv.org/pdf/1611.05358.pdf) “Watch” (CNN+LSTM RNN), “Listen” (LSTM-RNN), “Attend” and “Spell” (LSTM-RNN) Demo: https://www.youtube.com/watch?v=5aogzAUPilE

- 29. State of the Art in DL In many cases, CNNs can compete with RNNs for downstream NLP tasks, but the performance depends on the semantics of the problem. Some successful examples : - Dynamic convolutional neural network (DCNN) for semantic modeling of sentences - Sarcasm detection in Twitter texts based on a CNN network - Use CNNs to calculate similarity between a question and entries in a knowledge base (KB) to determine what supporting fact in the KB to look for when answering a question Sentiment Analysis is still best done with Tree-LSTM Recursive NNs or GRU-RNNs Use of Reinforcement Learning in combination with Deep Learning (example: train the chatbots) Use of GANs in NLP tasks like text generation, sentiment analysis and NMT. The Generator network is RNN, but the Discriminator network can be either CNN or RNN.

- 30. How are these things implemented ?
  - Programming language: Python. Others: C++, R, MATLAB
  - Programming frameworks: TensorFlow, Keras. Others: Torch, PyTorch, Caffe, MXNet
  - Big data: Spark, Dist-Keras
  - Transfer learning and model reuse
  - GitHub source code
  - GPUs

- 31. Transfer Learning accelerates the development GitHub code and pre-trained models Research Papers Repurpose and retrain Cut off the last layer(s)

- 32. Business Use Cases
  - Banking and Insurance:
    - Natural language generation: personalized communication with the customers
    - Sentiment analysis
    - Automatic extraction of information from contracts and legal documents
    - Creditworthiness of online identity
    - Fraud detection
    - DL can take into account thousands of data points, not 20-30 as with traditional ML
    - Image recognition and automatic processing of insurance claims
    - Automated investment. Example: http://www.alpaca.ai/alpacaalgo/ (create your own investment algorithm)
    - Platform for fintech companies and innovations
    - Predictive system for financial markets volatility (DL provides better accuracy)
    - Pre-processing of unstructured big data sets
    - Banks want to increase accuracy even by a small margin, because this usually means a lot of money
    - Some consultants take the bank's data together with the predictive model and return in a few weeks with improved predictions based on DL
  - Blockchain:
    - Smart Contract generation based on text written in natural human language
    - Vulnerability test of smart contracts
    - Relational analysis of smart contracts to identify loopholes and malicious intents
  - IT services (take a request to the service desk as input sequence and produce the action using an LSTM-RNN)
  - Legal offices (information retrieval, text generation)
  - Augmentation of chatbots. Example: https://towardsdatascience.com/personality-for-your-chatbot-with-recurrent-neural-networks-2038f7f34636

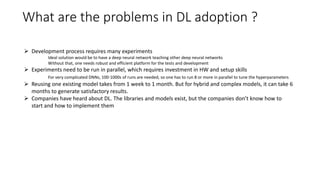

- 33. What are the problems in DL adoption ?
  - Development process requires many experiments
    - The ideal solution would be to have a deep neural network teaching other deep neural networks
    - Without that, one needs a robust and efficient platform for the tests and development
  - Experiments need to be run in parallel, which requires investment in hardware and setup skills
    - For very complicated DNNs, hundreds to thousands of runs are needed, so one has to run 8 or more in parallel to tune the hyperparameters
  - Reusing one existing model takes from 1 week to 1 month. But for hybrid and complex models, it can take 6 months to generate satisfactory results.
  - Companies have heard about DL. The libraries and models exist, but the companies don’t know how to start and how to implement them

- 34. The Solution Watson Studio as DLaaS platform + DL consulting + Watson APIs + IBM Cloud private + IBM DSX Local + IBM COS Local + IBM FfDL + IBM MAX + Apache Spark + IBM Spectrum Scale + IBM PowerAI + …… For your exponential steps into the future

- 35. Demo Please follow the tutorial that I published on: https://medium.com/ibm-watson/exploring-deep-learning-and-neural-network-modeler-with-watson-studio-ead35d21a438

- 36. For more insights into DL, contact me on [email protected] Sasha Lazarevic, IBM Switzerland https://www.linkedin.com/in/lzrvc/ LZRVC.com
