Deep Learning with Databricks

- 2. DEEP LEARNING WITH DATABRICKS: A CONVOLUTIONAL NEURAL NETWORK IMPLEMENTATION FOR CAR CLASSIFICATION. Dr. Evan Eames, December 5th, 2019

- 3. © Data Insights, 2019. December 5th, 2019. INTRO STUFF ABOUT ME ● My studies were in physics simulation ● In the last year of my studies, I became increasingly interested in ML ● Graduated last year (woohoo!) ● Started working in ML at Data Insights ● Still fairly new to the world of ML

- 4. Credit: SMBC-comics

- 5. GAME PLAN ● CNN IN INDUSTRY ● A SPECIFIC DATABRICKS USE CASE (CAR CLASSIFICATION) ● A NEW NEURAL NETWORK ARCHITECTURE

- 7. The Paradigm... Academia: How does this make a contribution to humanity’s understanding of the Universe? Industry: How does this make money?

- 8. Some Convolutional Neural Network (CNN) Use Cases: Any time you need to do anything with images or videos, you can use a CNN.

- 9. Fault Detection ● For machine parts, circuit boards, wiring, etc. ● One or more images are taken of each object before it is cleared ● A CNN can recognize if something is different, and flag it for a human to check ● This avoids having a human manually check hundreds or thousands of objects ● Bonus: CNNs can also sort ‘defect types’ and can be trained without labelled data.

- 10. Anomaly Detection ● Insurance companies can have clients take a few pictures around a rental car before and after renting. ● A CNN can then identify and localize new damage to the car (scratches, dents, etc.). ● This saves the time and paperwork of manual inspections.

- 11. Anomaly Detection ● The same idea has been applied to infrastructure damage.

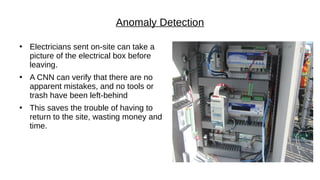

- 12. Anomaly Detection ● Electricians sent on-site can take a picture of the electrical box before leaving. ● A CNN can verify that there are no apparent mistakes, and that no tools or trash have been left behind ● This saves the trouble of having to return to the site, wasting money and time.

- 13. Object Classification ● Retail stores can use object detection to quickly verify inventory ● Efficient verification of incoming products.

- 14. YOLO Algorithm = Real Time Object Detection + Localization

- 15. Real-Time Object Detection and Localization ● Video feed of a worksite ● Vehicles are detected and localized ● They can then be automatically directed and managed ● Improved efficiency, reduced chance of accidents

- 16. Real-Time Object Detection and Localization Source: Nanonets Drone AI

- 17. Real-Time Object Detection and Localization ● Customer engagement ● How many people walking past a display window actually look at it?

- 18. Face Recognition ● Map, count, or identify faces ● Security purposes ● Emotion detection (is a customer happy or sad?)

- 19. Face Recognition

- 20. Car Recognition ● In April, I was approached by a major car company ● When car photos are shared on social media, the company would like to identify their cars and repost them to their company pages ● EU law states that all car models must be named, for emission purposes ● Right now, they must hire somebody to do this manually

- 21. THE IMPLEMENTATION (OVERCOMING ONE OBSTACLE AT A TIME)

- 22. August 30th, 2019

- 23. One-Minute Crash Course ● Put in data (1 pixel = 1 input node) ● Check output (1 output node = 1 car type) ● If the output is incorrect, adjust the strength of the wires (weights) between the nodes. ● If that made the output better, keep going in that direction. ● 1 epoch = 1 pass through all training data (8144 images) ● Lots of credit to this repository: https://github.com/foamliu/Car-Recognition
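To make the crash-course steps concrete, here is a minimal pure-Python sketch of gradient descent with a single "wire" (weight) between one input node and one output node. The toy data, the learning rate, and the function name `train` are illustrative assumptions, not taken from the talk; a real CNN applies the same idea to millions of weights at once, with the framework (e.g. Keras) computing the gradients.

```python
def train(data, lr=0.1, epochs=50):
    """Fit output = w * x by gradient descent on the squared error."""
    w = 0.0  # arbitrary starting strength for the single wire
    for _ in range(epochs):            # 1 epoch = 1 pass over all training data
        for x, target in data:
            output = w * x             # check the output
            error = output - target    # how wrong was it?
            grad = 2 * error * x       # d(error**2)/dw: which direction makes it worse
            w -= lr * grad             # step the other way, i.e. "keep going" toward better
    return w

# Hypothetical training data generated by target = 3 * x
data = [(0.5, 1.5), (1.0, 3.0), (-1.0, -3.0)]
print(round(train(data), 3))
```

After 50 epochs the weight has settled at the value that generated the data (3.0); the learning rate controls how large each adjustment is.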

- 24. FIRST ATTEMPT: USING A RESNET-50 ON KERAS, RUNNING ON MY LAPTOP ● On the training data, the accuracy is over 90%! Wow! ● ...but the test accuracy is only 4% ● Yikes... ● This is very serious overfitting (the neural network is learning the training data too well).

- 25. SECOND ATTEMPT: ADDING DATA AUGMENTATION ● Now we get up to 20% test accuracy. ● Not bad! 1/5 of cars are now being correctly classified. ● But we still have a long way to go...
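The talk does not say which augmentations were used, so the following is only a sketch of two common ones, random horizontal flips and random crops, on an image stored as a list of pixel rows (a simplifying assumption; in practice Keras provides built-in preprocessing utilities for this). The function names are hypothetical.

```python
import random

def horizontal_flip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]

def random_crop(image, crop_h, crop_w, rng):
    """Cut a random crop_h x crop_w window out of the image."""
    h, w = len(image), len(image[0])
    top = rng.randint(0, h - crop_h)    # randint is inclusive on both ends
    left = rng.randint(0, w - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

def augment(image, crop_h, crop_w, rng):
    """Return a slightly different version of the image on each call."""
    if rng.random() < 0.5:              # flip half of the time
        image = horizontal_flip(image)
    return random_crop(image, crop_h, crop_w, rng)

rng = random.Random(0)
image = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4 x 4 "photo"
print(augment(image, 3, 3, rng))
```

Each call yields a different 3 x 3 view of the same picture, so the network rarely sees exactly the same pixels twice, which makes memorizing the training set harder.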

- 26. THIRD ATTEMPT: MAKING THE NETWORK DEEPER (150 LAYERS) ● Deeper networks can learn more complicated patterns. ● This brings us up to 40% test accuracy. ● Now over 1/3 of cars are successfully categorized. ● Not bad... but overfitting remains a big problem...

- 27. FOURTH ATTEMPT: ADDING CROSS-VALIDATION ● Now we see... ● Wait... what???

- 28. FOURTH ATTEMPT: ADDING CROSS-VALIDATION ● The network is still trying to improve. ● However, the features are being input as integers (each pixel is an RGB colour value between 0 and 255). ● Gradient descent requires high precision to make tiny adjustments to the network. ● Integer precision is insufficient. ● But turning every feature into a float means 16,000 × 224 × 224 × 3 = 2,408,448,000 float entries!
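The entry count on the slide checks out, and a quick calculation shows why it matters. Assuming 1 byte per value for the raw 0-255 pixels (uint8) and 4 bytes per value for float32, the typical training precision, the dataset grows from about 2.4 GB to about 9.6 GB when converted up front. The constant names are illustrative.

```python
# Back-of-the-envelope memory estimate for holding the whole dataset in RAM.
NUM_IMAGES = 16_000                 # approximate dataset size used on the slide
HEIGHT, WIDTH, CHANNELS = 224, 224, 3

def gigabytes(n_entries, bytes_per_entry):
    """Convert a number of array entries to gigabytes (1 GB = 1e9 bytes)."""
    return n_entries * bytes_per_entry / 1e9

entries = NUM_IMAGES * HEIGHT * WIDTH * CHANNELS
print(entries)                                      # 2408448000
print(f"uint8:   {gigabytes(entries, 1):.1f} GB")   # uint8:   2.4 GB
print(f"float32: {gigabytes(entries, 4):.1f} GB")   # float32: 9.6 GB
print(f"float64: {gigabytes(entries, 8):.1f} GB")   # float64: 19.3 GB
```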

- 29. FOURTH ATTEMPT: ADDING CROSS-VALIDATION AND FEATURE NORMALIZATION ● Inside the network, we can add a step that normalizes the features of each input immediately before it goes into the neural network. ● This saves memory, as it avoids converting all the data to floats at the start.
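The idea of normalizing just in time can be sketched as a generator that keeps the dataset as integers and converts only the current batch to floats. Dividing by 255 to map pixels into [0, 1] is one common choice and an assumption here (the slide does not say which normalization was used; per-channel mean/std scaling is another). Images are simplified to flat lists of integers, and `normalized_batches` is a hypothetical name.

```python
def normalized_batches(images, batch_size):
    """Yield batches with pixels scaled from integer 0-255 to float 0.0-1.0.

    Only the batch currently being yielded is converted to floats, so the
    full dataset can stay in its compact integer form in memory.
    """
    for start in range(0, len(images), batch_size):
        batch = images[start:start + batch_size]
        yield [[pixel / 255.0 for pixel in image] for image in batch]

dataset = [[0, 255], [51, 102], [255, 0]]   # three tiny two-pixel "images"
for batch in normalized_batches(dataset, batch_size=2):
    print(batch)
```

The peak float memory is now one batch rather than the whole dataset, which is the saving described on the slide.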

- 30. FOURTH ATTEMPT: ADDING CROSS-VALIDATION AND FEATURE NORMALIZATION ● Great! Now the network is training again. ● And we can use the cross-validation accuracy to monitor the overfitting at each epoch. ● However... we are still overfitting :(
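Monitoring validation accuracy per epoch can be turned into a simple rule: stop once it has not improved for a few epochs, and watch the gap between training and validation accuracy as a measure of overfitting. The sketch below uses made-up accuracy values (not results from the talk) and hypothetical function names; Keras ships an `EarlyStopping` callback built on the same idea.

```python
def early_stopping_epoch(val_acc, patience=3):
    """Return the 0-based epoch at which training would stop, or None.

    Training stops once validation accuracy has failed to beat its best
    value for `patience` consecutive epochs.
    """
    best = float("-inf")
    epochs_without_improvement = 0
    for epoch, acc in enumerate(val_acc):
        if acc > best:
            best = acc
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None

def generalization_gaps(train_acc, val_acc):
    """Per-epoch training accuracy minus validation accuracy."""
    return [t - v for t, v in zip(train_acc, val_acc)]

# Hypothetical curves: training keeps improving while validation stalls.
train_acc = [0.30, 0.50, 0.70, 0.85, 0.92, 0.95, 0.97]
val_acc = [0.25, 0.38, 0.45, 0.47, 0.46, 0.46, 0.45]
print(early_stopping_epoch(val_acc))
print([round(g, 2) for g in generalization_gaps(train_acc, val_acc)])
```

A widening gap while validation accuracy flattens is the overfitting signature the slide describes.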

- 31. FIFTH ATTEMPT: APPLYING TRANSFER LEARNING ● Start with weights previously trained on another computer vision task. ● Specifically, the 1000-class ImageNet dataset. ● We pass 50% accuracy! But overfitting is still not fixed :( :( :(

- 32. At this point, the only option I could see was ‘hyperparameter tuning’. Basically, this means fiddling with the hyperparameters until something works. But at ~1.5 h/epoch, this isn’t really feasible...
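One simple way to organize the "fiddling" is random search: draw a configuration, train, score it on validation data, and keep the best. The talk does not say which hyperparameters or which search strategy were used, so the search space below is hypothetical, and `fake_validation_accuracy` is a cheap stand-in for what is really a full training run. That stand-in is exactly the part that costs ~1.5 h per epoch on a laptop.

```python
import random

# Hypothetical search space: not the hyperparameters tuned in the talk.
SEARCH_SPACE = {
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3],
    "batch_size": [16, 32, 64],
    "dropout": [0.0, 0.25, 0.5],
}

def random_search(evaluate, space, n_trials, seed=0):
    """Evaluate n_trials randomly drawn configurations and keep the best one."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        # Draw one value per hyperparameter, independently and uniformly.
        config = {name: rng.choice(values) for name, values in space.items()}
        # In practice: train the network with `config`, return validation accuracy.
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score

def fake_validation_accuracy(config):
    """Cheap, purely illustrative stand-in for a real training run."""
    return (0.8
            - abs(config["learning_rate"] - 1e-3) * 50
            - abs(config["dropout"] - 0.25) * 0.2)

best, score = random_search(fake_validation_accuracy, SEARCH_SPACE, n_trials=20)
print(best, round(score, 3))
```

Because every trial is an independent training run, trials can also be spread across the workers of a cluster.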

- 34. On the most powerful cluster, the network trains at 2 min/epoch!!!
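Putting the two timings from the slides side by side (roughly 1.5 h/epoch on the laptop, 2 min/epoch on the cluster) shows why tuning suddenly becomes practical. Treating one tuning trial as 20 epochs is an illustrative assumption, borrowed from the epoch count on the next slide.

```python
LAPTOP_MIN_PER_EPOCH = 90     # ~1.5 h/epoch, from the slides
CLUSTER_MIN_PER_EPOCH = 2     # ~2 min/epoch on the largest cluster, from the slides
EPOCHS_PER_TRIAL = 20         # assumption: one tuning trial = 20 epochs

def trial_hours(min_per_epoch, epochs=EPOCHS_PER_TRIAL):
    """Wall-clock hours for one training run of `epochs` epochs."""
    return min_per_epoch * epochs / 60

speedup = LAPTOP_MIN_PER_EPOCH / CLUSTER_MIN_PER_EPOCH
print(f"speedup: {speedup:.0f}x")                                         # speedup: 45x
print(f"laptop:  {trial_hours(LAPTOP_MIN_PER_EPOCH):.1f} h per trial")    # laptop:  30.0 h per trial
print(f"cluster: {trial_hours(CLUSTER_MIN_PER_EPOCH):.2f} h per trial")   # cluster: 0.67 h per trial
```

At about 40 minutes per trial, dozens of configurations fit into a couple of days instead of several months.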

- 35. SEVENTH ATTEMPT: HYPERPARAMETER TUNING ON DATABRICKS ● In just 20 epochs, we already cross 80% accuracy! ● Ultimately, I was able to reach 84.4% accuracy. ● Published results from large groups on this dataset currently range from 88% to 92%. ● There is still overfitting, but at high accuracies on complicated datasets this becomes unavoidable.

- 36. TO CONCLUDE (SOME WISDOM FROM THIS JOURNEY)

- 37. ● There is no ‘miracle cure’ that will make a neural network stop overfitting. ● Best practice involves employing every trick you know. ● And, of course, lots of hyperparameter tuning. ● For this last point, running on clusters is indispensable!

- 39. Let’s take it for a spin, shall we?

- 40. SOME NEAT IDEAS: OBSTACLES WITH CURRENT CNNS AND HOW TO OVERCOME THEM (WARNING: I AM IN WAY OVER MY HEAD HERE...)

- 41. Most Neural Networks are Pre-Structured ● # of layers is predefined ● # of output classes is predefined ● A new car model (generally) means retraining ● Form is static during training (only the weights change)

- 44. Human Brains have been Designed by Evolution to Build Order from Chaos (not actually my kid, I don’t have one)

- 45. Human Brains are Designed to Build Order from Chaos. Some neural networks have been developed to imitate the behaviour of brains: – Residual Network (ResNet) – Self-Organizing Map (SOM) – Deep Belief Network (DBN) – Sanger’s Network – Google’s MorphNet – Many variants of unsupervised learning. However, as far as I know (which is not very far), none has all of the following properties: – Truly random at the start – No need for predefined classes – A concept of ‘memory’ (recognizing previously seen input) – The ability to ‘realize’ that a learned class can be further divided into multiple ‘sub-classes’

- 46. Human Brains are Designed to Build Order from Chaos

- 47. HEBBIAN NEURAL NETWORK: ‘NEURONS THAT FIRE TOGETHER WIRE TOGETHER’ ● Hebbian theory (1949) ● Refined into the Generalized Hebbian Algorithm (1989) ● Set up a random group of neurons and axons (weights) ● Input signals (activate neurons) ● Apply an altered GHA (with activation potentials), where w_ij = weight between neurons i and j, x_i = activation of neuron i, η = learning rate (may be dependent on many things...)
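The update equation on this slide did not survive extraction, and the author's altered GHA with activation potentials is not described in enough detail to reproduce. As a point of reference only, here is the textbook plain Hebbian rule written with the slide's symbols, Δw_ij = η · x_i · x_j: connections between neurons that are active at the same time get stronger. The function name is hypothetical. Note that the plain rule lets weights grow without bound; the GHA (Sanger's rule) adds a subtractive term that keeps them bounded.

```python
def hebbian_step(weights, activations, lr):
    """Apply one plain Hebbian update: dw_ij = lr * x_i * x_j.

    weights[i][j] is the connection strength between neurons i and j, and
    activations[i] is the activation x_i of neuron i. Self-connections
    (i == j) are left unchanged.
    """
    n = len(activations)
    return [
        [
            weights[i][j] + (lr * activations[i] * activations[j] if i != j else 0.0)
            for j in range(n)
        ]
        for i in range(n)
    ]

# Neurons 0 and 1 fire together; neuron 2 is silent.
w = [[0.0] * 3 for _ in range(3)]
w = hebbian_step(w, [1.0, 1.0, 0.0], lr=0.5)
print(w)
```

Only the 0-1 connection (in both directions) is strengthened. Starting from random weights, as on the slide, works the same way; zeros are used here just to make the change easy to see.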

- 48. [Figure: inputs presented in sequence (Input 1, Input 2, Input 1, Input 2, Input 3) at time steps t = 0 to t = 4]

- 49. HEBBIAN NEURAL NETWORK: THE NETWORK CAN ‘LEARN’ MNIST WITHOUT ANY CLASSES

- 50. HEBBIAN NEURAL NETWORK: THE NETWORK CAN ‘LEARN’ MNIST WITHOUT ANY CLASSES [Figure: network state at t = 1, 50, 100, 200, 500, and 1000]

- 51. HEBBIAN NEURAL NETWORK: THE NETWORK ALSO EXHIBITS SYMPTOMS OF ‘UNCERTAINTY’

- 52. CLOSING THOUGHTS ● NEURAL NETWORKS HAVE COME AMAZINGLY FAR ● HOWEVER, ALTHOUGH THEY CAN OUTPERFORM HUMANS, THEY STILL FAIL TO ‘OUT-LEARN’ HUMANS (THEY NEED TO BE GIVEN A VERY SPECIFIC SETUP TO LEARN A TASK) ● NEW ARCHITECTURES SHOULD LOOK TO THE BRAIN FOR INSPIRATION

- 53. HUGE SHOUT-OUT TO ALICIA AND HENNING ● THE REASON WE ARE HERE RIGHT NOW ● ALSO THE REASON THERE IS PIZZA

- 54. Data Insights GmbH | Tumblingerstr. 12 | 80337 München | Germany | +49 (0)89 24217444 | [email protected] Thank you for your attention! github.com/EvanEames Dr. Evan Eames